SAP HANA on Hyperconverged Infrastructure (HCI) Solutions Powered by VMware vSAN

Abstract

This document provides best practices and architecture guidelines for designing and building an SAP HANA environment on Hyperconverged Infrastructure (HCI) solutions that run VMware vSphere® and VMware vSAN™ on Intel® Xeon® Scalable processor systems of the Skylake, Cascade Lake, and Cooper Lake generations.

The document provides information any hardware vendor can use as a starting point to build their own unique SAP HANA HCI solutions.

The vendor is responsible for defining and selecting the best vSAN-ready hardware components and the VMware vSphere and vSAN configuration for its unique solution. The HCI solution vendor is also responsible for certifying its solution according to the SAP HANA Hardware Certification Hyperconverged Infrastructure certification scenario “HANA-HyCI 1.0 v1.2”. For details and requirements, contact the SAP Integration and Certification Center (ICC).

This paper also includes information about the support processes and requirements of SAP HANA on vSAN.

Version 2.3 of this document adds support for 8-socket wide VM configurations and for vSphere and vSAN 7.0 U3c.

Executive Summary

Overview

For more than 10 years, VMware has successfully virtualized SAP applications on x86 server hardware. It is a logical next step to virtualize and use software to define the storage for these applications and their data. SAP has provided production support for their applications on VMware vSphere for the last 10 years, and for HANA since 2014.

SAP HANA based applications require dynamic, reliable, and high-performing server systems coupled with a predictable and reliable storage subsystem. The servers and storage must deliver the needed storage throughput and IOPS capacity at the right protection level and costs for all SAP HANA VMs in the environment.

Storage solutions such as large Fibre Channel SANs or standalone SAP HANA appliance configurations are not able to react quickly to today’s changing and dynamic environments. Their static configurations have difficulty keeping pace with modern, resource-demanding applications.

VMware vSAN, a software-defined storage solution, allows enterprises to build and operate highly flexible and application-driven storage configurations that meet SAP HANA application requirements. Detailed SAP support information for VMware vSAN can be found in SAP Note 2718982 - SAP HANA on VMware vSphere and vSAN. A valid SAP s-user is required to view the note.

Audience

This paper is intended for SAP and VMware hardware partners who want to build and offer SAP HANA certified HCI solutions based on VMware technologies.

Solution Overview

SAP HANA on vSAN

SAP HANA is an in-memory data platform that converges database and application platforms, which eliminates data redundancy and improves performance with lower latency. SAP HANA architecture enables converged, online transaction processing (OLTP) and online analytical processing (OLAP) within a single in-memory, column-based data store that adheres to the Atomicity, Consistency, Isolation, Durability (ACID) compliance model.

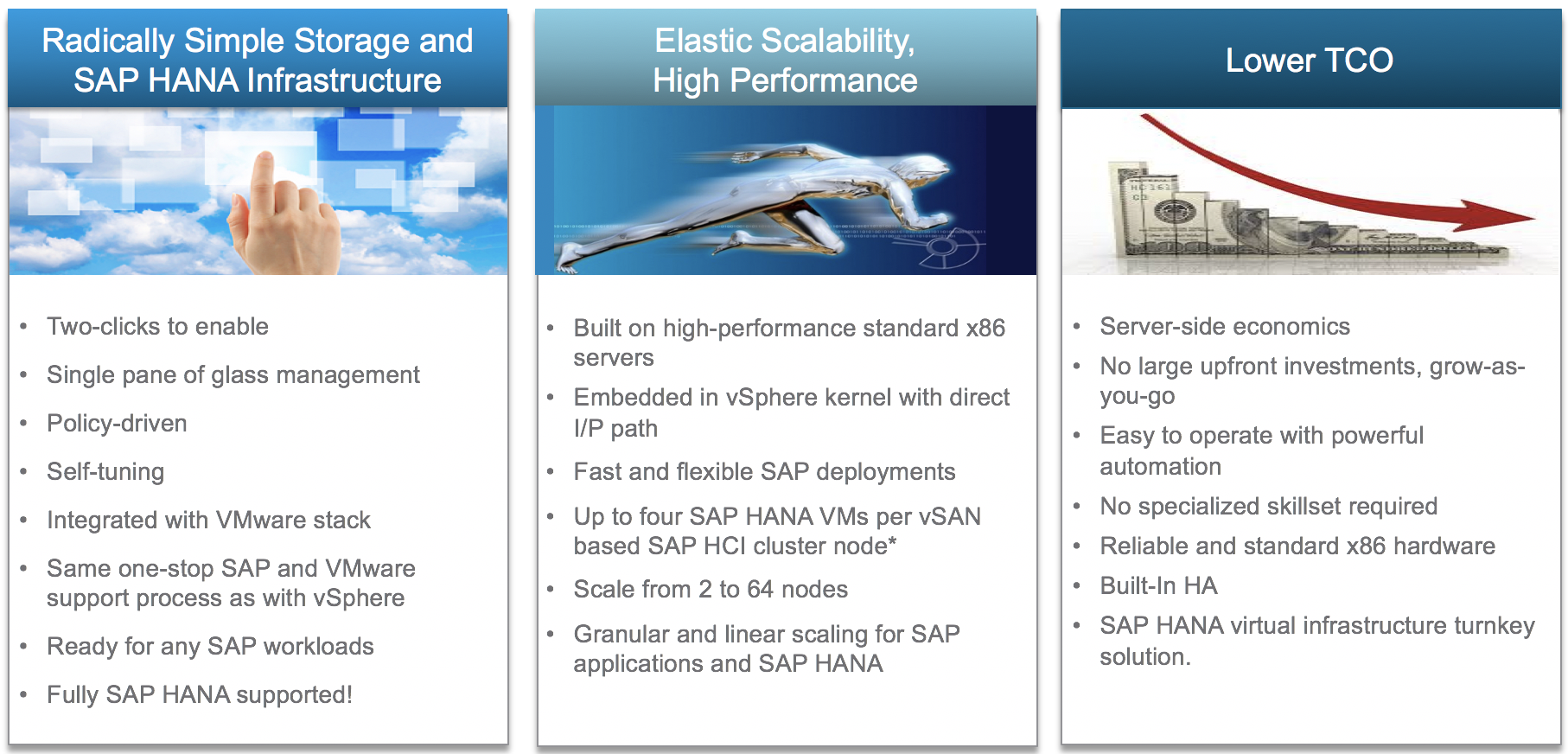

SAP environments with SAP HANA can use vSAN running on certified x86 servers to eliminate traditional IT silos of compute, storage, and networking with this hyperconverged solution.

All intelligence and management are moved into a single software stack, allowing for a VM- and application-centric policy-based control and automation.

This brings better security, predictable performance, operational simplicity, and cost-effectiveness into SAP HANA environments. vSAN also simplifies the complexity of traditional IT silos for compute, storage, and networking, as it allows managing the SAP HANA storage just like compute resources.

HCI lowers costs by combining the economics and simplicity of local storage with the features and benefits of shared storage, and it can be used for SAP HANA Scale-Up and Scale-Out deployments.

The following figure summarizes the benefits of VMware based SAP HCI solutions.

Only SAP and VMware certified and tested components, like server and storage systems, are supported. These components must comply with the SAP HANA Hardware Certification Hyperconverged Infrastructure (HANA-HyCI) test scenario.

Note: For SAP HANA workloads, only solutions that are offered by SAP and VMware HCI solution vendors and that are SAP HANA HCI solution certified are supported. SAP applications other than HANA are fully supported on a VMware vSAN cluster. No additional support notes or specific support processes are required from SAP when running other applications, such as an SAP application server, on vSAN.

Solution Components

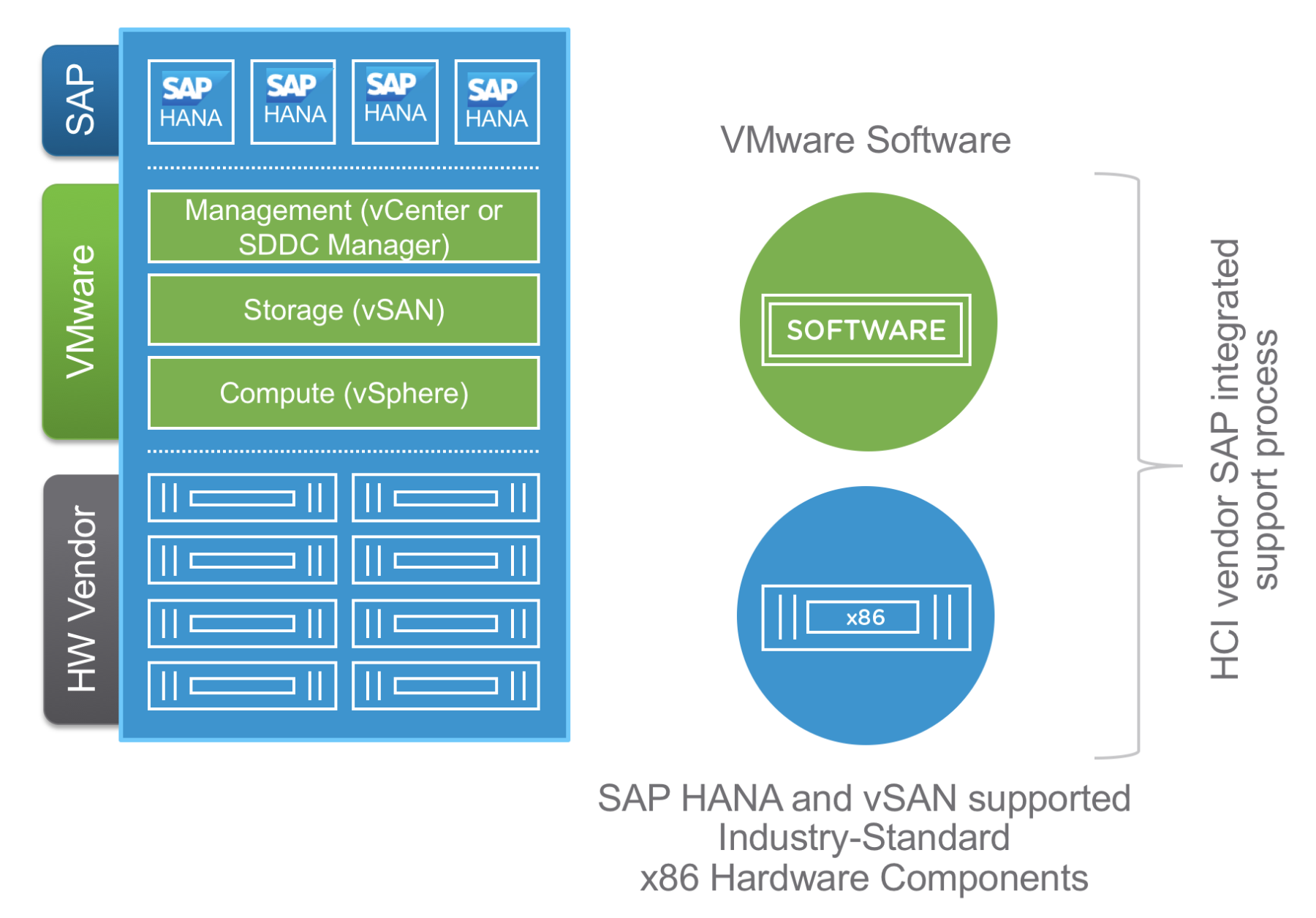

An SAP HANA HCI solution based on VMware technologies is a fully virtualized, cloud-ready turnkey infrastructure solution running on VMware vSphere and vSAN. All components, such as compute, storage, and networking, are delivered together as an HCI vendor specific SAP HANA solution. It is based on a tightly integrated VMware software stack, where vSAN is part of the VMware ESXi™ kernel to efficiently provide high-performing storage.

There are two ways to build a vSAN SAP HANA HCI cluster for an OEM:

- Use the existing vSAN/VCF appliances for a turnkey deployment and modify these systems to be SAP HANA HCI ready.

- Use the certified vSAN Ready Nodes and components and build an SAP HANA ready HCI solution.

The solution consists of the following components:

- VMware certified vSAN HW partner solutions, as listed on the VMware vSAN HCL (vSAN Appliances or Ready Nodes with vSAN Ready Components).

- SAP HANA supported server systems, as listed on the SAP HANA HCL.

- VMware vSphere Enterprise Plus starting with version 6.5 Update 2. For Cascade Lake based servers, vSphere 6.7 U3 or later is required; for Cooper Lake based servers, vSphere 7.0 U2 or later is required. Review SAP Note 2393917 for an updated list of supported vSphere versions.

- VMware vSAN Enterprise starting with version 6.6. For Cascade Lake based servers, vSAN 6.7 U3 or later is required. Review SAP Note 2718982 for an updated list of supported vSAN versions.

- VMware vCenter starting with version 6.5. Note that vCenter versions need to be aligned with the used vSphere version.

- The vSAN HCI detection script provided by VMware, implemented by the HCI vendor (it adds a vSAN HCI identifier to the VM configuration file; see the SAP HANA HCI VMware vSAN Detection Script section for details).

- HCI vendor SAP-integrated support process.
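The minimum vSphere releases named in the component list can be captured in a small lookup. The following is a minimal sketch in Python, not a support statement: the tuple encoding `(major, minor, update)` and the function name are assumptions made for illustration, and the check covers only the lower bound. The SAP notes referenced above remain the authoritative list of supported releases.

```python
# Minimum vSphere release per CPU generation, taken from the component list.
# Versions are modelled as (major, minor, update) tuples; this encoding is an
# assumption of this sketch, not a VMware or SAP convention.
MIN_VSPHERE = {
    "skylake": (6, 5, 2),       # vSphere 6.5 U2
    "cascade lake": (6, 7, 3),  # vSphere 6.7 U3
    "cooper lake": (7, 0, 2),   # vSphere 7.0 U2
}


def vsphere_meets_minimum(cpu_generation, version):
    """Return True if `version` is at or above the documented minimum.

    This only tests the lower bound; a release above the minimum can still be
    missing from the supported list in the SAP notes.
    """
    return tuple(version) >= MIN_VSPHERE[cpu_generation.lower()]


print(vsphere_meets_minimum("Cascade Lake", (6, 7, 2)))  # False
print(vsphere_meets_minimum("Cooper Lake", (7, 0, 3)))   # True
```

Tuple comparison in Python is lexicographic, which is why a plain `>=` is enough here.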

After the solutions are defined and built, SAP ICC validates them and lists them in the Certified HCI Solutions section of the SAP HANA Supported and Certified Hardware Directory.

Figure 1 provides an overview of the solution components.

Figure 1. SAP HANA HCI Solution Overview

SAP HANA HCI on vSAN—Support Status as of Note 2718982

SAP HANA HCI solutions are currently supported with the following VMware vSphere and vSAN versions and hardware:

- VMware vSphere Hypervisor 6.5, 6.7, 6.7 U2/U3, 7.0 U1/U2/U3c

- SAP HANA SPS 12 Rev. 122.19 (or later releases) for production single-VM and multi-VM use cases. See SAP Note 2393917 for detailed information and constraints. SAP HANA on vSAN has additional constraints; see the next section.

- vSphere 7.0 U1/U2/U3c are supported as of today for SAP HANA HCI solutions.

- VMware vSAN 6.6, 6.7, 6.7 U1/U2/U3, 7.0 U1/U2/U3c[1]

- vSAN 6.6, 6.7, 6.7 U1/U2/U3, 7.0 U1/U2/U3c: Maximum 4 SAP HANA VMs on 2, 4, and 8 socket servers

- Starting with the vSAN versions above, the HCI vendor can select the appropriate vSAN version for their HCI solution.

- The vSAN versions 6.6, 6.7, 7.0 U1/U2/U3c are included in vSphere version 6.5 U2 and vSphere version 6.7, 6.7 U2/U3, 7.0 U1/U2/U3c respectively.

- Minimum 3, maximum 64 nodes per SAP HANA HCI vSAN cluster.

- 4 or more vSAN nodes are recommended for optimal failure resilience.

- No CPU sockets need to be reserved for vSAN. Note that vSAN, just like ESXi, needs system resources. Refer to VMware KB 2113954 for information about vSAN resource needs.

- CPU architecture and VM maximums:

- Any supported Intel Skylake, Cascade Lake or Cooper Lake processors with 2, 4, and 8 socket servers with existing VMware vSphere virtualization boundaries, for example:

- max. 12 TB RAM and 448 vCPUs per SAP HANA Scale-Up VM, see SAP Note 3102813 for details. Also note that the actual usable vCPUs depend on the used CPU type: the current SAP HANA vSphere maximum vCPU number is 448 with 8-socket host systems.

- The certified hardware for HCI solutions can be found on the Certified Hyper-Converged Infrastructure Solutions page.

- SAP HANA Deployment Options (SAP note 3102813):

- SAP HANA Scale-Up:

- OLTP or mixed type workload up to 12 TB RAM and up to 448 vCPUs per VM

- OLAP type workload:

- SAP sizing Class L: up to 6 TB RAM and up to 448 vCPUs per VM

- SAP sizing Class M: up to 12 TB RAM and up to 448 vCPUs per VM

- SAP HANA Scale-Out:

- Up to 1 master and 7 worker nodes are possible, plus HA node(s). HA nodes should be added according to the cluster size. FAQ: SAP HANA High Availability

- SAP HANA Scale-Out system max. 16 TB memory with SAP HANA CPU L-Class* sizing:

- Up to 2 TB of RAM and up to 256 vCPUs per Scale-Out node (VM), sizing up to SAP HANA CPU Sizing Class L type workloads

- SAP HANA Scale-Out system max. 24 TB memory with SAP HANA M-Class* CPU sizing:

- Up to 3 TB of RAM and up to 256 vCPUs per Scale-Out node (VM), sizing up to SAP HANA CPU Sizing Class M** type workloads

- Additional requirements:

- Scale-Out is only supported by SAP with 4- or 8-socket servers and VMs that are at least 4 sockets wide and use up to 256 logical CPU threads. In the case of Cascade Lake 28-core CPUs, this represents a maximum VM size of 224 vCPUs.

Note: SAP Note 2718982 - SAP HANA on VMware vSphere and vSAN is continuously updated with the latest support information without further notice. The support status above is from February 2022. Before planning an SAP HANA HCI vSAN based solution, check note 2718982 for any updates.

The CPU Sizing Class required for SAP HANA BW can be found in the BW sizing report under the heading "RSDDSTAT ANALYSIS DETAILS". See SAP note 2610534 for details.

Reference Architecture

The purpose of the introduced vSAN for SAP HANA reference architectures is to provide a starting point for HCI solution vendors who want to build a vSAN based solution and to provide best practices and guidelines.

The reference architecture is tested and validated with the SAP specified HCI tests and is known to be good with the shown SAP HANA configuration.

Scope

This reference architecture:

- Provides a technical overview of an SAP HANA on a VMware HCI solution

- Shows the components used to test and validate vSAN with the SAP HCI tests and to meet the storage performance requirements of SAP HANA Tailored Datacenter Integration (TDI)

- Explains configuration guidelines and best practices

- Provides information about vSAN/HCI solution sizing for SAP HANA VMs

- Explains the deployment options from single DC to multiple suite configurations

SAP HANA HCI on vSAN—Architecture Overview

vSAN Clusters consist of two or more physical hosts that contain either a combination of magnetic disks and flash devices (hybrid configuration) or all-flash devices (all-flash configuration). All vSAN nodes contribute cache and capacity to the vSAN distributed/clustered datastore and are interconnected through a dedicated 10 GbE (minimum) or better path redundant network.

Note: In SAP HANA environments, four nodes are recommended for easier maintenance and Stretched Cluster readiness, but the minimum requirement of a three-host vSAN cluster for SAP HANA HCI can be used.

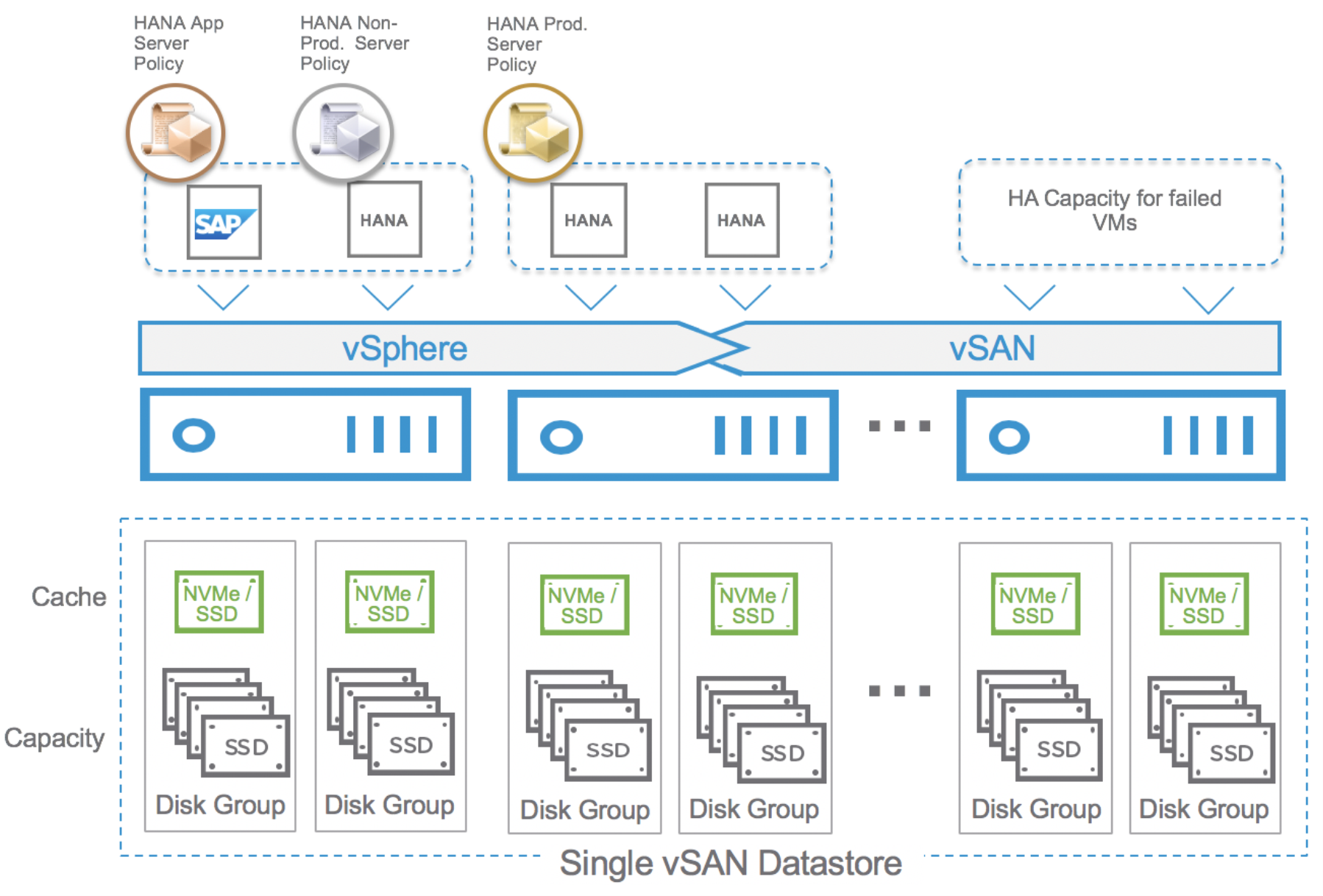

Figure 2 shows the high-level architecture of an SAP landscape running on a VMware-based SAP HANA HCI solution built upon several vSphere hosts and vSAN for a single data center configuration. Unlike with other SAP HANA HCI solutions, a VMware vSAN powered SAP HANA HCI solution can leverage all available ESXi host CPU and RAM resources. As shown in the figure, one host has enough compute resources available to support a complete host failover.

Figure 2. SAP HANA Applications on vSAN High-Level Architecture

Storage policies for less demanding SAP HANA application servers and for IO-demanding HANA VMs, shown in Figure 2 as bronze, silver, and gold, ensure that all VMs get the performance, resource commitments, failure tolerance, and quality of service they require in a policy-driven, dynamic environment. In such an environment, changing the assigned storage policy in response to the changing needs of a virtualized application directly influences the underlying infrastructure. The different storage policies allow changing the level of protection or possible IO limits without disturbing the operation of a VM, so that it remains available and maintains the defined SLAs and performance KPIs.

SAP HANA HCI on vSAN—Scalability and Sizes

SAP HANA on vSphere is supported across a range of SAP HANA system sizes. The smallest size is a half-socket CPU configuration with a minimum of 8 physical CPU cores and 128 GB of RAM. The largest size, as of today, is an 8-socket wide VM with 12 TB of RAM and 448* vCPUs. Scale-Out SAP HANA deployments are also supported with up to eight 3 TB nodes. In total, up to 24 TB of system memory can be deployed on SAP HANA vSAN HCI solutions for OLAP type workloads. Starting with vSphere 6.7 U2, there is also an alternative sizing possibility based on the SAP HANA workload. For more information, see SAP note 2779240.

vSphere 6.7 U3, 7.0 U1/U2, and U3c*, as supported for vSAN, provide the following maximums per physical host (Table 1). For SAP HANA, only servers with up to 8 physical CPU sockets are currently supported.

*The actual usable number of vCPUs depends on the selected CPU type and vSphere version. vSphere 7.0 U2 or later supports VMs with up to 24 TB and 768 vCPUs.

Table 1. Current vSphere HCI Supported Host Maximums

| | ESX 6.7 U3 / 7.0 | ESX 7.0 U2/U3c |
|---|---|---|
| Logical CPUs per host | 768 | 896 |
| Virtual machines per host | 1024 | 1024 |
| Virtual CPUs per host | 4096 | 4096 |
| Virtual CPUs per core | 32 | 32 |
| RAM per host | 16 TB | 24 TB |
| NUMA Nodes / CPU sockets per host | 16 (SAP HANA only 8 CPU sockets) | 16 (SAP HANA only 8 CPU sockets) |

Table 2 shows the maximum size of an SAP HANA VM and some relevant parameters such as virtual disk size and number of virtual NICs per VM. The current VM size is limited for SAP HANA to 448 vCPUs and 12 TB of RAM (8-socket wide VM).

Table 2. vSphere Guest (VM) Maximums

| | ESX 6.7 U3 / 7.0 | ESX 7.0 U2/U3c |
|---|---|---|
| Virtual CPUs per virtual machine | 256 | 768 |
| RAM per virtual machine | 6128 GB | 24 TB |
| Virtual CPUs per HANA virtual machine | 224 | 448 |
| RAM per HANA virtual machine | 6128 GB | <=12 TB |
| Virtual SCSI adapters per virtual machine | 4 | 4 |
| Virtual NVMe adapters per virtual machine | 4 | 4 |
| Virtual disk size | 62 TB | 62 TB |
| Virtual NICs per virtual machine | 10 | 10 |

Table 3 shows the vSAN maximum values: the component number or the size of a vSAN SAP HANA HCI cluster depends on the SAP HANA HCI vendor. The minimum SAP HANA supported cluster configuration is 3 vSAN cluster nodes, and up to 64 cluster nodes are supported by vSAN.

Table 3. vSAN Maximums

| VMware vSAN 6.7 (incl. U3) and 7.0 U1/U2 | vSAN ESXi host | vSAN Cluster | vSAN Virtual Machines |
|---|---|---|---|
| vSAN disk groups per host | 5 | | |
| Cache disks per disk group | 1 | | |
| Capacity tier maximum devices per disk group | 7 | | |
| Cache tier maximum devices per host | 5 | | |
| Capacity tier maximum devices | 35 | | |
| Components per vSAN host | 9,000 | | |
| Number of vSAN hosts in an All-Flash cluster | | 64 | |
| Number of vSAN hosts in a hybrid cluster | | 64 | |
| Number of vSAN datastores per cluster | | 1 | |
| Virtual machines per host | | | 200 |
| Virtual machine virtual disk size | | | 62 TB |
| Disk stripes per object | | | 12 |
| Percentage of flash read cache reservation | | | 100 |
| Percentage of object space reservation | | | 100 |
| vSAN networks/physical network fabrics | | | 2 |
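The per-host disk group limits in Table 3 lend themselves to a simple pre-deployment check. The sketch below is illustrative only: the function name and the `(cache_devices, capacity_devices)` input format are assumptions, and the limits are copied from Table 3 rather than queried from vSAN.

```python
# Per-host limits copied from Table 3 (vSAN 6.7 and 7.0 U1/U2).
MAX_DISK_GROUPS_PER_HOST = 5
CACHE_DISKS_PER_GROUP = 1
MAX_CAPACITY_DISKS_PER_GROUP = 7


def check_host_layout(disk_groups):
    """Validate one host's planned disk groups against the Table 3 limits.

    disk_groups is a list of (cache_devices, capacity_devices) tuples, one
    tuple per disk group (a hypothetical input format for this sketch).
    Returns a list of human-readable violations; an empty list means the
    layout is within the limits.
    """
    problems = []
    if len(disk_groups) > MAX_DISK_GROUPS_PER_HOST:
        problems.append(
            f"{len(disk_groups)} disk groups exceed the maximum of "
            f"{MAX_DISK_GROUPS_PER_HOST} per host"
        )
    for index, (cache, capacity) in enumerate(disk_groups, start=1):
        if cache != CACHE_DISKS_PER_GROUP:
            problems.append(f"disk group {index}: expected 1 cache device, got {cache}")
        if not 1 <= capacity <= MAX_CAPACITY_DISKS_PER_GROUP:
            problems.append(
                f"disk group {index}: {capacity} capacity devices, allowed 1 to "
                f"{MAX_CAPACITY_DISKS_PER_GROUP}"
            )
    return problems


# Four disk groups with one cache and six capacity devices each: no violations.
print(check_host_layout([(1, 6)] * 4))  # []
```

The largest layout that passes, five groups of seven capacity devices, gives the 35 capacity devices per host listed in the table.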

Table 4 shows the sizes of SAP HANA VMs possible on a supported SAP HANA HCI solution for production use cases with vSphere and vSAN 6.7 U3, 7.0 U1/U2, and U3c.

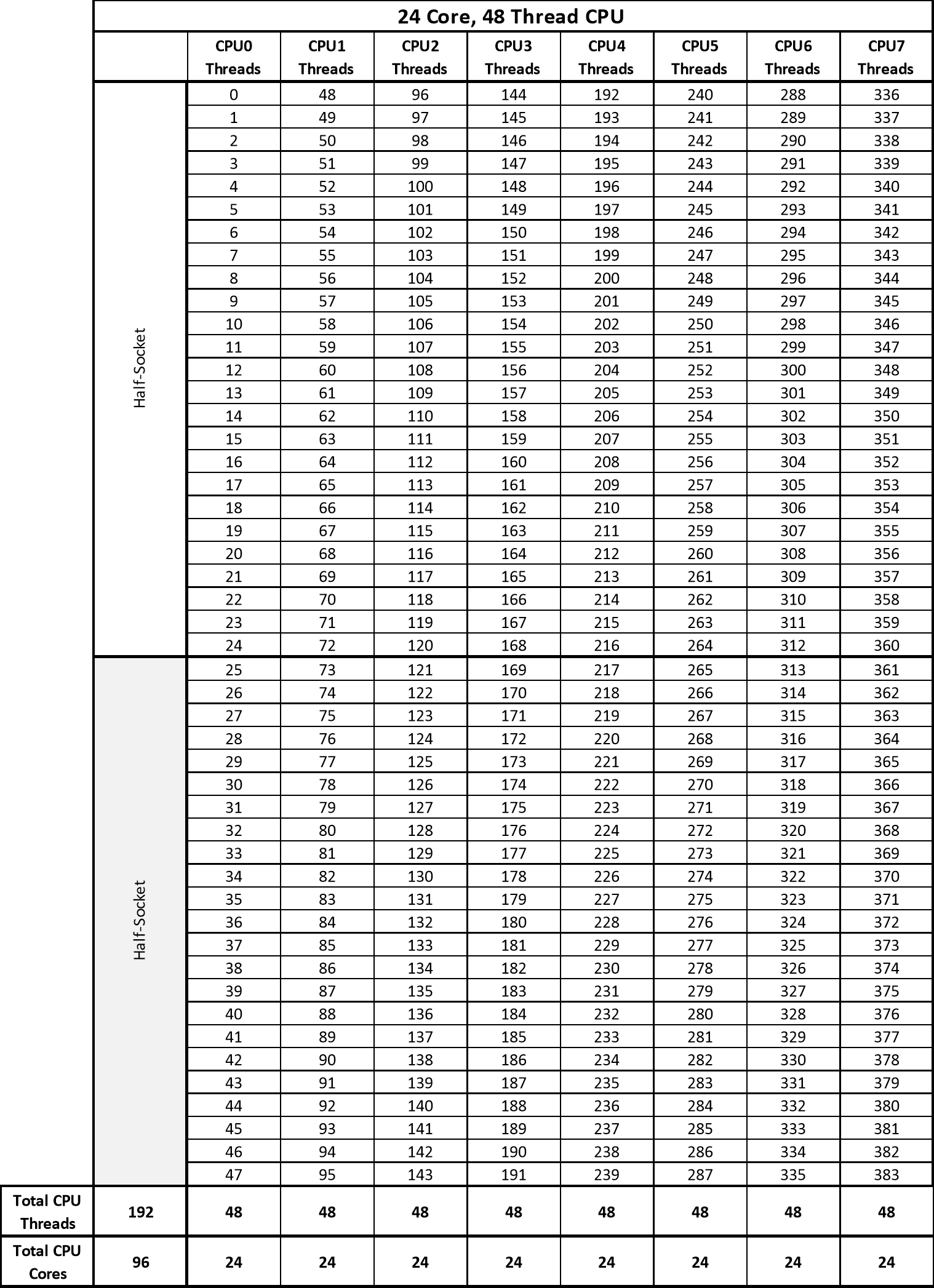

SAP HANA VMs can be configured as half-socket, full-socket, or 2-, 3-, 4-, 5-, 6-, 7-, or 8-socket wide VMs. No CPU sockets need to be reserved for vSAN. If an SAP HANA system fits into these maximums, it can be virtualized with vSphere in a single VM. If it is larger in terms of CPU or RAM needs, Scale-Out or SAP HANA data-tiering solutions can be used instead to meet the requirements.
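The relationship between VM width and vCPU count can be written down directly. The sketch below assumes two hardware threads per core (Hyperthreading enabled) and uses the single-VM limits stated in this document, 448 vCPUs and 12 TB of RAM. The function names are illustrative.

```python
HANA_MAX_VCPUS = 448  # current SAP HANA vCPU limit per VM stated in this document
HANA_MAX_RAM_TB = 12  # current SAP HANA RAM limit per Scale-Up VM


def vm_vcpus(sockets, cores_per_socket, threads_per_core=2):
    """vCPUs of a socket-wide VM; sockets may be 0.5 for a half-socket VM."""
    return int(sockets * cores_per_socket * threads_per_core)


def fits_single_vm(required_vcpus, required_ram_tb):
    """True if the requirement stays within the single-VM limits above."""
    return required_vcpus <= HANA_MAX_VCPUS and required_ram_tb <= HANA_MAX_RAM_TB


print(vm_vcpus(0.5, 28))        # 28  (half-socket, 28-core CPU)
print(vm_vcpus(4, 28))          # 224 (4-socket wide VM)
print(vm_vcpus(8, 28))          # 448 (8-socket wide VM)
print(fits_single_vm(448, 12))  # True
print(fits_single_vm(224, 16))  # False: consider Scale-Out or data tiering
```

With 28-core CPUs, the 4-socket and 8-socket results match the 224 and 448 vCPU figures quoted for SAP HANA VMs.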

We have validated that 4 SAP HANA VMs can run consolidated on a vSAN configuration on systems with 2 or more sockets. For detailed information, see the Reference Test System Configuration section.

Table 4 shows, for example CPUs, the VM sizes that can be deployed on a vSAN SAP HANA HCI node.

Note: RAM must be reserved for ESXi. The RAM figures listed in Table 4 show the theoretical RAM maximums of a VM. The RAM for ESXi needs to be subtracted from this RAM size. No CPU sockets or other system resources need to be reserved for vSAN. VMware KB 2113954 provides details about vSAN memory consumption in ESXi. For sizing purposes, an additional 10% sizing buffer should be subtracted from the virtual SAP HANA SAPS capacity to factor in the vSAN CPU resource costs. This buffer is a sizing value: the actual overhead can be much lower in typical customer environments, or higher in rare situations such as vSAN storage re-organization tasks.

| SAP Benchmark | 2016067 (20. Dec. 2016) | 2020015 (05. May 2020) | 2019023 (02. April 2019) | 2020050 (11. Dec. 2020) |
|---|---|---|---|---|
| Max supported RAM per CPU as of Intel datasheet | 3 TB (maximal supported RAM by SAP is 1 TB¹) | 1024 GB | 4.5 TB | 4.5 TB (HL) |
| CPU Cores per socket as of Intel datasheet | 24 | 28 | 28 | 28 |
| Max. NUMA Nodes per ESXi Server | 8² | 2 | 8² | 8² |
| | 1785 (core w/o HT), 315 (HT gain), based on SD benchmark cert. 2016067 | 2459 (core w/o HT), 434 (HT gain), based on SD benchmark cert. 2020015 | 2596 (core w/o HT), 458 (HT gain), based on SD benchmark cert. 2019023 | 2432 (core w/o HT), 429 (HT gain), based on SD benchmark cert. 2020050 |
| 0.5 Socket SAP HANA VM (Half-Socket) | 1 to 16 x 12 physical core VM with min. 128 GB and max. 512 GB³ | 1 to 4 x 14 physical core VMs with min. 128 GB RAM and max. 512 GB³ | 1 to 16 x 14 physical core VMs with min. 128 GB RAM and max. 768 GB³ | 1 to 16 x 14 physical core VMs with min. 128 GB RAM and max. 768 GB³ |
| | vSAPS⁴,⁵ 21,000, 24 vCPUs | vSAPS⁴,⁵ 34,000, 28 vCPUs | vSAPS⁴,⁵ 36,000, 28 vCPUs | vSAPS⁴,⁵ 34,000, 28 vCPUs |
| 1 Socket SAP HANA VM | 1 to 8 x 24 physical core VM with min. 128 GB RAM and max. 1024 GB | 1 to 2 x 28 physical core VM with min. 128 GB RAM and max. 1024 GB | 1 to 8 x 28 physical core VM with min. 128 GB RAM and max. 1536 GB | 1 to 8 x 28 physical core VM with min. 128 GB RAM and max. 1536 GB |
| | vSAPS⁴,⁵ 50,000, 48 vCPUs | vSAPS⁴,⁵ 81,000, 56 vCPUs | vSAPS⁴,⁵ 85,000, 56 vCPUs | vSAPS⁴,⁵ 80,000, 56 vCPUs |
| 2 Socket SAP HANA VM | 1 to 4 x 48 physical core VM with min. 128 GB RAM and max. 2048 GB | 1 x 56 physical core VM with min. 128 GB RAM and max. 2048 GB | 1 to 4 x 56 physical core VM with min. 128 GB RAM and max. 3072 GB | 1 to 4 x 56 physical core VM with min. 128 GB RAM and max. 3072 GB |
| | vSAPS⁴,⁵ 100,000, 96 vCPUs | vSAPS⁴,⁵ 162,000, 112 vCPUs | vSAPS⁴,⁵ 171,000, 112 vCPUs | vSAPS⁴,⁵ 160,000, 112 vCPUs |
| 3 Socket SAP HANA VM | 1 to 2 x 72 physical core VM with min. 128 GB RAM and max. 3072 GB | - | 1 to 2 x 84 physical core VM with min. 128 GB RAM and max. 4608 GB | 1 to 2 x 84 physical core VM with min. 128 GB RAM and max. 4608 GB |
| | vSAPS⁴,⁵ 151,000, 144 vCPUs | - | vSAPS⁴,⁵ 256,000, 168 vCPUs | vSAPS⁴,⁵ 240,000, 168 vCPUs |
| 4 Socket SAP HANA VM | 1 to 2 x 96 physical core VM with min. 128 GB RAM and max. 4096 GB | - | 1 to 2 x 112 physical core VM with min. 128 GB RAM and max. 6128 GB | 1 to 2 x 112 physical core VM with min. 128 GB RAM and max. 6128 GB |
| | vSAPS⁴,⁵ 201,000, 192 vCPUs | - | vSAPS⁴,⁵ 342,000, 224 vCPUs | vSAPS⁴,⁵ 320,000, 224 vCPUs |
| 8 Socket SAP HANA VM | - | - | 1 x 224 physical core VM with min. 128 GB RAM and max. 12096 GB | 1 x 224 physical core VM with min. 128 GB RAM and max. 12096 GB |
| | - | - | vSAPS⁴,⁵ 684,000, 448 vCPUs | vSAPS⁴,⁵ 640,000, 448 vCPUs |

² A maximum of 224 vCPUs can be configured per VM with vSphere 6.7 U2 and later with a Skylake, Cascade Lake, or Cooper Lake CPU.

³ The half-socket RAM figures listed in the table assume evenly configured half-socket VM CPU configurations and RAM sizes.

⁴ The listed vSAPS figures are based on published SD benchmark results with Hyperthreading (2-vCPU configuration) minus 10% virtualization costs. In the case of a half-socket configuration, 15% of the SD capacity must be subtracted in addition to the 10% virtualization costs. The figures shown are rounded, are based on rounded SAPS performance figures from published SAP SD benchmarks, and can only be used for Suite on HANA, BW on HANA, or BW/4HANA workloads. For sizing parameters for mixed HANA workloads, contact SAP or your HW vendor.

⁵ In the SAP HANA HCI (vSAN) use case, an additional 10% sizing buffer should be subtracted from the vSAPS capacity shown.
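The vSAPS rows of Table 4 can be reproduced from the per-core figures in the same table. The sketch below rests on an inference from the arithmetic, not on a formula published by SAP or VMware: it assumes that the per-core values (core without HT plus HT gain) are already net of the 10% virtualization costs, and that results are rounded down to the nearest thousand. The extra half-socket deduction (15%) and the optional vSAN sizing buffer (10%) follow the footnotes.

```python
def vsaps(cores_per_socket, saps_core, saps_ht_gain, sockets=1.0, vsan_buffer=False):
    """Estimate the vSAPS of a socket-wide VM from Table 4 per-core figures.

    Assumptions of this sketch: per-core figures already include the 10%
    virtualization deduction, and results are rounded down to full thousands.
    """
    cores = int(sockets * cores_per_socket)
    capacity = cores * (saps_core + saps_ht_gain)
    if sockets < 1:
        capacity = capacity * 85 // 100  # half-socket: subtract a further 15%
    if vsan_buffer:
        capacity = capacity * 90 // 100  # HCI use case: 10% vSAN sizing buffer
    return capacity // 1000 * 1000


# Benchmark cert. 2019023 column: 28 cores, 2596 + 458 per core.
print(vsaps(28, 2596, 458, sockets=0.5))  # 36000
print(vsaps(28, 2596, 458, sockets=1))    # 85000
print(vsaps(28, 2596, 458, sockets=8))    # 684000
```

Passing `vsan_buffer=True` applies the additional 10% buffer before rounding. Whether the buffer should be applied before or after rounding is not specified in the text, so treat the buffered result as an approximation.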

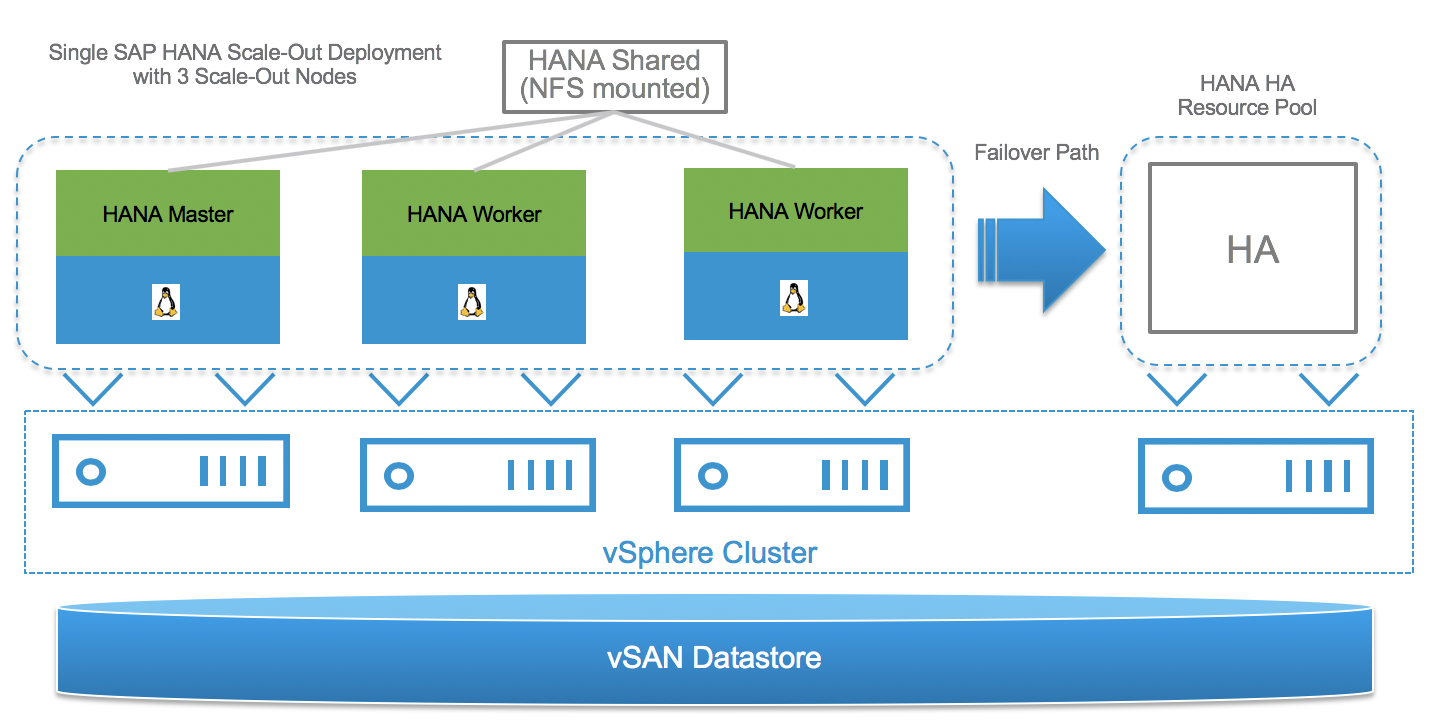

Scale-Out deployments are supported with up to eight[2] 3 TB 4-socket wide VMs. This provides for an OLAP type SAP HANA instance up to 24 TB in total system RAM by utilizing the Scale-Out deployment model.

Figure 3 shows a 4+1 SAP HANA HCI BW cluster. The required SAP HANA “Shared Directory” is mounted directly on the SAP HANA VMs via NFS from an NFS server external to the SAP HANA HCI solution. At least one vSAN node is needed to support HA.
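The Scale-Out limits stated earlier (up to 1 master plus 7 worker nodes, 2 TB per node for Class L sizing and 3 TB per node for Class M sizing) can be turned into a rough node-count estimate. This is a sketch only: the function name and the returned dictionary are illustrative, and a real sizing also has to consider CPU sizing class, SAPS, and the HA node recommendation for the cluster size.

```python
import math

NODE_RAM_TB = {"L": 2, "M": 3}  # per-node RAM limit by SAP HANA CPU sizing class
MAX_ACTIVE_NODES = 8            # 1 master + 7 workers, HA nodes not counted


def scale_out_layout(required_ram_tb, sizing_class, ha_nodes=1):
    """Return the number of active nodes needed for the requested system RAM."""
    per_node = NODE_RAM_TB[sizing_class]
    active = math.ceil(required_ram_tb / per_node)
    if active > MAX_ACTIVE_NODES:
        raise ValueError(
            f"{required_ram_tb} TB needs {active} nodes; the limit is {MAX_ACTIVE_NODES}"
        )
    return {
        "active_nodes": active,
        "ha_nodes": ha_nodes,
        "system_ram_tb": active * per_node,
    }


# A 12 TB Class M system maps to a 4+1 layout like the one in Figure 3.
print(scale_out_layout(12, "M"))
```

The upper bounds come out as 16 TB for Class L and 24 TB for Class M, matching the Scale-Out maximums listed in the support status section.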

Figure 3. SAP HANA Scale-Out Example Cluster on vSAN (HCI servers)

VMware HA

VMware vSphere HA delivers a vSphere built-in high availability solution, which can be used to protect SAP HANA. VMware HA provides unified and cost-effective failover protection against hardware and operating system outages and works with a vSAN datastore just like it does with any other supported shared storage solution with VMFS. The options described here apply to a single site.

VMware HA to Protect Scale-Up and Scale-Out SAP HANA Systems

VMware HA protects SAP HANA Scale-Up and Scale-Out deployments without any dependencies on external components, such as DNS servers, or solutions, such as the SAP HANA Storage Connector API or STONITH scripts.

Note: See the Architecture Guidelines and Best Practices for Deployments of SAP HANA on VMware vSphere for detailed information about how VMware HA is used to protect any SAP HANA deployment on vSphere and how to install an SAP HANA Scale-Out system on VMware vSphere.

In the event of a hardware or OS failure, the entire SAP HANA VM is restarted either on the same or other hosts in the vSphere cluster. Because all virtual disks, such as OS, data, and log VMDKs are stored on the shared vSAN datastore, no specific storage tasks, STONITH scripts, or extra cluster solutions are required.

Figure 4 shows the concept of a typical n+1 configuration, which is the standard deployment of an SAP HANA HCI solution. Deploying an n+2 configuration is recommended for easy maintenance and to protect your systems against a failure while you perform maintenance.

Figure 4. VMware HA Protected SAP HANA VMs in an n+1 vSAN Example Cluster Configuration

Figure 5 shows the same configuration with an active-active cluster. This ensures that all hosts of a vSAN cluster are used while still providing enough failover capacity for all running VMs in the case of a host failure.

Figure 5. VMware HA Active-Active n+1 vSAN Example Cluster Configuration
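Whether an n+1 or n+2 layout leaves enough failover capacity can be estimated with a simple packing check. The sketch below is a simplification under stated assumptions: it looks at RAM only, treats all hosts as identical, ignores ESXi and vSAN overhead as well as CPU and NUMA placement, and uses a first-fit-decreasing heuristic, which can report `False` in rare cases where a valid placement exists. The function name and inputs are illustrative.

```python
def fits_after_failures(vm_ram_gb, host_ram_gb, hosts, failures=1):
    """Check whether all VMs still fit on the hosts that survive `failures`.

    vm_ram_gb is a list of VM memory sizes in GB; host_ram_gb is the usable
    RAM per host. Uses first-fit decreasing: place the largest VMs first on
    the first surviving host with enough free RAM.
    """
    free = [host_ram_gb] * (hosts - failures)
    for vm in sorted(vm_ram_gb, reverse=True):
        for index, available in enumerate(free):
            if vm <= available:
                free[index] -= vm
                break
        else:
            return False  # no surviving host can take this VM
    return True


# Three 3 TB VMs on 6 TB hosts: a 3-host cluster tolerates one host failure.
print(fits_after_failures([3000, 3000, 3000], 6000, hosts=3))  # True
# Three 6 TB VMs need a fourth host to tolerate one failure.
print(fits_after_failures([6000, 6000, 6000], 6000, hosts=3))  # False
print(fits_after_failures([6000, 6000, 6000], 6000, hosts=4))  # True
```

Setting `failures=2` models the n+2 configuration recommended for maintenance windows.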

The same concept is also used for SAP HANA Scale-Out configurations. Note that for SAP HANA Scale-Out, only 4-socket or 8-socket servers are supported, and each SAP HANA VM must be configured with at least 4 virtual CPU sockets. Figure 6 shows a configuration of 3 SAP HANA VMs (1 master with 2 worker nodes) running on the virtual SAP HANA HCI platform. The NFS-mounted SAP HANA shared directory is not part of the vSAN cluster and needs to be provided by a vSAN-external NFS service[*].

Figure 6. SAP HANA Scale-Out VMware HA n+1 vSAN Example Cluster Configuration

Unlike physical deployments, there are no dependencies on external components, such as DNS servers, SAP HANA Storage Connector API, or STONITH scripts. VMware HA will simply restart the failed SAP HANA VM on the VMware HA/standby server. HANA shared is mounted via NFS, just as recommended with physical systems and will move with the VM that has failed. The access to HANA shared is therefore guaranteed.

Note: VMware HA can protect only against OS or VM crashes or HW failures. It cannot protect against logical failures or OS file system corruptions that are not handled by the OS file system. The vSAN 7.0 U1 File Service (NFS) is not yet supported.

vSphere Clustering Service (vCLS)

Starting with vSphere 7.0 Update 1, vSphere Clustering Service (vCLS) is enabled by upgrading vCenter to 7.0 U1 and runs in all vSphere clusters. VMware wants critical cluster services such as vSphere High Availability (HA) and vSphere Distributed Resource Scheduler (DRS) to be always available, and vCLS is an initiative to realize that vision.

The dependency of these cluster services on vCenter is not ideal. With the vSphere 7 Update 1 release, VMware introduced vCLS as the first step to decouple and distribute the control plane for clustering services in vSphere and to remove the vCenter dependency. In the future, if vCenter Server becomes unavailable, vCLS will ensure that the cluster services remain available to maintain the resources and health of the workloads that run in the clusters.

vCLS Deployment Guidelines for SAP HANA Landscapes

According to SAP notes 2937606 and 3102813, it is not supported to run a non-SAP HANA VM on the same NUMA node where an SAP HANA VM already runs:

“SAP HANA VMs can get co-deployed with SAP non-production HANA or any other workload VMs on the same vSphere ESXi host, as long as the production SAP HANA VMs are not negatively impacted by the co-deployed VMs. In case of negative impact on SAP HANA, SAP may ask to remove any other workload”. Also, “no NUMA node sharing between SAP HANA and non-HANA allowed”.

Because vCLS VMs are installed automatically and mandatorily when upgrading to vCenter 7.0 Update 1, the guidelines above make it necessary to check whether vCLS VMs were co-deployed on vSphere ESXi hosts that run SAP HANA production-level VMs. If this is the case, these vCLS VMs must be migrated to hosts that do not run SAP HANA production-level VMs.

This can be achieved by configuring vCLS VM Anti-Affinity Policies. A vCLS VM anti-affinity policy describes a relationship between VMs that have been assigned a special anti-affinity tag (for example, the tag name SAP HANA) and vCLS system VMs.

If this tag is assigned to SAP HANA VMs, the vCLS VM anti-affinity policy discourages placement of vCLS VMs and SAP HANA VMs on the same host. Such a policy helps ensure that vCLS VMs and SAP HANA VMs are not co-deployed.

After the policy is created and tags were assigned, the placement engine attempts to place vCLS VMs on the hosts where tagged VMs are not running.
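The check described above, finding hosts where vCLS VMs and tagged SAP HANA VMs are co-deployed, can be sketched against an exported inventory. The example below does not call any vCenter API. The inventory structure, the host and VM names, and the rule that vCLS VMs are recognized by a `vCLS` name prefix are all assumptions made for illustration.

```python
def hosts_with_vcls_and_hana(inventory, hana_tag="SAP HANA", vcls_prefix="vCLS"):
    """Return hosts that run at least one vCLS VM and one HANA-tagged VM.

    inventory maps a host name to a list of (vm_name, tags) pairs, where tags
    is a set of tag names (a hypothetical export format for this sketch).
    """
    flagged = []
    for host, vms in inventory.items():
        has_vcls = any(name.startswith(vcls_prefix) for name, _ in vms)
        has_hana = any(hana_tag in tags for _, tags in vms)
        if has_vcls and has_hana:
            flagged.append(host)
    return sorted(flagged)


# Hypothetical three-host cluster; esx01 needs its vCLS VM moved.
inventory = {
    "esx01": [("hana-prd-01", {"SAP HANA"}), ("vCLS (1)", set())],
    "esx02": [("hana-prd-02", {"SAP HANA"})],
    "esx03": [("app-server-01", set()), ("vCLS (2)", set())],
}
print(hosts_with_vcls_and_hana(inventory))  # ['esx01']
```

An empty result indicates that no host in the exported inventory mixes vCLS VMs with tagged SAP HANA VMs.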

In the case of an SAP HANA HCI partner system validation, or if an additional non-SAP HANA ESXi host cannot be added to the cluster, Retreat Mode can be used to remove the vCLS VMs from this cluster. Note that enabling Retreat Mode on a cluster impacts cluster services (DRS).

Note: Setting vCLS VM Anti-Affinity Policies ensures that a vCLS VM is not placed on hosts that run SAP HANA VMs. This requires hosts that do not run SAP HANA tagged VMs. If this is not possible, you can use Retreat Mode to remove the vCLS VMs from the cluster. In the case of VCF, an additional node to offload the vCLS VMs is required per cluster (for example, the HA node).

For more details and vCLS deployment examples in the context of SAP HANA please refer to this blog.

VMware vSAN Stretched Cluster Support for SAP HANA Systems

Stretched clusters extend the vSAN cluster from a single data site to two sites for a higher level of availability and cross-site load balancing. Stretched clusters are typically deployed in environments where the distance between data centers is limited, such as metropolitan or campus environments. SAP HANA is now tested and supported on vSAN stretched cluster 7.0 U1/U2/U3c environments. SAP HANA requires that log file writes have a latency of less than 1 ms, and this log file write latency should not exceed 1 ms across primary and secondary sites. The underlying network infrastructure plays a key role, as the distance supported between the two sites depends solely on the ability to achieve a latency of 1 ms across these sites for SAP HANA.

Tests conducted with one of our HCI hardware partners showed that, at distances of up to 30 km between the two sites, all SAP HANA performance throughput and latency KPIs were passed across the tested SAP HANA VMs. Achievable distances can be lower in practice because network latency depends on the network components and architecture used. Therefore, distances up to 5 km are supported. Support for SAP HANA HCI stretched cluster configurations is the responsibility of the SAP HANA HCI hardware vendor, not VMware.
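To see how inter-site distance eats into the 1 ms log write budget, it helps to estimate the signal propagation time alone. The sketch below assumes a one-way propagation delay of roughly 5 microseconds per kilometer of optical fiber, a common rule of thumb rather than a figure from this document. It deliberately ignores switch, NIC, storage stack, and device latency, which is why real deployments reach the limit well before propagation delay alone would suggest.

```python
FIBER_US_PER_KM = 5.0  # assumed one-way propagation delay in fiber (rule of thumb)


def propagation_rtt_ms(distance_km):
    """Round-trip propagation delay in milliseconds for a given fiber length."""
    return 2 * distance_km * FIBER_US_PER_KM / 1000


def remaining_budget_ms(distance_km, budget_ms=1.0):
    """Latency budget left for network devices and storage after propagation."""
    return budget_ms - propagation_rtt_ms(distance_km)


for km in (5, 30):
    print(km, "km:", propagation_rtt_ms(km), "ms round trip,",
          round(remaining_budget_ms(km), 3), "ms left of the 1 ms budget")
```

Under this assumption, propagation consumes about 0.05 ms of the budget at 5 km and about 0.3 ms at 30 km. The actual fiber path is usually longer than the geographic distance between the sites.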

You can use stretched clusters to manage planned maintenance and avoid disaster scenarios, because maintenance or loss of one site does not affect the overall operation of the cluster. In a stretched cluster configuration, both data sites are active sites. If either site fails, vSAN uses the storage on the other site. vSphere HA restarts any VM that must be restarted on the remaining active site.

You must designate one site as the preferred site. The other site becomes a secondary or nonpreferred site. If the network connection between the two active sites is lost, vSAN continues operation with the preferred site. The site designated as preferred typically is the one that remains in operation unless it is resyncing or has another issue. The site that leads to maximum data availability is the one that remains in operation.

The example below shows a simplified stretched cluster configuration. Site X is the preferred (production) site, which can be a complete datacenter or a single rack. Site Y is the secondary site and, in this case, the failover site if site X fails. Local failures can be compensated by providing HA capacity inside the site. A site failover can be supported by providing enough failover capacity at site Y, by freeing up failover capacity, or by failing over only production-level SAP HANA VMs. The witness appliance must run on a host placed in an independent site Z. The witness ultimately decides on a site failover and can run, for instance, on an ESXi host that supports the SAP application server VMs.

Figure 7. SAP HANA Stretched Cluster Example Configuration

Summary:

- Tested distances of up to 30 km maintain the <= 1 ms KPI for SAP HANA log writing.

- Stretched clusters are normally used campus-wide; therefore, distances up to 5 km are supportable.

- The witness VM can run on any vSphere host in a third site “Z”.

- Failover capacity is n+1, where 1 stands for one host failure. n+2 would support two host failures.

- Failover can be bi-directional.
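The n+1 rule from the summary can be sketched as a small calculation. This is an illustrative sketch only: the function name and parameters are hypothetical, and it assumes every host offers the same number of SAP HANA VM slots.

```python
def usable_hana_vms(hosts: int, vms_per_host: int, ha_hosts: int = 1) -> int:
    """Number of SAP HANA VMs that can run while keeping `ha_hosts`
    hosts' worth of capacity free for failover (n+1 -> ha_hosts=1)."""
    if hosts <= 0 or vms_per_host <= 0 or ha_hosts < 0:
        raise ValueError("hosts and vms_per_host must be positive, ha_hosts >= 0")
    if ha_hosts >= hosts:
        raise ValueError("at least one host must remain for production VMs")
    # Capacity of the reserved HA hosts is not counted as usable.
    return (hosts - ha_hosts) * vms_per_host


# 3-host cluster, 4 VMs per host, one host reserved (n+1)
print(usable_hana_vms(hosts=3, vms_per_host=4))
# 4-host cluster, 2 VMs per host, two hosts reserved (n+2)
print(usable_hana_vms(hosts=4, vms_per_host=2, ha_hosts=2))
```

With one reserved host, a 3-host cluster with four VMs per host yields 8 VMs and a 4-host cluster with two VMs per host yields 6 VMs, which matches the VM counts quoted for the reference configurations in this guide.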

SAP HANA Stretched Clusters VM Storage Policy

For an SAP HANA VM in vSAN stretched cluster, the VM storage policy should be enhanced for Dual Site Mirroring and with Failures to tolerate equal to 1 failure – RAID1 (Mirroring) as shown in figure 8.

Figure 8. SAP HANA VM Storage Policy in vSAN Stretched Cluster

When Site Disaster Tolerance is set to “Dual Site Mirroring”, a copy of the data goes to both sites. When the “Primary Failures to Tolerate” rule is equal to 1, writes continue to be mirrored across sites. Therefore, check and ensure that the required disk capacity is available on each site.

Reference Test System Configuration

This section introduces the resources and configurations including:

- Reference environment

- Quality of service policy

- Hardware resources

- Software resources

Reference Environment

To ensure that a vSAN based HCI solution is SAP HANA ready, SAP provided test tools and test cases (HANA-HyCI 1.0 v1.2 test scenario). These test tools validate performance, scalability, and consistency. Under “Certified Hyper-Converged Infrastructure Solutions”, a complete set of vSAN supported configurations from different vendors is listed. For example, this link provides a list for Cascade Lake and vSAN supported configurations.

The reference configurations listed on the next pages are validated by VMware and provide an example for Scale-Up and Scale-Out configurations. The Scale-Up example configurations are based on 2-, 4-, and 8-socket systems, configured as a 3-host and 4-host vSAN cluster with 2 disk groups and 4 disk groups per server. The configurations had Intel Optane NVMe devices as the caching layer and SSD based capacity disks. With both configurations, it is possible to meet the SAP HANA KPIs defined for each configuration. The Scale-Out test configuration ran on a 4-host vSAN cluster. Each host had 3 TB memory, 4 CPU sockets, and 4 disk groups installed and configured. In the 8-socket host case, two individual Scale-Out systems could be consolidated on an 8-socket host configuration.

IOPS Limit per Partition Policy—Quality of Service Option to Storage Throughput

vSAN storage policies provide multiple options. One of the capabilities built into a storage policy is an IOPS limit for the partition on which the policy is enforced. This IOPS value can be set when the policy is created. A value of “0” means that there is no IOPS limit on the partition. After the policy is created, it can be applied to one or more partitions, on a single VM or across VMs, depending on requirements.

A typical HANA instance has a log and a data partition. We ran internal test scenarios in which the IOPS limit was set on the data partition only, across a set of 8 HANA VMs with RAID 1, running on a 3-host cluster with one HA host. To do this, configure a policy with limits and assign it to the VMDK that will be mounted as the data partition. A minimum setting of around 13,700 IOPS on the test setup's data partition was shown to be capable of meeting the KPI requirements of the data partition. This is the minimum value; anything above it is also valid. However, for your configuration, it is advisable to run a sanity check with a value in this range. For the log partition, it is not recommended to set any limits at this time.

Use cases:

1. Depending on application requirements, such a policy ensures a fair IO distribution among different VMs for that specific partition. Customers can use this option when multiple HANA VMs are running and control is required to ensure a minimum IO distribution among them.

2. Another use case is to limit the IOPS of other VMs while leaving one main database VM unlimited so that it gets most of the IO resources.
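The guidance on IOPS limits can be expressed as a simple pre-check before a policy is assigned. The sketch below is hypothetical helper code, not a vSAN API: the function and constant names are invented for illustration, and the 13,700 IOPS floor is only the value observed on the test setup described above, so it should be re-validated for any other configuration.

```python
UNLIMITED = 0                   # a limit of 0 means "no IOPS limit"
TESTED_MIN_DATA_IOPS = 13_700   # observed on the VMware test setup only


def check_iops_limit(partition: str, limit: int) -> list[str]:
    """Return warnings for a proposed IOPS limit on a HANA partition.

    `partition` is "data" or "log"; `limit` is the IOPS limit to be
    set in the storage policy (0 = unlimited).
    """
    if limit < 0:
        raise ValueError("IOPS limit must be 0 (unlimited) or positive")
    warnings = []
    if partition == "log" and limit != UNLIMITED:
        warnings.append("log: setting an IOPS limit is not recommended")
    if partition == "data" and limit != UNLIMITED and limit < TESTED_MIN_DATA_IOPS:
        warnings.append(
            f"data: {limit} IOPS is below the tested minimum of {TESTED_MIN_DATA_IOPS}"
        )
    return warnings


print(check_iops_limit("data", 15_000))  # no warnings expected
print(check_iops_limit("data", 10_000))  # below the tested minimum
print(check_iops_limit("log", 5_000))    # limits on log are discouraged
```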

Hardware Resources

Different vSAN clusters were configured in the 4-host and 3-host environments respectively:

- A vSAN SAP HANA HCI environment with four 2-socket based Cascade Lake processors with 2 disk groups per each vSAN host (example 1).

- A vSAN SAP HANA HCI environment with 4 hosts, having a combination of three 4 socket Cascade Lake and one 2 socket Cascade Lake processors configured with 4 disk groups per each vSAN host (example 2).

- A vSAN SAP HANA HCI environment with 3 hosts, having a combination of two 8 socket Cascade Lake and one 4 socket Cascade Lake processors configured with 3 disk groups per each vSAN host (example 3).

- A vSAN SAP HANA HCI environment with 4 hosts, with 4 x 4 socket Cascade Lake processors configured with 4 disk groups per each vSAN host as a scale-out SAP HANA system (example 4).

- A vSAN SAP HANA HCI environment with 4 hosts, with 3 x 4 socket Cooper Lake processors configured with 4 disk groups per each vSAN host (example 5).

2-Socket/2-Disk Group SAP HANA HCI vSAN Based—Example 1

The 2-socket/2-disk group test environment had four vSphere ESXi servers configured and used direct-attached SAS and NVMe devices inside the hosts to provide a vSAN datastore that meets the SAP HANA HCI test requirements and KPIs for two SAP HANA VMs per server.

The fourth node was part of the vSAN datastore and provided HA capabilities for the total 6 running SAP HANA VMs in the vSAN cluster.

Each vSphere ESXi server had two disk groups each consisting of one 1.46 TB NVMe device for caching and four capacity tier 3.49 TB SAS SSDs. The raw capacity of the vSAN datastore of this 4-node vSAN cluster was 112 TB. The vSAN cluster limit is up to 64 cluster nodes. The vSAN cluster node number that an HCI vendor supports is up to the vendor.
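The raw capacity figure follows directly from the number of capacity devices; cache devices do not add capacity. A minimal sketch of that arithmetic, with a hypothetical function name and assuming identical hosts and disk groups:

```python
def raw_capacity_tb(hosts: int, disk_groups_per_host: int,
                    capacity_disks_per_group: int, disk_size_tb: float) -> float:
    """Raw vSAN datastore capacity in TB, counting capacity-tier devices only."""
    return hosts * disk_groups_per_host * capacity_disks_per_group * disk_size_tb


# 4 hosts x 2 disk groups x 4 capacity SSDs x 3.49 TB
print(round(raw_capacity_tb(4, 2, 4, 3.49)))  # about 112 TB
```

Note that this is raw capacity. The usable capacity is lower once the storage policy (for example RAID 1 mirroring) and slack space are taken into account.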

Two 25 Gbit network adapters were used for vSAN and other network traffic in an active-standby failover configuration.

Each ESXi server in the vSAN cluster had the configuration shown in the following table. This configuration supports up to two running SAP HANA VMs per HCI server.

| PROPERTY | SPECIFICATION |
| --- | --- |
| ESXi server model | HW Vendor SAP HANA Supported Cascade Lake Server Model |
| ESXi host CPU | 2 x Intel® Xeon® Gold 6248 CPU, 2 Sockets, 20 Physical Cores/Socket |
| ESXi host RAM | 1.5 TB |
| Network adapters | 2 x 25 Gbit or better as a shared network for vSAN, SAP application and admin network traffic. Optionally, a dedicated NIC for the admin network. See the SAP HANA HCI Network Configuration section of this guide for the recommended network configurations. |
| Storage adapter | 4 x 8 channel PCI-EXPRESS 3.0 SAS 12GBS/SATA 6GBS SCSI controller |
| Storage configuration | Disk Groups: 2. Cache Tier: 2 x 750 GB Intel Optane DC 4800X Series. Capacity Tier: 12 x 3.8 TB 3840 Micron 5200 TCG-E |
| Linux LVM configuration | Used Linux LVM for log and data. Used 4 VMDK disks in LVM each (total 8) to create the needed log and data volumes for SAP HANA. |

4-Socket/4-Disk Group SAP HANA HCI vSAN Cluster—Example 2

The 4-socket test environment had three vSphere ESXi servers configured and used direct-attached SSDs and NVMe inside the hosts to provide a vSAN datastore that meets the SAP HANA HCI test requirements and KPIs.

The third node was part of the vSAN datastore and provided HA capabilities for the total 8 running SAP HANA VMs. Adding the fourth node would allow you to run more SAP HANA VMs on the vSAN datastore. The vSAN cluster limit is up to 64 cluster nodes. The vSAN cluster node number that an HCI vendor supports is determined by the vendor.

Each vSphere ESXi server had four disk groups, each consisting of one 800 GB NVMe device for caching and four capacity tier 890 GB SAS SSDs. The raw capacity of the vSAN datastore of this 3-node vSAN cluster was around 43 TB for data.

Two 25 Gbit network adapters were used for vSAN and other network traffic in active-standby failover manner.

Review the network section for details about how the network cards impact the number of SAP HANA VMs per host and the sizing section for how many SAP HANA VMs can be deployed on a Skylake based server system.

Each ESXi Server in the vSAN Cluster had the following configuration as shown in Table 5. This configuration supports up to four SAP HANA VMs per HCI server.

Table 5. ESXi Server Configuration of the 4-socket Server SAP HANA on 3-node HCI vSAN Cluster

| PROPERTY | SPECIFICATION |
| --- | --- |
| ESXi server model | HW Vendor SAP HANA Supported Cascade Lake Server Model |
| ESXi host CPU | 4 x Intel® Xeon® Platinum 8280M CPU 2.70GHz, 4 Sockets, 28 Physical Cores/Socket |
| ESXi host RAM | 1.5 TB |
| Network adapters | 2 x 25 Gbit or better as a shared network for vSAN, SAP application and admin network traffic. Optionally, a dedicated NIC for the admin network. See the network section of this guide for recommended network configurations. |
| Storage adapter | SAS 12GBS/SATA 6GBS SCSI controller |
| Storage configuration | Disk Groups: 4. Cache Tier: 4 x Express Flash PM1725a 800GB SFF. Capacity Tier: 16 x SAS 894 GB SSD |

8-Socket/4-socket 3 Disk Group SAP HANA–HCI vSAN Cluster—Example 3

The 8-socket and 4-socket test environment had a total of three vSphere ESXi servers configured and used direct-attached SAS SSDs and NVMe cache drives inside the hosts to provide a vSAN datastore that meets the SAP HANA HCI test requirements and KPIs.

The third node was part of the vSAN datastore and provided HA capabilities for the total 8 running SAP HANA VMs. Adding the fourth node would allow you to run more SAP HANA VMs on the vSAN datastore. The vSAN cluster limit is up to 64 cluster nodes. The vSAN cluster node number that an HCI vendor supports is determined by the vendor.

Each vSphere ESXi server had three disk groups, each consisting of one 3 TB NVMe device for caching and four capacity tier 1.6 TB SAS SSDs. The raw capacity of the vSAN datastore of this 3-node vSAN cluster was around 58 TB for data.

Two 25 Gbit network adapters were used for vSAN and other network traffic in active-standby failover manner.

Review the network section for details about how the network cards impact the number of SAP HANA VMs per host and the sizing section for how many SAP HANA VMs can be deployed on a Skylake based server system.

Each ESXi Server in the vSAN Cluster had the following configuration as shown in Table 6. This configuration supports up to four SAP HANA VMs per HCI server.

Table 6. ESXi Server Configuration of the 4-socket Server SAP HANA on 3-node HCI vSAN Cluster

| PROPERTY | SPECIFICATION |
| --- | --- |
| ESXi server model | HW Vendor SAP HANA Supported Cascade Lake Server Model |
| ESXi host CPU | Intel® Xeon® Platinum 8276L CPU @ 2.2GHz, 28 cores; 2 hosts with 8 sockets, 1 host with 4 sockets |
| ESXi host RAM | 3 TB |
| Network adapters | 2 x 25 Gbit or better as a shared network for vSAN, SAP application and admin network traffic. Optionally, a dedicated NIC for the admin network. See the network section of this guide for recommended network configurations. |
| Storage adapter | SAS 12GBS SCSI controller |
| Storage configuration | Disk Groups: 3. Cache Tier: 3 x 3.2 TB P4610 NVMe cache disks. Capacity Tier: 12 x 1.6 TB PM1643a SSD capacity disks |

4-Socket/4-Disk Group SAP HANA Scale-Out HCI vSAN Cluster — Example 4

The 4-socket test environment had four vSphere ESXi host servers in the vSAN cluster. Each host had 4 Intel Optane NVMe 750G for caching layer, 3TB memory and class M based CPU. The SSD based capacity drives provided the vSAN datastore for SAP HANA scale out configuration. Up to 8 hosts (7+1 HA) can be configured for the scale out environment.

The shared partition for NFS can be provided by the vSAN datastore with a VM running on the HA node or by a separate NFS based storage. In this configuration, a separate NFS based storage was used for the shared partition. A BWH benchmark was run, and a separate ESXi host running a NetWeaver VM was used as a driver for user throughput testing.

Each vSphere ESXi server had four disk groups each consisting of 1.6TB devices for caching and four capacity tier 4TB SAS SSDs.

Two 25 Gbit network adapters were used for vSAN and other network traffic in active-standby failover manner.

Review the network section for details on how the network cards impact the number of SAP HANA VMs per host and the sizing section for how many SAP HANA VMs can be deployed on a Skylake based server system.

Each ESXi Server in the vSAN Cluster had the following configuration as shown in Table 7. This configuration supported running up to four SAP HANA VMs per HCI server.

Table 7. SAP HANA Scale-Out Example Configuration

| PROPERTY | SPECIFICATION |
| --- | --- |
| ESXi server model | HW Vendor SAP HANA Supported Cascade Lake Server Model |
| ESXi host CPU | 4 x Intel® Xeon® Gold 6230 20C CPU 2.10GHz, 4 Sockets, 20 Physical Cores/Socket |
| ESXi host RAM | 3 TB |
| Network adapters | 2 x 25 Gbit or better as a shared network for vSAN, SAP application, and admin network traffic. Optionally, a dedicated NIC for the admin network. See the network section of this guide for the recommended network configurations. |
| Storage adapter | Dell HBA330 Adapter SAS 12GBS/SATA 6GBS SCSI controller |
| Storage configuration | Disk Groups: 4. Cache Tier: 4 NVMe p4610 1.6TB. Capacity Tier: 16 x SAS p4510 4TB SFF |
| Linux LVM configuration | Linux LVM used with 8 VMDK for Data partition and 8 VMDK for Log partition |

4-Socket/4-Disk Group on Cooper Lake SAP HANA HCI vSAN Cluster — Example 5

This 4-socket and 4-disk group test environment had a total of three vSphere ESXi servers configured and used an all-NVMe cache and capacity drives inside the hosts to provide a vSAN datastore that meets the SAP HANA HCI test requirements and KPIs.

The third node was part of the vSAN datastore and provided HA capabilities for the total 8 running SAP HANA VMs. Adding the fourth node would allow you to run more SAP HANA VMs on the vSAN datastore. The vSAN cluster limit is up to 64 cluster nodes. The vSAN cluster node number that an HCI vendor supports is determined by the vendor.

Each vSphere ESXi server had four disk groups, each consisting of one 750 GB Optane NVMe device for caching and four capacity tier 1.6 TB NVMe devices. The raw capacity of the vSAN datastore of this 3-node vSAN cluster was around 58 TB for data.

Two 100 Gbit network adapters were used for vSAN and other network traffic in active-standby failover manner.

Review the network section for details about how the network cards impact the number of SAP HANA VMs per host and the sizing section for how many SAP HANA VMs can be deployed on a Skylake based server system.

Table 8. SAP HANA Cooper Lake Example Configuration

| PROPERTY | SPECIFICATION |
| --- | --- |
| ESXi server model | HW Vendor SAP HANA Supported Cooper Lake Server Model |
| ESXi host CPU | 4 x Intel® Xeon® Platinum 8376HL 28 Cores CPU @ 2.6GHz, 4 Sockets, 28 Physical Cores/Socket |
| ESXi host RAM | 1.5 TB |
| Network adapters | 2 x 100 Gbit as a shared network for vSAN, SAP application, and admin network traffic. Optionally, a dedicated NIC for the admin network. See the network section of this guide for the recommended network configurations. |
| Storage configuration | Disk Groups: 4. Cache Tier: 4 x 750G Intel Optane SSD DC P4800x based PCIe adapters. Capacity Tier: 16 x 1.6TB Intel SSD D7-P5600 based NVMe PCIe adapters |
| Linux LVM configuration | Linux LVM with 8 VMDK for Log partition |

Software Resources

Table 9 shows the VMware related software resources used in this solution. From the SAP side, both SAP HANA version 1.0 and 2.0 are supported.

Table 9. Software Resources

| SOFTWARE | VERSION | PURPOSE |
| --- | --- | --- |
| VMware vCenter Server | 6.7 U3, 7.0 U1/U2/U3c | VMware vCenter Server provides a centralized platform for managing VMware vSphere environments. |
| VMware vSphere ESXi | 6.7 U3, 7.0 U1/U2/U3c | vSphere ESXi cluster to host virtual machines and provide vSAN Cluster. |
| VMware vSAN | 6.7 U3, 7.0 U1/U2/U3c | Software-defined storage solution for hyperconverged infrastructure |

SAP HANA HCI on vSAN Configuration Guidelines

Selecting the correct components and a proper configuration is vital to achieving the performance and reliability requirements for SAP HANA. The server and vSAN configurations (RAM and CPU) determine how many SAP HANA VMs and which VMs are supported.

The vSAN configuration specifics such as the tested storage policies, number of disk groups, selected flash devices for the caching and capacity storage tier or the vSAN network configuration define how many SAP HANA VMs a vSAN based datastore can support.

SAP HANA HCI Network Configuration

To build an SAP HANA ready HCI system, dedicated networks for vSAN, SAP application, user traffic, admin, and management are required. Follow the SAP HANA Network Requirements document to decide how many additional network cards have to be added to support a specific SAP HANA workload on the servers.

Table 10 provides an example configuration for an SAP HANA HCI configuration based on dual port network cards.

Table 10. Recommended SAP HCI on vSphere Network Configuration

|

|

|

ADMIN AND VMWARE HA NETWORK |

APPLICATION SERVER NETWORK |

vMotion NETWORK |

vSAN NETWORK |

BACKUP NETWORK |

SCALE-OUT INTERNODE NETWORK |

SYSTEM REPLICATION NETWORK |

|

Host Network Configuration |

Network Label |

Admin |

App-Server |

vMotion |

vSAN |

Backup |

Scale-Out |

HANA-Repl |

|

Recommended MTU Size |

Default (1500) |

Default (1500) |

9000 |

9000 |

9000 |

|||

|

Bandwidth |

1 or 10 GbE |

1 or 10 GbE |

>= 10 GbE* |

>= 10 GbE* |

||||

|

Physical NIC |

0 |

1 |

OPTIONAL |

|||||

|

Physical NIC port |

1 |

2 |

1 |

2 |

||||

|

VLAN ID# |

100 |

101 |

200 |

201 |

202 |

203 |

204 |

|

|

VM Guest Network Cards |

Virtual NIC (inside VM) |

0 |

1 |

- |

- |

2 |

2 |

3 |

*The selected network card bandwidth for vSAN directly influences the number of SAP HANA VMs that can be deployed on a host of the SAP HANA HCI vSAN cluster. For instance, a network exceeding 10 GbE is needed if more than two SAP HANA VMs should be deployed per host. In the stretched cluster case, the witness appliance VM can leverage the App server network. A dedicated witness network is not required.

In addition to using a high bandwidth and low latency network for vSAN, it is also strongly recommended to use a VMware vSphere Distributed Switch™ for all VMware related network traffic (such as vSAN and vMotion).

A Distributed Switch acts as a single virtual switch across all associated hosts in the data cluster. This setup allows virtual machines to maintain a consistent network configuration as they migrate across multiple hosts.

The vSphere Distributed Switch in the SAP HANA HCI solution uses at least two 10 GbE dual port network adapters for teaming and failover purposes. A port group defines properties regarding security, traffic shaping, and NIC teaming. It is recommended to use the default port group setting except the uplink failover order that should be changed as shown in Table 10. It also shows the distributed switch port groups created for different functions and the respective active and standby uplink to balance traffic across the available uplinks.

Table 11 and Table 12 show an example of how to group the network port failover teams. They do not show the optional networks needed for VMware or SAP HANA system replication or Scale-Out internode networks. Additional network adapters are needed for these networks.

| PROPERTY | VLAN* | ACTIVE UPLINK | STANDBY UPLINK |
| --- | --- | --- | --- |
| Admin plus VMware HA Network | 100 | Nic0-Uplink1 | Nic1-Uplink1 |
| SAP Application Server Network | 101 | Nic0-Uplink2 | Nic1-Uplink2 |
| vMotion Network | 200 | Nic1-Uplink1 | Nic0-Uplink1 |
| vSAN Network | 201 | Nic1-Uplink2 | Nic0-Uplink2 |

| PROPERTY | VLAN* | ACTIVE UPLINK | STANDBY UPLINK |
| --- | --- | --- | --- |
| Admin plus VMware HA Network | 100 | Nic0-Uplink1 | Nic0-Uplink2 |
| SAP Application Server Network | 101 | Nic0-Uplink2 | Nic0-Uplink1 |
| vMotion Network | 200 | Nic1-Uplink1 | Nic1-Uplink2 |
| vSAN Network | 201 | Nic1-Uplink2 | Nic1-Uplink1 |

*VLAN id example, final VLAN numbers are determined by the network administrator.

We used different VLANs to separate the VMware operational traffic (for example: vMotion and vSAN) from the SAP and user-specific network traffic.
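The two uplink failover tables differ in where the standby uplink sits: on the other network card, or on the other port of the same card. The sketch below encodes both example tables as plain data and lists the port groups whose standby uplink shares a physical NIC with the active uplink. The dictionary layout and function names are hypothetical; the uplink labels are taken from the tables, and the only assumption is that the part before the hyphen identifies the physical NIC.

```python
# port group -> (active uplink, standby uplink), as in the first example table
CROSS_NIC_TEAMING = {
    "Admin plus VMware HA": ("Nic0-Uplink1", "Nic1-Uplink1"),
    "SAP Application Server": ("Nic0-Uplink2", "Nic1-Uplink2"),
    "vMotion": ("Nic1-Uplink1", "Nic0-Uplink1"),
    "vSAN": ("Nic1-Uplink2", "Nic0-Uplink2"),
}

# same port groups, as in the second example table
SAME_NIC_TEAMING = {
    "Admin plus VMware HA": ("Nic0-Uplink1", "Nic0-Uplink2"),
    "SAP Application Server": ("Nic0-Uplink2", "Nic0-Uplink1"),
    "vMotion": ("Nic1-Uplink1", "Nic1-Uplink2"),
    "vSAN": ("Nic1-Uplink2", "Nic1-Uplink1"),
}


def nic_of(uplink: str) -> str:
    """Physical NIC part of an uplink label, e.g. 'Nic0' from 'Nic0-Uplink1'."""
    return uplink.split("-")[0]


def same_nic_failover(teaming: dict[str, tuple[str, str]]) -> list[str]:
    """Port groups whose active and standby uplinks share one physical NIC."""
    return [pg for pg, (active, standby) in teaming.items()
            if nic_of(active) == nic_of(standby)]


print(same_nic_failover(CROSS_NIC_TEAMING))  # no port group shares a NIC
print(same_nic_failover(SAME_NIC_TEAMING))   # all four port groups share a NIC
```

A check like this makes the trade-off visible: the first layout keeps each port group reachable if a whole network card fails, while the second only protects against the loss of a single port or link.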

VMware ESXi Server: Storage Controller Mode

Server storage or RAID controllers normally support both pass-through and RAID mode.

In vSAN environments, only use controllers that support pass-through mode, because RAID mode must be deactivated and only pass-through mode used.

vSAN Disk Group Configuration

Depending on the hardware configuration, such as the number of CPUs and flash devices, a minimum of two disk groups should be configured per host. More disk groups improve the storage performance. The current disk group maximum is five disk groups per vSAN cluster node.

The cache disk type should be a low-latency high-bandwidth SAS SSD or an NVMe device. The capacity should be 600 GB or larger. Review VMware vSAN design considerations for flash caching devices and the All-Flash Cache Ratio Update document for details.

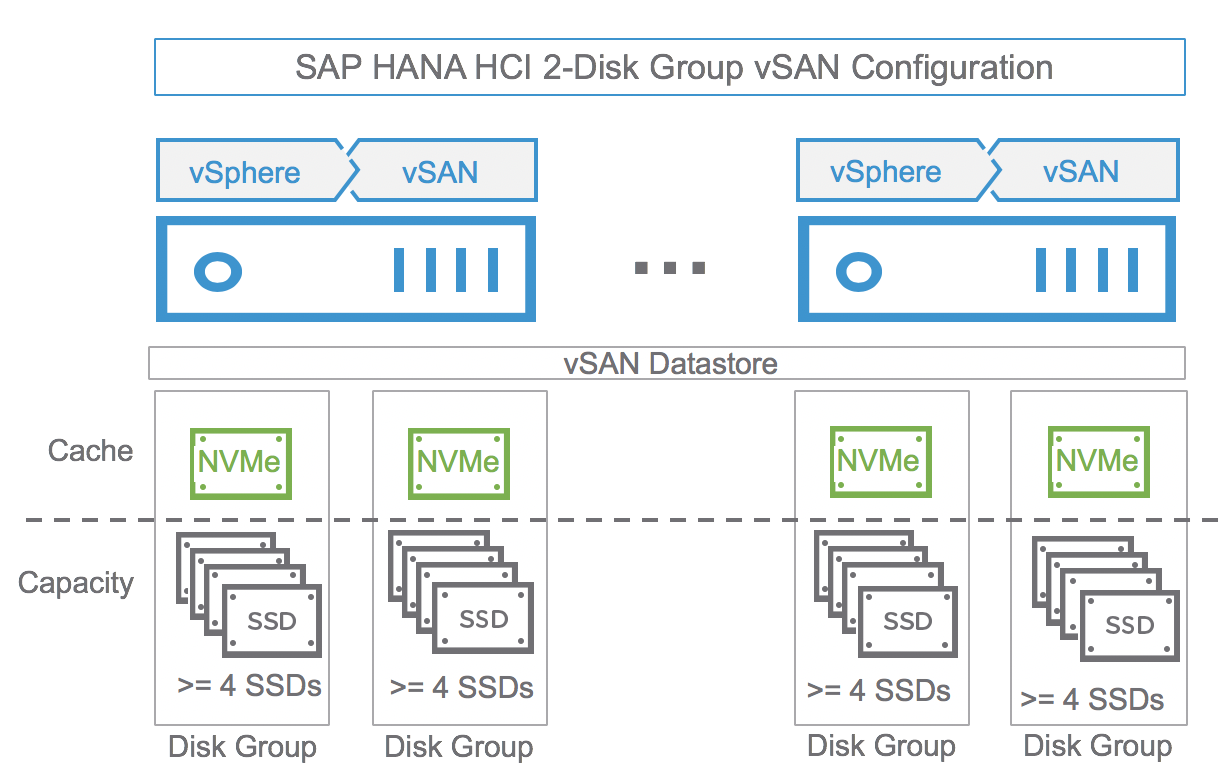

In our 2- and 4-socket server reference configurations, we used two and four disk group configurations to optimally use the installed server hardware components. The number of disk groups should be selected depending on how many SAP HANA VMs will be deployed on a single vSAN cluster node. In some test configurations, we noticed that a 2-disk group configuration with NVMe for caching and four capacity disks was enough to meet the SAP HANA KPIs for two SAP HANA VMs per server. If four SAP HANA VMs should be deployed on a server, we recommend using a minimum of 3 and up to 4 disk groups.

In addition, the PCIe lanes of the PCIe slots of the NVMe devices should be distributed evenly across all the CPUs. If multiple NVMe devices are connected to a single CPU link, ensure that separate ports are used. As noted, the HW vendors are free to decide which configuration they want to certify.

In our Cascade Lake 2-socket/2-disk group and 4-host server vSAN cluster configuration, we used a write optimized 2x 750 GB Intel Optane DC 4800X Series for caching and 6 X 3.8TB 3840 Micron 5200 TCG-E SAS SSDs for the capacity tier per disk group. In total, we used 12 SSDs per server, all connected on one vSAN certified SCSI adapter. With this vSAN configuration, we were able to validate two SAP HANA VMs per server successfully.

In the next 4-disk group configuration, we used 4-socket servers in a three-node vSAN cluster configuration. We used an 800 GB NVMe device for caching and four SAS 900 GB SSDs for the capacity tier per disk group. With this vSAN configuration, we were able to validate 4 SAP HANA VMs per server. Additional tests with other configurations also found that fewer capacity SSDs are needed to pass the tests when NVMe devices are used in the caching tier. The number of capacity SSDs depends mainly on the size of the SAP HANA VMs that will run on the HCI solution.

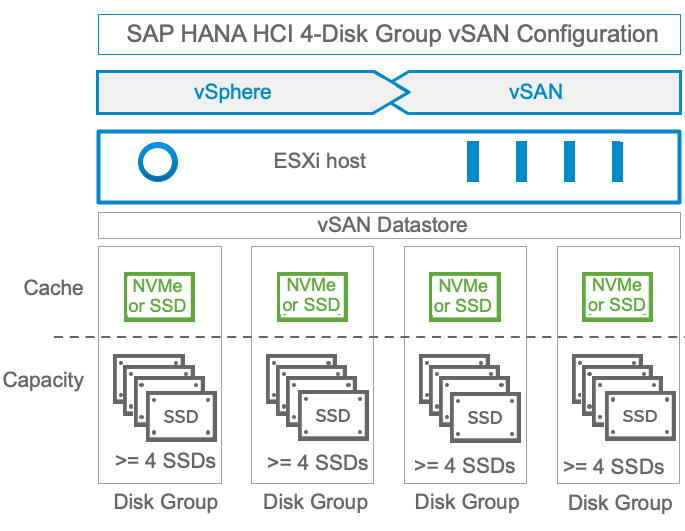

Figure 9 shows a single server of our 4-disk group test configuration in a graphical way.

Figure 9. 4-disk Group Configuration Example of Our SAP HANA HCI Test Configuration

Replacing the SAS SSDs for caching with higher-bandwidth, low-latency NVMe devices helped us to reduce the number of vSAN disk groups from four to two, while still maintaining the SAP HANA storage KPIs for two SAP HANA VMs per server.

Note: If 4 or more SAP HANA VMs are supported per vSAN node, it is recommended to use at least a 4-disk group configuration.

Figure 10. 2-disk Group Configuration of Our SAP HANA HCI Test Configuration

Note: The number of capacity drives per disk group and the number of disk groups per host impact the maximum number of SAP HANA VMs per server.

vSAN Storage Configuration

All vSAN disk groups are configured as a single cluster-wide shared datastore. The actual storage configuration for a VMDK file will be defined by a specific vSAN storage policy that fits its usage (OS, log, or data). For SAP HANA, we use the same storage policy for log and data. For the VMDK that contains the OS or SAP HANA binaries, the storage policy can be modified to use erasure coding to optimize capacity instead of performance.

vSAN Storage Policy

The SAP HANA HCI solution is a very specific configuration and is optimized for the high-performance demands of an SAP HANA database. Just like an SAP HANA appliance, the storage subsystem is optimized to meet the SAP HANA KPIs for reliability, consistency, and performance. These KPIs are validated, tested, and finally certified by SAP.

Depending on the actual vSAN hardware configuration, a vSAN storage policy is selected and applied to the SAP HANA OS, log and data disks. The vSAN policy is one of the parameters that determine the number of HANA VMs that can be supported on a server.

Table 13 and Table 14 show the vSAN storage policy we used for our testing. This storage policy should be applied on both the data disks and the log disk of each SAP HANA Database VM.

For non-performance critical volumes, the vSAN default storage policy or a custom RAID 5 storage policy can be used to lower the storage capacity need of these volumes.

For example, a VM protected by a primary level of failures to tolerate value of 1 with RAID 1 requires twice the virtual disk size, but with RAID 5, it requires 1.33 times the virtual disk size. For RAID 5, you need a minimum of 4 vSAN nodes per vSAN cluster.
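The capacity effect of the failure tolerance method can be sketched as a small lookup. The multipliers (2x for RAID 1, 1.33x for RAID 5) and the minimum node counts come from this guide; the function and dictionary names are hypothetical.

```python
# Raw space consumed per unit of virtual disk size at FTT=1
OVERHEAD = {"RAID1": 2.0, "RAID5": 1.33}
# Minimum vSAN nodes per cluster for each method at FTT=1
MIN_NODES = {"RAID1": 3, "RAID5": 4}


def raw_space_gb(vmdk_gb: float, method: str, nodes: int) -> float:
    """Raw vSAN capacity consumed by a VMDK of `vmdk_gb` at FTT=1."""
    if method not in OVERHEAD:
        raise ValueError(f"unknown failure tolerance method: {method}")
    if nodes < MIN_NODES[method]:
        raise ValueError(f"{method} needs at least {MIN_NODES[method]} nodes")
    return vmdk_gb * OVERHEAD[method]


# A 100 GB OS disk on a 4-node cluster
print(raw_space_gb(100, "RAID1", 4))  # about 200 GB
print(raw_space_gb(100, "RAID5", 4))  # about 133 GB
```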

The number of stripes configured should equal the number of capacity devices contained in a host. Note that the maximum number of stripes that can be configured in a storage policy is 12.
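The stripe rule above, combined with the tested data and log policy settings listed in this section, can be sketched as follows. The dictionary keys are descriptive labels chosen for this example, not vSphere or vSAN API identifiers, and the function names are hypothetical.

```python
MAX_STRIPES = 12  # maximum number of disk stripes per object in a policy


def stripes_per_object(capacity_devices_per_host: int) -> int:
    """Stripe count: one per capacity device in a host, capped at 12."""
    if capacity_devices_per_host < 1:
        raise ValueError("a host needs at least one capacity device")
    return min(capacity_devices_per_host, MAX_STRIPES)


def data_log_policy(capacity_devices_per_host: int) -> dict:
    """Tested policy settings for SAP HANA data and log disks."""
    return {
        "failure_tolerance_method": "RAID 1 (Mirroring)",
        "failures_to_tolerate": 1,
        "stripes": stripes_per_object(capacity_devices_per_host),
        "flash_read_cache_reservation_pct": 0,
        "object_space_reservation_pct": 100,
        "disable_object_checksum": False,
    }


print(data_log_policy(16)["stripes"])  # capped at 12
print(data_log_policy(8)["stripes"])   # 8
```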

Note: Only the listed vSAN storage policies were tested by VMware and used during the vSAN SAP HANA validation.

Table 13. vSAN Storage Policy Settings for Data and Log for a Configuration with 12 or More Capacity Disks

| Storage Capability | Setting |
| --- | --- |
| Failure Tolerance Method (FTM) | RAID 1 (Mirroring) |
| Number of failures to tolerate (FTT) | 1 |
| Minimum hosts required | 3 |
| Number of disk stripes per object | 12 |
| Flash read cache reservation | 0% |
| Object space reservation | 100% |
| Disable object checksum | No |

Table 14 shows the vSAN policy for a 2-disk group vSAN cluster configuration.

Table 14. vSAN Storage Policy Settings for Data and Log for a Configuration with 8 Capacity Disks

| Storage Capability | Setting |
| --- | --- |
| Failure Tolerance Method (FTM) | RAID 1 (Mirroring) |
| Number of FTT | 1 |
| Minimum hosts required | 3 |
| Number of disk stripes per object | 8 |
| Flash read cache reservation | 0% |
| Object space reservation | 100% |
| Disable object checksum | No |

Table 15 shows the vSAN policy for less performance critical disks like OS or SAP HANA shared.

Note: You need a minimum of 4 vSAN nodes to use RAID 5, and a minimum of 6 vSAN nodes for RAID 6.

Table 15. vSAN Storage Setting for OS or Other Less Performance Critical Volumes

| Storage Capability | Setting |
| --- | --- |
| Failure tolerance method (FTM) | RAID 5/6 (Erasure Coding) |
| Number of FTT | 1 |
| Minimum hosts required | 4 |
| Number of disk stripes per object | 1 |
| Flash read cache reservation | 0% |
| Object space reservation | 0% |
| Disable object checksum | No |

SAP HANA HCI Storage Planning and Configuration

When planning a virtualized SAP HANA environment running on vSAN, it is important to understand the storage requirements of such an environment and the applications running on it. It is necessary to define the storage capacity and IOPS needs of the planned workload.

Sizing a storage system for SAP HANA is very different from storage sizing for SAP classic applications. Unlike with SAP classic applications, where the storage subsystem is sized regarding the IOPS requirements of a specific SAP workload, the SAP HANA storage is defined by the specified SAP HANA TDI storage KPIs. These data throughput and latency KPIs are fixed values per SAP HANA instance.

Depending on how many SAP HANA VMs a vendor is supporting on an SAP HANA HCI cluster, the vSAN datastore must be able to maintain the performance KPIs and must be able to provide the storage capacity of all SAP HANA VMs installed on the vSAN cluster.

VMware has successfully tested a 4-socket 3-node vSAN based SAP HANA HCI configuration with 8 SAP HANA VMs (4 SAP HANA VMs per active node) and a 2-socket 4-node cluster with 2 SAP HANA VMs per server. These configurations represent one SAP HANA VM per socket, leaving the third or fourth node in the cluster as an HA node. This leaves enough capacity available to support a full node failure at any time.

Use the SAP HANA Hardware and Cloud Measurement Tools (HCMT) to check if the vSAN storage performance meets the SAP HANA requirements. The tool and the documentation can be downloaded from SAP with a valid SAP user account. See SAP note 2493172.

SAP HANA Storage Capacity Calculation and Configuration

All SAP HANA instances have a database log, data, root, local SAP, and shared SAP volume. The storage capacity sizing calculation of these volumes is based on the overall amount of memory needed by SAP HANA’s in-memory database.

SAP has defined very strict performance KPIs that have to be met when configuring a storage subsystem. These storage performance KPIs are the leading sizing factor. How many capacity devices are used to provide the required I/O performance and latency depends on the HCI vendor hardware components.

The capacity of the overall vSAN datastore must be large enough to store the SAP HANA data, log, and binaries while providing at least the FTT=1 storage policy. SAP has published several SAP HANA specific architecture and sizing guidelines, like the SAP HANA Storage Requirements. The essence of this guide is summarized in Figure 11 and Table 16, which can be used as a good start for planning the storage capacity needs of an SAP HANA system. It shows the typical disk layout of an SAP HANA system and the volumes needed. The volumes shown in the document should correspond with VMDK files to ensure flexibility for changing storage needs (most likely increased storage capacity).

Figure 11. Storage Layout of an SAP HANA System. Figure © SAP SE, Modified by VMware

Table 16 determines the size of the different SAP HANA volumes. It is based on the SAP HANA Storage Requirements document. Some of the volumes like the OS and usr/sap volumes can be connected and served by one PVSCSI controller, others like the LOG and data volume are served by dedicated PVSCSI controllers to ensure high IO bandwidth and low latency.

Table 16. Storage Layout of an SAP HANA System

| Volume | VMDK | SCSI Controller | VMDK Name | SCSI ID | Sizes as of SAP HANA Storage Requirements |
| --- | --- | --- | --- | --- | --- |
| /(root) | | PVSCSI Contr. 1 | vmdk01-OS-SIDx | SCSI 0:0 | Minimum 40 GiB for OS |
| usr/sap | | PVSCSI Contr. 1 | vmdk01-SAP-SIDx | SCSI 0:1 | Minimum 50 GiB for SAP binaries |
| hana/shared | | PVSCSI Contr. 1 | vmdk02-SHA-SIDx | SCSI 0:2 | Minimum 1x RAM max. 1 TB |
| hana/data | | PVSCSI Contr. 2 | vmdk03-DAT1-SIDx (vmdk03-DAT2-SIDx vmdk03-DAT3-SIDx) | SCSI 1:0 (SCSI 1:1 SCSI 1:2) | Minimum 1 x RAM. Note: If you use multiple VMDKs, you can use Linux LVM to build one large data disk. |
| hana/log | | PVSCSI Contr. 3 | vmdk04-LOG1-SIDx (vmdk04-LOG2-SIDx) | SCSI 2:0 (SCSI 2:1) | [systems <= 512GB] log volume (min) = 0.5 x RAM; [systems >= 512GB] log volume (min) = 512GB |
| hana/backup (optional) | | PVSCSI Contr. 4 | vmdk05-BAK-SIDx | SCSI 3:1 | Size backups >= Size of HANA data + size of redo log. The default path for backup is (/hana/shared). Change the default value when a dedicated backup volume is used. |

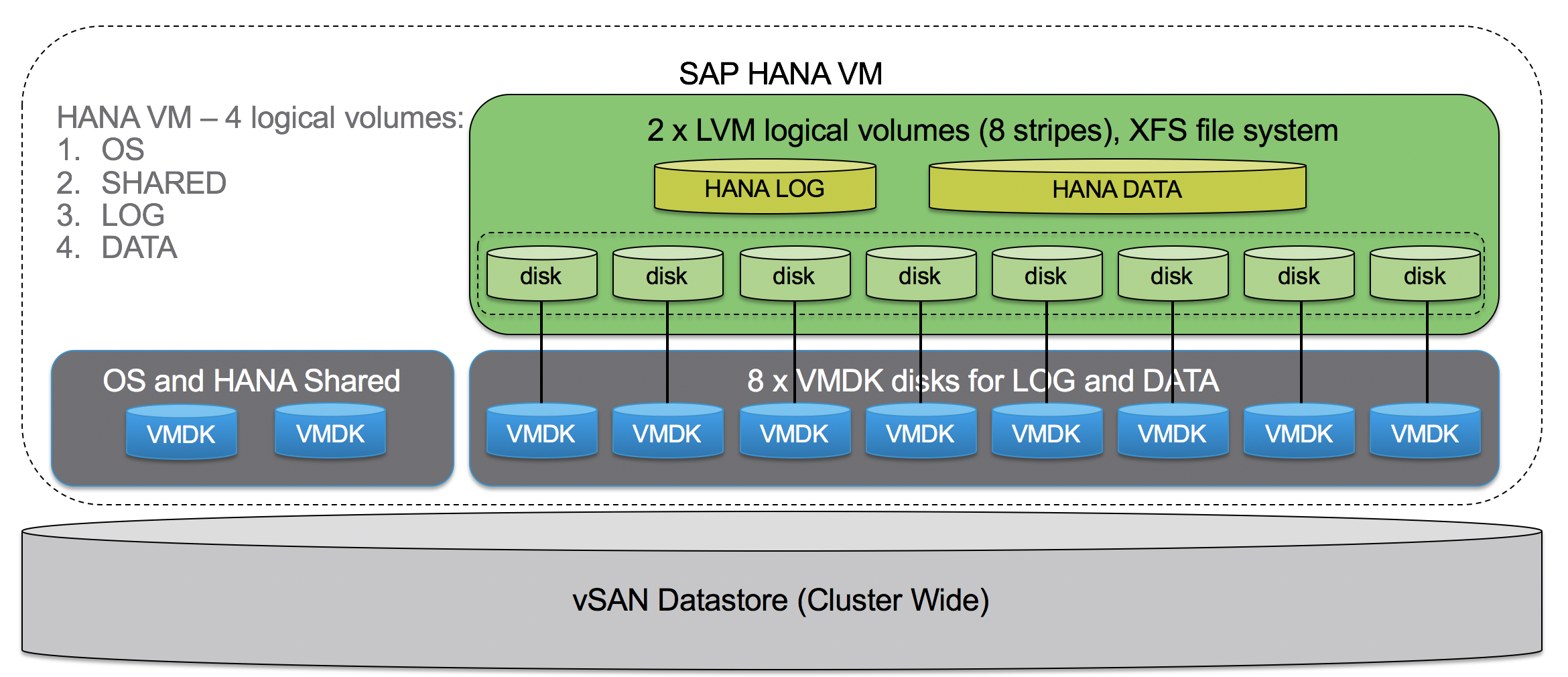

Figure 12 shows the default SAP HANA storage layout as documented in SAP’s HANA storage white paper, in a graphical view with the dedicated VMDKs per OS, HANA shared, log, and data volumes.

Figure 12. Dedicated VMDK Files per SAP HANA Volume

A “pure” VMDK solution is the easiest way to get started and is sufficient to achieve the SAP HANA HCI performance and scalability KPIs.

Usage of Linux Logical Volume Manager (LVM)

Although we did not use Linux LVM in our 4-socket, 3-node cluster tests, LVM is supported and can be used if desired. Linux LVM can help to leverage more vSAN resources and to balance the distribution of VMDK components on vSAN. On the other hand, LVM adds configuration complexity. The HCI solution vendor decides whether to use LVM.

Figure 13 shows an example of how LVM can be used for the SAP HANA log and data volumes. We used this configuration with our 4-disk group SAS cache and 2-disk group configurations: Linux LVM with 8 VMDKs for the data partition and 4 to 8 VMDKs (minimum 4) for the log partition.

Note that the number of VMDKs used for LVM should not exceed the number of capacity disks used for vSAN in a host. For example, if a server has 6 capacity disks, up to 6 VMDKs for LVM would be ideal.

Figure 13. Usage of LVM Managed Volumes
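As a rough sketch of the LVM approach, the helper below builds the standard LVM commands (`pvcreate`, `vgcreate`, `lvcreate`) for a set of VMDK-backed devices and checks the rule that the VMDK count should not exceed the number of vSAN capacity disks per host. The function name, the device paths, and the volume group and logical volume names are hypothetical. Striping across all VMDKs is an assumption of this sketch and is not prescribed by this document. The commands are only printed, not executed.

```python
def lvm_commands(devices, vg_name, lv_name, capacity_disks):
    """Build LVM commands for one striped volume across the given devices.

    Hypothetical helper: treats the 'VMDKs <= capacity disks' guidance
    as a hard check and assumes one stripe per VMDK.
    """
    if not devices:
        raise ValueError("at least one device is required")
    if len(devices) > capacity_disks:
        raise ValueError(
            f"{len(devices)} VMDKs exceed {capacity_disks} capacity disks per host"
        )
    device_list = " ".join(devices)
    return [
        f"pvcreate {device_list}",
        f"vgcreate {vg_name} {device_list}",
        # One stripe per VMDK; use all free extents of the volume group.
        f"lvcreate --stripes {len(devices)} --extents 100%FREE "
        f"--name {lv_name} {vg_name}",
    ]


if __name__ == "__main__":
    # Example device names are placeholders for the data VMDKs.
    data_disks = ["/dev/sdc", "/dev/sdd", "/dev/sde", "/dev/sdf"]
    for cmd in lvm_commands(data_disks, "vg_hana_data", "lv_data", capacity_disks=6):
        print(cmd)
```

Printing the commands rather than running them keeps the sketch safe to try; the resulting logical volume would still need a file system and a mount point such as /hana/data.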

vSAN Storage Capacity Calculation

To determine the overall storage capacity per SAP HANA VM, add up the sizes of all SAP HANA volumes as outlined in Figure 11 and Table 16.

To determine the overall vSAN datastore capacity required, excluding the cache tier, multiply the per-VM SAP HANA volume requirement by the number of VMs that can run on the SAP HANA HCI cluster.

Simplified calculation:

vSAN datastore capacity = number of HANA VMs x (OS + USR/SAP + SHARED + DATA + LOG) x 2 (FTT=1, RAID 1) x 1.3 (30% slack space)

Example:

A cluster of 3 servers with 18 TB of RAM in total runs 8 SAP HANA VMs. One server is reserved for HA, so 12 TB of SAP HANA data needs to be stored.

8 x (10 GB + 60 GB + 1 TB + 12 TB / 8 VMs + 512 GB) x 2 x 1.3 = 8 x 3,142 GB x 2 x 1.3 = ~65.4 TB
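The simplified calculation can be reproduced with a few lines of Python. This is a minimal sketch using the example figures above; the function name `vsan_capacity_gb` and its parameter names are hypothetical, and 1 TB is taken as 1,024 GB for the inputs, as in the example.

```python
def vsan_capacity_gb(vm_count, os_gb, usr_sap_gb, shared_gb, data_gb, log_gb,
                     ftt_multiplier=2, slack=0.30):
    """Required vSAN datastore capacity in GB, excluding the cache tier.

    ftt_multiplier=2 models FTT=1 with RAID 1 mirroring;
    slack=0.30 adds 30% slack space on top.
    """
    per_vm_gb = os_gb + usr_sap_gb + shared_gb + data_gb + log_gb
    return vm_count * per_vm_gb * ftt_multiplier * (1 + slack)


if __name__ == "__main__":
    total_gb = vsan_capacity_gb(
        vm_count=8,
        os_gb=10,
        usr_sap_gb=60,
        shared_gb=1024,            # 1 TB
        data_gb=12 * 1024 / 8,     # 12 TB spread over 8 VMs
        log_gb=512,
    )
    # Roughly 65,354 GB, which the example rounds to ~65.4 TB.
    print(f"{total_gb:,.0f} GB")
```

Changing `ftt_multiplier` or `slack` shows how a different storage policy or slack-space target would affect the required capacity.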

We have over 138 TB of raw vSAN datastore capacity available in our example 3-node, 4-socket SAP HANA HCI configuration, which is far more than the amount required for up to 8 SAP HANA VMs with up to 12 TB of total SAP HANA system RAM. The additional space can be used to store optional backups, which lowers the TCO because no additional nearline backup storage is needed. Nevertheless, backup files must still be moved to a permanent external backup location.

SAP HANA HCI VMware vSAN Detection Script

SAP requested a method to detect whether an SAP HANA VM runs on an SAP HANA HCI solution. VMware and SAP worked together to develop a script that allows SAP to detect at runtime whether an SAP HANA VM is running on a vSAN datastore.

The VMware vSAN detection script is available on request from VMware. Contact the author or contributors of this document for the script. The script needs to be installed by the SAP HANA HCI solution vendor on their VMware based SAP HANA HCI solution systems.

The script is a Python script that is executed every 5 minutes on the vSAN hosts that build the SAP HANA HCI solution. It adds the following information to the VM configuration file:

- "guestinfo.vsan.enabled": True/False

- "guestinfo.SDS.solution": esxi_version, which is also the vSAN release version

- "guestinfo.vm_on_vsan": True/False

This data is parsed by the SAP CIM provider and by SAP support tools like SAPsysinfo to detect whether an SAP HANA HCI on VMware vSAN solution is used for this specific VM. Details about the VMware SAP key monitoring metrics can be found in OSS Note 2266266, Key Monitoring Metrics for SAP on VMware vSphere (version 5.5 U3 and higher).
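For a quick manual check from inside the guest, the three keys can be read with the VMware Tools command `vmtoolsd --cmd "info-get <key>"`. The sketch below wraps that call in Python. It is an illustration only and not the way the SAP CIM provider or sapsysinfo reads the values. The function names are hypothetical, and the sketch assumes that VMware Tools is installed in the guest and that the keys are exposed to it.

```python
import subprocess

# Keys written by the detection script, as listed above.
GUESTINFO_KEYS = (
    "guestinfo.vsan.enabled",
    "guestinfo.SDS.solution",
    "guestinfo.vm_on_vsan",
)


def read_guestinfo(key, run=subprocess.run):
    """Return the value of one guestinfo key, or None if it cannot be read."""
    try:
        result = run(["vmtoolsd", "--cmd", f"info-get {key}"],
                     capture_output=True, text=True, check=False)
    except FileNotFoundError:
        return None  # vmtoolsd is not installed in this guest
    if result.returncode != 0:
        return None  # key not set or not readable
    return result.stdout.strip()


def vsan_guestinfo(run=subprocess.run):
    """Collect all three vSAN guestinfo values into a dictionary."""
    return {key: read_guestinfo(key, run) for key in GUESTINFO_KEYS}


def is_on_vsan(info):
    """True only if guestinfo.vm_on_vsan is set to 'True' (case-insensitive)."""
    return str(info.get("guestinfo.vm_on_vsan")).strip().lower() == "true"


if __name__ == "__main__":
    info = vsan_guestinfo()
    print(info)
    print("VM on vSAN:", is_on_vsan(info))
```

The `run` parameter exists only so the helper can be exercised without a VMware guest, for example by passing a stub in a unit test.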

Prerequisites:

- Enable SSH for the duration of the installation. Deactivate it after the script is installed.

- To simplify the installation process, it is recommended to use the same superuser password on all ESXi servers.

Installation and execution of the health check installation script

- Enable SSH on the vSphere ESXi host systems.

- Place the installation script and setInfo.vib in the same directory on any Linux or macOS system.

- Run ./install_vib_script_remotely.sh and type in the following information as prompted:

root@Centos [ ~/hana_health_check ]# ./install_vib_script_remotely.sh -h

Please specify the one of the following parameters:

-i or --install: install the vib on specified hosts

-r or --remove: remove the vib on specified hosts

-u or --update: update the vib on specified hosts

-h or --help: display this message

- To install the vib on the specified hosts:

[w3-dbc302:hana_vib]$./install_vib_script_remotely.sh -i

Please specify the hosts(Separate by whitespace):10.xx.xx.xx

login as: root

Password:

Validating connectivity to 10.xx.xx.xx

10.xx.xx.xx connectivity validated

Uploading setInfo_v1.3.vib to 10.xx.xx.xx

Successfully uploaded setInfo_v1.3.vib to 10.xx.xx.xx:/tmp

Installing VIB in 10.xx.xx.xx

Host acceptance level changed to 'CommunitySupported'.

Installation Result:

Message: Operation finished successfully.

Reboot Required: false

VIBs Installed: VMware_bootbank_setInfo_1.3.0-6.7.0

VIBs Removed:

VIBs Skipped:

Successfully installed VIB in 10.xx.xx.xx

Functions and scenarios of the script

The SAP HANA HCI health check Python script is executed every 5 minutes. To shorten the interval to 1 minute, modify the value of RUN_PYC_INTERVAL at the beginning of the bash script.

For all the VMs on the host, the script gets the following info from each individual VM:

- vsan.enabled = True/False

- sds.solution = ESXI/vSAN_Version

- vm_on_vsan = True/False

Testing of the script

- Install an SAP HANA supported Linux version based VM on one of your host servers.

- Ensure that the latest VMware guest tools (Open VM Tools) are installed on your test VM.

- Enable host and VM monitor data exchange as documented in SAP note 1606643.

- Download the latest sapsysinfo script (revision 114 or later); see SAP note 618104 for details. Copy it to your test VM and execute it there.

- Example output (search for vSAN in the document):

-------------------------------------------------------------------------------

VMware vSAN info:

guestinfo.vsan.enabled =

guestinfo.SDS.solution =

guestinfo.vm_on_vsan =

-------------------------------------------------------------------------------

Linux system information:

-------------------------------------------------------------------------------

- If you do not get the above outputs, check the prerequisites and ensure that the used hypervisor is a VMware hypervisor and the used SDS solution is vSAN.

If a VM is moved to vSAN (or has a newly created VMDK on vSAN), or is moved from vSAN to another datastore, the guestinfo is updated within 5 minutes.

If the VM is migrated with Storage vMotion to a host that does not have the script running, you need to manually delete the three variables from the VM configuration file to ensure consistency. You can check inside vCenter whether these variables are set for a VM. See Figure 14 for details:

Figure 14. SAP HCI vSAN Specific Configuration Parameters

Updating the script

To update the VIB to a higher version, replace the VIB file in your Linux/macOS directory and run the following command:

[w3-dbc302:hana_vib]$./install_vib_script_remotely.sh -u

Please specify the hosts(Separate by whitespace):10.xx.xx.xx

login as: root

Password:

Validating connectivity to 10.xx.xx.xx

10.xx.xx.xx connectivity validated

Uploading setInfo_v1.3.5.vib to 10.xx.xx.xx

Successfully uploaded setInfo_v1.3.5.vib to 10.xx.xx.xx:/tmp

Updating VIB in 10.xx.xx.xx

Installation Result:

Message: Operation finished successfully.

Reboot Required: false

VIBs Installed: VMware_bootbank_setInfo_1.3.5-6.7.0

VIBs Removed: VMware_bootbank_setInfo_1.3.0-6.7.0

VIBs Skipped:

Successfully update VIB in 10.xx.xx.xx

Uninstalling the script

To uninstall the script from ESXi, run:

[w3-dbc302:hana_vib]$./install_vib_script_remotely.sh -r

Please specify the hosts(Separate by whitespace):10.xx.xx.xx

login as: root

Password:

Validating connectivity to 10.xx.xx.xx

10.xx.xx.xx connectivity validated

Removing VIB in 10.xx.xx.xx

Removal Result:

Message: Operation finished successfully.

Reboot Required: false

VIBs Installed:

VIBs Removed: VMware_bootbank_setInfo_1.3.5-6.7.0

VIBs Skipped:

Successfully cleared up VMs info, removed vib from 10.xx.xx.xx

SAP HANA HCI on vSAN—Support and Process

Support Status

vSAN is supported as part of an SAP HANA HCI vendor-specific solution, as described in this document. See SAP Note 2718982.

The SAP HANA HCI solution vendor is the primary support contact for the customer with escalation possibilities to VMware and SAP. The support of an SAP HANA HCI solution is comparable and very similar to the SAP HANA Appliance support model.

The SAP HANA HCI solution vendor must define the overall usable storage capacity and performance available for SAP HANA VMs. The vendor also defines how many SAP HANA VMs per host are supported and how many vSAN cluster nodes are supported by the vendor.

For supported SAP HANA HCI solutions based on VMware products, check out SAP HANA Certified HCI Solution.

Support Process

If you want to escalate an issue with your SAP HANA HCI solution, work directly with your HCI vendor and follow the defined and agreed support process, which normally starts by opening a support ticket within the SAP support tools.

vSAN Support Insight

vSAN Support Insight is a next-generation platform for analyzing health, configuration, and performance telemetry. Its purpose is to help vSAN users maintain a reliable and consistent compute, storage, and network environment. It can be used in addition to the HCI vendor-specific support contract and requires an active support contract and vSphere 6.5 U1d or later.

How does it work?

See How your Experiences Improve Products and Services for details.

Conclusion

With vSAN, VMware equips its hardware and OEM partners to build SAP HANA ready HCI solutions. Not only compute, memory, and network, but also the internal storage components of a standard x86 server can be leveraged, which dramatically changes the SAP HANA infrastructure economics and the deployment methodology. With SAP HANA HCI solutions powered by VMware, an SAP hardware partner can build true turnkey SAP HANA infrastructure solutions that allow customers to deploy SAP HANA in days instead of weeks or months.

Appendix

Optimizing the SAP HANA on vSphere Configuration Parameter List

VMware vSphere can run a single large SAP HANA virtual machine or multiple smaller ones on a single physical host. This section describes how to optimally configure a VMware virtualized SAP HANA environment. These parameters are valid for SAP HANA VMs running on vSphere and on vSAN based SAP HANA HCI configurations.

The listed parameter settings are the recommended BIOS settings for the physical server, the ESXi host, the VM, and the Linux OS to achieve optimal operational readiness and stable performance for SAP HANA on vSphere.