What's New in vSphere 8 Update 1?

In keeping with the release traditions, we are proud to announce vSphere 8 Update 1, available soon as an update to vSphere 8. Once it is released, you will be able to update vCenter through the VMware Appliance Management Interface (VAMI) and update ESXi with vSphere Lifecycle Manager, just as you would apply any other patch. See our vSphere 8 Update 1 announcement blog article.

For a refresher on what is new with the initial release of vSphere 8, check the following article.

Enhance Operational Efficiency

So let’s get into what’s new since vSphere 8! First stop: efficiency. IT infrastructure is fun and all, but we at VMware want to get you back to solving interesting business problems.

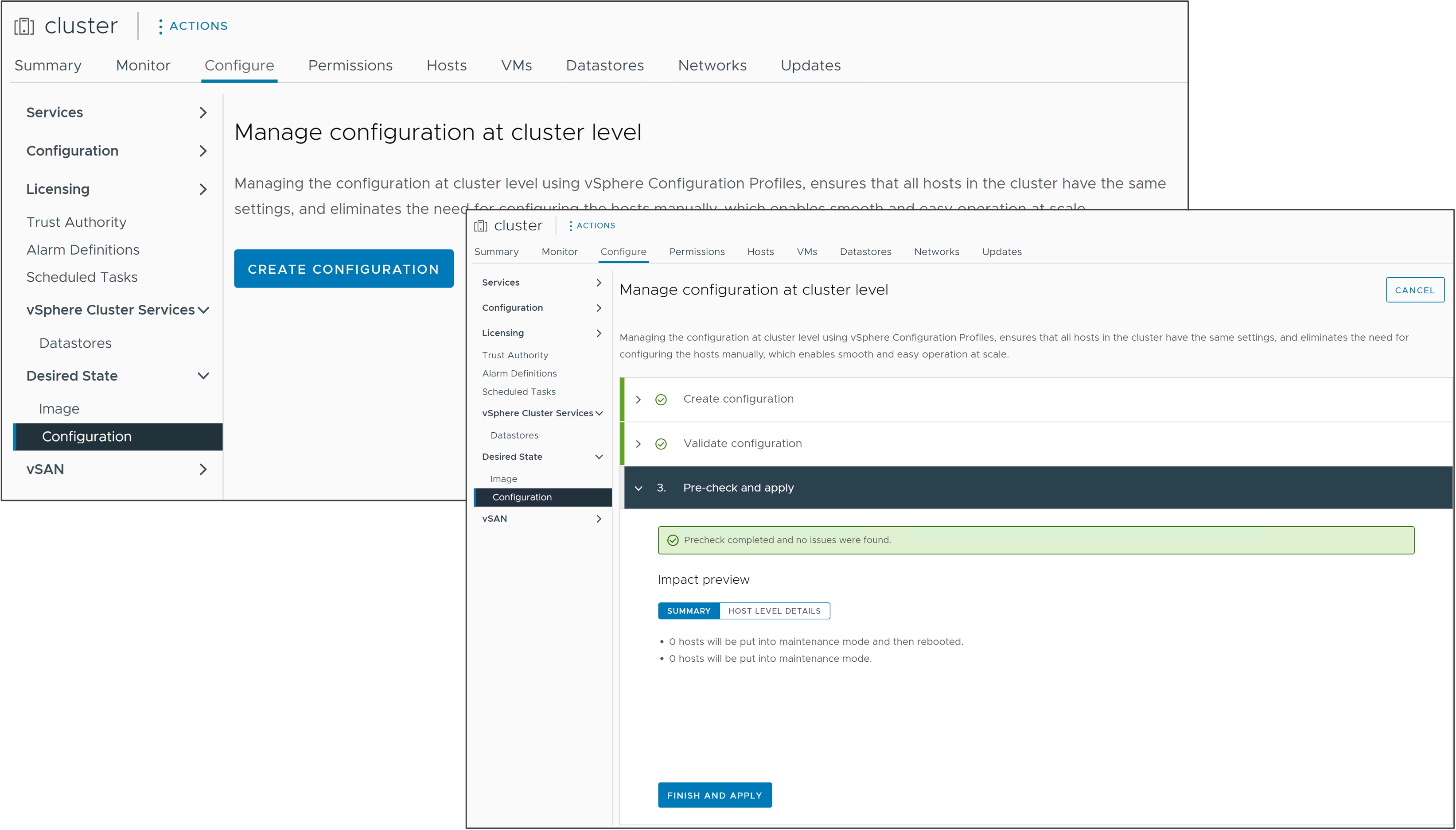

vSphere Configuration Profiles

In vSphere 8 we introduced a technology preview of the next generation in vSphere cluster configuration management called vSphere Configuration Profiles. With the release of vSphere 8 Update 1, vSphere Configuration Profiles is now a fully supported feature and no longer in a technology preview phase.

vSphere Configuration Profiles, introduced in vSphere 8.0, lets administrators manage host configuration at the cluster level. With it, administrators can:

- Set the desired configuration at the cluster level in the form of a JSON document.

- Check that hosts are compliant with the desired configuration.

- Remediate non-compliant hosts to bring them into compliance.
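The check-and-remediate workflow above can be illustrated with a small Python sketch. It is a hypothetical model, not the product's implementation: the JSON keys (`ntp`, `ssh`, `syslog`) are invented for the example rather than taken from the actual vSphere Configuration Profiles schema, and in practice vCenter performs compliance checks and remediation for you.

```python
import json
from typing import Any

# Hypothetical desired state; the real vSphere Configuration Profiles schema differs.
DESIRED_JSON = """
{
  "ntp": {"servers": ["ntp1.example.com", "ntp2.example.com"]},
  "ssh": {"enabled": false},
  "syslog": {"log_host": "udp://syslog.example.com:514"}
}
"""


def diff_config(desired: Any, actual: Any, path: str = "") -> list:
    """Return human-readable drift entries where `actual` differs from `desired`."""
    if isinstance(desired, dict) and isinstance(actual, dict):
        drift = []
        for key, want in desired.items():
            child = f"{path}/{key}"
            if key not in actual:
                drift.append(f"{child}: missing")
            else:
                drift.extend(diff_config(want, actual[key], child))
        return drift
    if desired != actual:
        return [f"{path}: expected {desired!r}, found {actual!r}"]
    return []


def remediate(desired: dict, actual: dict) -> dict:
    """Return a copy of `actual` with every desired value applied (deep merge)."""
    result = dict(actual)
    for key, want in desired.items():
        if isinstance(want, dict) and isinstance(result.get(key), dict):
            result[key] = remediate(want, result[key])
        else:
            result[key] = want
    return result


desired = json.loads(DESIRED_JSON)
host = {"ntp": {"servers": ["ntp1.example.com"]}, "ssh": {"enabled": True}}

for entry in diff_config(desired, host):
    print("non-compliant:", entry)
compliant_host = remediate(desired, host)
print("compliant after remediation:", diff_config(desired, compliant_host) == [])
```

Settings that exist on the host but not in the desired document are ignored by this sketch; how the real feature treats such settings is outside its scope.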

With vSphere 8 Update 1, vSphere Configuration Profiles supports vSphere Distributed Switch configuration, which was not available in the earlier technology preview. Environments using VMware NSX are not supported with vSphere Configuration Profiles.

Existing vSphere clusters can be transitioned to use vSphere Configuration Profiles. If the cluster has a Host Profile attached to it, you will see a warning to remove the Host Profile once the cluster has been transitioned to vSphere Configuration Profiles. Once the transition is completed, Host Profiles cannot be attached to the cluster, or to hosts within the cluster.

Note: If the cluster still uses baseline-based lifecycle management, you must first convert it to image-based lifecycle management.

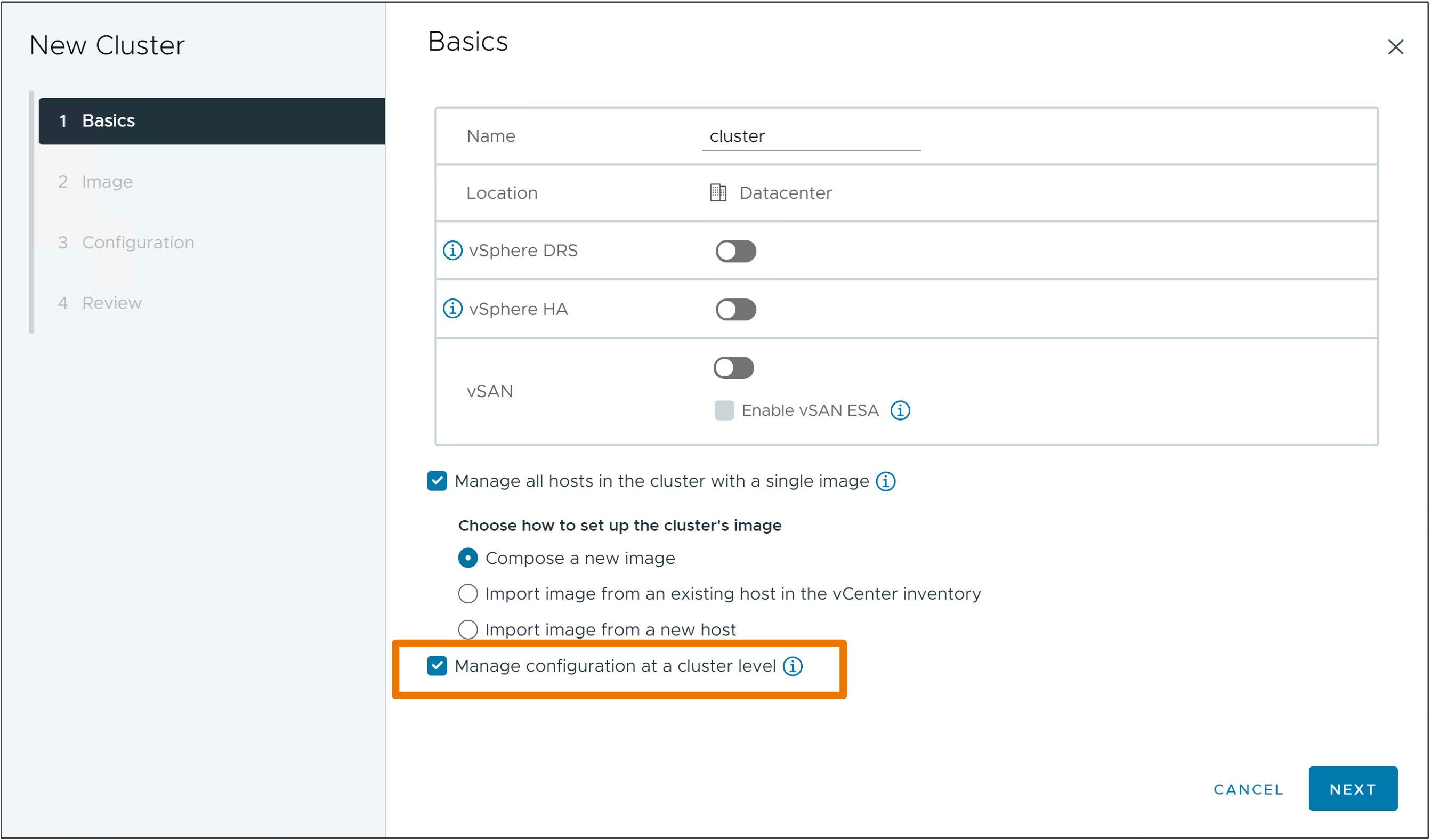

vSphere Configuration Profiles can be activated when you create a new cluster. This requires the new cluster to be managed using a single image definition. You can customize the configuration after the cluster is created.

For more on everything vSphere Configuration Profiles, see our dedicated landing page:

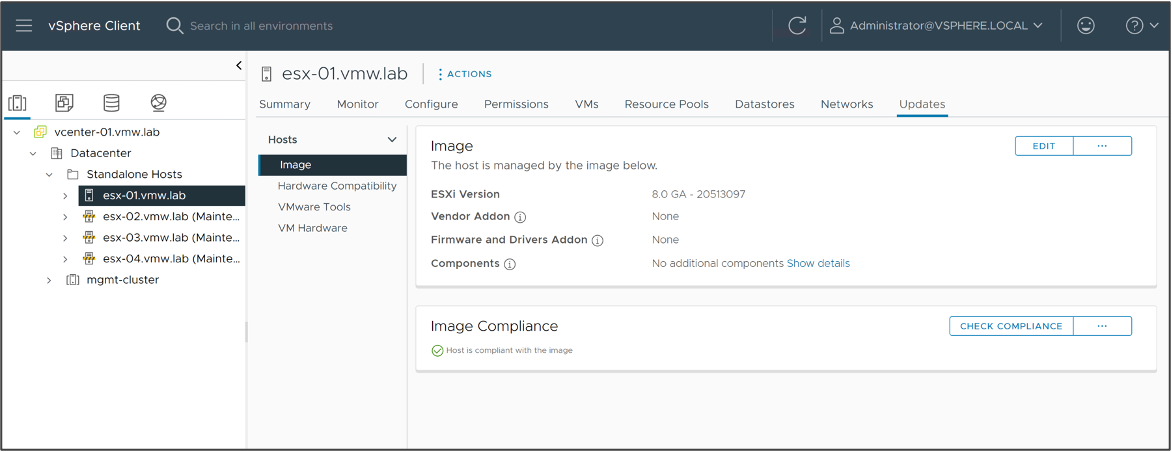

vSphere Lifecycle Manager for Standalone Hosts

vSphere 8 introduced vSphere Lifecycle Manager support for standalone ESXi hosts managed by vCenter, available through the vSphere APIs. In vSphere 8 Update 1, the vSphere Client fully supports standalone ESXi hosts: you can compose a desired image, check compliance, remediate, and more. Everything vSphere Lifecycle Manager can do for a vSphere cluster, it can now do for standalone hosts, including staging and ESXi Quick Boot.

You can also define custom image depots for standalone ESXi hosts. This is useful for edge hosts: they can be configured to use a depot co-located with the host, avoiding remediation issues caused by poor or high-latency connections between remote ESXi hosts and vCenter.

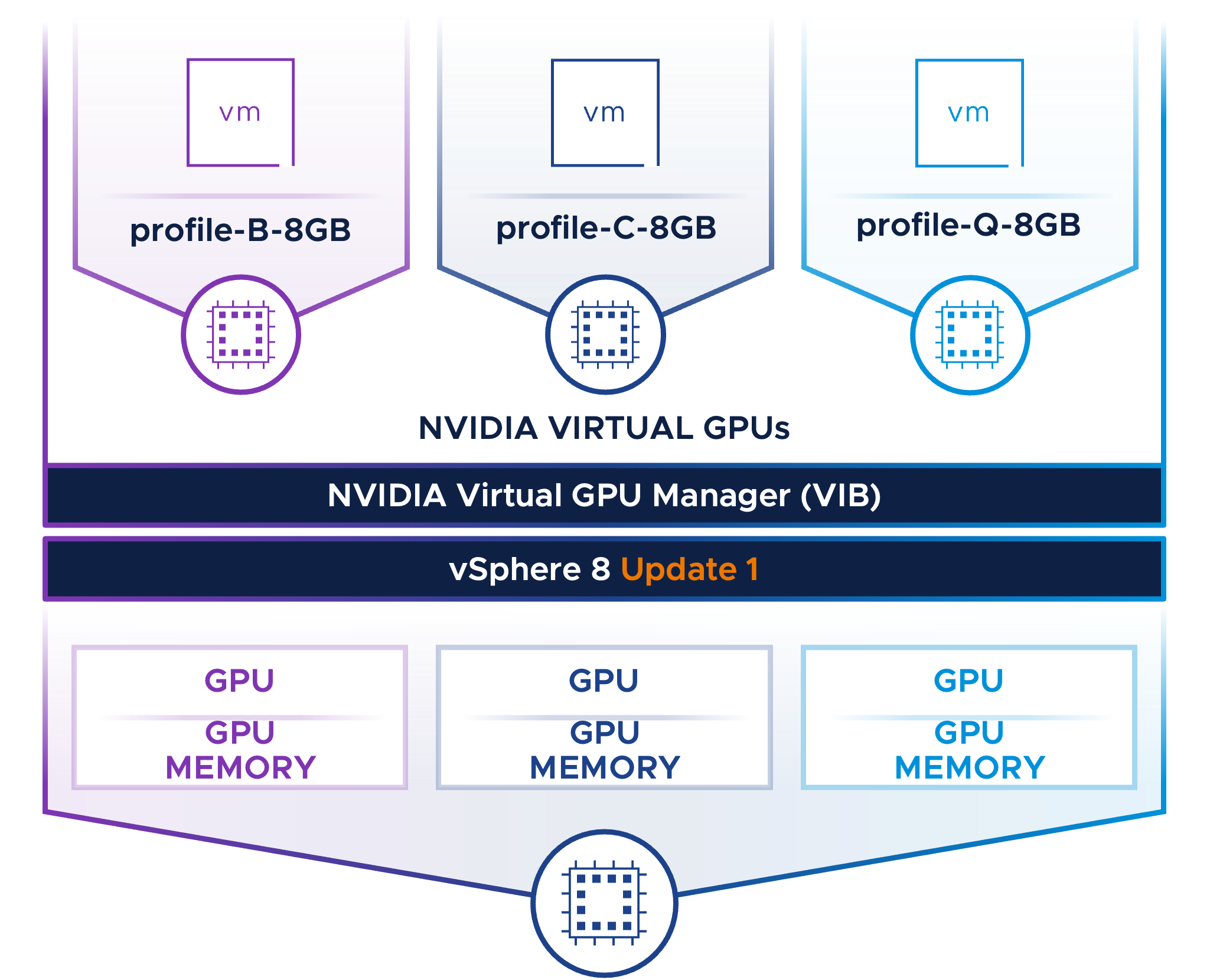

Host different GPU workloads on a single GPU

In earlier vSphere versions, all NVIDIA vGPU workloads sharing a physical GPU had to use the same vGPU profile type and GPU memory size.

In vSphere 8 Update 1, NVIDIA vGPUs sharing a GPU can be assigned different vGPU profile types, although all profiles must still use the same GPU memory size. For example, the diagram shows three VMs, each with a different vGPU profile type (B, C, and Q) but the same 8 GB of GPU memory. This lets you share GPU resources more effectively among different workloads.

NVIDIA provides different vGPU profile types depending on the workload.

- Profile type A is designed for application streaming or session-based solutions

- Profile type B is designed for VDI applications

- Profile type C is designed for compute-intensive applications like machine learning

- Profile type Q is designed for graphics-intensive applications

For more on vGPU profile types, see the NVIDIA GRID vGPU User Guide.
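The placement rule described above (profile types may differ, memory size may not) can be expressed as a small validation function. This is a sketch under stated assumptions: profile names are assumed to follow the `grid_<board>-<memory in GB><type letter>` pattern (for example `grid_a40-8q`), the `can_share_gpu` helper is hypothetical, and it does not check whether the combined profiles fit within the physical GPU's memory.

```python
import re

# Assumed naming pattern, e.g. "grid_a40-8q": board, GPU memory in GB, type letter.
PROFILE_RE = re.compile(r"^grid_(?P<board>\w+?)-(?P<mem>\d+)(?P<type>[abcq])$")


def parse_profile(name: str) -> tuple:
    """Split a vGPU profile name into (board, memory in GB, profile type)."""
    match = PROFILE_RE.match(name.lower())
    if match is None:
        raise ValueError(f"unrecognized vGPU profile name: {name}")
    return match["board"], int(match["mem"]), match["type"].upper()


def can_share_gpu(profiles: list, allow_mixed_types: bool = True) -> bool:
    """Check whether these vGPU profiles may be placed on the same physical GPU.

    allow_mixed_types=True models vSphere 8 Update 1 (types may differ, memory must match);
    allow_mixed_types=False models earlier releases (type and memory must both match).
    """
    parsed = [parse_profile(p) for p in profiles]
    boards = {board for board, _, _ in parsed}
    sizes = {mem for _, mem, _ in parsed}
    types = {ptype for _, _, ptype in parsed}
    if len(boards) > 1 or len(sizes) > 1:
        return False
    return allow_mixed_types or len(types) <= 1


vms = ["grid_a40-8b", "grid_a40-8c", "grid_a40-8q"]
print(can_share_gpu(vms))
print(can_share_gpu(vms, allow_mixed_types=False))
print(can_share_gpu(["grid_a40-8c", "grid_a40-16c"]))
```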

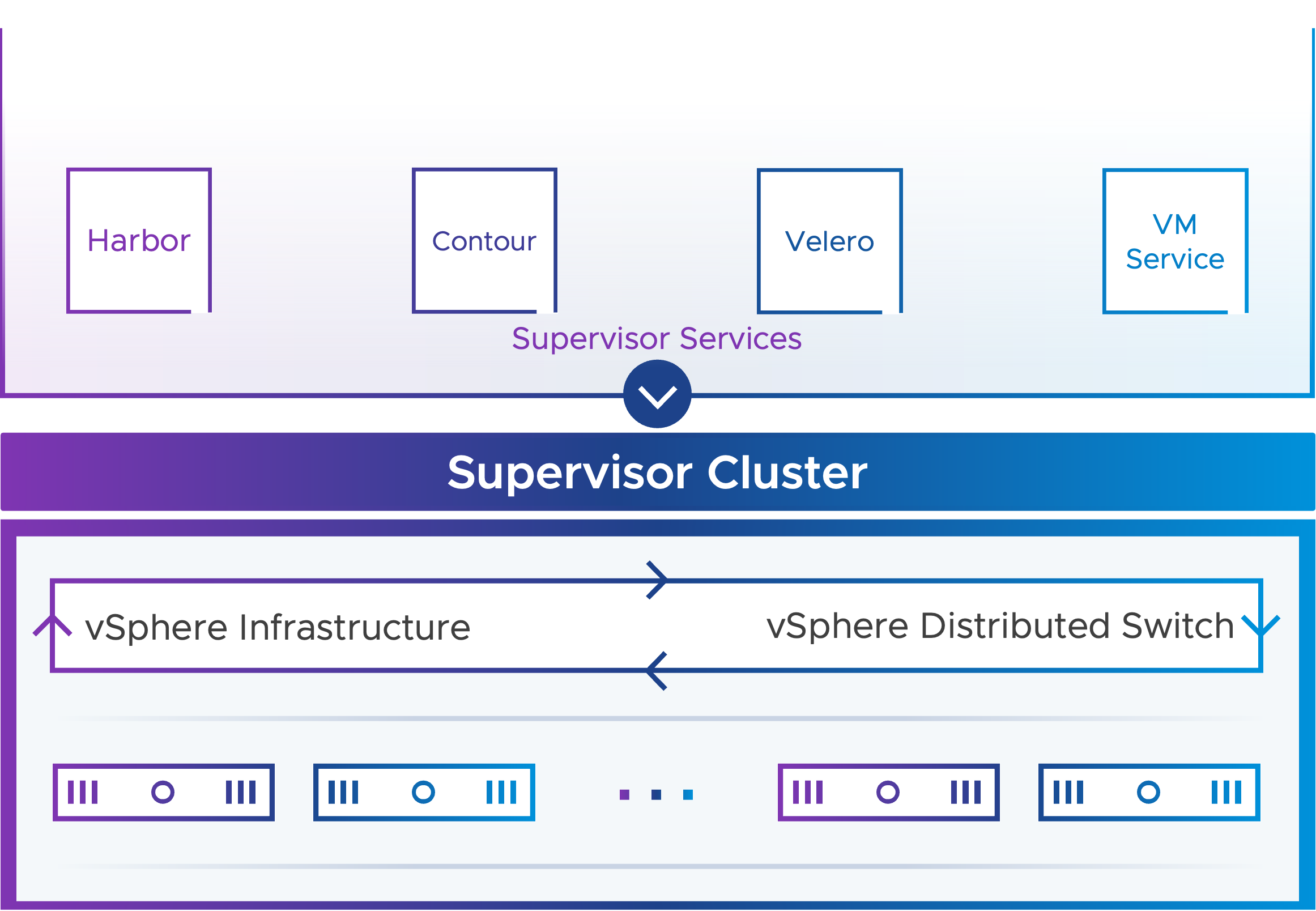

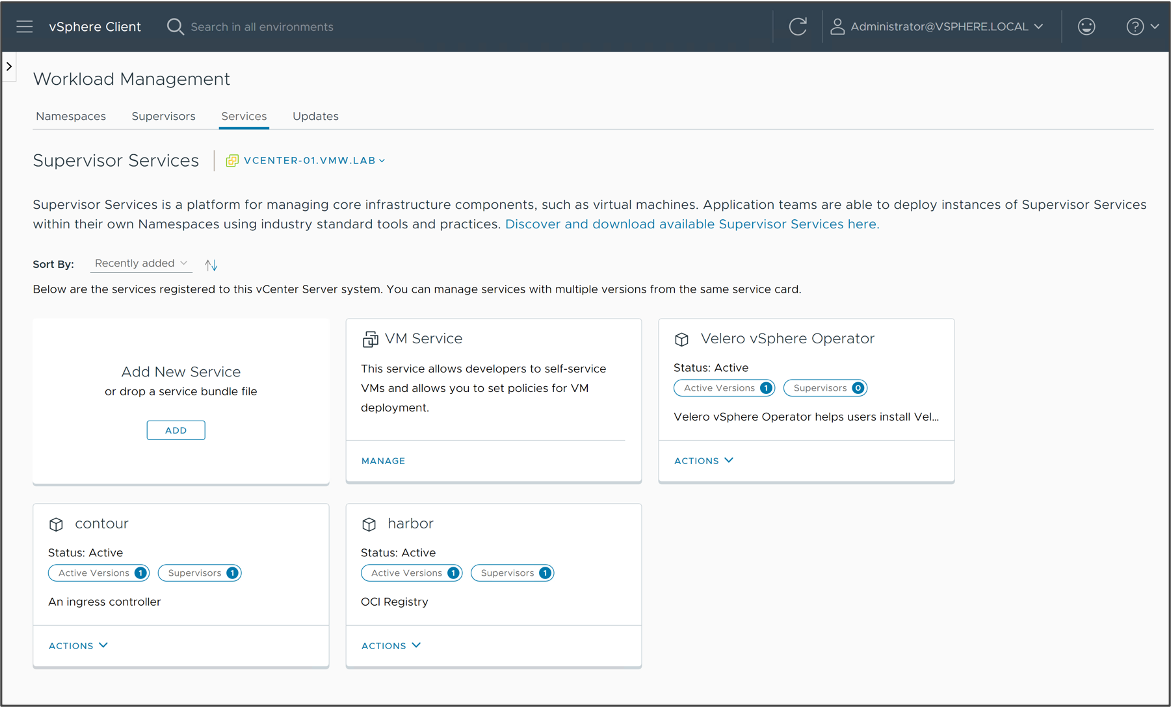

Supervisor Services with vSphere Distributed Switch

In vSphere 8 Update 1, in addition to the VM Service, Supervisor Services are now available when using the vSphere Distributed Switch networking stack.

Supervisor Services are vSphere-certified Kubernetes operators that deliver Infrastructure-as-a-Service components and tightly integrated independent software vendor services to developers. You can install and manage Supervisor Services in the vSphere with Tanzu environment to make them available for use with Kubernetes workloads. When Supervisor Services are installed on Supervisors, DevOps engineers can use the service APIs to create instances on Supervisors in their user namespaces.

You manage Supervisor Services in the vSphere Services platform from the vSphere Client. The platform lets you manage the lifecycle of Supervisor Services, install them on Supervisors, and perform version control. A Supervisor Service can have multiple versions, but only one version at a time can run on a given Supervisor.
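To make the versioning rule concrete, here is a hypothetical Python model (not a vSphere API) in which a service can have many registered versions, but each Supervisor runs at most one of them. The service and Supervisor names are placeholders.

```python
from dataclasses import dataclass, field


@dataclass
class SupervisorServiceRegistry:
    """Hypothetical model of Supervisor Service versioning (not a vSphere API)."""

    # service name -> versions registered with vCenter
    versions: dict = field(default_factory=dict)
    # (supervisor, service) -> the single version currently running there
    running: dict = field(default_factory=dict)

    def register(self, service: str, version: str) -> None:
        self.versions.setdefault(service, set()).add(version)

    def install(self, supervisor: str, service: str, version: str) -> None:
        if version not in self.versions.get(service, set()):
            raise ValueError(f"{service} {version} is not registered")
        # Keyed by (supervisor, service), so installing another version
        # replaces the previous one: at most one version runs per Supervisor.
        self.running[(supervisor, service)] = version


registry = SupervisorServiceRegistry()
registry.register("example-service", "1.0.0")
registry.register("example-service", "1.1.0")
registry.install("supervisor-a", "example-service", "1.0.0")
registry.install("supervisor-b", "example-service", "1.1.0")
registry.install("supervisor-a", "example-service", "1.1.0")  # upgrade in place
print(registry.running)
```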

For more on Supervisor Services, see the documentation about Managing Supervisor Services with vSphere with Tanzu.

VM Service Bring Your Own Image

VMware VM Service has been enhanced to support customer-created VM images. DevOps engineers or administrators can run image build pipelines to create images that support CloudInit or vAppConfig.

Administrators add these VM templates to a content library to make them available to the DevOps team. The DevOps team creates the cloud-config specification that configures the VM on first boot, then submits the VM specification along with the cloud-config to create and configure the VM.
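As an illustration of the DevOps side of this workflow, the Python sketch below builds cloud-init user data and wraps it in a Kubernetes Secret manifest. Several details are assumptions: the user, package, and SSH key values are placeholders, the `user-data` Secret key is assumed rather than confirmed from VM Service documentation, and the way the VM specification references the Secret depends on the VM Service API version and is not shown. Because cloud-init parses the body after the `#cloud-config` header as YAML, the JSON emitted here is also a valid cloud-config body.

```python
import base64
import json


def build_cloud_config(hostname: str, ssh_key: str, packages: list) -> str:
    """Return cloud-init user data: the '#cloud-config' header plus a JSON (YAML-compatible) body."""
    config = {
        "hostname": hostname,
        "users": [{
            "name": "devops",                      # placeholder user
            "ssh_authorized_keys": [ssh_key],
            "sudo": "ALL=(ALL) NOPASSWD:ALL",
            "shell": "/bin/bash",
        }],
        "packages": packages,                      # installed on first boot
    }
    return "#cloud-config\n" + json.dumps(config, indent=2) + "\n"


def build_secret(name: str, namespace: str, user_data: str) -> dict:
    """Wrap the user data in a Kubernetes Secret manifest (assumed 'user-data' key)."""
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": name, "namespace": namespace},
        "data": {"user-data": base64.b64encode(user_data.encode()).decode()},
    }


user_data = build_cloud_config("demo-vm", "ssh-ed25519 AAAAexamplekey devops@example", ["nginx"])
secret = build_secret("demo-vm-cloud-config", "dev-team", user_data)
print(json.dumps(secret, indent=2))
```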

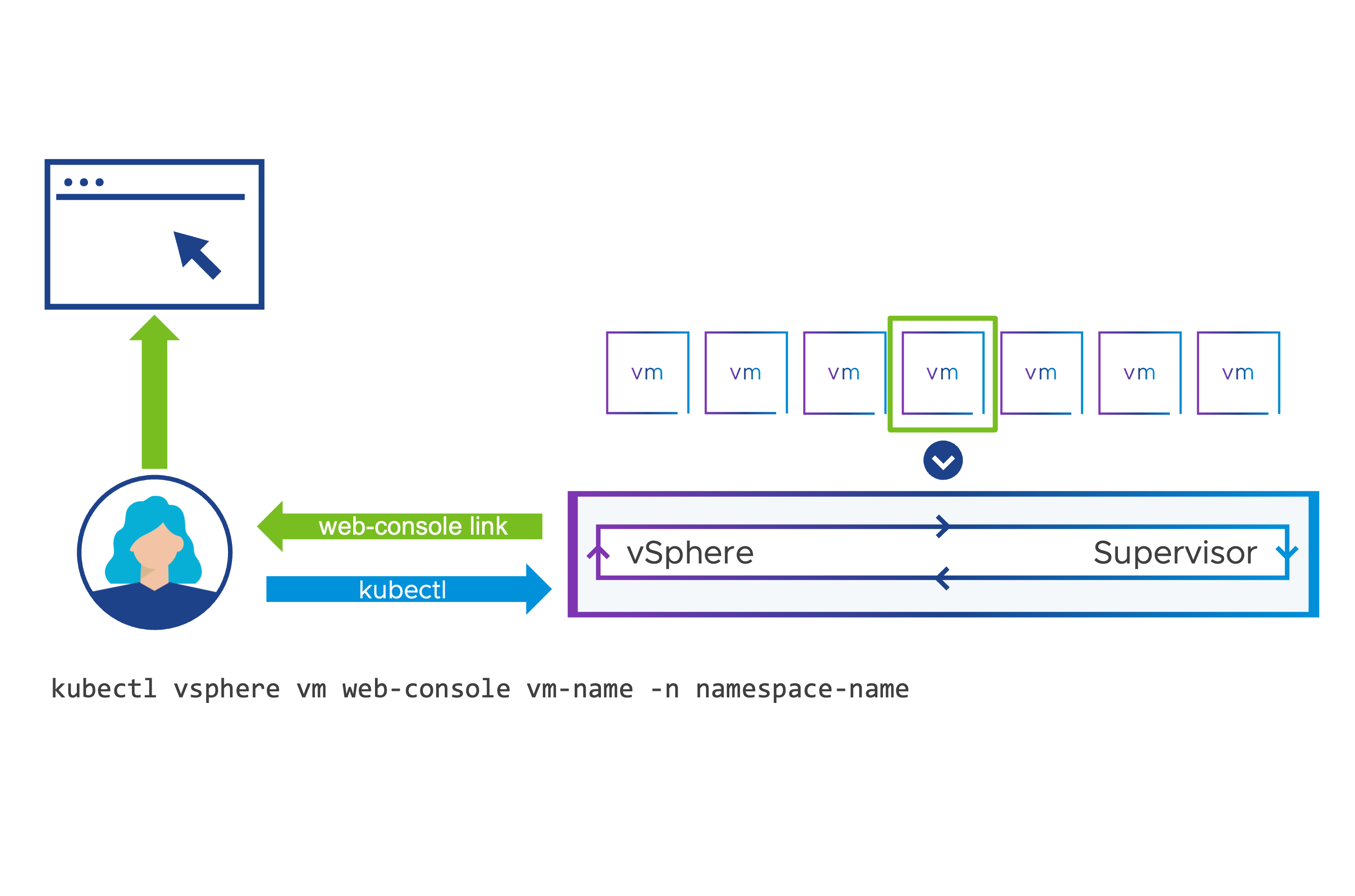

VM Consoles for DevOps

DevOps users can use kubectl to easily access the remote consoles of virtual machines they have deployed.

Access is granted through a unique link, so the user does not need permissions in the vSphere Client. The VM web console provides the user with a one-time-use, time-limited (2-minute) URL and requires connectivity to the Supervisor Control Plane on port 443. This enables self-service debugging and troubleshooting of VMs that lack the network connectivity needed for SSH.
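The one-time-use, time-limited link can be illustrated with a generic signed-token pattern. This is a hypothetical sketch of the technique (HMAC signature, expiry timestamp, single-use nonce), not how the Supervisor actually generates console URLs; the `OneTimeConsoleLinks` class and the token format are invented for the example.

```python
import hashlib
import hmac
import secrets
import time
from typing import Optional

TTL_SECONDS = 120  # two-minute lifetime, as described above


class OneTimeConsoleLinks:
    """Hypothetical issuer of single-use, time-limited console tokens."""

    def __init__(self, key: bytes, ttl: int = TTL_SECONDS) -> None:
        self._key = key
        self._ttl = ttl
        self._used: set = set()  # nonces that have already been redeemed

    def _sign(self, vm: str, expires: int, nonce: str) -> str:
        message = f"{vm}|{expires}|{nonce}".encode()
        return hmac.new(self._key, message, hashlib.sha256).hexdigest()

    def issue(self, vm: str, now: Optional[float] = None) -> str:
        now = time.time() if now is None else now
        expires = int(now) + self._ttl
        nonce = secrets.token_urlsafe(16)
        return f"{vm}|{expires}|{nonce}|{self._sign(vm, expires, nonce)}"

    def redeem(self, token: str, now: Optional[float] = None) -> bool:
        now = time.time() if now is None else now
        try:
            vm, expires_text, nonce, signature = token.split("|")
            expires = int(expires_text)
        except ValueError:
            return False  # malformed token
        expected = self._sign(vm, expires, nonce)
        if not hmac.compare_digest(signature.encode(), expected.encode()):
            return False  # forged or tampered token
        if now > expires or nonce in self._used:
            return False  # expired or already used
        self._used.add(nonce)
        return True


links = OneTimeConsoleLinks(key=secrets.token_bytes(32))
token = links.issue("web-vm-01", now=1_000)
print(links.redeem(token, now=1_030))  # first use within two minutes
print(links.redeem(token, now=1_031))  # second use is rejected
```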

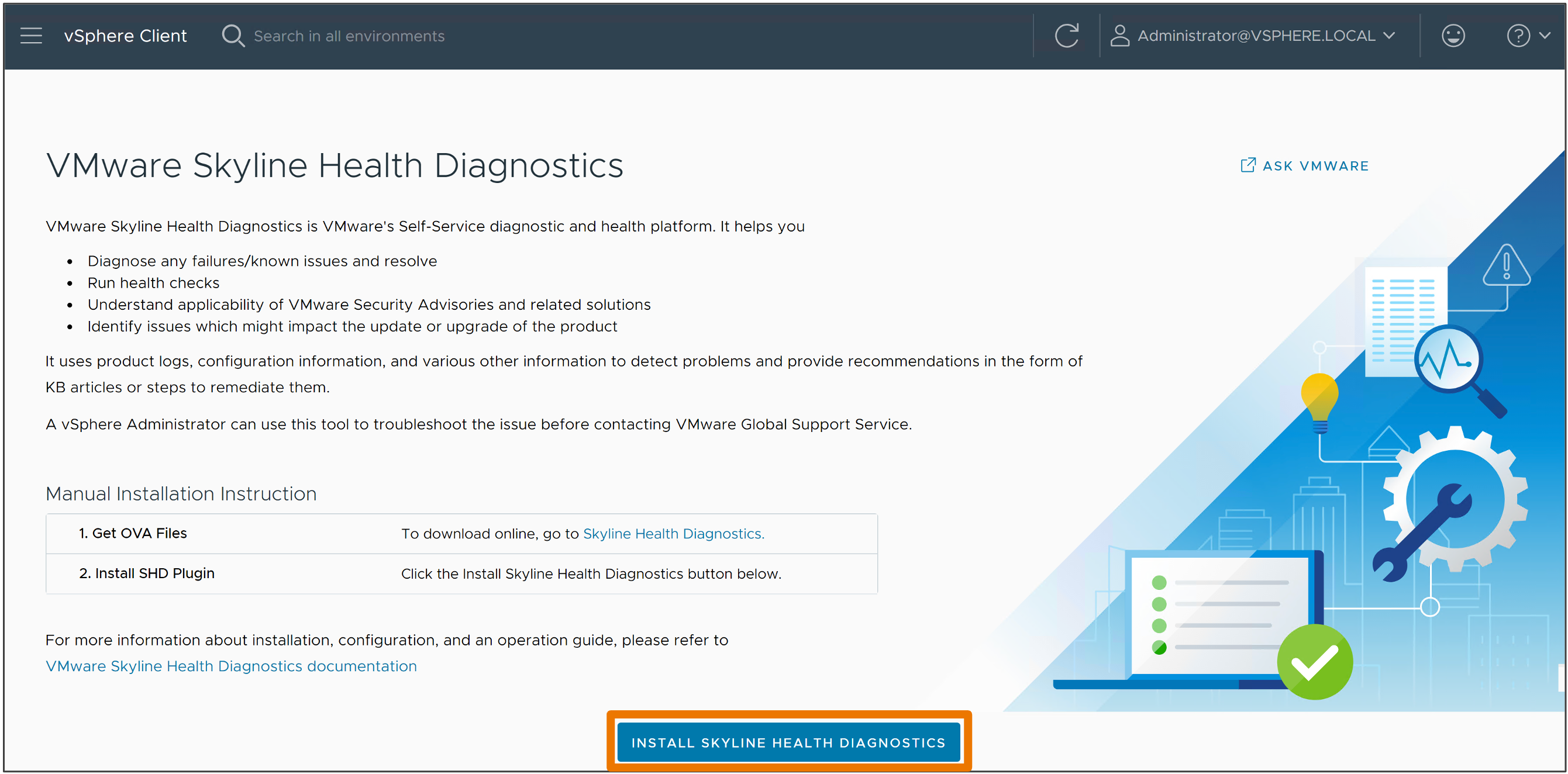

Integrated Skyline Health Diagnostics Plug-in

It is easier than ever to deploy and manage VMware Skyline Health Diagnostics. A guided workflow embedded in the vSphere Client can be used to easily deploy the Skyline Health Diagnostics appliance and register it with vCenter.

Skyline Health Diagnostics is VMware’s self-service diagnostic and health platform. It helps you:

- Diagnose and resolve failures and known issues

- Run health checks

- Understand the applicability of VMware Security Advisories and related resolutions

- Identify issues that might impact an update or upgrade of the product

It uses product logs, configuration information, and other data to detect problems, and provides recommendations in the form of KB articles or remediation steps.

Note: The Skyline Health Diagnostics platform is not embedded into vCenter 8. You must deploy the Skyline Health Diagnostics appliance. In vSphere 8 U1, you now have a guided installer to easily deploy this appliance.

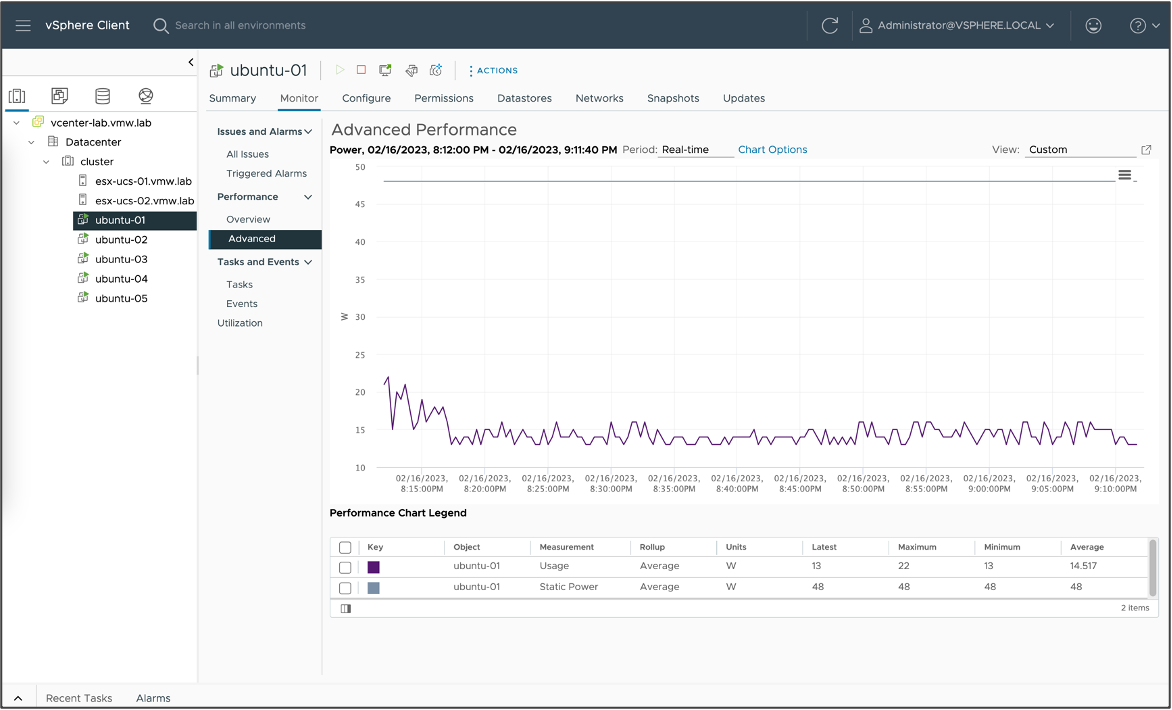

Enhanced vSphere Green Metrics

In vSphere 8.0, we introduced new metrics that capture the power consumption of a host, including power associated with virtual machine workloads. vSphere 8.0 Update 1 can now attribute power consumption to each individual VM. The improved VM metrics take VM size into account, giving customers more data for aggregating VM power consumption and understanding the energy efficiency of their workloads. IT admins can get power metrics for each VM, developers can retrieve them through an API, and application owners can access an aggregated view of their power consumption data.

Static Power is the modelled idle power of the VM, as if the VM were a hypothetical bare-metal host configured with the same number of CPUs and amount of memory as the VM. It estimates the base power draw required to keep that hypothetical host powered on. Usage is real, measured power based on the VM's active CPU and memory utilization. It is derived from the host's attached power meters via IPMI (Intelligent Platform Management Interface).
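The split between Static Power and Usage can be modelled as below. The formulas and coefficients are assumptions for illustration only: static power is modelled as a linear function of vCPU count and configured memory, and the host's measured power above its idle baseline is attributed to VMs in proportion to their active CPU and memory. vSphere's actual model is not described in this article.

```python
from dataclasses import dataclass

# Hypothetical coefficients chosen only for illustration.
IDLE_WATTS_PER_VCPU = 2.0
IDLE_WATTS_PER_GB = 0.3
CPU_SHARE = 0.8   # fraction of dynamic host power attributed to CPU activity
MEM_SHARE = 0.2   # fraction attributed to memory activity


@dataclass
class VmSample:
    name: str
    vcpus: int
    memory_gb: float
    active_cpu_mhz: float
    active_memory_gb: float


def static_power(vm: VmSample) -> float:
    """Modelled idle draw of a bare-metal host sized like the VM."""
    return vm.vcpus * IDLE_WATTS_PER_VCPU + vm.memory_gb * IDLE_WATTS_PER_GB


def usage_power(vms: list, host_measured_w: float, host_idle_w: float) -> dict:
    """Split the host's metered power above idle across VMs by active CPU and memory."""
    dynamic_w = max(host_measured_w - host_idle_w, 0.0)
    total_cpu = sum(v.active_cpu_mhz for v in vms) or 1.0   # avoid division by zero
    total_mem = sum(v.active_memory_gb for v in vms) or 1.0
    return {
        v.name: dynamic_w * (CPU_SHARE * v.active_cpu_mhz / total_cpu
                             + MEM_SHARE * v.active_memory_gb / total_mem)
        for v in vms
    }


vms = [VmSample("web", 4, 16, 2_000, 8), VmSample("db", 8, 64, 6_000, 32)]
for name, watts in usage_power(vms, host_measured_w=400, host_idle_w=200).items():
    print(f"{name}: usage {watts:.1f} W")
print(f"web static power: {static_power(vms[0]):.1f} W")
```

Because the CPU and memory shares sum to 1, the per-VM usage values always add up to the host's measured power above idle.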

Elevate Security

Security is always a tradeoff. Sometimes we trade performance, or budget, or staff time, or even staff happiness. VMware is always looking for ways to make security less of a tradeoff, by making it easy to do the right thing.

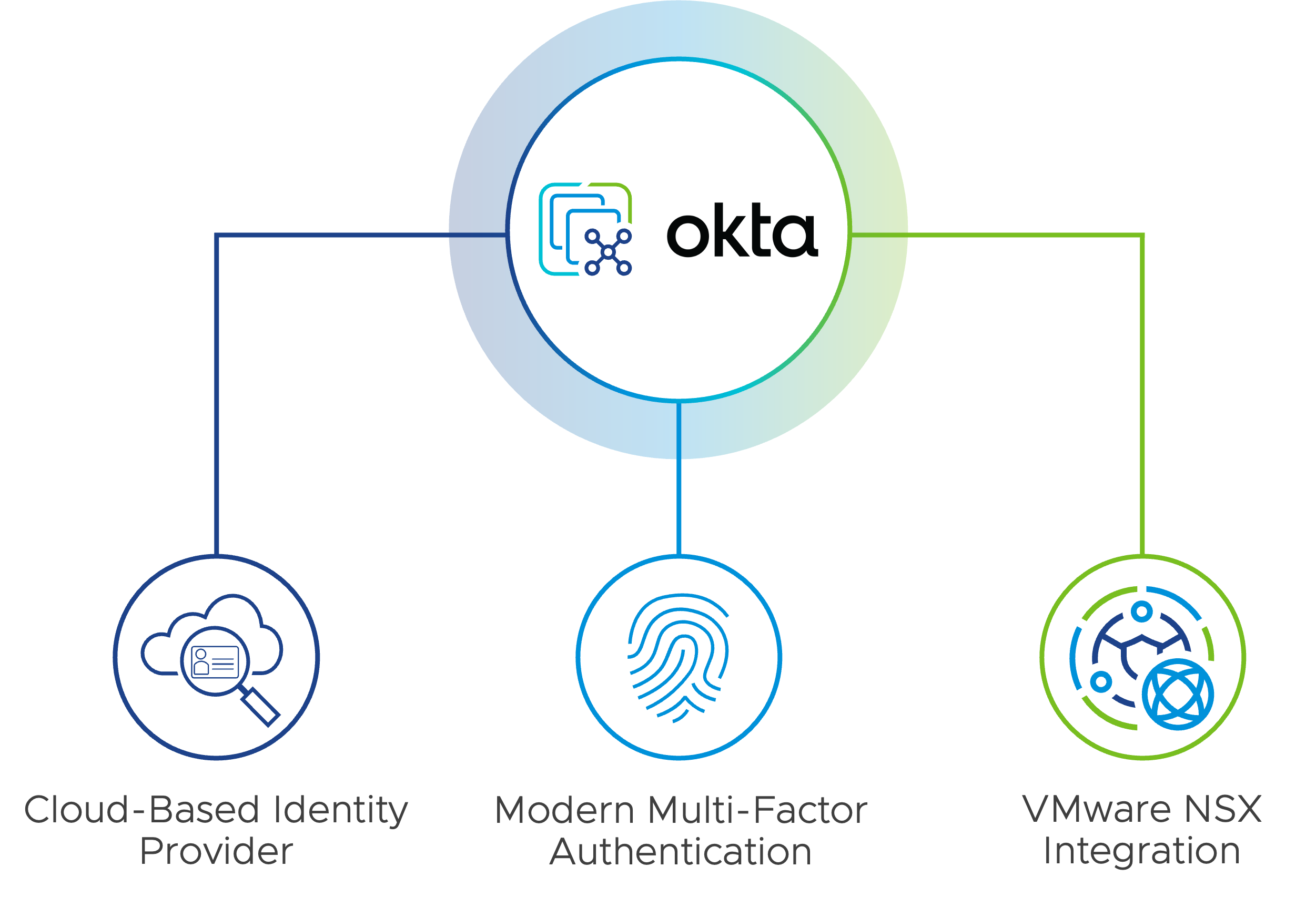

Okta Identity Federation for vCenter

Identity management and multifactor authentication are a big part of security nowadays. vSphere 8 Update 1 brings modern cloud-based identity provider support to vCenter, starting with Okta.

Federated identity means that vSphere never sees user credentials, which helps both security and compliance efforts. It works like the web sign-in flows most people already know: you are redirected to the identity provider, then redirected back once you authenticate.
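The redirect flow described above is the OpenID Connect authorization-code flow. The sketch below shows the request an application builds when it redirects a user to the identity provider, and the state check it performs on the way back. vCenter handles this for you once federation is configured; the Okta domain, client ID, and callback URL are hypothetical, and the `/v1/authorize` path assumes an Okta custom authorization server issuer such as `https://example.okta.com/oauth2/default`.

```python
import secrets
from urllib.parse import parse_qs, urlencode, urlparse


def build_authorize_url(issuer: str, client_id: str, redirect_uri: str):
    """Return (url, state, nonce) for an OIDC authorization-code request."""
    state = secrets.token_urlsafe(16)  # ties the callback to this login attempt (CSRF protection)
    nonce = secrets.token_urlsafe(16)  # echoed back inside the ID token to prevent replay
    params = {
        "response_type": "code",
        "client_id": client_id,
        "redirect_uri": redirect_uri,
        "scope": "openid profile email",
        "state": state,
        "nonce": nonce,
    }
    return f"{issuer.rstrip('/')}/v1/authorize?{urlencode(params)}", state, nonce


def code_from_callback(callback_url: str, expected_state: str) -> str:
    """Validate the redirect back from the identity provider and extract the code."""
    query = parse_qs(urlparse(callback_url).query)
    if query.get("state", [""])[0] != expected_state:
        raise ValueError("state mismatch: rejecting possible forged callback")
    return query["code"][0]


url, state, nonce = build_authorize_url(
    "https://example.okta.com/oauth2/default",   # hypothetical Okta issuer
    "vcenter-example-client",                    # hypothetical client ID
    "https://vcenter.example.com/callback",      # hypothetical redirect URI
)
print("Redirect the browser to:", url)
```

No password appears anywhere in this flow: the user authenticates directly with the identity provider, and the application only receives an authorization code, which it later exchanges for tokens.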

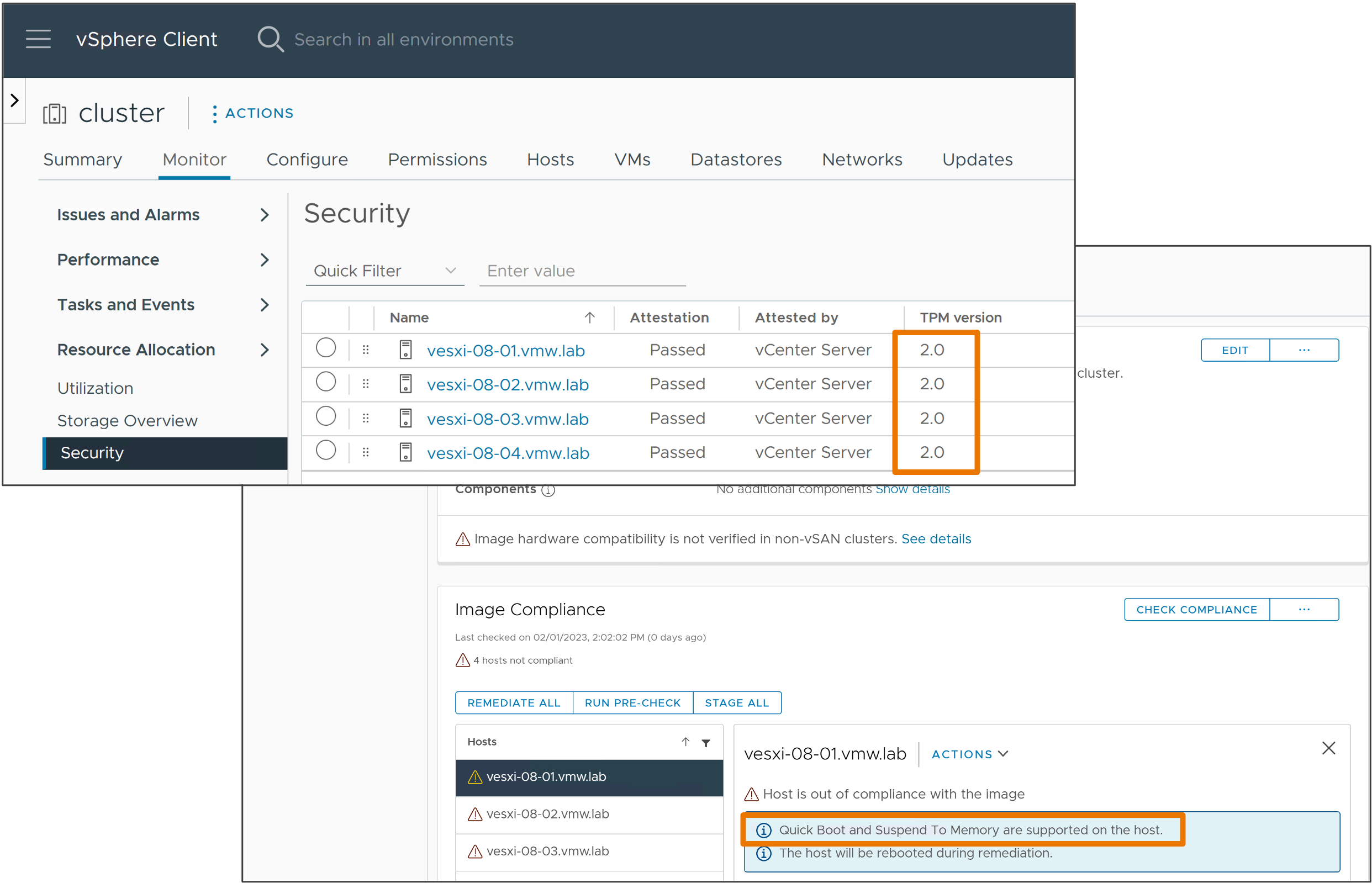

ESXi Quick Boot for Secure Systems

Quick Boot was introduced in vSphere 6.7, and it has been very helpful in many ways. Who doesn’t want a faster restart? More importantly, vSAN customers with large nodes sometimes find that a node reboot takes longer than the rebuild timeout. That triggers a rebuild, which adds load to the cluster and increases wear on flash devices.

Hosts with TPM 2.0 enabled go through a secure boot and attestation process that verifies the host's configuration, a powerful way to detect malware and misconfigurations. In vSphere 8 Update 1, Quick Boot is compatible with this process. No more having to choose.
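Attestation works because a TPM records boot measurements in Platform Configuration Registers (PCRs), which can only be extended, never overwritten directly. The sketch below shows the generic TPM 2.0 extend operation for a SHA-256 bank. The component names are hypothetical, and ESXi's actual measurement list and event log are not shown.

```python
import hashlib


def pcr_extend(pcr: bytes, digest: bytes) -> bytes:
    """TPM 2.0 extend for a SHA-256 bank: new value = SHA-256(old value || digest)."""
    return hashlib.sha256(pcr + digest).digest()


def measure(components: list) -> bytes:
    """Hash each boot component in order and extend it into a freshly reset PCR."""
    pcr = bytes(32)  # most PCRs start as 32 zero bytes after a reset
    for component in components:
        pcr = pcr_extend(pcr, hashlib.sha256(component).digest())
    return pcr


known_good = measure([b"bootloader-1.0", b"kernel-8.0u1", b"driver-vib-a"])
tampered = measure([b"bootloader-1.0", b"kernel-8.0u1-modified", b"driver-vib-a"])
reordered = measure([b"bootloader-1.0", b"driver-vib-a", b"kernel-8.0u1"])
print(known_good.hex())
print(known_good == tampered, known_good == reordered)
```

Because each value depends on every earlier measurement and on their order, a changed or reordered component produces a different final PCR value, which a verifier can compare against a known-good value.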

And, as with most security enhancements in vSphere, the only visible change is a note in the client that Quick Boot is now supported.

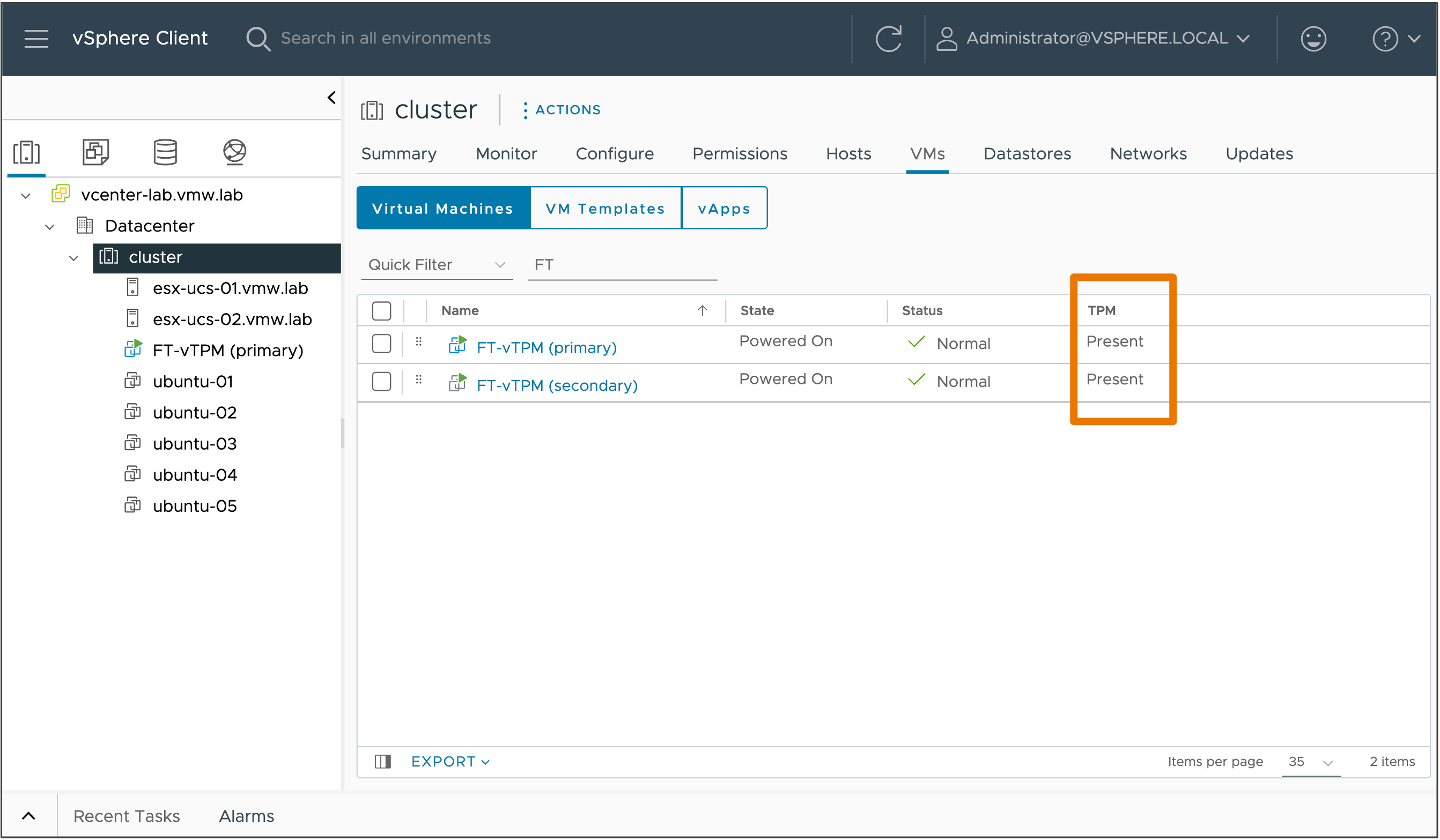

vSphere Fault Tolerance with vTPM

VMware vSphere Fault Tolerance (FT) provides continuous availability for applications (with up to four virtual CPUs) by creating a live shadow instance of a virtual machine that mirrors the primary virtual machine. If a hardware outage occurs, vSphere FT automatically triggers failover to eliminate downtime and prevent data loss. After failover, vSphere FT automatically creates a new, secondary virtual machine to deliver continuous protection for the application.

Virtual TPMs are an important component for guest OSes nowadays, enabling features such as Microsoft Device Guard, Credential Guard, Virtualization-Based Security, Secure Boot and OS attestation, and more. They are also showing up in regulatory compliance audits. vSphere 8 Update 1 removes another security tradeoff by allowing VMs with virtual TPMs to be replicated and protected with vSphere FT.

Supercharge Workload Performance

vSphere 8 added some great capabilities for improving workload performance. Update 1 takes this to the next level and helps meet the throughput and latency needs of modern distributed workloads.

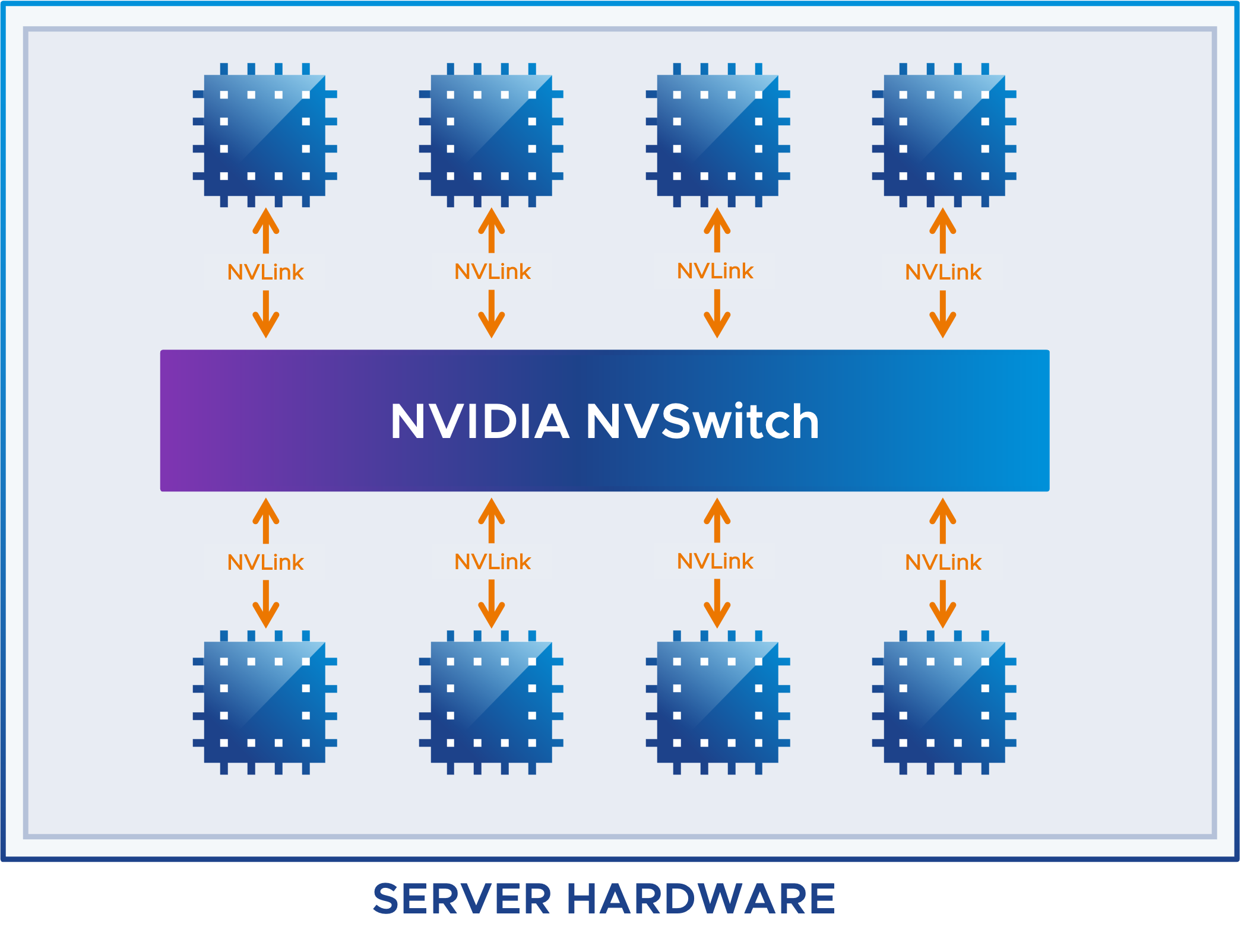

NVIDIA NVSwitch Support

We at VMware have been talking about AI for a long time now, with our deep & flexible support for GPUs in vSphere. We continue advancing on-premises AI through our partnership with NVIDIA, by adding support for their NVSwitch technology.

This technology is particularly important for high-performance computing (HPC) and AI applications, such as deep learning, scientific simulations, and big data analytics, that require multiple GPUs working together in parallel. It is highly recommended for applications that use more than two GPUs. Many AI and HPC applications are constrained by the speed of the buses inside commodity server hardware. To solve this, NVIDIA created a special switch, called NVSwitch, that allows up to 8 GPUs to communicate at blistering speeds.

Differences between NVLink and NVSwitch:

- NVLink is the backend protocol for NVSwitches. NVLink Bridge point-to-point connections can link 2 to 4 GPUs at very high bandwidth.

- NVSwitch is required to connect more than four GPUs. With NVSwitch support in vSphere 8 Update 1, you can form partitions of 2, 4, or 8 GPUs and assign them to a VM.

- With NVLink on the NVIDIA “Hopper” architecture, a pair of GPUs can transmit 450 GB/s in each direction, for a total bandwidth of 900 GB/s.

For comparison, a PCIe Gen5 x16 link can transmit up to 64 GB/s in each direction (128 GB/s total), so NVLink and NVSwitch offer roughly seven times the GPU-to-GPU bandwidth.
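The bandwidth comparison can be checked with a little arithmetic. The sketch below derives the PCIe Gen5 x16 figure from the standard 32 GT/s per-lane signalling rate and 128b/130b encoding, then compares it like for like (total versus total) with the NVLink figures quoted above.

```python
# PCIe 5.0 signals at 32 GT/s per lane and uses 128b/130b encoding.
PCIE5_GT_PER_LANE = 32
LANES = 16

raw_per_dir = PCIE5_GT_PER_LANE * LANES / 8        # 64.0 GB/s each way, before encoding
effective_per_dir = raw_per_dir * 128 / 130         # about 63 GB/s each way after encoding
pcie_total = 2 * raw_per_dir                        # 128 GB/s, both directions

NVLINK_PER_DIR = 450                                # Hopper NVLink figure quoted above
nvlink_total = 2 * NVLINK_PER_DIR                   # 900 GB/s, both directions

print(f"PCIe Gen5 x16: {raw_per_dir:.0f} GB/s per direction ({effective_per_dir:.1f} after encoding)")
print(f"NVLink vs PCIe Gen5 x16, total vs total: {nvlink_total / pcie_total:.1f}x")
```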

NVLink and NVSwitch capable systems are consumed using Vendor Device Groups and vGPU Profiles in vSphere 8, which allow flexibility for these types of workloads without hampering day-to-day vSphere operations.

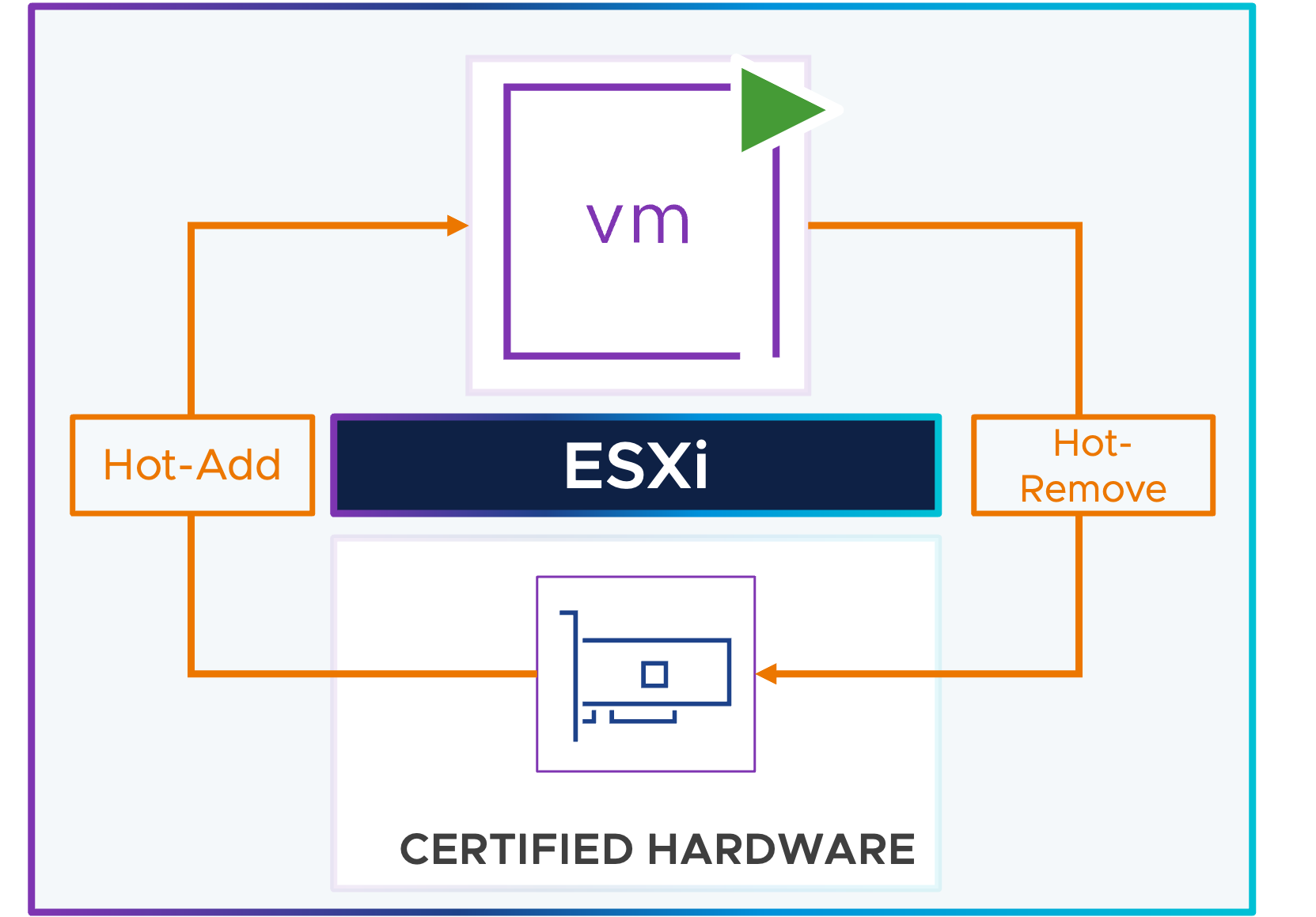

VM DirectPath I/O Hot-Plug for NVMe

In previous releases, adding or removing VM DirectPath I/O devices required the virtual machine to be powered off. vSphere 8 Update 1 introduces support for hot-adding and hot-removing NVMe devices using vSphere APIs.

Servers must hold the “PCIe Native Surprise Hot Plug” certification to support hot-add and hot-remove of VM DirectPath I/O NVMe devices.

Core Storage

For what is new in vSphere core storage, check out the following article.