Designing vSAN Networks - 2022 Edition vSAN ESA

Back in 2019, I wrote an update on common vSAN network design choices. I thought it would be a good idea to revisit them by discussing how these questions have aged and what new questions have come up. VMware vSAN Express Storage Architecture™ (ESA) is helping customers drive new levels of extreme performance, and with faster storage processing comes increased demand on the networking stack.

What is new for vSAN Express Storage Architecture (ESA) Networking?

vSAN ESA requires 25Gbps networking at a minimum. vSAN ESA AF6 and AF High Density nodes require 50Gbps of vSAN networking throughput, and ESA AF8 nodes require 100Gbps of networking throughput to deliver maximum performance. vSAN ESA now compresses data before it transits the networking stack, reducing the amount of data that hits the network. A new adaptive networking stack helps manage contention on the vSAN ESA network.
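To make the bandwidth guidance above concrete, here is a minimal sketch of a pre-deployment check. The thresholds come from the paragraph above; the profile labels and the `check_vsan_bandwidth` helper are hypothetical names chosen for this example, not part of any VMware tool.

```python
# Minimal sketch: flag hosts whose planned vSAN bandwidth falls short of the
# guidance in this post. Profile labels are informal, hypothetical keys.

ESA_MINIMUM_GBPS = 25  # vSAN ESA minimum for any host

# vSAN networking throughput needed for maximum performance, per profile.
PROFILE_TARGET_GBPS = {
    "AF6": 50,
    "AF High Density": 50,
    "AF8": 100,
}


def check_vsan_bandwidth(profile: str, vsan_gbps: float) -> list:
    """Return human-readable warnings; an empty list means no issues found."""
    warnings = []
    if vsan_gbps < ESA_MINIMUM_GBPS:
        warnings.append(
            f"{vsan_gbps}Gbps is below the vSAN ESA minimum of {ESA_MINIMUM_GBPS}Gbps"
        )
    # Profiles not listed above only get the baseline check.
    target = PROFILE_TARGET_GBPS.get(profile)
    if target is not None and vsan_gbps < target:
        warnings.append(
            f"{profile} needs {target}Gbps of vSAN throughput for maximum performance"
        )
    return warnings


if __name__ == "__main__":
    print(check_vsan_bandwidth("AF8", 50))  # one warning: AF8 wants 100Gbps
    print(check_vsan_bandwidth("AF6", 50))  # []
```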

vSphere 8 considerations: while shopping for NICs, consider DPUs. At this time, the primary benefits are for virtual machine networking security.

Do I need dedicated interfaces for vSAN?

For high-performance environments, dedicated interfaces have gained in popularity. vSAN ESA and tier 1 applications can benefit from not having to rely on NIOC. Dedicated interfaces and switches can also help with troubleshooting by isolating the source of traffic on a switch. To offset the cost of dedicated interfaces, a common configuration is to also put vMotion on the same switches, with vMotion defaulting to switch A and vSAN to switch B, so that NIOC is only needed during a switch outage.
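The active/standby split described above can be modeled as an explicit failover order per traffic type. This is a minimal sketch, assuming two uplinks named after the hypothetical switches `switch-A` and `switch-B`; it shows that vMotion and vSAN only share a link (and so only need NIOC) when one switch is down. It models the idea only and does not configure anything in vSphere.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class FailoverOrder:
    """Explicit failover order for one traffic type (hypothetical model)."""
    active: str
    standby: str

    def uplink(self, failed: set) -> Optional[str]:
        # Prefer the active uplink; fall back to standby only if active failed.
        if self.active not in failed:
            return self.active
        if self.standby not in failed:
            return self.standby
        return None  # both paths down


# vMotion defaults to switch A, vSAN to switch B, each standing by for the other.
POLICIES = {
    "vMotion": FailoverOrder(active="switch-A", standby="switch-B"),
    "vSAN": FailoverOrder(active="switch-B", standby="switch-A"),
}


def shared_uplinks(failed: set) -> dict:
    """Map each uplink carrying more than one traffic type to those types.

    These are the only situations where NIOC shares need to arbitrate.
    """
    usage = {}
    for traffic, order in POLICIES.items():
        link = order.uplink(failed)
        if link is not None:
            usage.setdefault(link, []).append(traffic)
    return {link: sorted(kinds) for link, kinds in usage.items() if len(kinds) > 1}


if __name__ == "__main__":
    print(shared_uplinks(set()))         # {} : traffic stays separated
    print(shared_uplinks({"switch-A"}))  # {'switch-B': ['vMotion', 'vSAN']}
```

Keeping the failover order explicit, rather than load balancing both traffic types across both uplinks, is what keeps the two traffic types apart during normal operation.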

What should I look for in a NIC?

The minimum speed for new hosts should be 25Gbps NICs, and 100Gbps should be strongly considered for high-performance clusters.

You may think, “But I haven’t upgraded my switches!” The good news is that 25Gbps ports use an SFP28 interface that is backward compatible with 10Gbps SFP+ cables, optics, and switches. You can buy 25Gbps “Ready” gear now and upgrade switches a year later.

What is required for vSAN RDMA?

Documentation on vSAN RDMA requirements can be found here.

What kind of switches should I purchase for small remote sites?

Previously, for small hybrid environments, 1Gbps networking would be considered to reduce costs and allow reuse of existing switching. This deployment is now rarely considered, though, as the 2-node direct connect option has eliminated this use case.

What should I consider when shopping for a switch?

This question is easier to answer by walking through a few considerations:

25Gbps is the new minimum to buy

vSAN ESA explicitly requires a 25Gbps minimum. While you may not need the throughput, buying a 25Gbps switch today prepares you for future upgrades. It also ensures that you are using a more modern ASIC, as cheaper 10Gbps switches may be using 6-year-old (or older!) silicon. This also holds true for network interface cards: even if your switches are 10Gbps, purchasing 25Gbps NICs with newer ASICs can still benefit performance. I will cover what to look for specifically in NICs in a follow-up blog. It is worth noting that with as few as 4 drives, it is possible to saturate a single 25Gbps interface with vSAN ESA. For extreme performance, 100Gbps should be the default assumption going forward.
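The 4-drive figure is easier to appreciate with a little arithmetic. This sketch, assuming decimal units and ignoring protocol overhead, compression, and replica traffic between hosts, computes how much throughput each drive would need to contribute to fill a single link.

```python
def per_drive_mb_s_to_saturate(link_gbps: float, drives: int) -> float:
    """Per-drive throughput (MB/s) that would fill one link if load is even.

    Assumes decimal units (1 Gbps = 125 MB/s) and ignores protocol overhead,
    compression, and replica traffic between hosts.
    """
    link_mb_s = link_gbps * 1000 / 8  # Gbps -> MB/s
    return link_mb_s / drives


if __name__ == "__main__":
    print(per_drive_mb_s_to_saturate(25, 4))   # 781.25
    print(per_drive_mb_s_to_saturate(100, 4))  # 3125.0
```

At 25Gbps, four drives each need to push only about 781 MB/s, which many NVMe drives can exceed for sequential reads; at 100Gbps the per-drive figure rises to 3,125 MB/s.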

All 25Gbps switches are not the same

Be careful of switches that are sold as “access/campus switches”. They will often have limited monitoring and management capabilities, and may also have limited buffers and performance.

Be mindful of switches with small port buffers. Just because a device has 10Gbps interfaces doesn’t mean it will perform well under load. When a port becomes saturated, buffers add a slight amount of latency instead of dropping the packet and forcing a TCP retransmit (which tends to be far more impactful on performance). If a port is regularly being saturated, faster speeds (25Gbps vs. 10Gbps) or LACP (with advanced source and destination TCP port hashes) can also help. While it’s not necessary for every vSAN cluster to go to 1GB+ buffers, it should be expected that a 4MB buffer switch will be outperformed by a 32MB buffer switch.
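To put “a slight amount of latency” in numbers, the worst-case queueing delay added by a full buffer is roughly its size divided by the port’s line rate. This is a simplified sketch, assuming the entire buffer sits in front of a single egress port and using decimal megabytes.

```python
def full_buffer_delay_ms(buffer_mb: float, line_rate_gbps: float) -> float:
    """Worst-case queueing delay (ms) to drain a full buffer at line rate.

    Simplification: the whole buffer sits in front of one egress port.
    Units are decimal (1 MB = 10**6 bytes, 1 Gbps = 10**9 bits/s).
    """
    buffered_bits = buffer_mb * 1e6 * 8
    seconds = buffered_bits / (line_rate_gbps * 1e9)
    return seconds * 1e3


if __name__ == "__main__":
    for size_mb in (4, 32):
        print(f"{size_mb}MB at 25Gbps: {full_buffer_delay_ms(size_mb, 25):.2f} ms")
```

A full 4MB buffer in front of a 25Gbps port adds about 1.28 ms and a full 32MB buffer about 10.24 ms. Either is generally far less disruptive than a drop followed by a TCP retransmit, and the larger buffer can absorb eight times as much burst before it has to drop anything.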

Be aware of shared port buffers

While a switch may have 32MB of buffer, that buffer is often split into smaller units such as ports, port groups, or cores. It is possible for packets to be dropped even though the total buffer on the switch is not saturated. If a switch is shared between vSAN and regular VM traffic, this behavior can prevent a large burst of traffic through an over-subscribed uplink from taking away all available buffer. In some cases, switches offer different buffer balancing methods that can be chosen. If a single uplink port with non-vSAN traffic is consuming the buffers, changing the buffer allocation mode can lead to improvement. Non-blocking uplinks are a great starting point. For clusters using more than 6 hosts, 16MB of switch buffer is strongly recommended. For larger high-performance clusters, consider deep buffer switches (that provide more than 1GB of shared buffer). For switches that leverage Virtual Output Queues (VoQ), confirm that at least 1MB of buffer per port is available.
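The buffer guidance above can be turned into a simple checklist. This sketch assumes, as a deliberate simplification, that buffer is divided evenly across ports; real switches carve buffers by port, port group, or core in vendor-specific ways, so treat the per-port figure as a rough estimate. The `buffer_findings` function and its parameters are hypothetical.

```python
def buffer_findings(total_buffer_mb: float, ports: int, hosts: int,
                    voq: bool = False) -> list:
    """Check a switch against the buffer guidance in this post.

    Per-port buffer assumes an even split across ports, which is only a rough
    approximation of how real switches carve up buffer.
    """
    findings = []
    if hosts > 6 and total_buffer_mb < 16:
        findings.append("more than 6 hosts: 16MB of switch buffer is strongly recommended")
    per_port_mb = total_buffer_mb / ports
    if voq and per_port_mb < 1:
        findings.append(
            f"VoQ switch: about {per_port_mb:.2f}MB per port, at least 1MB recommended"
        )
    return findings


if __name__ == "__main__":
    print(buffer_findings(32, 48, hosts=8, voq=True))
```

For example, a 32MB switch with 48 ports works out to roughly 0.67MB per port under an even split, below the 1MB-per-port guidance for VoQ switches even though the headline buffer size looks generous.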

Buffers do not replace faster link speeds

100Gbps datacenter switches are going to be preferred over an ultra-deep-buffered 25Gbps switch. Buffers are only useful when contention for a port leads to a momentary demand for more resources. If all ports are operating below their line rate, buffers will not enhance performance. If a port is going to be constrained for a long, continuous period, a buffer will not improve performance either. At that point, increasing link speed or using quality of service (DSCP/CoS) to prioritize vSAN traffic over non-vSAN traffic is what is needed to alleviate the contention.
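A quick calculation shows why buffers only delay the problem under sustained load. When offered load exceeds line rate, the buffer fills at the rate of the excess; once it is full, packets drop. This sketch assumes decimal units and a single congested port.

```python
from typing import Optional


def seconds_until_drops(buffer_mb: float, offered_gbps: float,
                        line_rate_gbps: float) -> Optional[float]:
    """How long a buffer absorbs load above line rate before packets drop.

    Returns None when the offered load fits within line rate, in which case
    the buffer is not needed at all. Decimal units; a single congested port.
    """
    excess_gbps = offered_gbps - line_rate_gbps
    if excess_gbps <= 0:
        return None
    # The buffer fills at the excess rate: bits stored / bits per second.
    return buffer_mb * 8e6 / (excess_gbps * 1e9)


if __name__ == "__main__":
    print(seconds_until_drops(32, 35, 25))    # ~0.0256 s (25.6 ms)
    print(seconds_until_drops(1000, 35, 25))  # ~0.8 s, even with 1GB of buffer
```

With 35Gbps offered to a 25Gbps port, a 32MB buffer fills in about 25.6 ms, and even a 1GB deep buffer lasts only about 0.8 seconds. Only a faster link or QoS changes that outcome.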

Avoid switches that are not switches

Avoid switches that lack the ability to send packets from one port to another port directly without going through another switch. While they were already much rarer in 2019, these “Fabric Extension” devices are still around and should not be considered for high-throughput east-west traffic such as vMotion or storage.

Latency can be just as bad as packet loss

Pete Koehler has a great blog explaining the impacts of both latency and packet loss.

How should I configure and manage my switches?

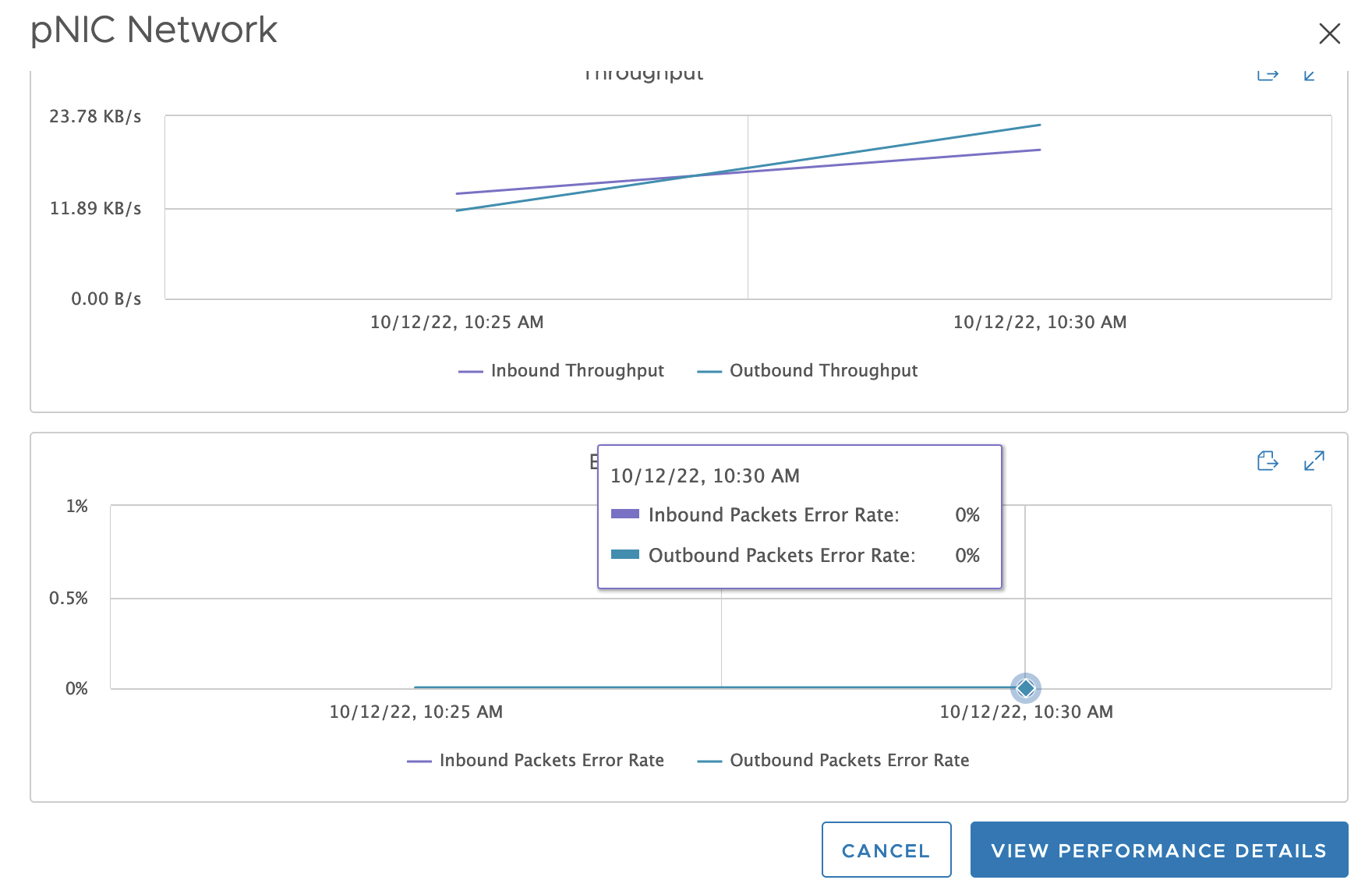

Monitor your switches

Good enterprise-class switches will allow you to log port errors. CRC errors point to problems with physical cables (dirty fiber, bent cables, interference on non-shielded cables). These errors can often also be monitored via SNMP. I would strongly recommend configuring switches to send syslog to vRealize Log Insight and having vRealize Operations monitor SNMP counters on the switches to help quickly identify these kinds of issues. If a switch does not support syslog or SNMP, this is potentially a warning sign that it will also be lacking in performance. For monitoring buffer exhaustion, SNMP poll intervals are too long. Consider using switch alarms that notify on buffer exhaustion, or configure dedicated switch buffer monitoring systems. When trying to understand throughput constraints on a switch, it is also generally a good idea to look at layer 4 telemetry (NetFlow, sFlow, etc.) using tools like vRealize Network Insight. This can help identify why a shared interface has congestion for vSAN traffic (perhaps database backup traffic is going over the same link with the same CoS tag).
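SNMP error counters such as IF-MIB `ifInErrors` are cumulative Counter32 values, so a monitoring system has to difference successive polls and handle the counter wrapping at 2^32. Here is a minimal sketch, assuming at most one wrap between polls; the function names are illustrative, not from any monitoring product.

```python
COUNTER32_WRAP = 2**32  # SNMP Counter32 values wrap back to 0 at 2^32


def counter32_delta(previous: int, current: int) -> int:
    """Increase in a Counter32 between two polls, allowing at most one wrap.

    Caveat: a device reboot also resets counters, which this sketch would
    misread as a wrap and report as a huge delta.
    """
    if current >= previous:
        return current - previous
    return current + COUNTER32_WRAP - previous


def errors_per_minute(previous: int, current: int, interval_s: float) -> float:
    """Convert two error-counter polls into an errors-per-minute rate."""
    return counter32_delta(previous, current) * 60 / interval_s


if __name__ == "__main__":
    print(counter32_delta(2**32 - 10, 5))  # 15: counter wrapped between polls
    print(errors_per_minute(0, 30, 300))   # 6.0 errors per minute
```

A steadily non-zero error rate on one port is usually the cue to check that cable or optic before it shows up as vSAN latency.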

Monitor your hosts

Just because a switch isn’t showing dropped packets doesn’t mean there isn’t an issue. Flow control could be limiting a saturated port, TCP retransmits may be happening because of out-of-order delivery, and, depending on the environment, improperly configured LACP hashes may only be visible from the host. SNMP and traditional monitoring are limited in their ability to track bursting issues. To address this, vSAN 6.7 includes a network diagnostic mode that provides 1-second polling of key network performance statistics. Monitoring done at the ASIC level of the switch can also provide more granular visibility into buffers. Examples include Arista LANZ and Cisco Active Buffer Monitoring. vSAN I/O Trip Analyzer offers easier end-to-end visibility into possible sources of latency.
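To see why long polling intervals hide bursts, consider a hypothetical 25Gbps link that carries a steady 2Gbps but hits line rate for one second within a 300-second polling window. This sketch summarizes per-second samples two ways: the average a 300-second poller would report, and the peak that 1-second polling would catch. The sample data is invented for illustration.

```python
def summarize_utilization(samples_gbps: list, line_rate_gbps: float) -> tuple:
    """Return (average %, peak %) utilization for per-second throughput samples."""
    average = sum(samples_gbps) / len(samples_gbps)
    peak = max(samples_gbps)
    return 100 * average / line_rate_gbps, 100 * peak / line_rate_gbps


if __name__ == "__main__":
    # Hypothetical 300 one-second samples: 2Gbps steady, one second at line rate.
    samples = [2.0] * 299 + [25.0]
    avg_pct, peak_pct = summarize_utilization(samples, 25.0)
    print(f"average {avg_pct:.1f}%, peak {peak_pct:.1f}%")  # average 8.3%, peak 100.0%
```

The average works out to about 8.3% utilization, while the peak sample shows the link at 100%. Even 1-second samples still average out sub-second microbursts, which is where ASIC-level buffer monitoring helps.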

Consider switch lifecycle

Historically, upgrading the code running on a switch required a reboot, causing an outage of a few minutes for that switch. While this impact can be mitigated with redundant switch paths, it typically limited the amount of patching done and shifted patching into a scheduled maintenance window. Expensive chassis switches with redundant supervisors often mitigated this problem. As a middle ground, some newer top-of-rack switches support ISSU (In-Service Software Upgrades). Be aware that there are sometimes caveats, such as ISSU being limited to minor updates. This functionality can help in keeping switches up to date.

It’s worth noting that a lot of this guidance also applies to iSCSI and NFS. In general, all VMware storage traffic likes having highly available, low latency, low jitter, and low packet loss networking.

Did the ISL or site to site networking requirements for vSAN stretched clusters change with the vSAN 8 ESA GA?

Please see the following documentation.