Leading VMware Performance Results for Machine Learning Apps Using NVIDIA AI Enterprise on vSphere

With so many news items appearing each day during this year's VMworld show, you may have missed an important, leading performance benchmark submission on machine learning from VMware and Dell that used NVIDIA’s AI Enterprise tools and platforms. This was an MLPerf benchmark submission using vSphere 7, NVIDIA A100 GPUs accessed as vGPUs, and a set of test applications from the MLPerf suite.

In the figure below, which summarizes the MLPerf results achieved in 2021, the bars for each test are normalized to native performance, represented by 1.0 on the Y-axis. The different machine learning applications tested on VMware vSphere reached 94.4% or more of native (bare-metal) performance.
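The normalization used in the figure can be sketched in a few lines of Python. This is only an illustration: it assumes the underlying metric is a throughput where higher is better, and the two throughput values are made-up placeholders, not figures from the MLPerf submission. The function name `normalize_to_native` is hypothetical.

```python
def normalize_to_native(virtualized: float, native: float) -> float:
    """Return virtualized throughput as a fraction of native throughput."""
    if native <= 0:
        raise ValueError("native throughput must be positive")
    return virtualized / native


# Placeholder throughput values (queries per second), NOT MLPerf results.
native_qps = 1000.0
virtualized_qps = 944.0

ratio = normalize_to_native(virtualized_qps, native_qps)

# A ratio of 1.0 means the virtualized run matched bare metal.
print(f"normalized: {ratio:.3f} ({ratio:.1%} of native)")
```

With these placeholder inputs the bar for the test would sit at 0.944 on the Y-axis, just under the native line at 1.0.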

You can see this official submission in full at the MLCommons site. Look for the item labeled as a “VMware submission” in the Notes column, among the various Dell entries.

This new 2021 round of testing was done with three A100 GPUs in a server. A full description of the hardware used, the vSphere version and the tests executed is given in this article by the three VMware Performance Engineering authors, Uday Kurkure, Lan Vu and Hari Sivaraman.

This close-to-native result is in keeping with what our performance engineering team has found over several years of testing different ML applications. Those rigorous tests consistently report low single-digit percentage differences between virtualized and native performance for ML apps.

As an example, an earlier performance report, published in 2020 by the same VMware performance expert team, using sample apps from MLPerf, showed excellent performance on the earlier Turing T4 GPUs:

The differences with this new 2021 result are:

-

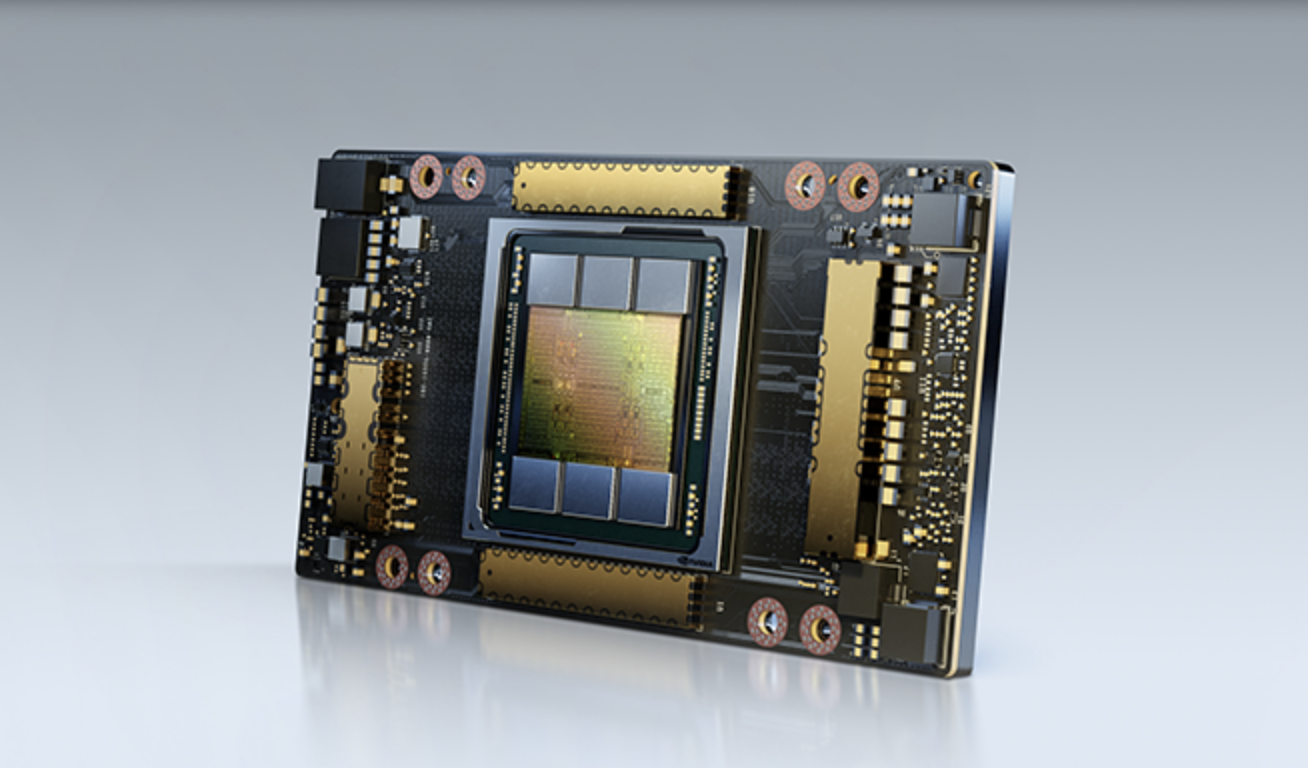

The specification of the GPU hardware is significantly higher with the A100 than the earlier testing on the Tesla-generation T4 GPU. T4 is supported in the AI-Ready Enterprise Platform along with the A100, A30 and others.

-

This is a formally validated, ML Perf submission to the MLCommons organization.

-

The testing used the NVIDIA TensorRT and NVIDIA Triton Inference Server tools as key performance optimizers

MLCommons is an industry and academic consortium with over 100 members that focuses on collective engineering for machine learning and artificial intelligence. MLCommons counts among its member companies AMD, Baidu, DellEMC, Fujitsu, HPE, Intel, Microsoft, NVIDIA and VMware. The MLPerf benchmark suites, created by the members of MLCommons, are now the de facto industry standard for measuring machine learning system performance.

While executing the benchmarking tests, the VMware team made use of a set of platforms from the NVIDIA AI Enterprise (NVAIE) suite of AI/ML data science tools and frameworks, which we will look at briefly below. This NVIDIA AI Enterprise suite is exclusively supported by NVIDIA on VMware vSphere.

The NVIDIA AI Enterprise Tools and Platforms

The NVIDIA AI Enterprise Suite is an AI/ML-specific layer of software tools and frameworks that are a key part of the NVIDIA and VMware AI-Ready Enterprise platform partnership. The AI-Ready Enterprise Platform shown above is composed of three main layers.

Starting at the bottom layer, you see a collection of accelerated mainstream vendor servers (from Dell, HPE, Lenovo and others) that have GPUs and SmartNICs on them. These servers have been put through a certification test by NVIDIA engineers. In the middle tier, the operating environment is either VMware vSphere hosting containers in VMs or, for those who want to use the Kubernetes integration, vSphere with Tanzu. For users on vSphere with Tanzu, there are Kubernetes-style operators for GPU and network driver installation and management at that middle layer.

As you can see in the top layer, there are also several platforms and tools specifically for the data scientist to use that have enterprise-level support on vSphere from NVIDIA. The idea behind the AI-Ready Enterprise platform is to make life easier for both the data scientist and for the DevOps community that supports the infrastructure used by the data scientist, together.

Two of the important tools at the data science tier of the NVIDIA AI Enterprise Suite are TensorRT and the Triton Inference Server. They are typically deployed as containers. These two NVAIE tools were used together by VMware’s Performance Engineering team to achieve the impressive results shown for two different workloads on the MLPerf benchmark web page.

TensorRT optimizes your trained model using techniques such as quantization and layer compression, among other sophisticated performance optimizations. The supported version of TensorRT is found in the NVAIE registry on the NVIDIA GPU Cloud (NGC) as a container. TensorRT is run in its container by first pulling the image down from the NVAIE registry and then issuing a command such as:

$ docker run --gpus all -it --rm -v local_dir:container_dir nvcr.io/nvidia/tensorrt:<xx.xx>-py<x>

Triton Inference Server provides a multi-threaded, multi-application runtime for your optimized model once it moves into the inference phase of the ML lifecycle.

The Triton Inference Server may be accessed from an application using gRPC or HTTP protocols. The Inference Server is aware of the vGPUs available and will schedule jobs on one or more of those vGPUs as needed.
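As a rough illustration of the HTTP path, the sketch below builds an inference request in the JSON format that Triton's HTTP/REST endpoint accepts, using only the Python standard library. The server address, the model name `my_model`, the input tensor name `INPUT0` and the tensor values are all hypothetical placeholders; port 8000 is assumed to be the HTTP port, which is Triton's default. NVIDIA also provides dedicated client libraries, which this sketch does not use.

```python
import json
import urllib.request


def build_infer_request(base_url, model_name, input_name, shape, data, datatype="FP32"):
    """Build (but do not send) an HTTP inference request for a Triton model."""
    payload = {
        "inputs": [
            {
                "name": input_name,       # must match the model's input tensor name
                "shape": list(shape),
                "datatype": datatype,
                "data": list(data),       # tensor values, flattened in row-major order
            }
        ]
    }
    return urllib.request.Request(
        url=f"{base_url}/v2/models/{model_name}/infer",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# Hypothetical server address, model name and input tensor.
request = build_infer_request(
    "http://localhost:8000", "my_model", "INPUT0", [1, 4], [0.1, 0.2, 0.3, 0.4]
)
print(request.get_method(), request.full_url)

# To send the request to a running server, uncomment:
# with urllib.request.urlopen(request) as response:
#     print(json.load(response)["outputs"])
```

Building the request separately from sending it keeps the example runnable without a live server; against a real deployment, the input name, shape and datatype have to match the model's configuration.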

You can get hold of the container that encapsulates the Triton Inference Server by making use of your credentials to access your private NVAIE-specific registry on the NGC. From there you get the container using a familiar command:

$ docker pull nvcr.io/nvidia/tritonserver:<xx.yy>-py3

The Triton Inference Server has a model repository for managing different models at once and different versions of the same model. Once your trained models have been set up in the repository, the Triton Inference Server can schedule them onto different hosts and different GPUs.
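To make the idea of a model repository more concrete, here is a small Python sketch that creates the kind of directory layout Triton expects: one directory per model, a numbered subdirectory per version, and a `config.pbtxt` file alongside them. The model name `resnet50_trt`, the version numbers and the batch size are hypothetical, and the configuration is deliberately minimal; a real one typically also declares the model's input and output tensors. For a TensorRT model, each version directory would hold the serialized engine file (named `model.plan` by default), which this sketch does not create.

```python
import tempfile
from pathlib import Path
from typing import Iterable


def create_model_layout(repo_root: Path, model_name: str, versions: Iterable[int]) -> Path:
    """Create <repo_root>/<model_name>/<version>/ directories plus a config.pbtxt."""
    model_dir = repo_root / model_name
    for version in versions:
        # Each numbered directory holds one version of the model file.
        (model_dir / str(version)).mkdir(parents=True, exist_ok=True)

    # Minimal, illustrative configuration for a TensorRT engine.
    config = (
        f'name: "{model_name}"\n'
        'platform: "tensorrt_plan"\n'
        "max_batch_size: 8\n"
    )
    (model_dir / "config.pbtxt").write_text(config)
    return model_dir


# Build the layout in a temporary directory so nothing on disk is overwritten.
repo_root = Path(tempfile.mkdtemp())
model_dir = create_model_layout(repo_root, "resnet50_trt", [1, 2])

for path in sorted(model_dir.rglob("*")):
    print(path.relative_to(repo_root))
```

The top-level directory created here plays the role of the path that is mounted into the container and passed to the server as its model repository.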

The Triton Inference Server container is set up to run using a command such as:

$ docker run --gpus=1 --rm -p8000:8000 -p8001:8001 -p8002:8002 -v/full/path/to/docs/examples/model_repository:/models nvcr.io/nvidia/tritonserver:<xx.yy>-py3 tritonserver --model-repository=/models

More information on the Triton Inference Server can be found at http://docs.nvidia.com/en/nvaie

The specific versions of TensorRT and Triton Inference Server that are supported under the NVIDIA AI Enterprise Suite are given at this site: https://docs.nvidia.com/ai-enterprise/sizing-guide/overview.html

More information on the technical details of the NVIDIA AI Enterprise Suite of products is available at: https://docs.nvidia.com/ai-enterprise/latest/index.html

The MLPerf benchmarking suite, designed with various kinds of machine learning applications in mind, such as image recognition and natural language processing (NLP), has become the most commonly used suite in the machine learning industry.

Conclusions

The performance data has a clear message: you don't need to be as concerned about the performance of your critical machine learning applications when they are hosted on VMware vSphere as you might originally have thought. This new MLPerf result, formally approved by the MLCommons organization, attests to the fact that ML performance on vSphere is within a small, single-digit percentage of native performance, and can in some cases equal it. Along with this, VMware brings the capability to free up significant amounts of your CPU power when the GPU is taking the brunt of the work, both in ML inference, as shown here, and in model training, as shown in other reports. The combination of powerful acceleration tools from the NVIDIA AI Enterprise Suite with the latest optimized hardware in the Ampere range gives you a world-class platform for deploying your AI/ML workloads in the enterprise datacenter. This allows you to avoid the individual silo deployments of the past and bring the new workloads under enterprise management control in your datacenter.