Cloud Foundation Holodeck: Software-Defined Networking

Overview

The VMware Cloud Foundation (VCF) Experience Program is designed to provide a hands-on experience highlighting how VCF delivers a Cloud Operating Model for customer-managed on-premises environments capable of hosting both traditional and modern applications. This document is intended for use with a VCF Lab Constructor (VLC)-based nested environment that uses the Holodeck Configuration.

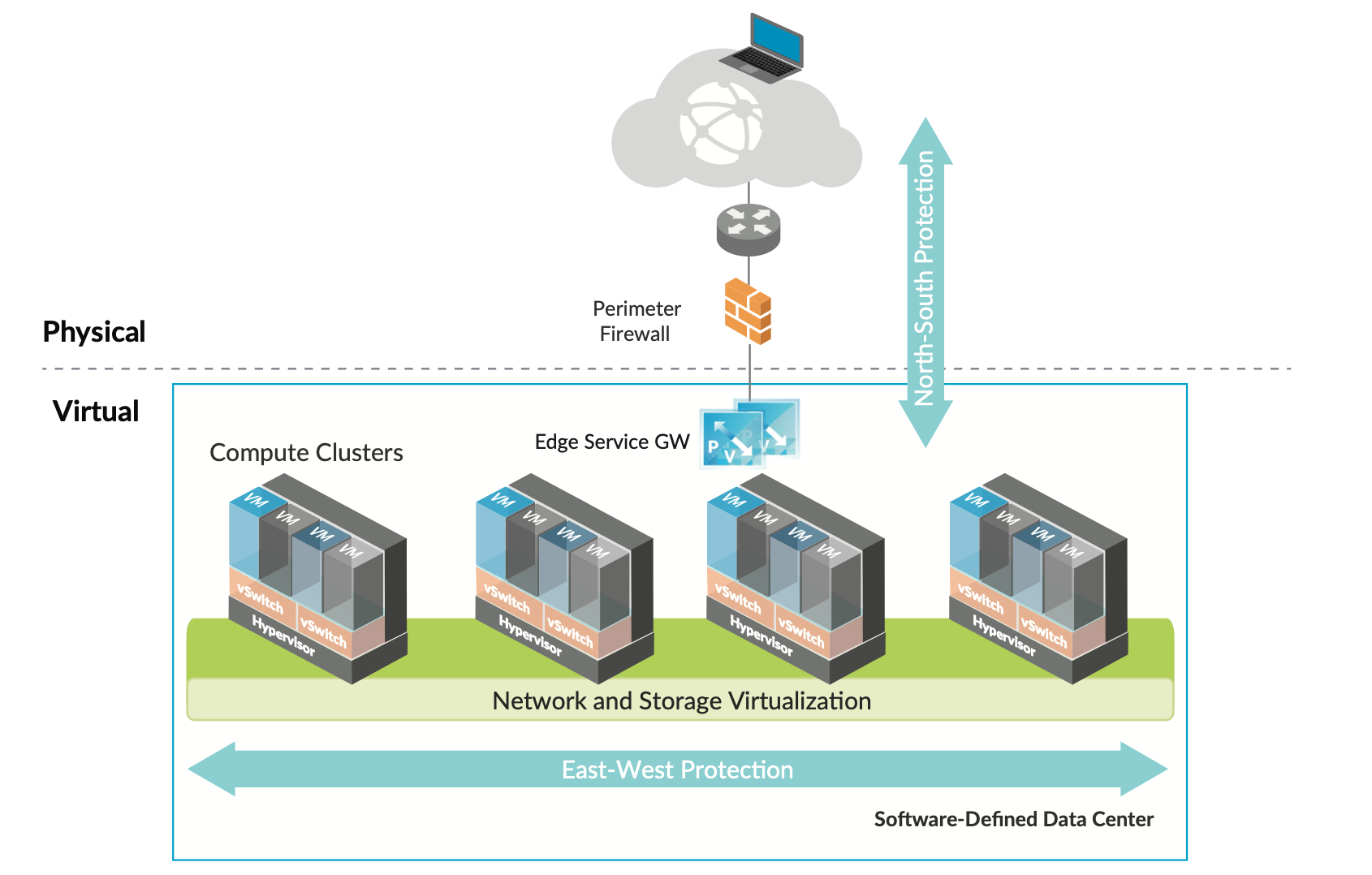

This document introduces the fundamentals of Software Defined Networking and Security provided by VMware NSX. The modules in this lab focus on how simple it is to virtualize network functions in software compared with traditional hardware approaches. Once decoupled from their hardware counterparts (switches, routers, and firewalls), network and security functions can be applied on a per-application basis rather than depending on their physical location.

Module breakdown:

- Module 1 – Introducing Software Defined Networking: Segments and Distributed Routing

- Module 2 – Changing the Security Game – Distributed Firewall

- Module 3 – Load Balancing

Credentials

Please refer to the Default Account Information section towards the end of this document for the usernames and passwords used throughout this document.

Links to the management interfaces are provided on the Chrome bookmark bar of the lab environment.

Module 1: Segments and distributed routing

Software Defined Networking (SDN) in VMware Cloud Foundation is provided by VMware NSX. NSX operates as an overlay network, encapsulating L2 network traffic within an L3 underlay network. This allows networks and network services to be delivered programmatically in software.
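NSX implements this overlay with Geneve encapsulation (RFC 8926): each guest Ethernet frame is wrapped in a Geneve header and carried over UDP between host tunnel endpoints. The sketch below packs only the fixed 8-byte Geneve base header to show where the segment identifier (VNI) lives. It is an illustration, not NSX code: the VNI value is a hypothetical stand-in for a segment ID, and option TLVs, the outer UDP/IP/Ethernet headers, and checksums are omitted.

```python
import struct

GENEVE_UDP_PORT = 6081   # IANA-assigned UDP destination port for Geneve
ETH_BRIDGING = 0x6558    # Protocol Type: Transparent Ethernet Bridging (inner frame is L2)

def geneve_base_header(vni: int, opt_len_words: int = 0) -> bytes:
    """Pack the 8-byte Geneve base header (RFC 8926).

    vni: 24-bit Virtual Network Identifier (a hypothetical segment ID here).
    opt_len_words: length of option TLVs in 4-byte words (0 means no options).
    """
    if not 0 <= vni < 2**24:
        raise ValueError("VNI must fit in 24 bits")
    if not 0 <= opt_len_words < 2**6:
        raise ValueError("option length must fit in 6 bits")
    version = 0
    byte0 = (version << 6) | opt_len_words   # Ver (2 bits) | Opt Len (6 bits)
    byte1 = 0                                # O and C flags clear, reserved bits zero
    # The VNI occupies the top 24 bits of the last 32-bit word; the low 8 bits are reserved.
    return struct.pack("!BBHI", byte0, byte1, ETH_BRIDGING, vni << 8)

def encapsulate(inner_frame: bytes, vni: int) -> bytes:
    """Prepend a Geneve base header to an inner Ethernet frame (UDP payload only)."""
    return geneve_base_header(vni) + inner_frame
```

For example, `geneve_base_header(71680).hex()` returns `'0000655801180000'`: the protocol type 0x6558 followed by the 24-bit VNI 0x011800. The underlay only ever sees the outer UDP/IP addressing, which is what lets the segment span physical network boundaries.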

NSX enables customers to create elastic logical networks that span physical network boundaries. NSX abstracts the physical network into a pool of capacity and separates the consumption of these services from the underlying physical infrastructure. This is similar to the model vSphere uses to abstract compute capacity from server hardware into virtual pools of resources that can be consumed as a service.

Lab 1: Creating Network Segments

This lab deploys the networking components needed to support an OpenCart two-tier application. OpenCart is an open-source eCommerce platform that uses an Apache web server front end and a MySQL database back end. This lab uses preconfigured VMs that will be attached to newly created SDN segments. It simulates a common problem: a legacy application with hard-coded networking dependencies needs to be deployed in a new data center. Rather than recreating subnets, VLANs, and firewall components in hardware, we will create all the necessary infrastructure in software, and faster.

[Step 1] Logging in to the environment

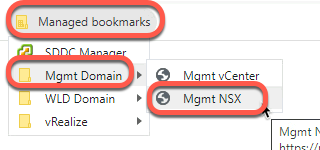

- On the Holo-Console, open a new tab in the Chrome browser

- Click the Managed bookmarks folder in the bookmark bar then select Mgmt Domain->Mgmt NSX

- At the certificate warning screen, click Advanced then Proceed to nsx-mgmt.vcf.sddc.lab (unsafe)

- Log into NSX Manager as the user admin with the password VMware123!VMware123!

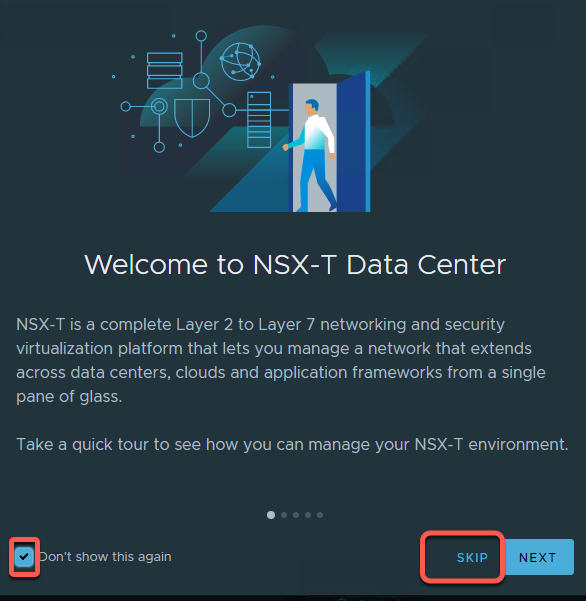

- If this is your first time logging in, you will land on a Welcome screen. This can be revisited later.

- Click Don’t show this again followed by Skip

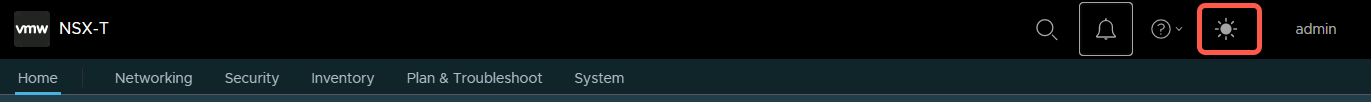

- This lab guide uses the NSX UI in light mode. Click the sunshine icon in the upper right corner of the UI to change to light mode. This can be changed back by clicking the half-moon icon in the same location.

- Click the Networking tab

[Step 2] Create the OC-DB-Segment

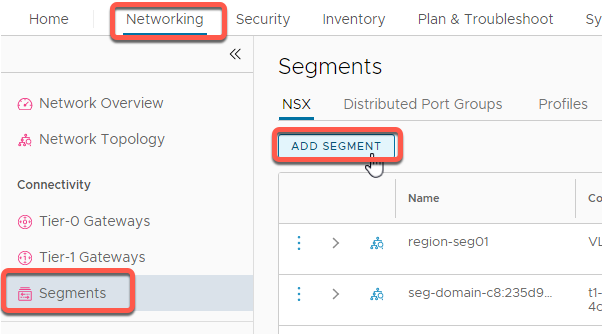

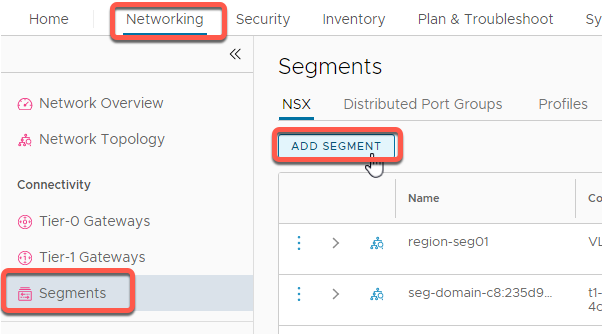

- Click the Networking tab at the top of the screen

- Click Segments in the left-hand side pane.

- Click the ADD SEGMENT button

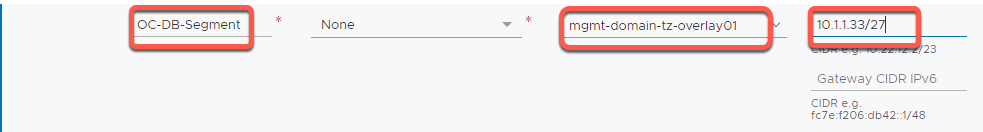

- In the Segment Name field, enter OC-DB-Segment

- In the Select Transport Zone dropdown, select mgmt-domain-tz-overlay01 | Overlay

- In the Gateway CIDR IPv4 field, enter 10.1.1.33/27 (Note: This entry sets the gateway address and the subnet, 10.1.1.32/27, at the same time.)

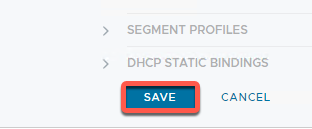

- Scroll down and click Save

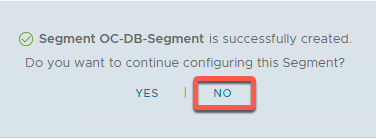

- A notice confirms that the segment was created successfully. Click No in the Do you want to continue configuring this Segment? dialog

[Step 3] Create the OC-Web-Segment

- Click the Networking tab at the top of the screen

- Click Segments in the left pane.

- Click the ADD SEGMENT button

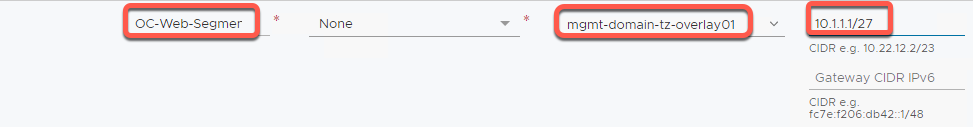

- In the Segment Name field, enter OC-Web-Segment

- In the Select Transport Zone dropdown, select mgmt-domain-tz-overlay01 | Overlay

- In the Gateway CIDR IPv4 field, enter 10.1.1.1/27

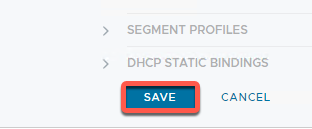

- Scroll down and click SAVE

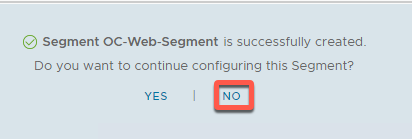

- A notice confirms that the segment was created successfully. Click No in the Do you want to continue configuring this Segment? dialog
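A single Gateway CIDR entry such as 10.1.1.33/27 defines both the gateway address and the subnet. Python's standard ipaddress module can show what the two entries made in this lab imply. This is an offline illustration of the address arithmetic, not something NSX runs.

```python
import ipaddress

# Gateway CIDR values entered for the two segments in this lab.
SEGMENTS = {
    "OC-DB-Segment": "10.1.1.33/27",
    "OC-Web-Segment": "10.1.1.1/27",
}

def describe(gateway_cidr: str) -> dict:
    """Derive the subnet and usable host range from a gateway CIDR entry."""
    iface = ipaddress.ip_interface(gateway_cidr)   # keeps the host bits: the gateway address
    net = iface.network                            # same prefix with host bits cleared
    hosts = list(net.hosts())                      # excludes network and broadcast addresses
    return {
        "gateway": str(iface.ip),
        "subnet": str(net),
        "first_host": str(hosts[0]),
        "last_host": str(hosts[-1]),
        "usable_hosts": len(hosts),
    }

for name, cidr in SEGMENTS.items():
    print(name, describe(cidr))

# The two /27 subnets must not overlap for a router to route between them later.
db_net = ipaddress.ip_interface(SEGMENTS["OC-DB-Segment"]).network
web_net = ipaddress.ip_interface(SEGMENTS["OC-Web-Segment"]).network
assert not db_net.overlaps(web_net)
```

OC-DB-Segment works out to 10.1.1.32/27 (usable hosts 10.1.1.33 to 10.1.1.62) and OC-Web-Segment to 10.1.1.0/27 (usable hosts 10.1.1.1 to 10.1.1.30), each with 30 usable addresses. The VM addresses used later in this lab (10.1.1.18 and 10.1.1.19 on the web segment, 10.1.1.50 on the DB segment) fall inside these ranges.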

[Step 4] Connect OpenCart web server VMs

The following steps attach two Apache web server VMs to the OC-Web-Segment.

- Using the Chrome bookmarks, access the vCenter Server Web Client by navigating to Managed bookmarks->Mgmt Domain->Mgmt vCenter

- Launch the vSphere Client and log in to the Mgmt vCenter using the username administrator@vsphere.local and the password VMware123!

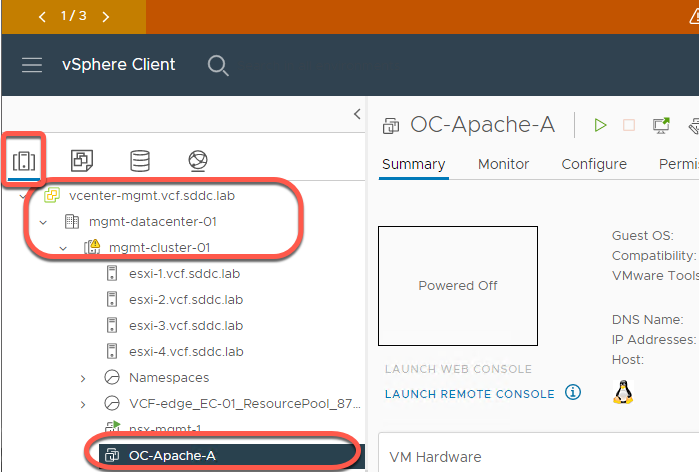

- Select the vCenter Server Hosts and Clusters view and expand out as shown

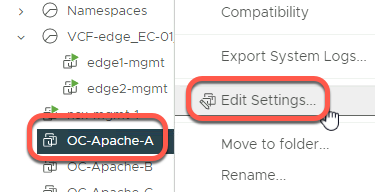

- Right click on OC-Apache-A and click Edit Settings

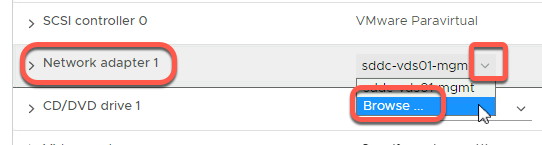

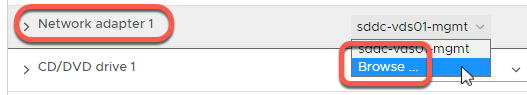

- Click the dropdown next to Network Adapter 1

- Click Browse

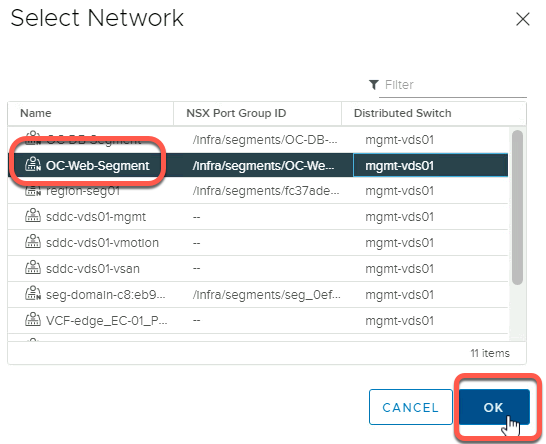

- Observe that the network segments created earlier are visible

- Click OC-Web-Segment

- Click OK

- Click OK

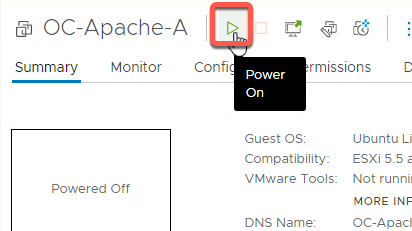

- Power on the OC-Apache-A VM

[Step 5] Attach OC-Apache-B to OC-Web-Segment

- Right click on OC-Apache-B and click Edit Settings

- Click the dropdown next to Network Adapter 1

- Click Browse

- Click OC-Web-Segment

- Click OK

- Click OK

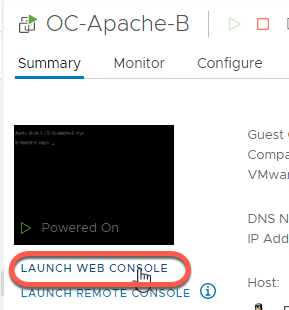

- Power on the OC-Apache-B VM

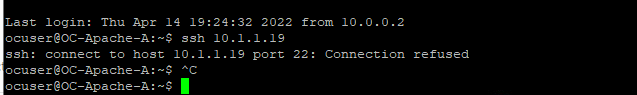

[Step 6] Test basic connectivity – OC-Apache-B

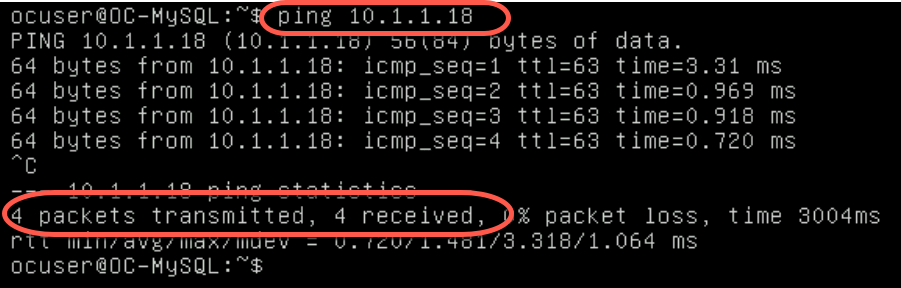

- The IP addresses for the two VMs on the segment to be tested are:

- OC-Apache-A – 10.1.1.18

- OC-Apache-B – 10.1.1.19

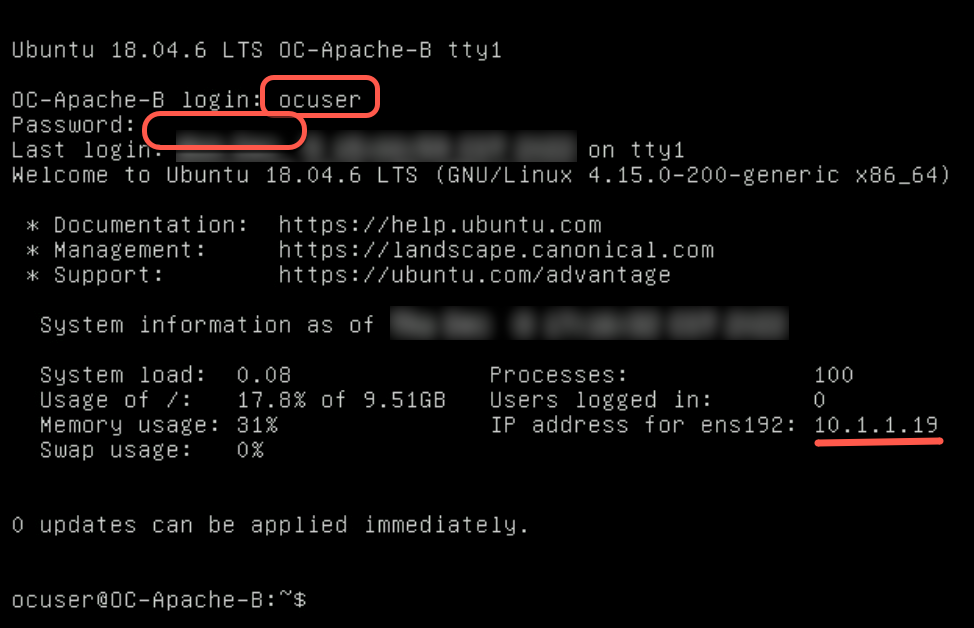

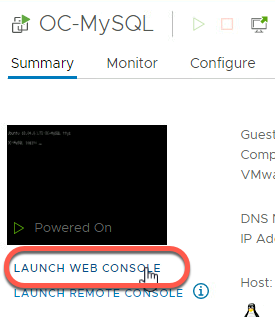

- Launch the web console for OC-Apache-B. You may need to press Enter within the console window to get a login prompt

- Log in with the username ocuser and the password VMware123!

- Verify the IP Address configured is 10.1.1.19

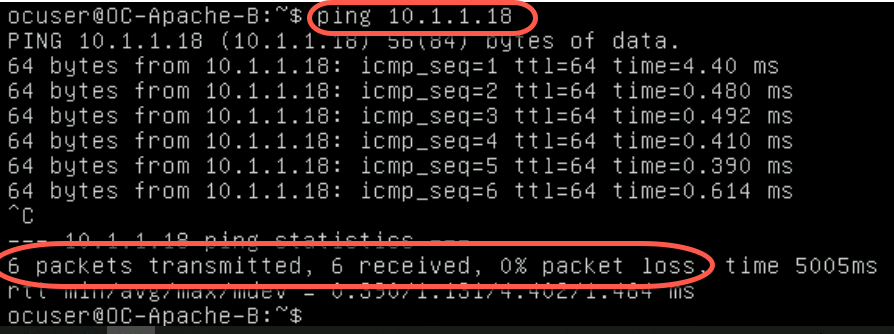

- Enter the following command to verify network connectivity with 10.1.1.18 (OC-Apache-A)

ping 10.1.1.18

- Type Control-C to stop the ping command

- Verify there is no packet loss while pinging OC-Apache-A from OC-Apache-B.

This verifies network communication between both VMs over the recently added segment.
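To script this check instead of reading the output by eye, the summary line that ping prints can be parsed. A minimal sketch, assuming a Linux machine with iputils ping that can reach the segment (whether Python is available on the OpenCart VMs is an assumption); the regular expression also accepts the BSD-style "packets received" wording, but other ping implementations, including Windows ping, format this line differently.

```python
import re
import subprocess

# Matches e.g. "4 packets transmitted, 4 received, 0% packet loss, time 3004ms"
SUMMARY = re.compile(
    r"(\d+) packets transmitted, (\d+) (?:packets )?received.*?([\d.]+)% packet loss"
)

def packet_loss(ping_output: str) -> float:
    """Return the packet-loss percentage from ping's summary line."""
    match = SUMMARY.search(ping_output)
    if match is None:
        raise ValueError("no ping summary line found")
    return float(match.group(3))

def ping_ok(host: str, count: int = 4) -> bool:
    """Send a fixed number of echo requests (no Control-C needed) and require 0% loss."""
    result = subprocess.run(
        ["ping", "-c", str(count), host],
        capture_output=True, text=True, check=False,
    )
    try:
        return packet_loss(result.stdout) == 0.0
    except ValueError:
        return False
```

Run from OC-Apache-B, `ping_ok("10.1.1.18")` would return True when the segment is passing traffic. Using `-c` bounds the test so it ends on its own rather than waiting for Control-C.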

[Step 7] View NSX OC-Web-Segment in vCenter Server

- Using the Chrome bookmarks, access the vCenter Server Web Client by navigating to Managed bookmarks->Mgmt Domain->Mgmt vCenter

- Launch the vSphere Client and log in to the Mgmt vCenter using the username administrator@vsphere.local and the password VMware123!

- Click on the networking icon and expand the menu on the left-hand side of the vSphere Web Client

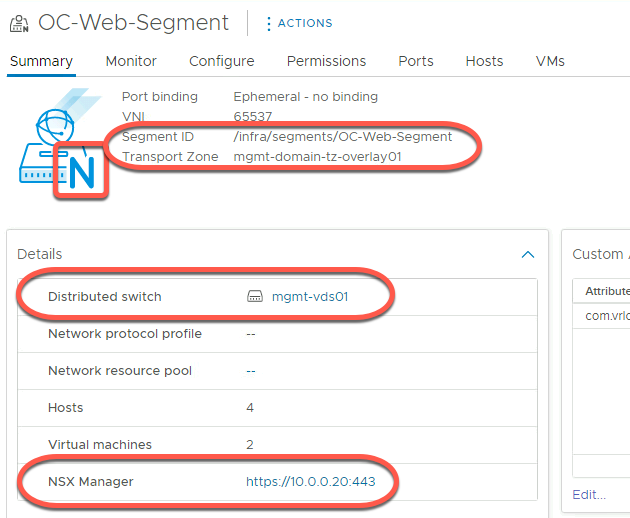

- Click on OC-Web-Segment

- Note the following:

- The “N” denotes this is an NSX segment and not a standard port group

- The segment ID and Transport Zone for the segment are shown

- The vDS the segment is attached to

- With vSphere 7 and NSX-T 3.1 and higher, NSX segments are an extension of the vDS and are visible to the vSphere administrator. NOTE: In previous releases, NSX objects were “Opaque” and not visible to the vSphere administrator.

- The hyperlink for the NSX Manager for the segment

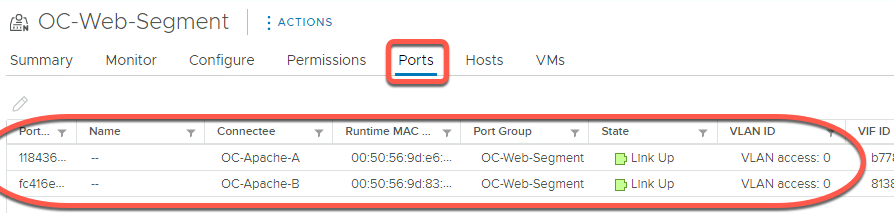

- Click on Ports

- Note each VM attached to the segment has a port assigned. Other details, such as the MAC address and VLAN ID, are also displayed

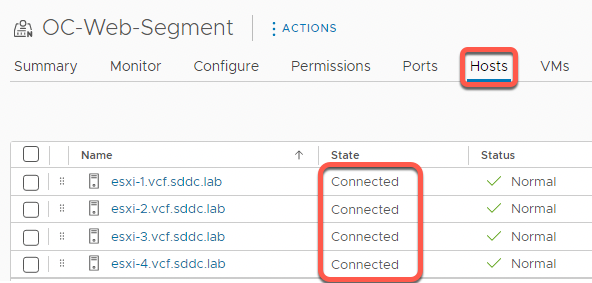

- Click on the Hosts tab

- Note the OC-Web-Segment is connected on each ESXi host in the transport zone. When a segment is created, it is accessible to all hosts in the transport zone.

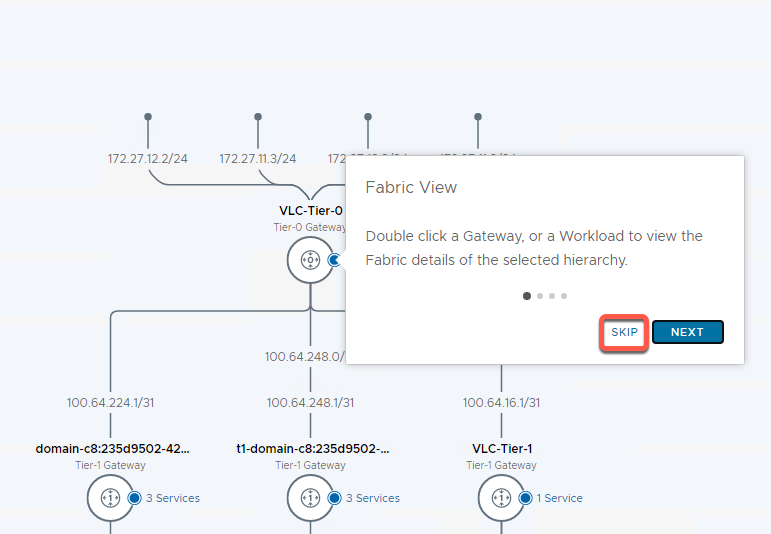

[Step 8] Discover the Network Topology

- On the Holo-Console, open a new tab in the Chrome browser

- Click the Managed bookmarks folder in the bookmark bar then select Mgmt Domain->Mgmt NSX

- Log into NSX Manager as the user admin with the password VMware123!VMware123!

- Click the Networking tab

- Select Network Topology from the left-hand side menu

- Click Skip to close the mini-tour if needed.

- Locate the new segments quickly by clicking, in the Overview panel at the bottom of the screen, the area that contains a set of stand-alone boxes

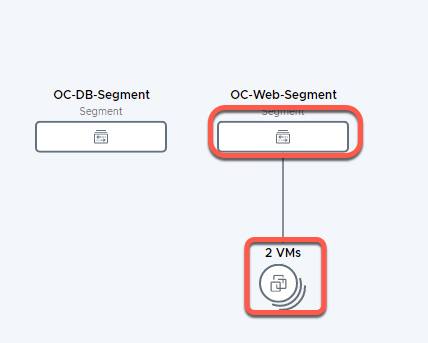

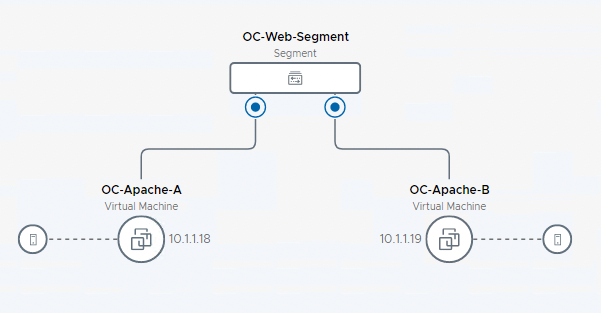

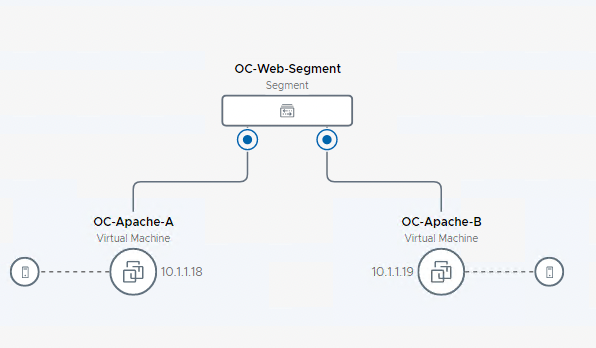

- Locate the OC-Web-Segment on the topology view.

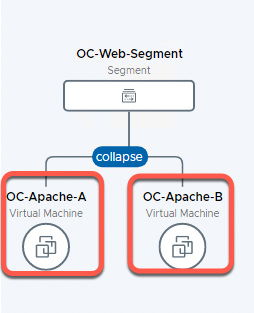

- Click on 2 VMs under OC-Web-Segment to expand the view

- Note the two VMs previously configured on the OC-Web-Segment

[Lab Summary]

This lab demonstrated how NSX can be utilized to quickly and securely provision L2 and L3 services on existing infrastructure. This enables:

- Delivering the network services an application needs together with the application itself

- Eliminating delays with traditional network provisioning processes, which can take days to months.

- Empowering operations staff to deploy approved networks or retain control with the network admin team.

- Building a virtualized networking foundation that eases workload migration to other VMware Cloud properties for Disaster Recovery or Cloud Bursting.

This lab usually takes less than 15 minutes to complete. How does this compare to your experience in getting a single subnet provisioned for VM use?

Lab 2: View packet flow within a host

This lab demonstrates the use of a powerful diagnostic and visualization tool in NSX known as Traceflow to view traffic moving between virtual machines on the same host and same segment. Subsequent labs will examine traffic between VMs running on different hosts on the same segment, and VMs communicating between segments on the same and different hosts.

Traceflow injects packets at the point where a VM connects to a vSphere Distributed Switch (VDS) port. It provides observation points along the packet’s path as it traverses physical and logical entities (such as ESXi hosts, logical switches, and logical routers) in the overlay and underlay network. This makes it possible to identify the path a packet takes to reach its destination, or where a packet is dropped along the way. Each entity reports its handling of the packet on input and output, which makes troubleshooting easier.

Keep in mind that Traceflow is not the same as a ping request/response that goes from guest-VM stack to guest-VM stack. What Traceflow does is observe a marked packet as it traverses the overlay network. Each packet is monitored as it crosses the overlay network until it reaches and is deliverable to the destination guest VM. However, the injected Traceflow packet is never actually delivered to the destination guest VM. This means that a Traceflow can be successful even when the guest VM is powered down.

Note: Until a VM has been powered on after being attached to an NSX segment, the NSX control plane does not know which host to use to inject packets with that VM as the source, so the Traceflow test fails. Once the VM has been powered on at least once, on any host on the segment, NSX Manager keeps track of the host where the VM last ran.

[Step 1] Setup VMs for test

This step ensures the participant can observe packet flow between two VMs on the same host by moving one VM, if necessary, to co-locate it with the other. The subsequent lab moves a VM to show communication between hosts.

- Using the Chrome bookmarks, access the vCenter Server Web Client by navigating to Managed bookmarks->Mgmt Domain->Mgmt vCenter

- Launch the vSphere Client and log in to the Mgmt vCenter using the username administrator@vsphere.local and the password VMware123!

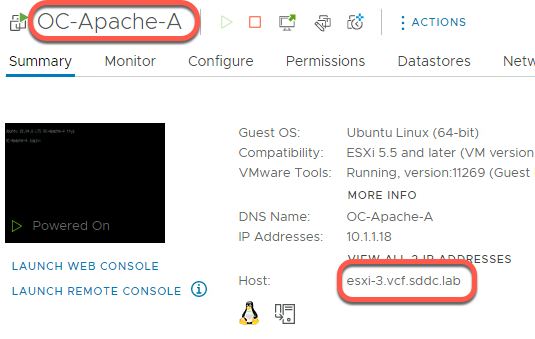

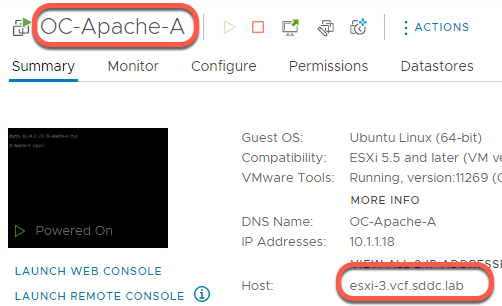

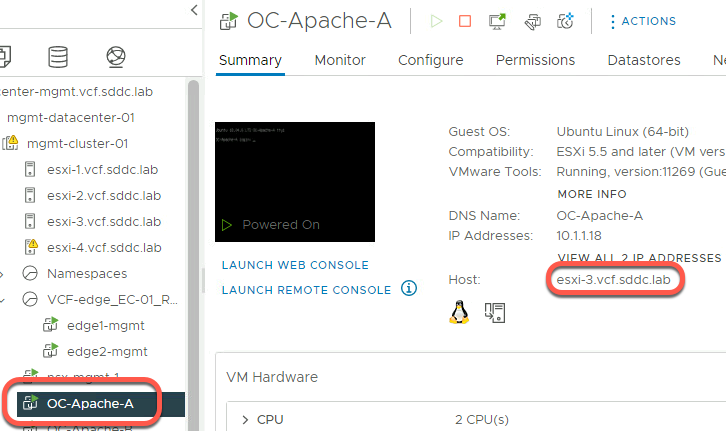

- From the Hosts and Clusters view, click on OC-Apache-A to determine which ESXi host the VM is running on

In this example, OC-Apache-A is running on host esxi-3.vcf.sddc.lab:

- Click on OC-Apache-B to determine which ESXi host the VM is running on

- If the two VMs (OC-Apache-B and OC-Apache-A) are not on the same host, initiate a vMotion to move them to the same host.

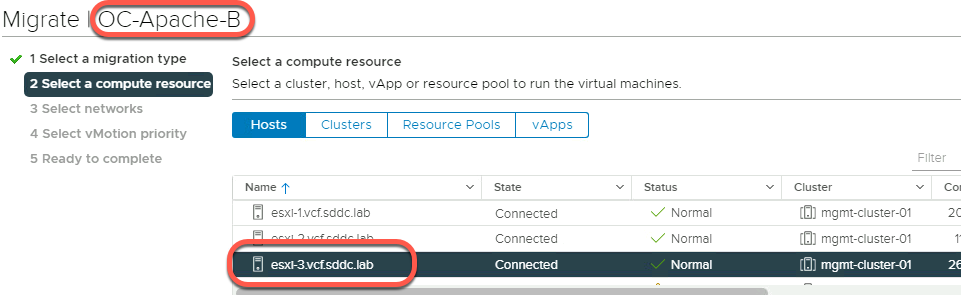

To perform the vMotion, right click on the VM and select Migrate.

Click Next to change the compute resource only

Select the ESXi host to migrate to, then click Next

Click Next to accept the default network selection

Click Next to accept the default vMotion priority

Click Finish to perform the migration

The image below shows OC-Apache-B being migrated to esxi-3.vcf.sddc.lab, the host OC-Apache-A was shown to be running on earlier

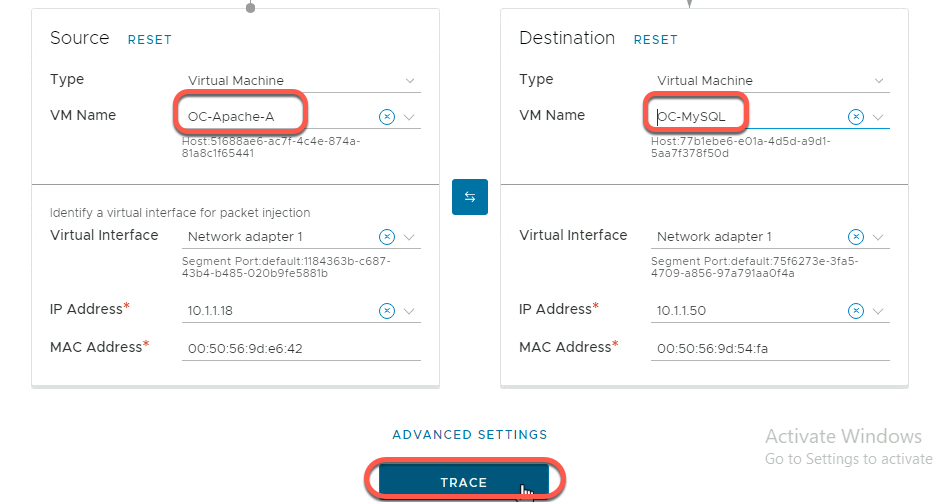

[Step 2] Test packet flow

- On the Holo-Console, open a new tab in the Chrome browser

- Click the Managed bookmarks folder in the bookmark bar then select Mgmt Domain->Mgmt NSX

- Log into NSX Manager as the user admin with the password VMware123!VMware123!

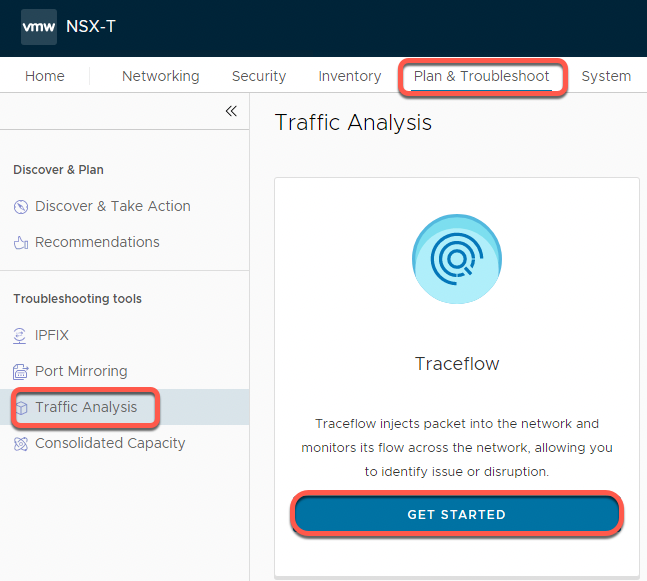

- Click Plan and Troubleshoot on the top menu bar

- Click on Traffic Analysis

- Click Get Started on the Traceflow box

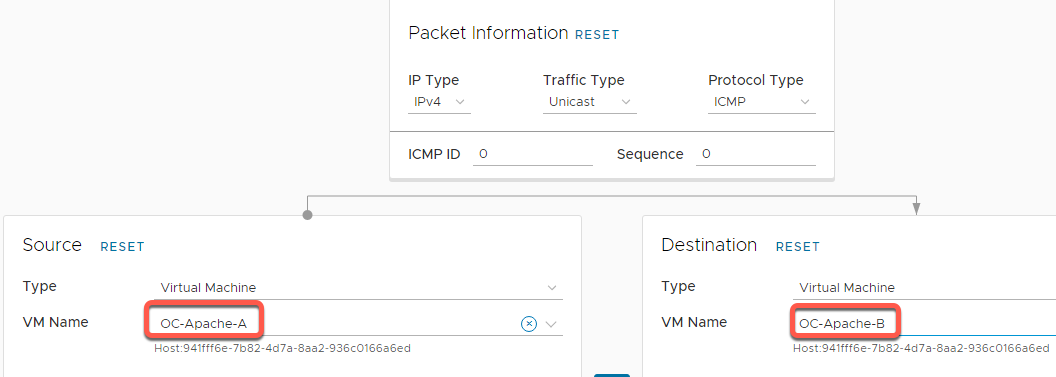

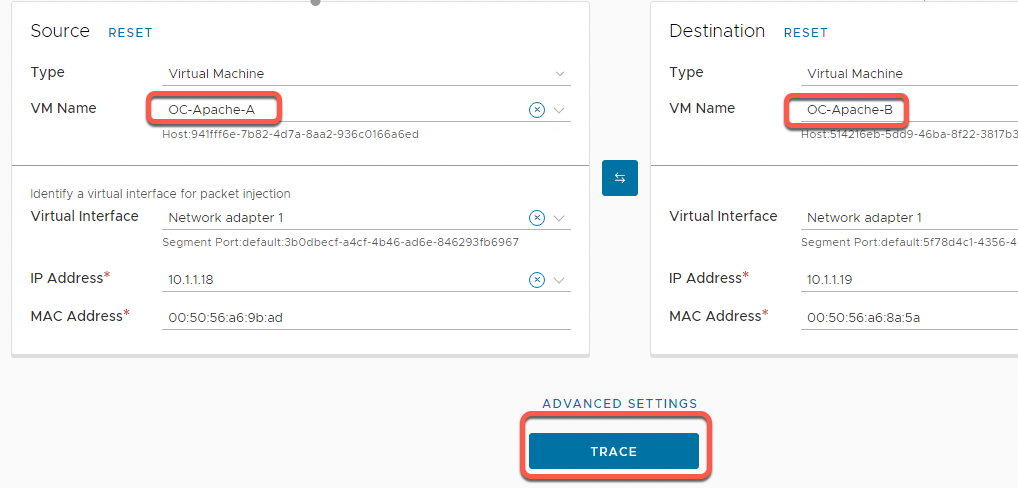

- Select the dropdown for the Source VM Name and select OC-Apache-A

- Select the dropdown for the Destination VM Name and select OC-Apache-B

- Scroll down and click Trace

- The path the packets take is shown on the resulting topology view.

In this example, packets moved from OC-Apache-A to OC-Apache-B via the OC-Web-Segment

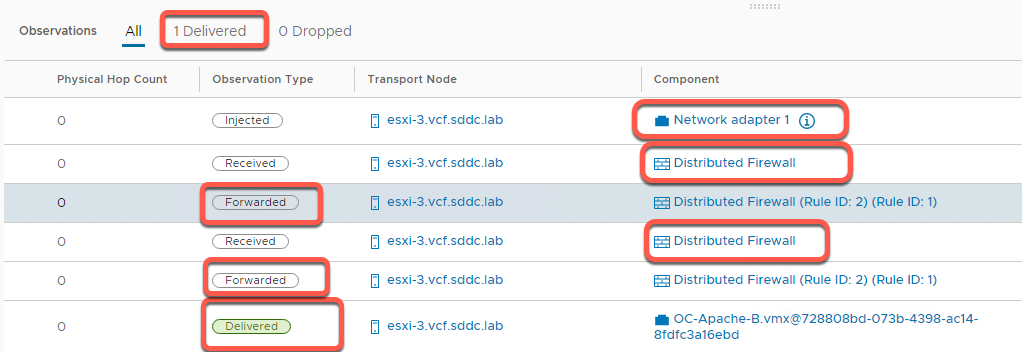

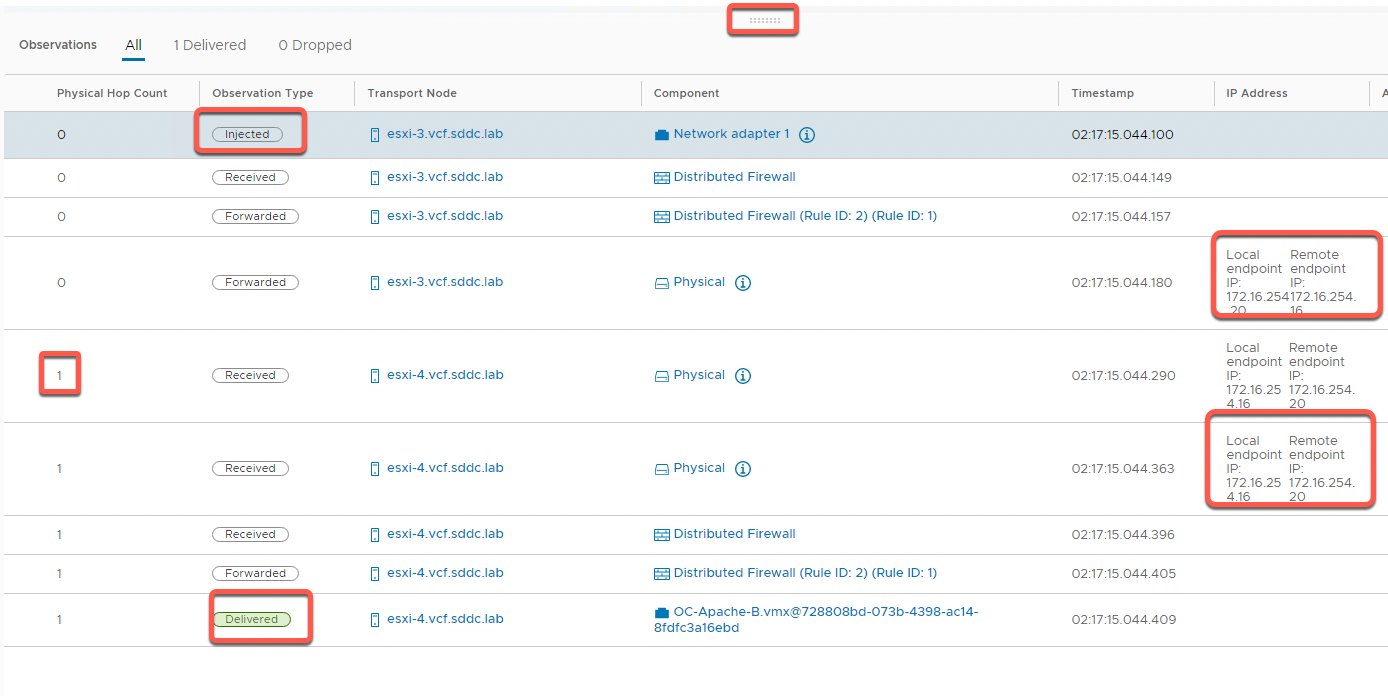

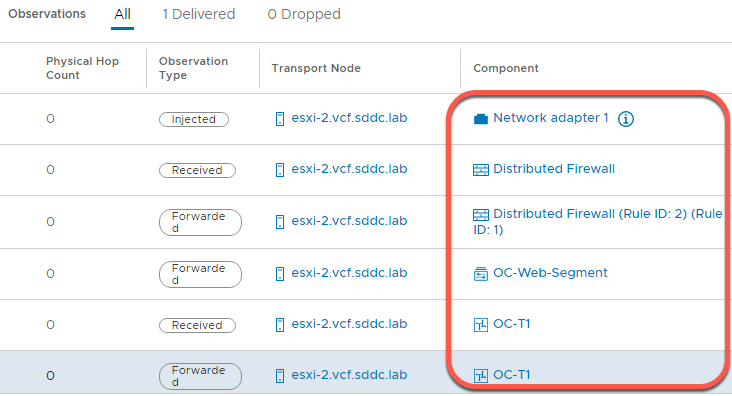

- In the Observations panel, observe the following

- One packet was delivered

- The physical hop count is zero, indicating that the packet did not leave the host

- The packet was injected at the network adapter for OC-Apache-A virtual machine

- It is then received at the distributed firewall at the VDS port for OC-Apache-A

- With no rule blocking, the packet is then forwarded on from the sending VDS port

- The packet is then received on the distributed firewall at the receiving VDS port for OC-Apache-B

- With no rule blocking forwarding, the packet is then forwarded to the destination

- The last step shows the packet being delivered to the network adapter for the OC-Apache-B VM
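The observation sequence above can be thought of as an ordered list of records. The sketch below is a hypothetical data model (the field names, kinds, and component labels are illustrative and are not the NSX Traceflow API schema); it shows how a delivered-or-dropped verdict, the point of any drop, and the physical hop count can be read from such a list. The host name follows the example above, where both VMs ran on esxi-3.

```python
from dataclasses import dataclass
from typing import List, Optional

@dataclass
class Observation:
    host: str               # transport node that reported the observation
    component: str          # e.g. vNIC, distributed firewall, segment, router
    kind: str               # "injected", "received", "forwarded", "delivered", or "dropped"
    physical_hops: int = 0  # physical hop count reported with the observation

def summarize(observations: List[Observation]) -> dict:
    """Report whether the traced packet was delivered, where it stopped, and hop count."""
    drop: Optional[Observation] = next((o for o in observations if o.kind == "dropped"), None)
    delivered = any(o.kind == "delivered" for o in observations)
    return {
        "delivered": delivered and drop is None,
        "dropped_at": f"{drop.component} on {drop.host}" if drop else None,
        "physical_hop_count": max((o.physical_hops for o in observations), default=0),
        "hosts": sorted({o.host for o in observations}),
    }

# The same-host trace from this lab, expressed in the hypothetical model.
lab2 = [
    Observation("esxi-3", "vNIC OC-Apache-A", "injected"),
    Observation("esxi-3", "Distributed Firewall (OC-Apache-A port)", "received"),
    Observation("esxi-3", "OC-Web-Segment", "forwarded"),
    Observation("esxi-3", "Distributed Firewall (OC-Apache-B port)", "received"),
    Observation("esxi-3", "vNIC OC-Apache-B", "delivered"),
]
print(summarize(lab2))
```

For the same-host trace, every observation comes from one host and the physical hop count stays at zero, matching the Observations panel. A firewall drop would instead show up as the last record, which is how Traceflow pinpoints where a packet stops.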

[Lab 2 Summary]

Lab 2 shows two very specific capabilities.

- Traceflow provides a powerful tool for visualizing and diagnosing NSX overlay networks. You will use Traceflow in several other communication scenarios in the remainder of this lab.

- The second point may not have been immediately obvious. While a packet was sent between two VMs on the same host and the same subnet, it crossed two firewalls. In traditional networking, forcing a packet to traverse a firewall requires separate subnets or complex traffic steering rules. With NSX, the distributed firewall is present on every VDS port. You will explore the distributed firewall in more detail in Module 2.

Lab 3: View packet flow between hosts

This lab uses the Traceflow capability in NSX to view traffic moving between virtual machines on different hosts on the same segment.

[Step 1] Setup VMs for test

- Using the Chrome bookmarks, access the vCenter Server Web Client by navigating to Managed bookmarks->Mgmt Domain->Mgmt vCenter

- Launch the vSphere Client and log in to the Mgmt vCenter using the username administrator@vsphere.local and the password VMware123!

- From the hosts and clusters view, click on OC-Apache-A to determine which ESXi host it is running on. In this example, OC-Apache-A is running on host esxi-3.vcf.sddc.lab

- Next click on OC-Apache-B and determine the host it is running on.

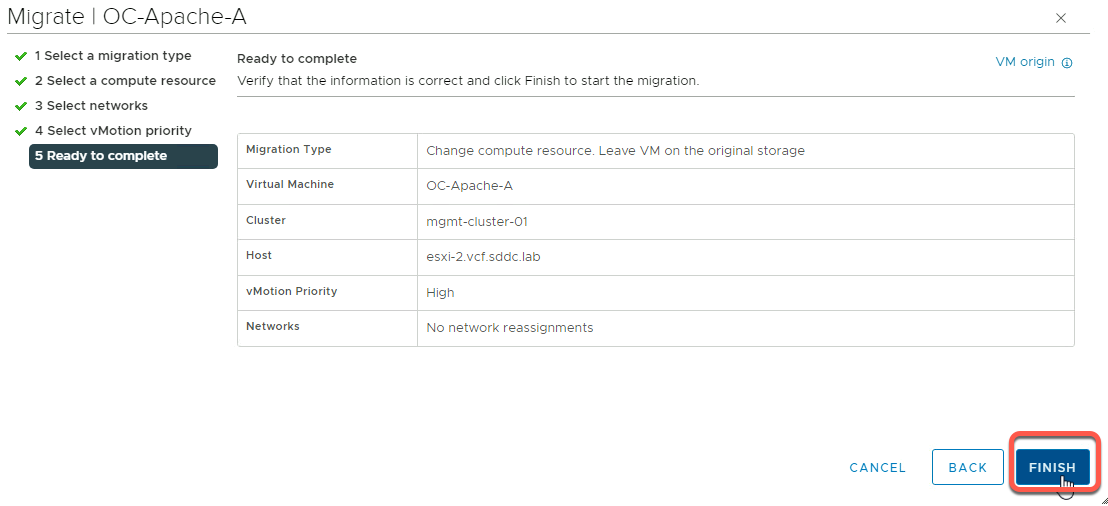

- If both VMs (OC-Apache-A and OC-Apache-B) are running on the same ESXi host, initiate a vMotion to move one of the VMs to a different host.

To perform the vMotion, right click on the VM and select Migrate.

Click Next to change the compute resource only

Select the ESXi host to migrate to, then click Next

Click Next to accept the default network selection

Click Next to accept the default vMotion priority

Click Finish to perform the migration

[Step 2] View packet flow

- On the Holo-Console, open a new tab in the Chrome browser

- Click the Managed bookmarks folder in the bookmark bar then select Mgmt Domain->Mgmt NSX

- Log into NSX Manager as the user admin with the password VMware123!VMware123!

- Click Plan and Troubleshoot

- Click Traffic Analysis

- Click Get Started on the Traceflow box

- Select the dropdown for the Source VM Name and select OC-Apache-A

- Select the dropdown for the Destination VM Name and select OC-Apache-B

- Scroll down and click Trace

- The path the packets take is shown on the resulting topology view.

In this example, packets moved from OC-Apache-A to OC-Apache-B via the OC-Web-Segment

- Click the X to close the “multiple physical received observations” banner if it is displayed. This banner is expected in a nested lab environment and can be safely ignored


- Resize the observations window by dragging the center icon upward

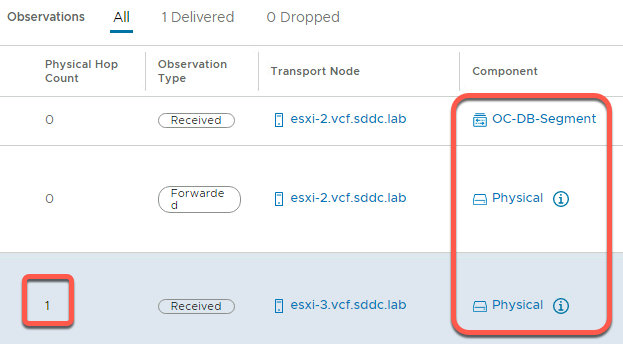

- In the Observations panel, note the following

- One packet was delivered as expected. There is no change in firewall behavior from the last example

- Because OC-Apache-B is now on a different host, the packet crosses the physical layer and increments the hop count

- The Local Endpoint IP and Remote Endpoint IP are shown for esxi-3, with the local and remote values reversed in the observations from esxi-4. This is an example of NSX “Tunnel Endpoints” (TEPs) in use as opposite ends of an overlay network path between hosts.

[Step 3] View Host TEP information

- On the Holo-Console, open a new tab in the Chrome browser

- Click the Managed bookmarks folder in the bookmark bar then select Mgmt Domain->Mgmt NSX

- Log into NSX Manager as the user admin with the password VMware123!VMware123!

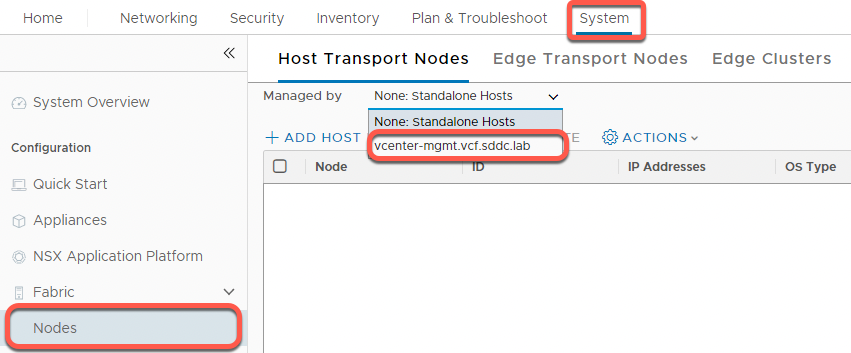

- On the top menu bar click System

- In the left menu click Fabric

- Click Nodes

- On the Managed By field select vcenter.mgmt.vcf.sddc.lab from the dropdown

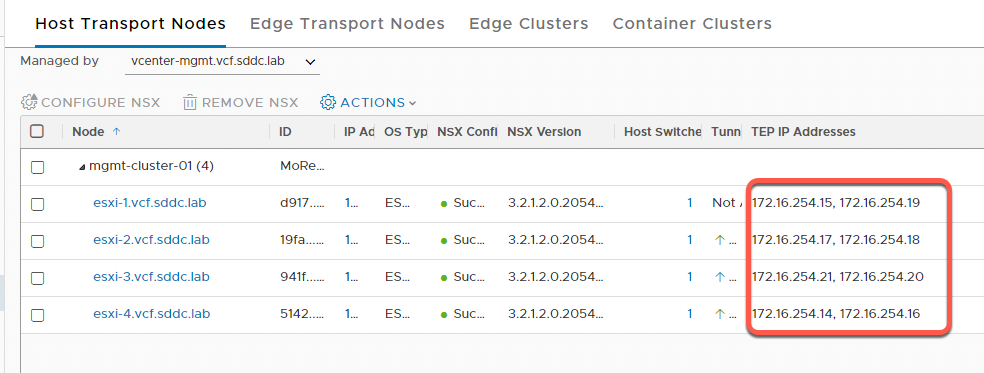

- Expand the mgmt-cluster

- Notice the TEP IP Addresses column. You can widen the browser and resize columns as needed to see the TEP addresses. Each host has two TEP interfaces in the Host TEP VLAN. In the Holodeck lab configuration, Host TEP addresses are automatically allocated using DHCP on the 172.16.254.0/24 network.

- NSX Manager is responsible for updating all transport nodes in the transport zone any time a VM powers on or is migrated. This maintains the mapping of each VM to the TEP address used to send overlay traffic to that VM. Acting as a TEP, the NSX-prepared vSphere Distributed Switch decapsulates overlay traffic destined for a VM and encapsulates the VM's outgoing traffic onto the overlay. This is transparent to both the VM and the underlay network.
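The VM-to-TEP mapping that NSX Manager distributes can be modeled as a lookup table consulted by the sending host. This is a simplified, hypothetical model: the TEP addresses are made-up values in the 172.16.254.0/24 Host TEP network, and it ignores that a real host has two TEPs and that NSX tracks MAC and IP bindings per segment.

```python
import ipaddress
from typing import Dict

HOST_TEP_NET = ipaddress.ip_network("172.16.254.0/24")

class TepTable:
    """Hypothetical model of the VM-to-TEP mapping pushed to each transport node."""

    def __init__(self) -> None:
        self._vm_to_tep: Dict[str, str] = {}

    def vm_placed(self, vm: str, host_tep: str) -> None:
        """Record a power-on or vMotion; the newest location replaces the old one."""
        if ipaddress.ip_address(host_tep) not in HOST_TEP_NET:
            raise ValueError(f"{host_tep} is not in the Host TEP network")
        self._vm_to_tep[vm] = host_tep

    def outer_destination(self, local_tep: str, dst_vm: str) -> str:
        """Return 'local' for same-host delivery, otherwise the remote TEP to tunnel to."""
        dst_tep = self._vm_to_tep.get(dst_vm)
        if dst_tep is None:
            raise KeyError(f"location of {dst_vm} is unknown (VM never powered on?)")
        return "local" if dst_tep == local_tep else dst_tep

table = TepTable()
esxi3_tep, esxi4_tep = "172.16.254.13", "172.16.254.14"   # hypothetical TEP IPs
table.vm_placed("OC-Apache-A", esxi3_tep)
table.vm_placed("OC-Apache-B", esxi3_tep)
print(table.outer_destination(esxi3_tep, "OC-Apache-B"))   # same host: local
table.vm_placed("OC-Apache-B", esxi4_tep)                   # vMotion to esxi-4
print(table.outer_destination(esxi3_tep, "OC-Apache-B"))   # tunneled to 172.16.254.14
```

The KeyError branch mirrors the earlier note that Traceflow fails for a VM that has never been powered on: without a recorded location there is no TEP to send toward. After the vMotion, only the table entry changes; the VMs' own IP addresses stay the same.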

[Lab 3 Summary]

Lab 3 extends the concept of overlay networks to separate hosts. NSX Manager keeps all hosts participating in a transport zone up to date on which Host TEP address to use to send packets to a specific VM. The concept of overlay networking is very powerful, as the IP network information of the communicating virtual machines is completely independent of the underlying transport network. In this example, the two ESXi hosts communicate over a 172.16.254.0/24 subnet for any overlay traffic on any segment running on these hosts. The underlying ESXi hosts could also be on different subnets, for example in different rows of a datacenter, different buildings on a campus, or different datacenters in a local region. Overlay networking removes the artificial limits that various datacenter IP strategies place on where a given workload can run.

Lab 4: Adding router connectivity

In Lab 1, two segments were created. These segments are not connected to each other or to other parts of the network. This lab adds an NSX Tier-1 (T1) router. Connecting the OC-Web-Segment and OC-DB-Segment to this router allows communication between the two segments. Finally, this lab connects the T1 router to the existing Tier-0 (T0) router, allowing communication outside the lab environment.

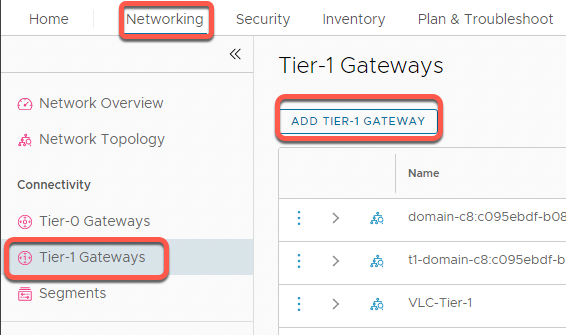

[Step 1] Create a T1 Router

- On the Holo-Console, open a new tab in the Chrome browser

- Click the Managed bookmarks folder in the bookmark bar then select Mgmt Domain->Mgmt NSX

- Log into NSX Manager as the user admin with the password VMware123!VMware123!

- Click Networking

- Click Tier-1 Gateways in the left navigation panel

- Click on Add Tier-1 Gateway

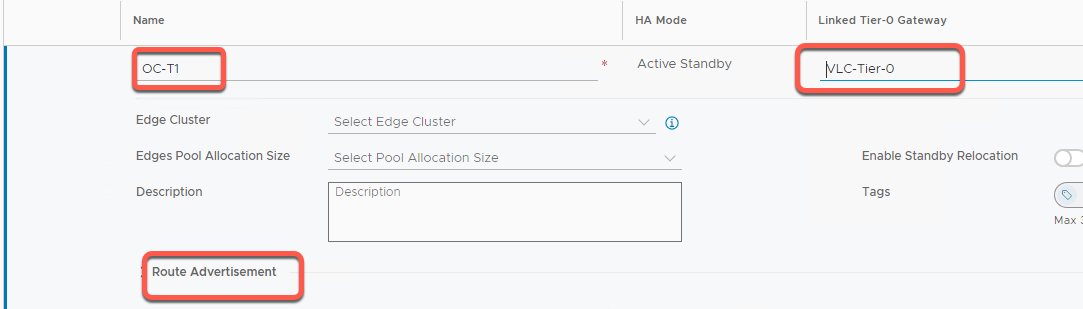

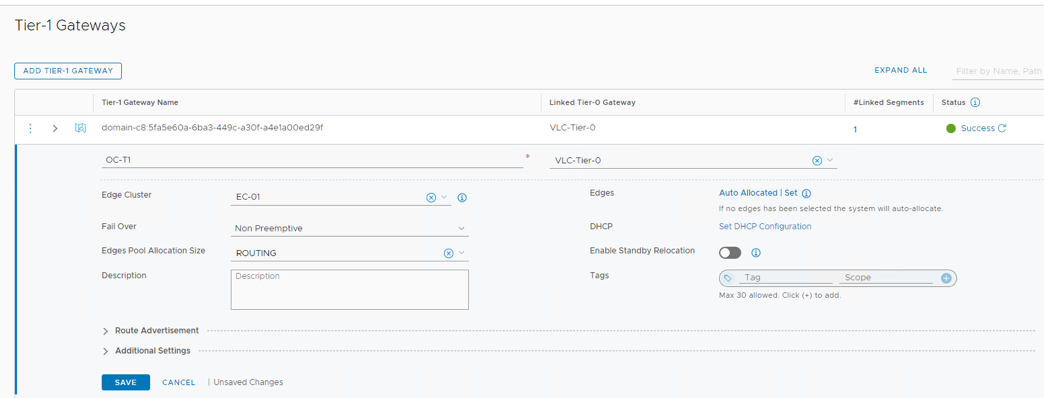

- Name the gateway OC-T1 and click Select Tier-0 Gateway and select VLC-Tier-0.

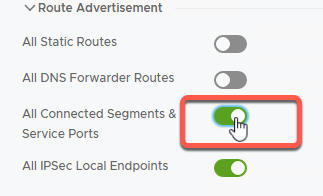

- Expand Route Advertisement

- Enable All Connected Segments & Service Ports

- Click Save

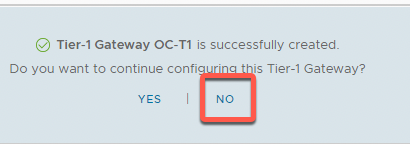

- Click No at the dialog asking, ‘Do you want to continue configuring this Tier-1 Gateway?’

- Click the refresh icon in the Status column until the status shows Success


[Step 2] Connect segments to OC-T1

- On the Holo-Console, open a new tab in the Chrome browser

- Click the Managed bookmarks folder in the bookmark bar then select Mgmt Domain->Mgmt NSX

- Log into NSX Manager as the user admin with the password VMware123!VMware123!

- Click Networking, then click Segments in the left navigation panel

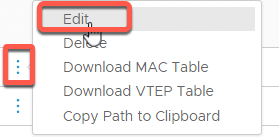

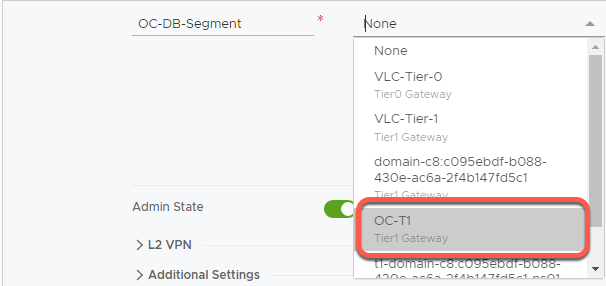

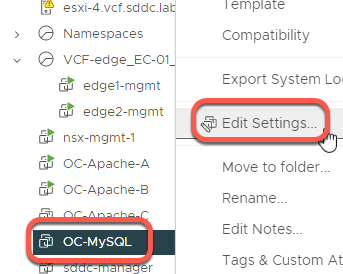

- Click the three dots to the left of OC-DB-Segment then select Edit

- Under Connected Gateway, scroll to find OC-T1 and click to select.

- Click Save

- Click Close Editing

- Click the three dots to the left of OC-Web-Segment then select Edit

- Under Connected Gateway, scroll to find OC-T1 and click to select

- Click Save

- Click Close Editing

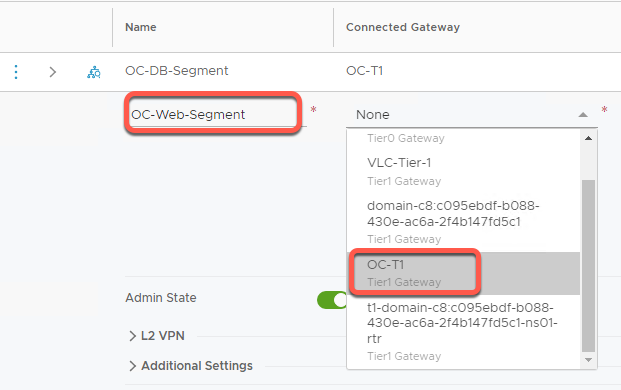

[Step 3] Attach OC-MySQL to segment OC-DB-Segment

- Using the Chrome bookmarks, access the vCenter Server Web Client by navigating to Managed bookmarks->Mgmt Domain->Mgmt vCenter

- Launch the vSphere Client and log in to the Mgmt vCenter using the username administrator@vsphere.local and the password VMware123!

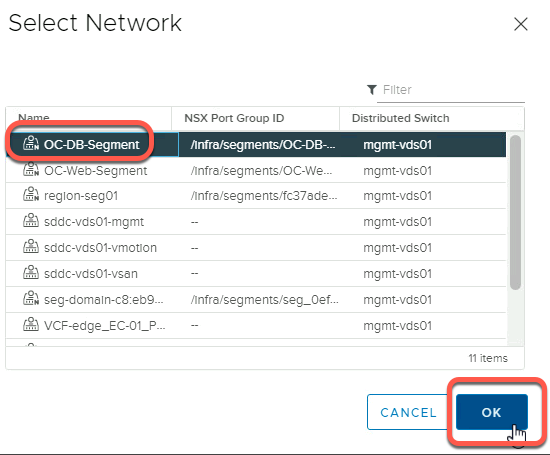

- From the hosts and clusters view, right click on OC-MySQL and click Edit Settings

- Click the dropdown next to Network Adapter 1

- Click Browse

- Click OC-DB-Segment

- Click OK

- Click OK

- Power on the VM

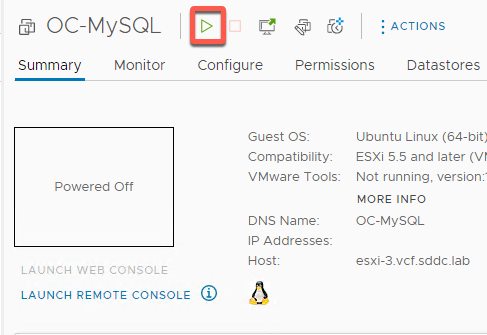

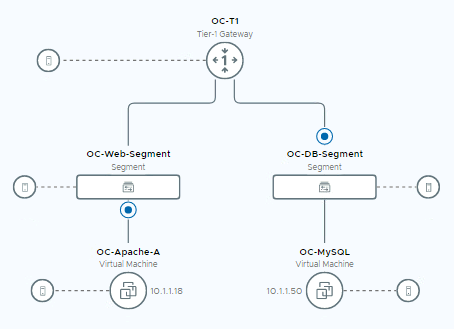

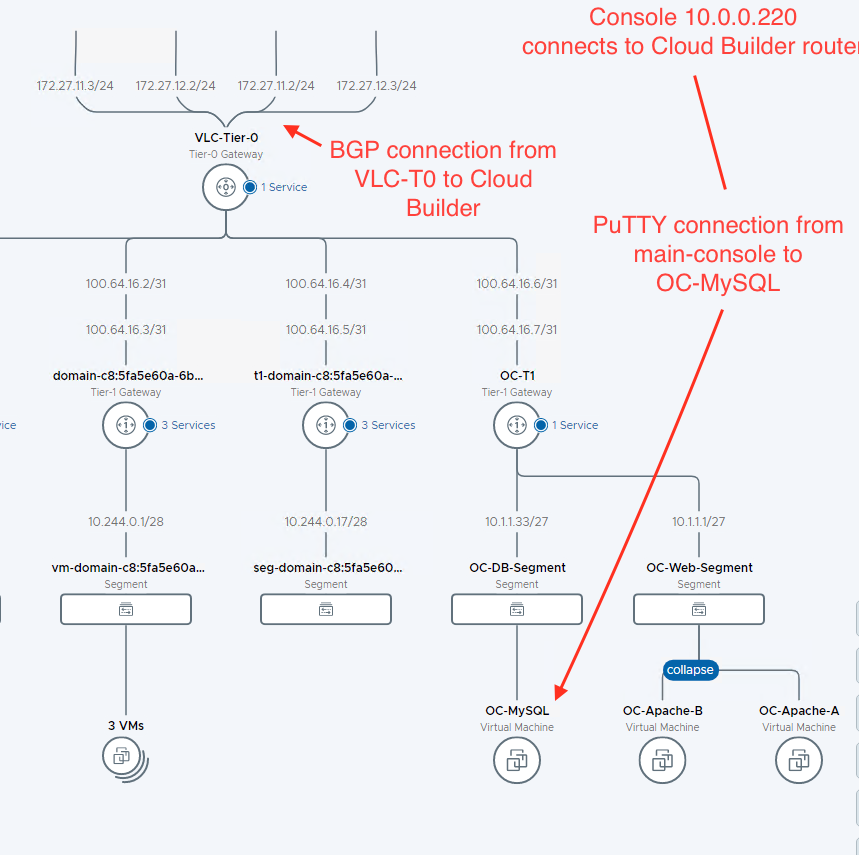

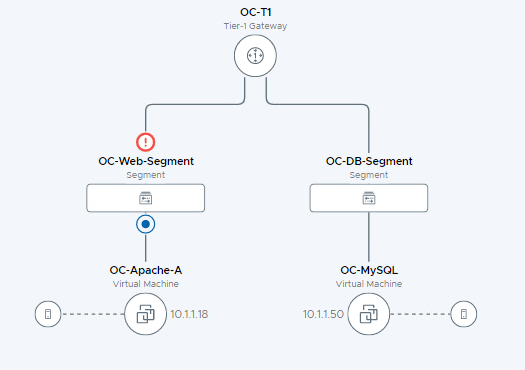

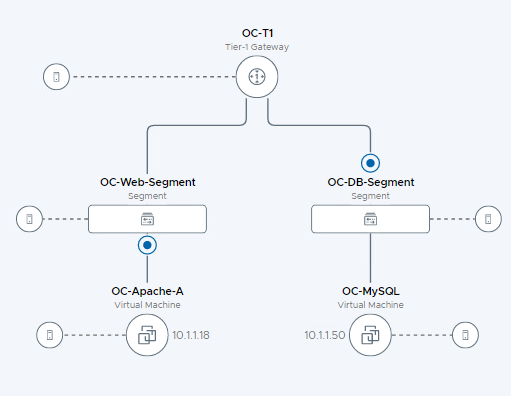

[Step 4] Review network topology

- On the Holo-Console, open a new tab in the Chrome browser

- Click the Managed bookmarks folder in the bookmark bar then select Mgmt Domain->Mgmt NSX

- Log into NSX Manager as the user admin with the password VMware123!VMware123!

- Click Networking

- Click Network Topology

- Click to zoom in to see the OC-T1 Tier-1 Gateway

- Observe the newly created Tier-1 Router and connected segments are visible on the topology map

- Expand the VMs under each segment.

[Step 5] Test Web to DB communications

- Using the Chrome bookmarks, access the vCenter Server Web Client by navigating to Managed bookmarks->Mgmt Domain->Mgmt vCenter

- Launch the vSphere Client and log in to the Mgmt vCenter using the username administrator@vsphere.local and the password VMware123!

- From the hosts and clusters view, click on OC-MySQL

- Power on the OC-MySQL VM if it is not already running

- Wait for the OC-MySQL VM to boot

- Click Launch Web Console

- If a login prompt is not shown, press Enter

- Log in with the username ocuser and the password VMware123!

- Enter the following command

ping 10.1.1.18

- Wait for the ping command to output a few lines. Type Control-C to stop the ping command

- Observe the success of the ping command.

This success indicates communication between the OC-MySQL and OC-Apache-A (10.1.1.18) VMs. For this communication to succeed, the network traffic had to traverse the OC-T1 router configured earlier.
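Conceptually, the OC-T1 router picks an egress for each packet by longest-prefix match over its connected segments, plus a default route toward the linked Tier-0 gateway. A minimal sketch using this lab's subnets; the three-entry table is a simplification (a real NSX routing table holds more entries, such as the links between Tier-1 and Tier-0), and the VLC-Tier-0 label is taken from Step 1.

```python
import ipaddress
from typing import List, Tuple

# Simplified routing table for OC-T1: (prefix, egress). Connected segments come from
# the gateway CIDRs entered in Lab 1; the default route points at the linked Tier-0.
ROUTES: List[Tuple[ipaddress.IPv4Network, str]] = [
    (ipaddress.ip_network("10.1.1.0/27"), "OC-Web-Segment"),
    (ipaddress.ip_network("10.1.1.32/27"), "OC-DB-Segment"),
    (ipaddress.ip_network("0.0.0.0/0"), "VLC-Tier-0"),
]

def lookup(destination: str) -> str:
    """Longest-prefix match: the most specific route containing the address wins."""
    addr = ipaddress.ip_address(destination)
    matches = [(net, egress) for net, egress in ROUTES if addr in net]
    # The default route always matches, so matches is never empty.
    _, egress = max(matches, key=lambda route: route[0].prefixlen)
    return egress

print(lookup("10.1.1.18"))   # OC-Apache-A: OC-Web-Segment
print(lookup("10.1.1.50"))   # OC-MySQL: OC-DB-Segment
print(lookup("8.8.8.8"))     # anything else: VLC-Tier-0
```

Because the distributed part of the router is present on every host, this lookup is performed on the host where the sending VM runs, which is the behavior examined in the next two labs.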

[Lab 4 Summary]

Lab 4 added L3 routing between segments in less than 10 minutes. Compare this to your experience in time taken to get a multi-tier network stood up in your environment.

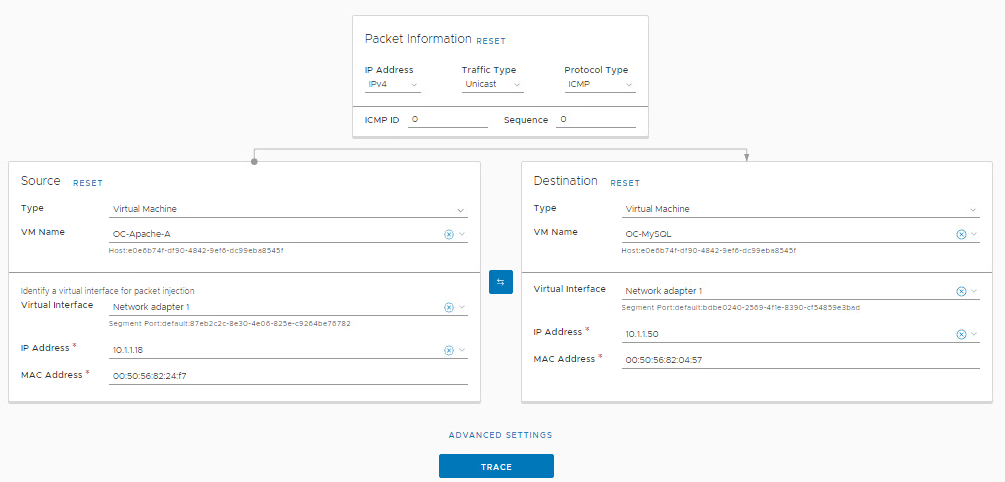

Lab 5: View L3 packet flow within a host

This lab uses the Traceflow feature in NSX to view traffic moving between virtual machines on the same host but on different segments. In most datacenters, this communication requires packets to travel to an external router at the top of the rack, at the end of the row, or in the datacenter core.

[Step 1] Setup VMs for test

- Using the Chrome bookmarks, access the vCenter Server Web Client by navigating to Managed bookmarks->Mgmt Domain->Mgmt vCenter

- Launch the vSphere Client and log in to the Mgmt vCenter using the username administrator@vsphere.local and the password VMware123!

- From the hosts and clusters view, click on OC-Apache-A to determine which ESXi host it is running on. In this example, OC-Apache-A is running on host esxi-3.vcf.sddc.lab

- Next click on OC-MySQL and determine the host it is running on.

- If the two VMs (OC-Apache-A and OC-MySQL) are not running on the same ESXi host, initiate a vMotion to move them to the same host

To perform the vMotion, right click on the VM and select Migrate.

Click Next to change the compute resource only

Select the ESXi host to migrate to, then click Next

Click Next to accept the default network selection

Click Next to accept the default vMotion priority

Click Finish to perform the migration

[Step 2] View Layer 3 communications in NSX Traceflow

- On the Holo-Console, open a new tab in the Chrome browser

- Click the Managed bookmarks folder in the bookmark bar then select Mgmt Domain->Mgmt NSX

- Log into NSX Manager as the user admin with the password VMware123!VMware123!

- Click Plan & Troubleshoot

- Click Traffic Analysis in the left navigation panel

- Click Get Started on the Traceflow box

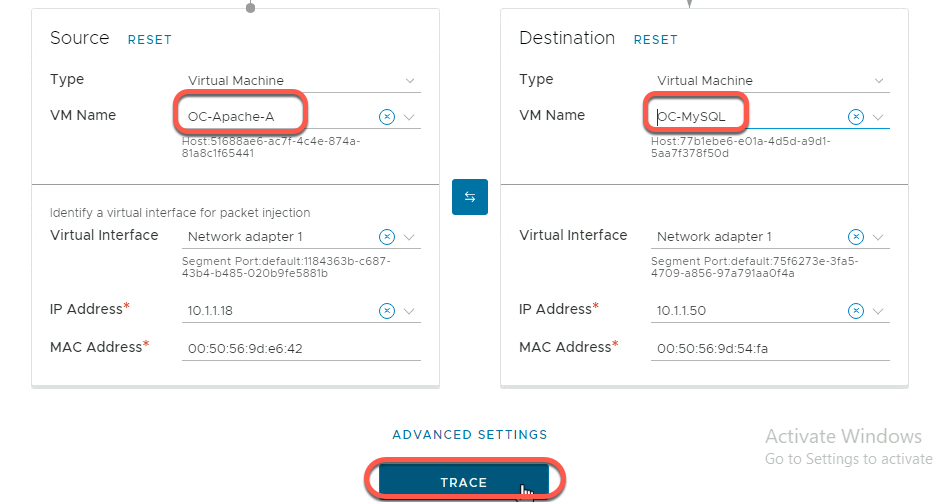

- Configure a Traceflow from OC-Apache-A to OC-MySQL

- Click Trace

- Notice the communication path traverses the OC-T1 router in the Traceflow topology diagram.

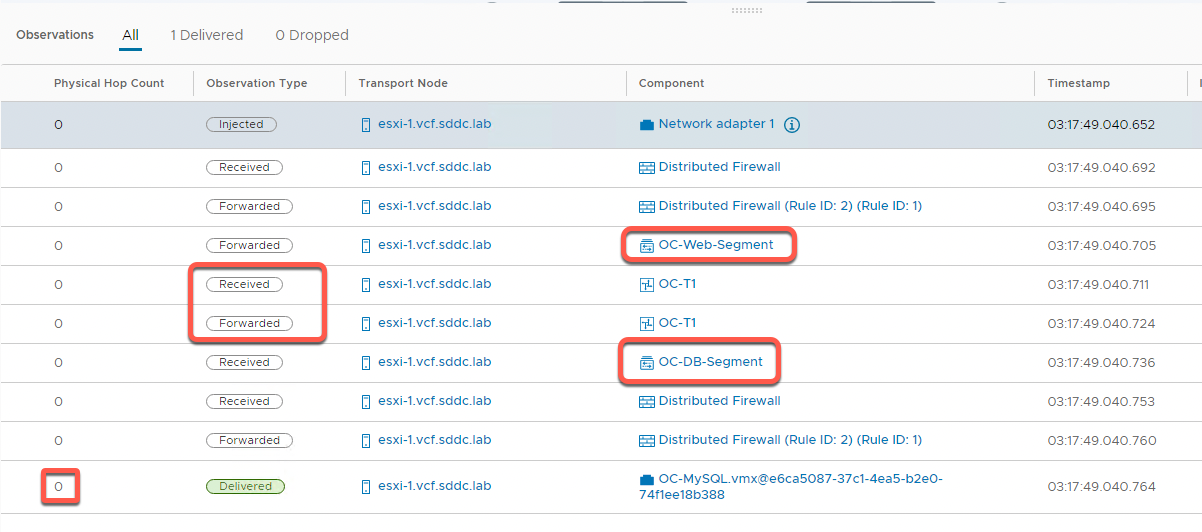

- In the Observations panel, review the following:

- As before, with no firewall rules in place, one packet was delivered

- Notice the packet routed between the OC-Web-Segment and OC-DB-Segment via the OC-T1 router, while never leaving the host as the Physical Hop Count remains zero

[Lab 5 Summary]

Lab 5 is a very simple example of the power of distributed routing in NSX. In a traditional environment, L3 routing happens in the datacenter, somewhere away from the server. There are many different architectures, but each effectively requires packets to leave the host to be routed elsewhere in the datacenter before returning to a VM on the same host. With NSX distributed routing, the routing happens right at the host between different connected segments.

Lab 6: View L3 packet flow between hosts

This lab demonstrates the use of the Traceflow feature in NSX to view traffic moving between virtual machines on different hosts and different segments. In most datacenters, this communication requires packets to move through an external router. This example shows the power of distributed routing.

[Step 1] Setup VMs for test

- Using the Chrome bookmarks, access the vCenter Server Web Client by navigating to Managed bookmarks->Mgmt Domain->Mgmt vCenter

- Launch the vSphere Client and log in to the Mgmt vCenter using the username administrator@vsphere.local and the password VMware123!

- From the hosts and clusters view, click on OC-Apache-A to determine which ESXi host it is running on.

- Next click on OC-MySQL and determine the host it is running on.

- If both VMs (OC-Apache-A and OC-MySQL) are running on the same ESXi host, initiate a vMotion to move one of them to a different host

To perform the vMotion, right click on the VM and select Migrate.

Click Next to change the compute resource only

Select the ESXi host to migrate to, then click Next

Click Next to accept the default network selection

Click Next to accept the default vMotion priority

Click Finish to perform the migration

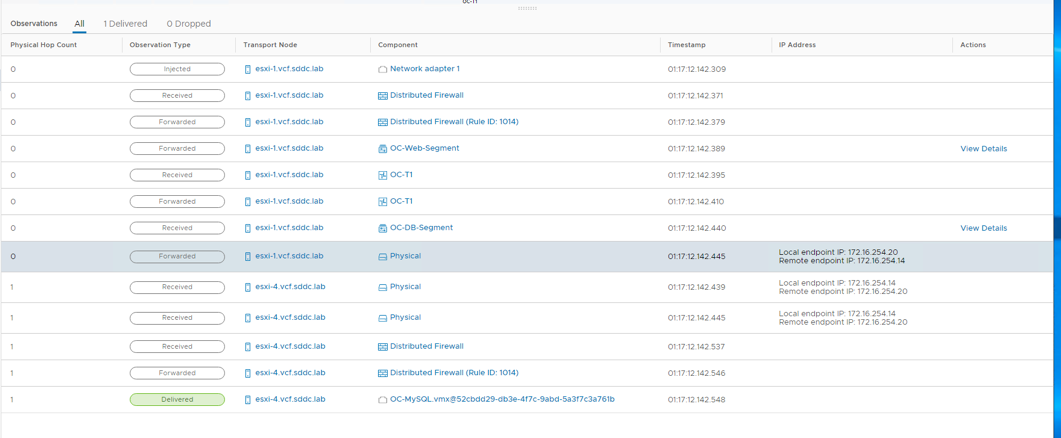

[Step 2] View Layer 3 communications in NSX Traceflow

- On the Holo-Console, open a new tab in the Chrome browser

- Click the Managed bookmarks folder in the bookmark bar then select Mgmt Domain->Mgmt NSX

- Log into NSX Manager as the user admin with the password VMware123!VMware123!

- Click Plan & Troubleshoot

- Click Traffic Analysis in the left navigation panel

- Click Get Started on the Traceflow box

- Configure a Traceflow from OC-Apache-A to OC-MySQL

- Click Trace

- Notice the communication path traverses the OC-T1 router in the Traceflow topology diagram.

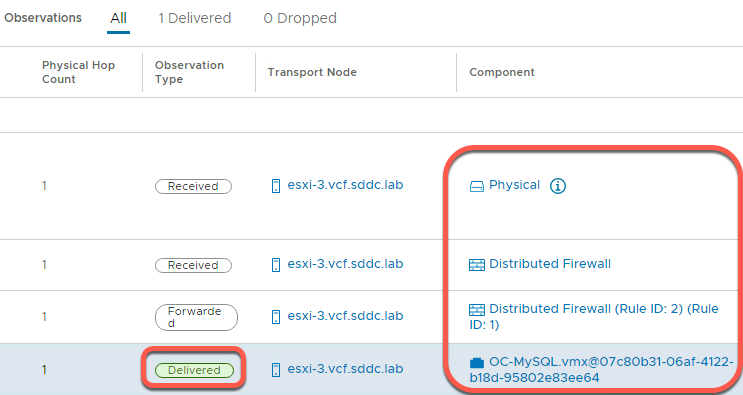

- In the Observations panel, review the following

- One packet was delivered

- The packet was injected at the network adapter for OC-Apache-A virtual machine

- It is then received at the distributed firewall at the VDS port for OC-Apache-A

- With no rule blocking, the packet is then forwarded on from the sending VDS port

- The packet then reaches the OC-T1 router and is forwarded to the OC-DB-Segment

- Since OC-Apache-A and OC-MySQL are running on different ESXi hosts, the physical hop count increases

- The packet is then received on the distributed firewall at the receiving VDS port for OC-MySQL

- With no rule blocking forwarding, the packet is then forwarded to the destination

- The last step shows the packet being delivered to the network adapter for the OC-MySQL VM

[Step 3] Test end to end communications

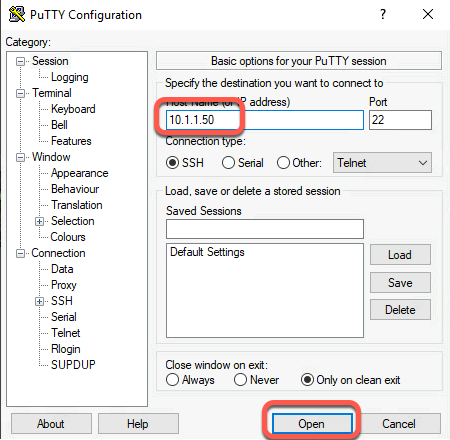

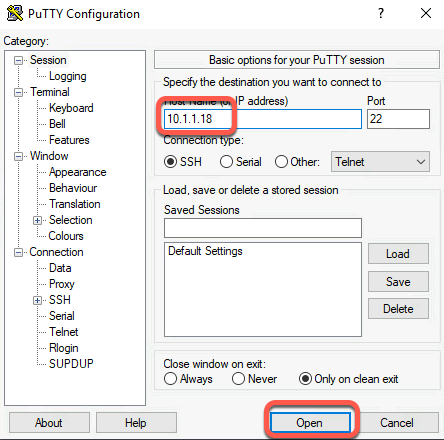

- On the Holo-Console, double click the PuTTY icon on the desktop to start the PuTTY application

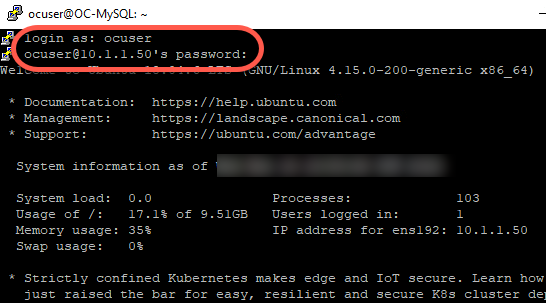

- Enter 10.1.1.50 for the IP address to connect to. This is the IP address for the OC-MySQL VM

- Click Open

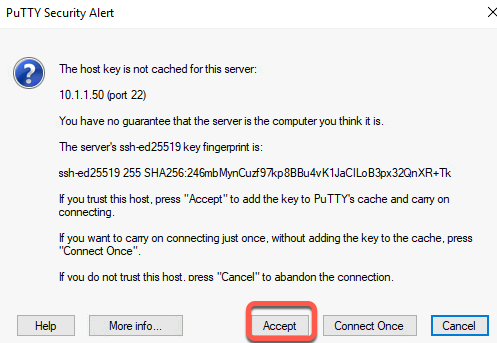

- Click Accept to add the SSH host key to PuTTY’s cache and continue to connect

- If a login prompt does not appear, close the PuTTY window, and restart this step.

- Log in with the username ocuser and the password VMware123!

- Successfully connecting from the Holo-Console to the OC-MySQL VM verifies the entire SDN connection. In this lab configuration, the NSX Edge Cluster connects via BGP to the pod router where the Holo-Console is connected. SSH traffic from the Holo-Console flows to the pod router, over the BGP links to the Tier-0 router, to the OC-T1 router, and to the OC-MySQL VM on OC-DB-Segment, and the return traffic follows the reverse path.
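The SSH login is effectively an end-to-end TCP test through the pod router, the Tier-0 router, and OC-T1. The same reachability check can be scripted with Python's standard socket module. A minimal sketch, assuming Python is available on the machine running it; it only confirms that the TCP handshake on port 22 completes and does not authenticate.

```python
import socket

def tcp_reachable(host: str, port: int = 22, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port completes within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:   # refused, unreachable, or timed out (timeouts are OSError subclasses)
        return False
```

From the Holo-Console, `tcp_reachable("10.1.1.50")` would check SSH on OC-MySQL. A refused connection returns quickly, whereas a routing or firewall problem that silently drops packets shows up as a timeout, which can help narrow down where along the path the failure lies.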

[Lab 6 Summary]

Lab 6 demonstrates packets traveling between the VDS ports of two virtual machines running on different ESXi hosts, across two segments connected by a Tier-1 router. The important distinction is that this router functionality was distributed across all hosts rather than residing on a physical device cabled somewhere else in the datacenter.

Lab 7: Testing the application

This lab verifies the web server VMs and database VM are working correctly prior to moving on to other topics, such as load balancing and security.

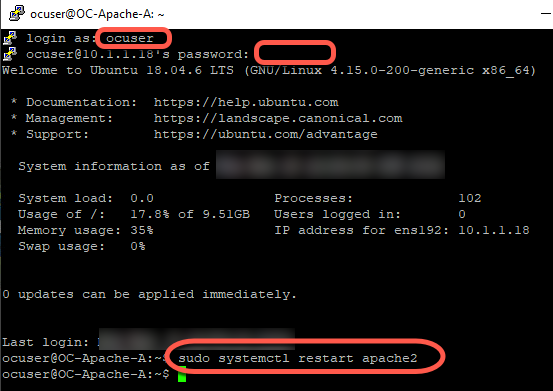

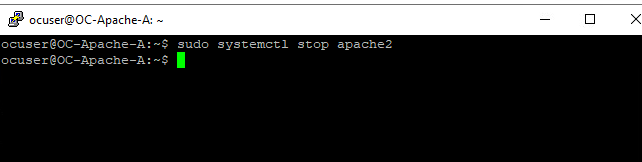

[Step 1] Restart web servers

- On the Holo-Console, double click the PuTTY icon on the desktop to start the PuTTY application

- Enter 10.1.1.18 for the IP address to connect to. This is the IP address for the OC-Apache-A VM

- Click Open

- Click Accept to add the SSH host key to PuTTY’s cache and continue to connect

- If a login prompt does not appear, close the PuTTY window, and restart this step.

- Log in with the username ocuser and the password VMware123!

- Run the following command:

sudo systemctl restart apache2

- Repeat this step for the OC-Apache-B VM using the IP address of 10.1.1.19

[Step 2] Test Opencart app

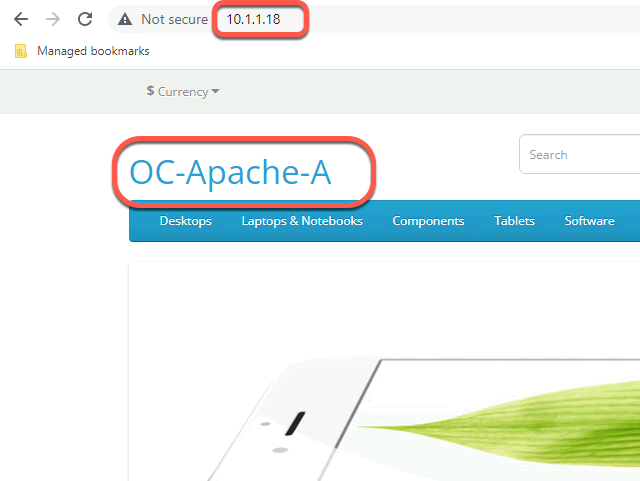

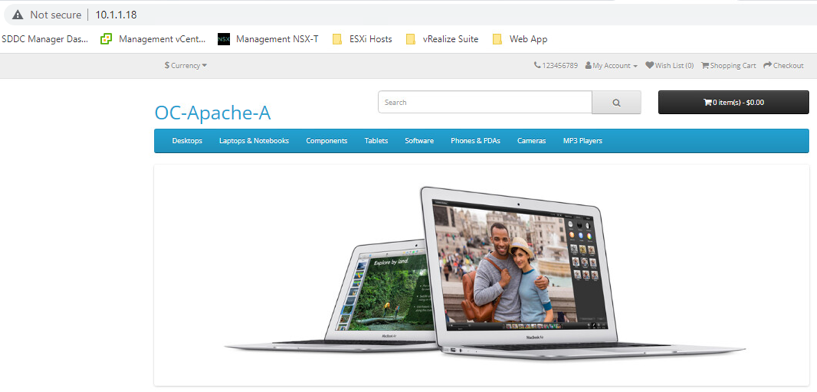

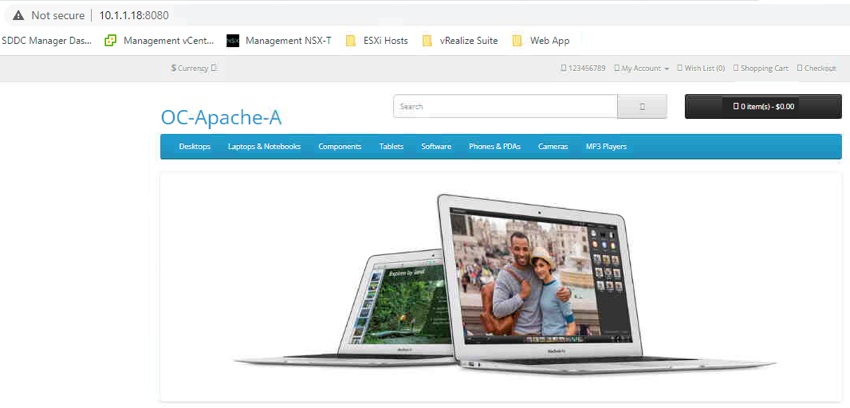

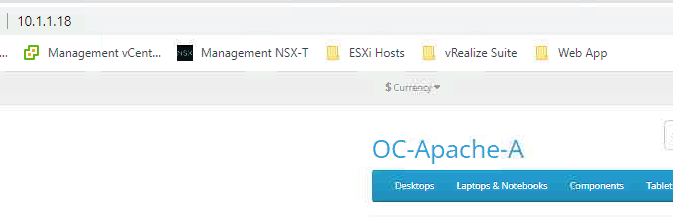

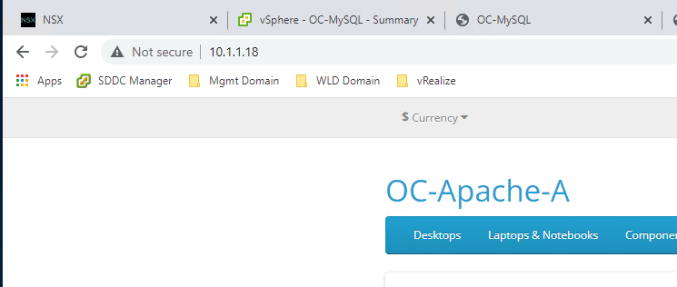

- On the Holo-Console, open a new tab in the Chrome browser

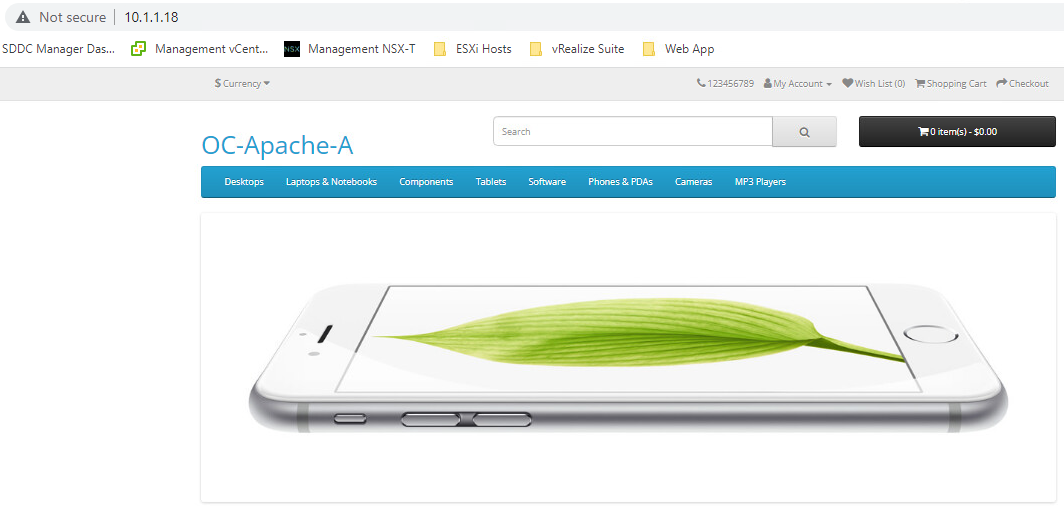

- Connect to OC-Apache-A 10.1.1.18

- Observe that the OC-Apache-A web page loads. The host name (OC-Apache-A) is shown on the page to help identify which web server is serving it.

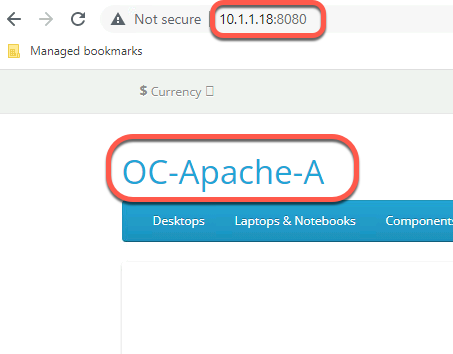

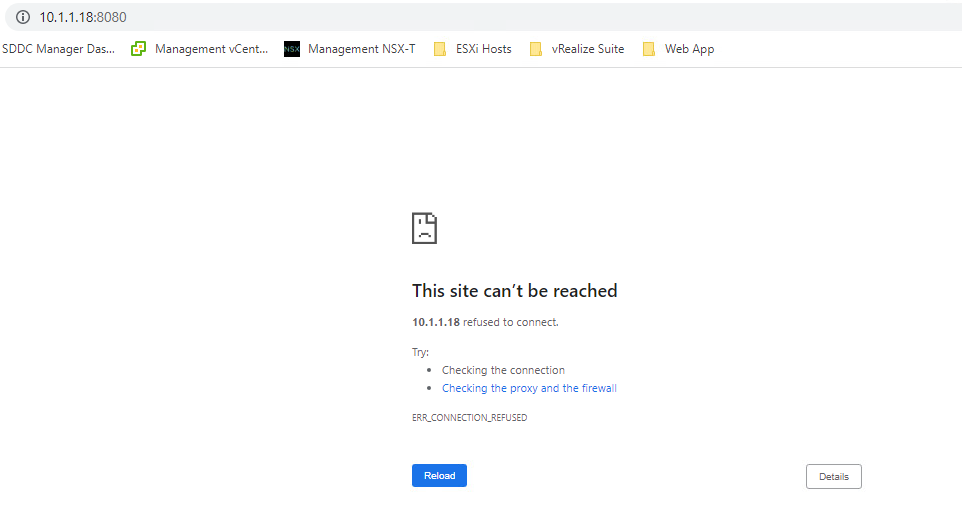

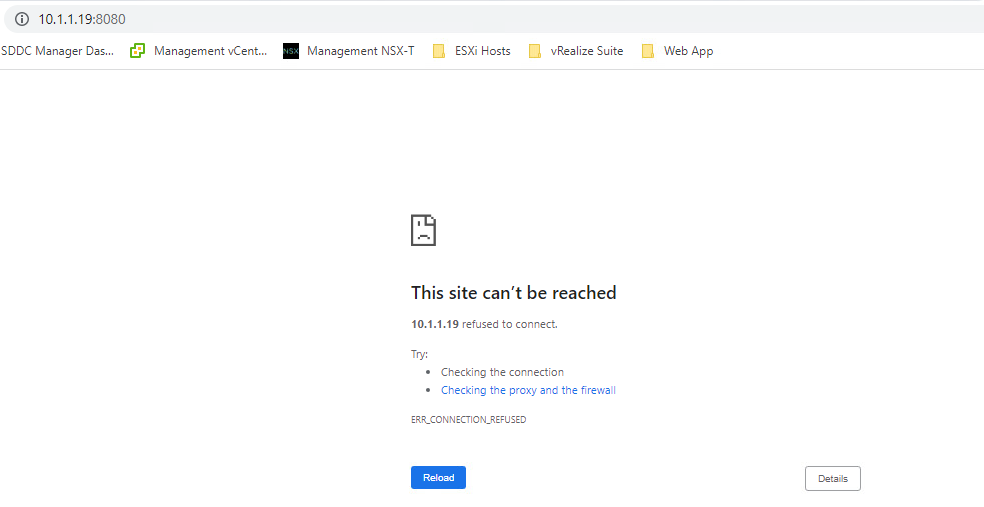

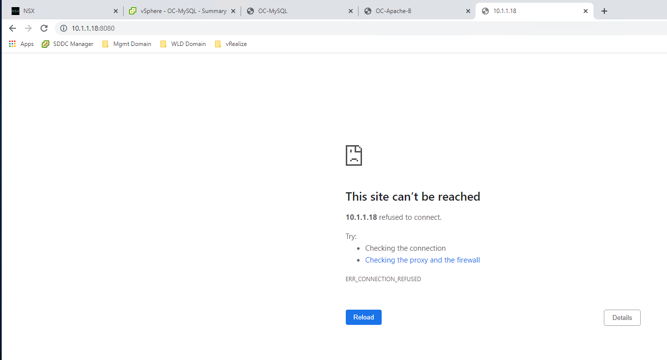

- Connect to the alternate port (8080) on OC-Apache-A by browsing to 10.1.1.18:8080. The same page is displayed. Port 8080 is used later in the security lab.

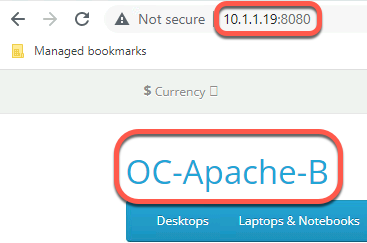

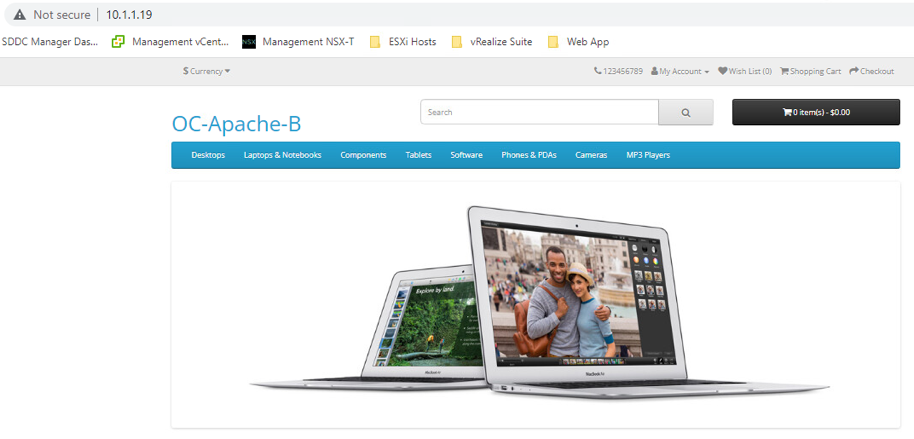

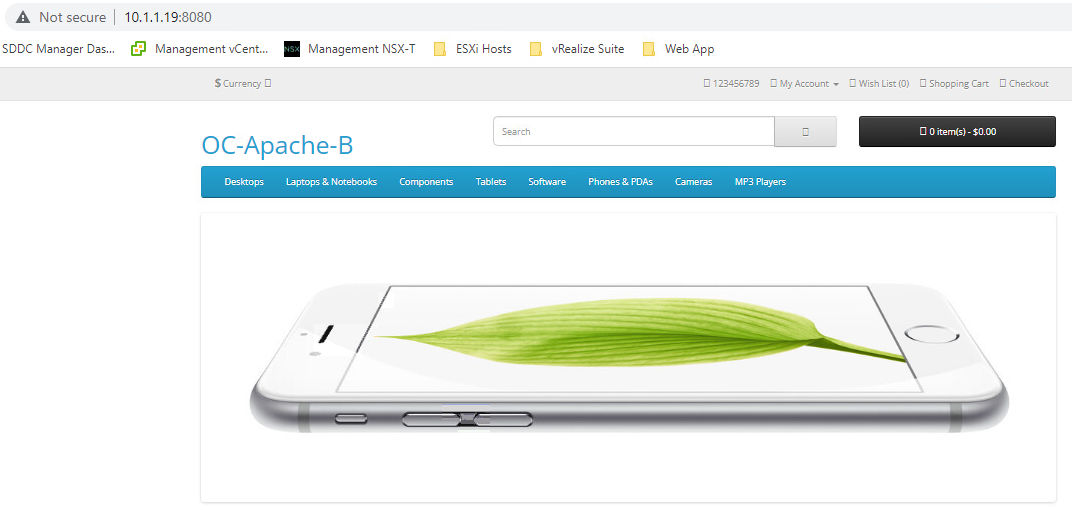

- Repeat this step for the OC-Apache-B VM using the IP address of 10.1.1.19
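To repeat these checks from a script instead of the browser, the Python sketch below fetches each web server on ports 80 and 8080 and reports which host name appears in the page. It relies only on the standard library; the function names are hypothetical, and it assumes, as described above, that each page contains the serving VM's name (OC-Apache-A or OC-Apache-B).

```python
import urllib.request
from typing import Iterable, Optional

# Web servers from this lab; ports 80 and 8080 both serve the OpenCart page
WEB_SERVERS = {"OC-Apache-A": "10.1.1.18", "OC-Apache-B": "10.1.1.19"}
PORTS = (80, 8080)


def fetch(url: str, timeout: float = 5.0) -> str:
    """Return the body of an HTTP GET response as text."""
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return resp.read().decode("utf-8", errors="replace")


def served_by(html: str, hostnames: Iterable[str]) -> Optional[str]:
    """Return the first known host name that appears in the page, else None."""
    for name in hostnames:
        if name in html:
            return name
    return None


def check_web_servers() -> None:
    for expected, ip in WEB_SERVERS.items():
        for port in PORTS:
            url = f"http://{ip}:{port}/"
            try:
                host = served_by(fetch(url), WEB_SERVERS)
            except OSError as exc:  # URLError and socket timeouts are OSError subclasses
                print(f"{url}: FAILED ({exc})")
                continue
            status = "OK" if host == expected else f"unexpected host {host}"
            print(f"{url}: {status}")
```

Run `check_web_servers()` from the Holo-Console; at this point in the lab every URL should report OK.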

[Lab 7 Summary]

Congratulations. In the time it took to get this far in the lab, two new overlay network segments and a software-defined router were deployed and configured to route traffic between the segments.

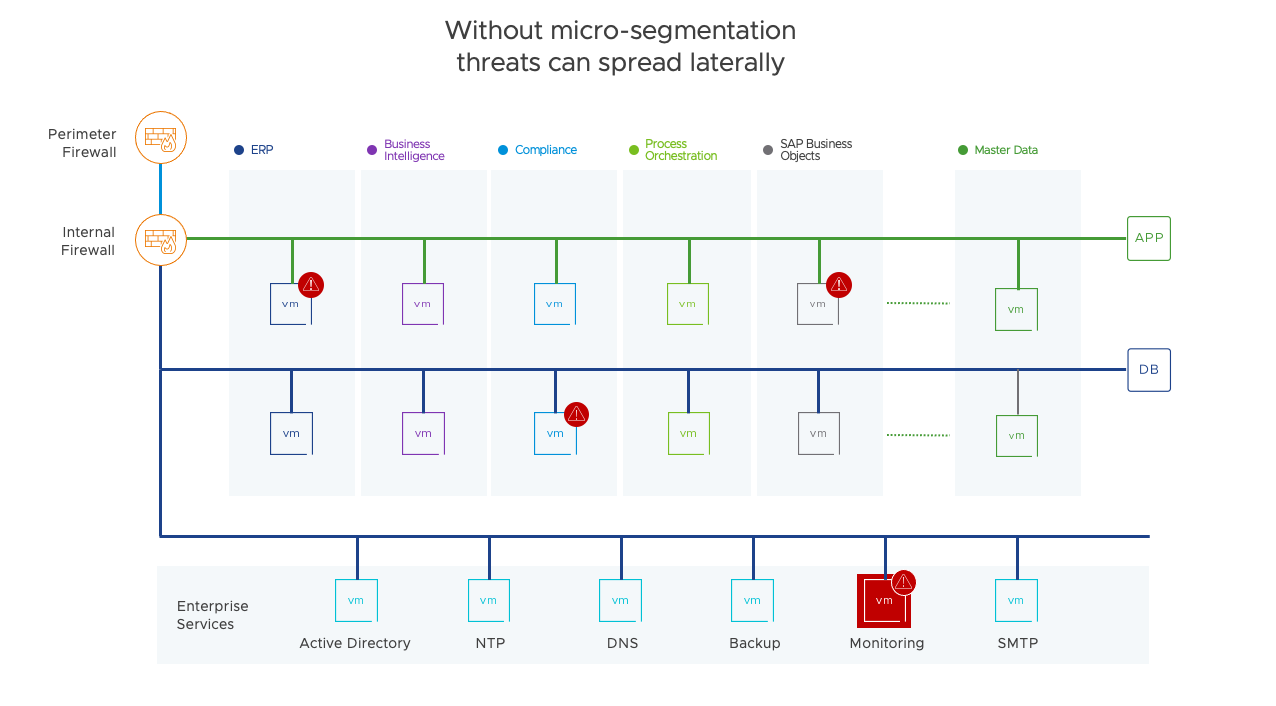

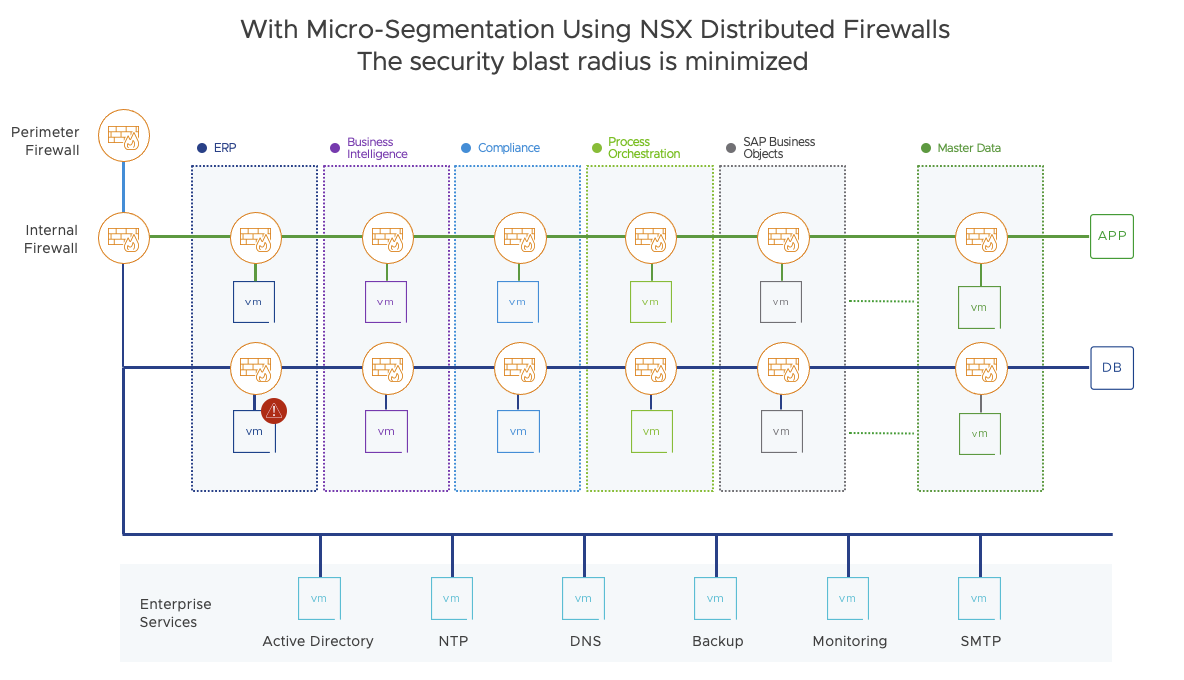

Module 2: Changing the Security Game with Microsegmentation

Over the past 10+ years, datacenter traffic patterns have changed. More and more traffic stays within the datacenter, moving between distributed application components. This East-West traffic is difficult to secure with traditional perimeter firewalls, which were designed primarily for North-South traffic.

Microsegmentation enables administrators to increase the agility and efficiency of the datacenter while maintaining an acceptable security posture. By enforcing policy at each workload rather than only at the perimeter, it reduces risk and limits an attacker's ability to move laterally between workloads.

Microsegmentation with NSX in VCF is applied at the interface between each vNIC and the VDS, so packets are inspected as they enter and leave each virtual machine. In effect, it is a centrally managed packet filtering solution that is enforced on every machine.

Lab 1: Tagging VMs and Grouping Workloads based on Tags

This lab explores using tags to create groups of VMs to which specific distributed firewall rules can be applied. In small environments, creating groups based on VM name may suffice. As an environment grows, however, tagging may be a better alternative.

Terminology and definitions:

- Tags – Although a virtual machine is not directly managed by NSX, NSX allows tags to be attached to it. Tagging enables tag-based grouping of objects. For example, a tag called AppServer can be associated with all application servers.

- Security Groups – A security group is a collection of assets or grouping objects from your vSphere inventory.

Security Groups are containers that can contain multiple object types including logical switch, vNIC, IPset, and Virtual Machine (VM). Security groups can have dynamic membership criteria based on security tags, VM name or logical switch name. For example, all VMs that have the security tag web will be automatically added to a specific security group destined for Web servers. After creating a security group, a security policy is applied to that group.

- Security Policies – A security policy is a set of Guest Introspection, firewall, and network introspection services that can be applied to a security group. The order in which security policies are displayed is determined by the weight associated with the policy. By default, a new policy is assigned the highest weight so that it is at the top of the table. However, you can modify the default suggested weight to change the order assigned to the new policy. Policies can be stateful or stateless.

Note: Tagging in NSX is distinct from tagging in vCenter Server. At this time, vCenter Server tags cannot be used to create groupings in NSX. In larger, more automated environments, customers use a solution such as vRealize Automation to deploy virtual machines and containers with security tagging set at time of creation.

Given that the OpenCart application used in this lab has only two web servers and one database server, we will create two tags as criteria for two groups. Creating one tag per group might seem redundant; however, keep the following in mind:

- This is a small sample two-tier application. For applications built on microservices, you can group more than one machine under a single tag and make better use of security groups.

- Tags and groups also bring an operational advantage. Once your infrastructure is built around Security Groups defined by tags, tagging a machine immediately makes it a member of the matching Security Group and subjects it to that group's firewall rules. This brings us closer to the cloud delivery model.

- The downside is that tags and Security Groups must be managed with care. It is easy to add a machine to an existing Security Group and avoid the work of setting up new firewall rules, but it is just as easy to undermine good security by granting the new machine too many permissions through stale tag or Security Group configurations.

To demonstrate tags, we will set up OC-Apache-A with the appropriate tag and Security Group, and then assign the web tag to OC-Apache-B to see how easily all the appropriate rules apply to a new machine. The VM->Tag->Group mapping is as follows:

| VM | IP Address | Tag | Security Group |
|---|---|---|---|
| OC-MySQL | 10.1.1.50 | OC-DB-Tag | OC-DB-Group |
| OC-Apache-A | 10.1.1.18 | OC-Web-Tag | OC-Web-Group |
| OC-Apache-B | 10.1.1.19 | OC-Web-Tag | OC-Web-Group |
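The tag-to-group relationship in this table is a dynamic membership rule: a group's members are whichever VMs currently carry its tag. The toy Python model below illustrates that idea with the lab's names. It is not how NSX computes membership internally, and the `VM` and `TagGroup` classes are hypothetical.

```python
from dataclasses import dataclass, field
from typing import List, Set


@dataclass
class VM:
    name: str
    ip: str
    tags: Set[str] = field(default_factory=set)


@dataclass
class TagGroup:
    """A group with a single dynamic criterion: Virtual Machine, Tag Equals <tag>."""
    name: str
    tag: str

    def members(self, inventory: List[VM]) -> List[str]:
        # Membership is recomputed from the current tags every time it is queried
        return sorted(vm.name for vm in inventory if self.tag in vm.tags)


inventory = [
    VM("OC-MySQL", "10.1.1.50", {"OC-DB-Tag"}),
    VM("OC-Apache-A", "10.1.1.18", {"OC-Web-Tag"}),
    VM("OC-Apache-B", "10.1.1.19"),  # left untagged for now, as in the lab
]
web_group = TagGroup("OC-Web-Group", "OC-Web-Tag")
db_group = TagGroup("OC-DB-Group", "OC-DB-Tag")

print("Before:", web_group.members(inventory))  # Before: ['OC-Apache-A']
inventory[2].tags.add("OC-Web-Tag")             # tag OC-Apache-B
print("After:", web_group.members(inventory))   # After: ['OC-Apache-A', 'OC-Apache-B']
```

In this model, tagging OC-Apache-B is the only change needed for it to join OC-Web-Group, which mirrors the behavior Lab 2 relies on.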

[Step 1] Create Tags

- On the Holo-Console, open a new tab in the Chrome browser

- Click the Managed bookmarks folder in the bookmark bar then select Mgmt Domain->Mgmt NSX

- Log into NSX Manager as the user admin with the password VMware123!VMware123!

- Navigate to Inventory->Tags

- Click on ADD TAG

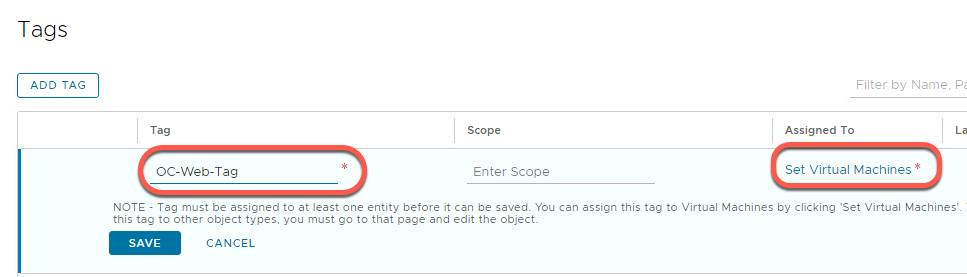

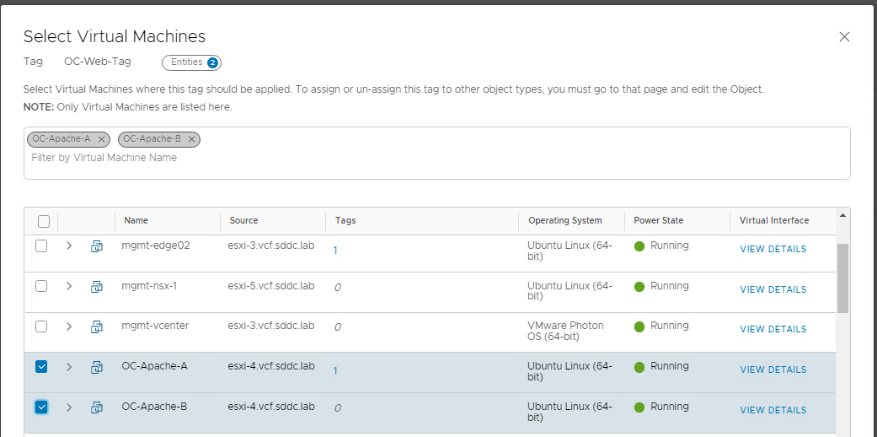

- We will first create a new tag for the web servers. Enter OC-Web-Tag in the Tag field. Do not click SAVE yet: as the note indicates, a tag must be assigned to at least one object before it can be saved.

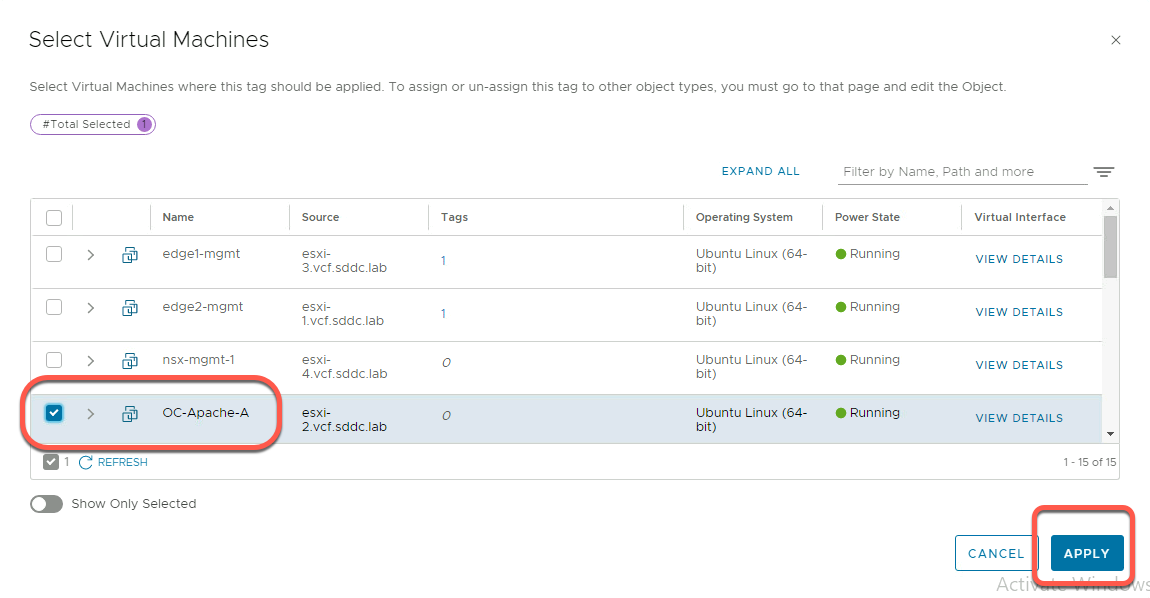

- Assign this tag to the OC-Apache-A virtual machine. Click the Set Virtual Machines link and select the OC-Apache-A virtual machine (you may need to scroll). For now, do not select OC-Apache-B, as it will be added later

- Click APPLY

- Note the “Assigned To” value has incremented to 1

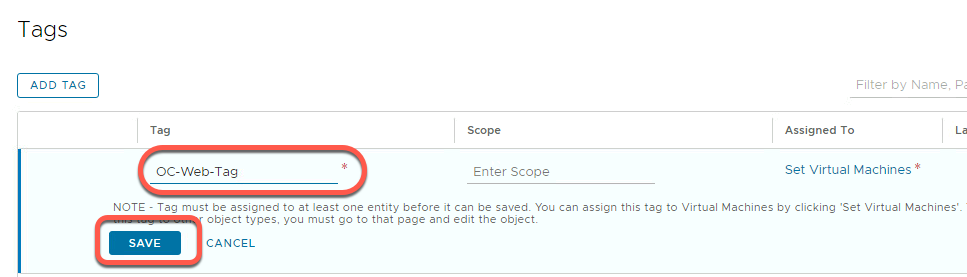

- Click SAVE to save the new tag

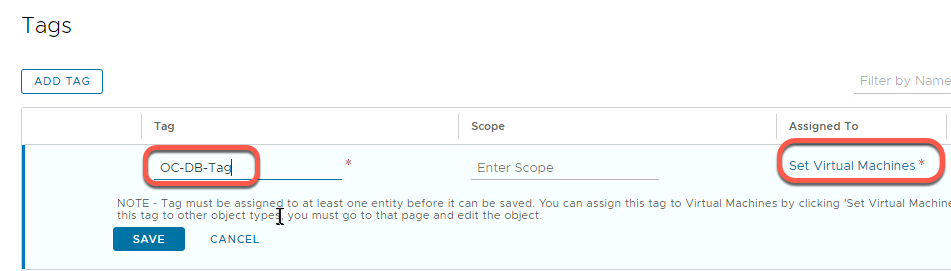

- Click Add Tag

- Add the name “OC-DB-Tag”

- Click Set Virtual Machines then select OC-MySQL

- Click Apply

- Click Save

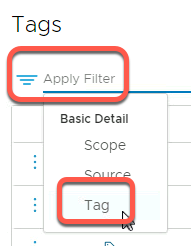

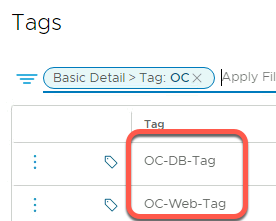

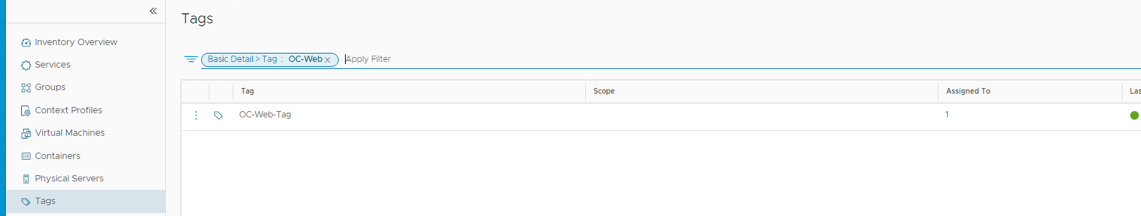

Step 2: Verify Tags

- Click on Inventory->Tags->Filter by Name, Path and more

- Click Tag

- Search for Tags with the string “OC” in the name and click OK

- Verify the two tags created earlier are displayed

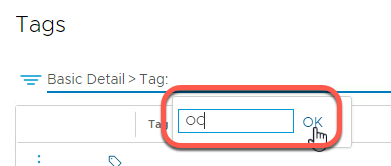

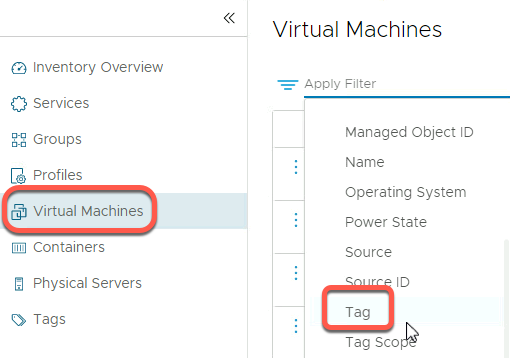

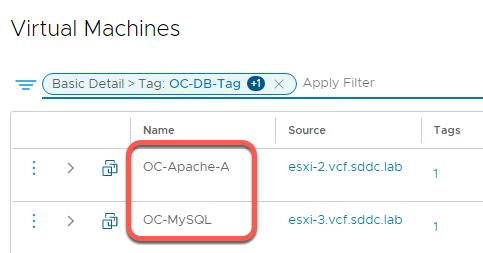

Step 3: Verify virtual machines are mapped to tags.

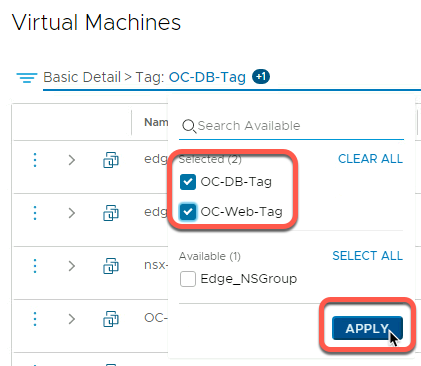

- Select Inventory->Virtual Machines and click in the Filter area

- Scroll down in Basic Detail and select Tag

- Select our two tags and click Apply

- Verify OC-Apache-A and OC-MySQL are present

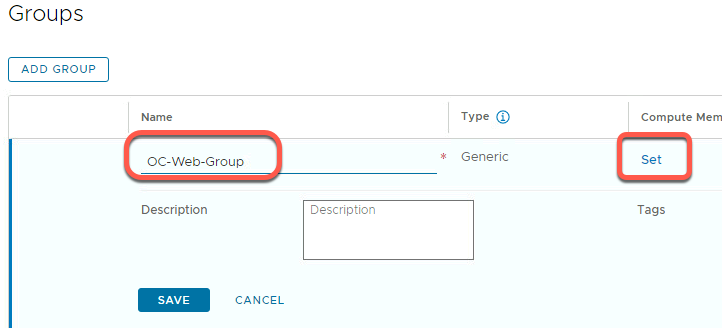

Step 4: Create Groups

Group mapping is as follows:

| Tag | Security Group |
|---|---|
| OC-DB-Tag | OC-DB-Group |
| OC-Web-Tag | OC-Web-Group |

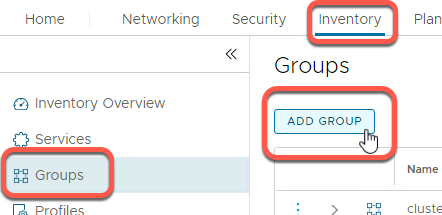

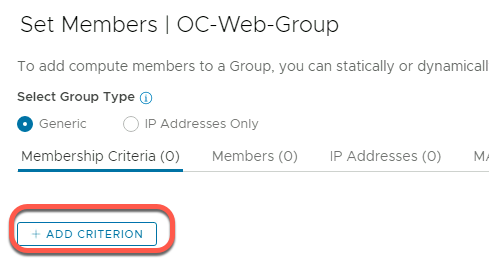

Step 5: Create OC-Web-Group

- Click Inventory->Groups->ADD GROUP

- Add group name OC-Web-Group

- Click on “Set” in the Compute Members column to add group members. In this example we will use the tags we just created to populate the group.

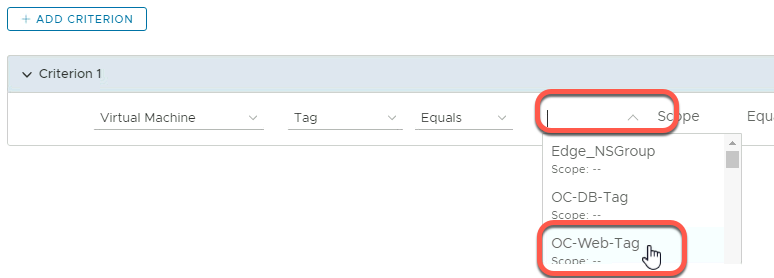

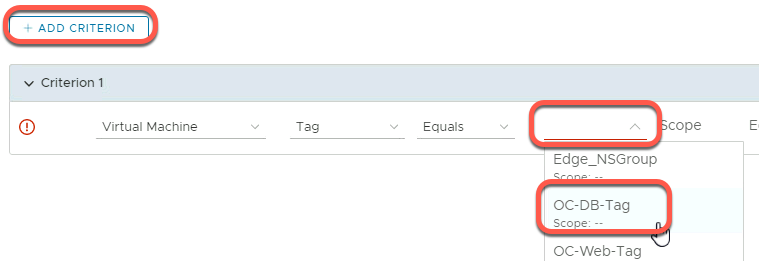

- Click Add Criterion

- Select Virtual Machine, then set the criterion to Tag Equals “OC-Web-Tag”

- Click Apply

- Click Save

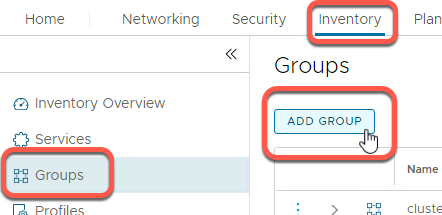

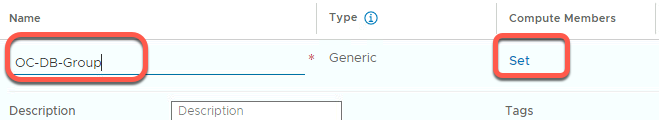

Step 6: Create OC-DB-Group

- Click Inventory->Groups->ADD GROUP

- Add group name OC-DB-Group

- Click on Set to add group members. In this example we will use the tags we just created to populate the group

- Click Add Criterion

- Select Virtual Machine, then set the criterion to Tag Equals “OC-DB-Tag”

- Click Apply

- Click Save

Step 7: Verify Groups

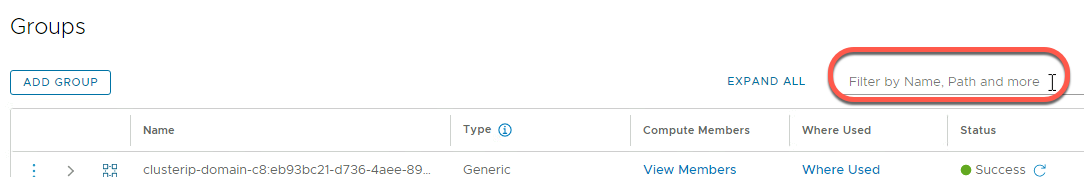

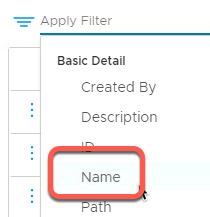

- On the Inventory->Groups panel click in the Filter by Name, Path and More field

- Click on Name in the Basic Detail column

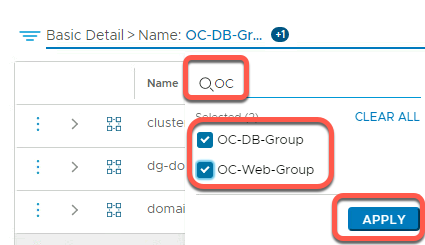

- Type OC to filter for our group names

- Select the OC-Web-Group and OC-DB-Group groups

- Click Apply

- Click View Members for each group

- Each group should have a single VM as a member (we can ignore IP addresses, Ports and VIF for now).

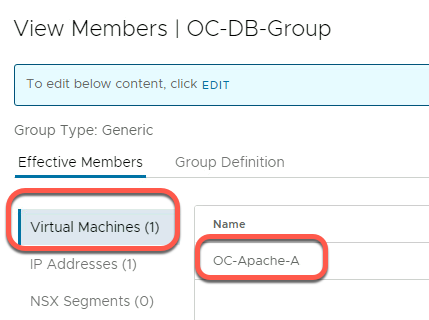

The following example shows the view when looking at the View Members details for the OC-DB-Group

- At this point we have implemented the following:

| VM | Tag | Group | Configured |
|---|---|---|---|
| OC-Apache-A 10.1.1.18 | OC-Web-Tag | OC-Web-Group | Yes |
| OC-Apache-B 10.1.1.19 | | | No |
| OC-MySQL 10.1.1.50 | OC-DB-Tag | OC-DB-Group | Yes |

[Lab 1 Summary]

Lab 1 shows how to create tags and groups in NSX. This capability makes it possible to create and manage a scalable set of distributed firewall rules.

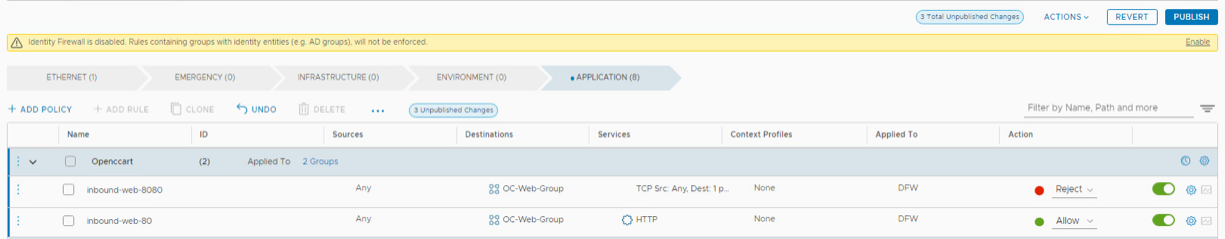

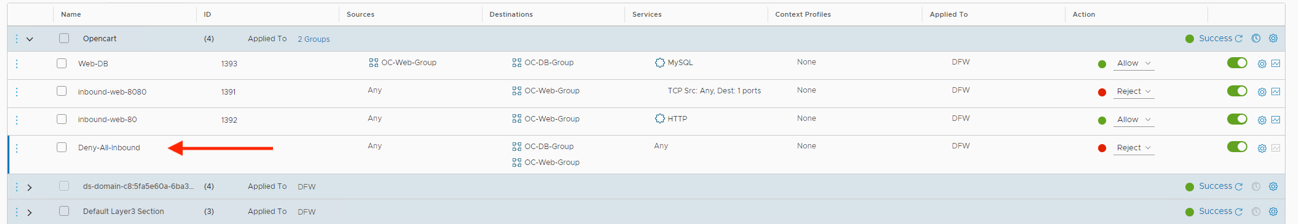

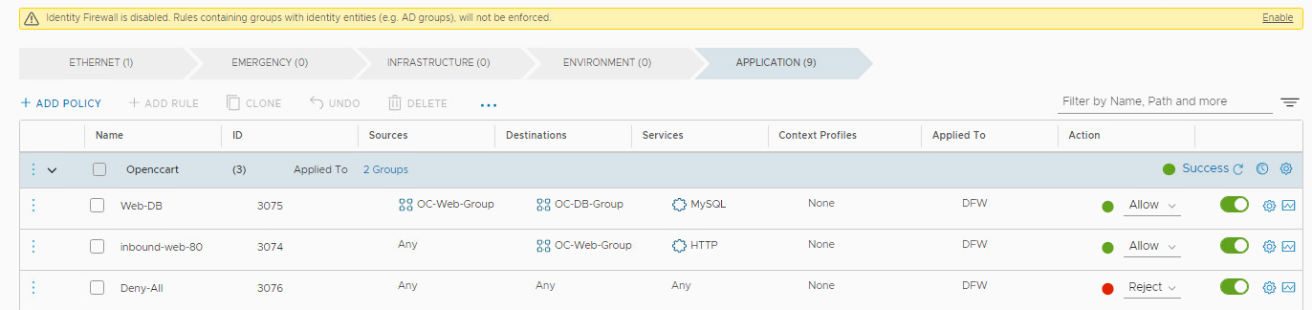

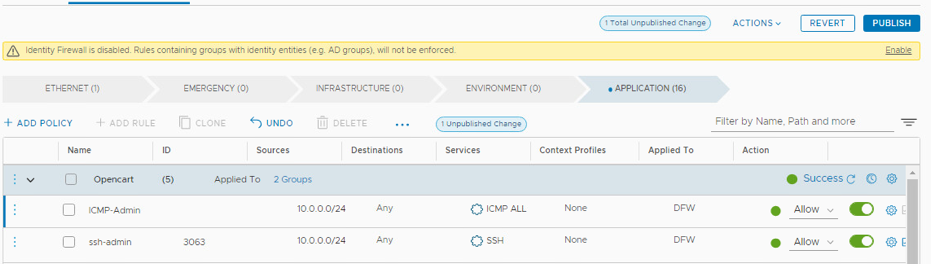

Lab 2: Applying Distributed Firewall Rules Based on Tagging on a segment

This lab shows how to configure the distributed firewall to limit access to our OpenCart application. For the purposes of this lab, we will create the following rules:

| Name | Source | Destination | Service (Port/Protocol) | Action | Notes |
|---|---|---|---|---|---|
| inbound-web-80 | Any | OC-Web-Group | HTTP (TCP 80) | Allow | Outside to web port 80 |
| inbound-web-8080 | Any | OC-Web-Group | TCP 8080 | Reject | Outside to web port 8080 |
| Web-DB | OC-Web-Group | OC-DB-Group | MySQL (TCP 3306) | Allow | Web to DB communication |
| ssh-admin | 10.0.0.0/24 | OC-DB-Group, OC-Web-Group | SSH | Allow | SSH from lab console only |
| ICMP-Admin | 10.0.0.0/24 | OC-DB-Group, OC-Web-Group | ICMP ALL | Allow | ICMP from lab console only |
| Deny-All-Inbound | Any | OC-DB-Group, OC-Web-Group | Any | Reject | Reject all other inbound traffic |

Keep in mind that all of this happens at the distributed firewall level, where firewall rules are enforced at each VM's switch port rather than by a routed (perimeter) firewall. Since we created groups in the previous lab, we can now create access rules based on these groups.
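Rules in a policy like this are evaluated as an ordered list: the first rule whose source, destination, and service all match a packet decides its fate. The Python sketch below is a simplified model of that first-match logic, not how NSX implements the distributed firewall. It ignores the Applied To scope, traffic direction, and connection state; resolves groups to the lab IP addresses (with OC-Apache-B already tagged); uses a hypothetical Holo-Console address of 10.0.0.99; and assumes that traffic matching none of these rules falls through to an Allow default rule.

```python
import ipaddress
from dataclasses import dataclass
from typing import List, Optional, Tuple

ANY = "Any"

# Groups resolved to member IPs (assumes OC-Apache-B already carries OC-Web-Tag)
GROUPS = {
    "OC-Web-Group": {"10.1.1.18", "10.1.1.19"},
    "OC-DB-Group": {"10.1.1.50"},
}


@dataclass(frozen=True)
class Rule:
    name: str
    sources: Tuple[str, ...]            # group names, CIDR strings, or ANY
    destinations: Tuple[str, ...]
    service: Tuple[str, Optional[int]]  # (protocol, port); port None matches any port
    action: str                         # "Allow" or "Reject"


def addr_matches(ip: str, entries: Tuple[str, ...]) -> bool:
    for entry in entries:
        if entry == ANY:
            return True
        if entry in GROUPS:
            if ip in GROUPS[entry]:
                return True
        elif ipaddress.ip_address(ip) in ipaddress.ip_network(entry):
            return True
    return False


def service_matches(service: Tuple[str, Optional[int]], proto: str, port: Optional[int]) -> bool:
    rule_proto, rule_port = service
    if rule_proto == ANY:
        return True
    return rule_proto == proto and (rule_port is None or rule_port == port)


def evaluate(rules: List[Rule], src: str, dst: str, proto: str,
             port: Optional[int] = None) -> Tuple[str, str]:
    """Return (rule name, action) for the first matching rule, scanning top to bottom."""
    for rule in rules:
        if (addr_matches(src, rule.sources) and addr_matches(dst, rule.destinations)
                and service_matches(rule.service, proto, port)):
            return rule.name, rule.action
    return "default", "Allow"  # assumption: the default rule is left at Allow


BOTH = ("OC-DB-Group", "OC-Web-Group")
RULES = [
    Rule("inbound-web-80", (ANY,), ("OC-Web-Group",), ("TCP", 80), "Allow"),
    Rule("inbound-web-8080", (ANY,), ("OC-Web-Group",), ("TCP", 8080), "Reject"),
    Rule("Web-DB", ("OC-Web-Group",), ("OC-DB-Group",), ("TCP", 3306), "Allow"),
    Rule("ssh-admin", ("10.0.0.0/24",), BOTH, ("TCP", 22), "Allow"),
    Rule("ICMP-Admin", ("10.0.0.0/24",), BOTH, ("ICMP", None), "Allow"),
    Rule("Deny-All-Inbound", (ANY,), BOTH, (ANY, None), "Reject"),
]

CONSOLE = "10.0.0.99"  # hypothetical Holo-Console address on 10.0.0.0/24

print(evaluate(RULES, CONSOLE, "10.1.1.18", "TCP", 80))      # ('inbound-web-80', 'Allow')
print(evaluate(RULES, CONSOLE, "10.1.1.18", "TCP", 8080))    # ('inbound-web-8080', 'Reject')
print(evaluate(RULES, "10.1.1.18", "10.1.1.19", "TCP", 22))  # ('Deny-All-Inbound', 'Reject')
# Moving Deny-All-Inbound to the top shadows every Allow rule below it
print(evaluate(RULES[-1:] + RULES[:-1], CONSOLE, "10.1.1.18", "TCP", 80))  # ('Deny-All-Inbound', 'Reject')
```

The last call illustrates why rule order matters: with the catch-all Reject placed first, no later Allow rule is ever reached.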

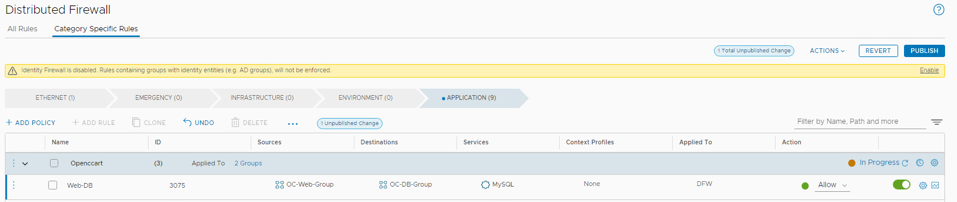

Step 1: Create New Policy

- If necessary, open a new tab in the Chrome browser

- Click the Management NSX-T shortcut in the bookmark bar (click Advanced / Proceed to nsx-mgmt.vcf.sddc.lab if required to accept the certificate)

- Log into NSX Manager as user: admin with the password: VMware123!VMware123!

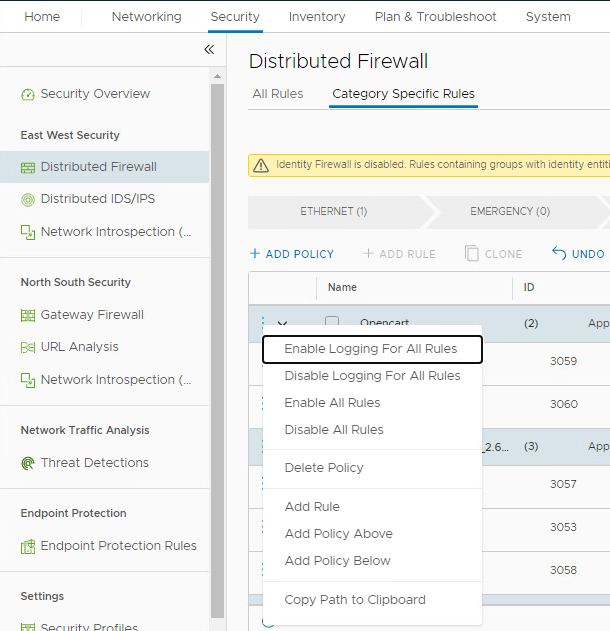

- Navigate to Security > Distributed Firewall in the NSX-T Console

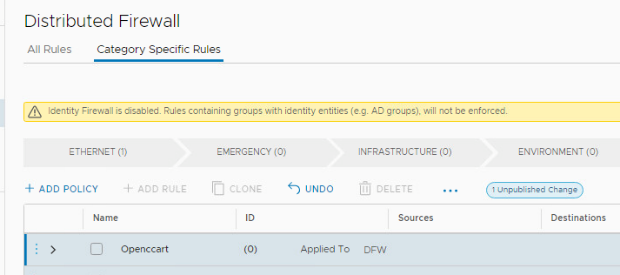

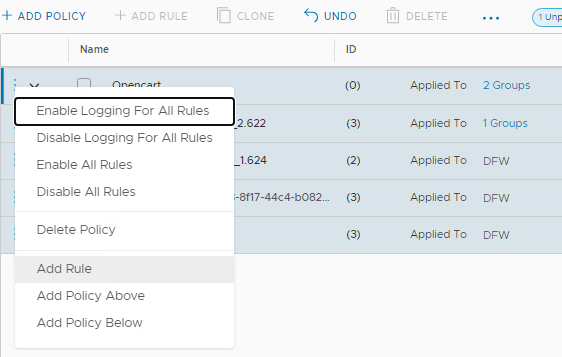

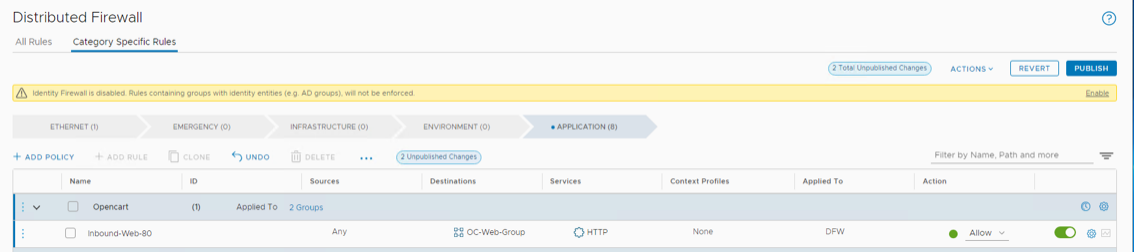

- Click Add Policy > New Policy and name the policy “Opencart”

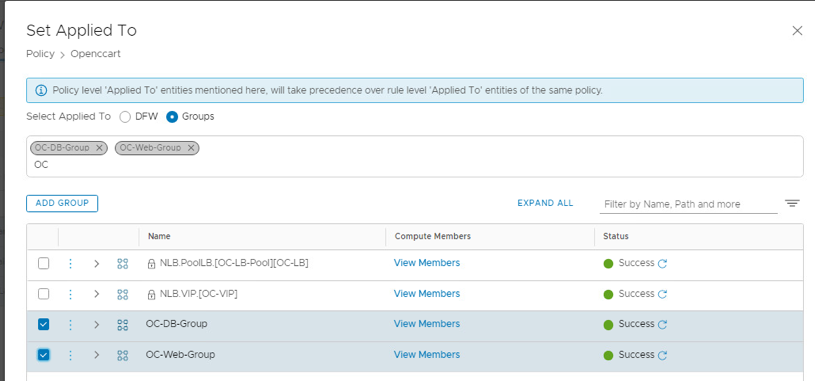

- Hover over DFW in the Applied To column of the Opencart policy row, then click the pencil icon twice.

- Note that the default scope is the entire distributed firewall; however, we want this policy to apply only to the groups we created in the previous lab.

- Click the Groups radio button

- Search for OC then select OC-DB-Group and OC-Web-Group

- Click Apply

Step 2: Add inbound-web-80 rule

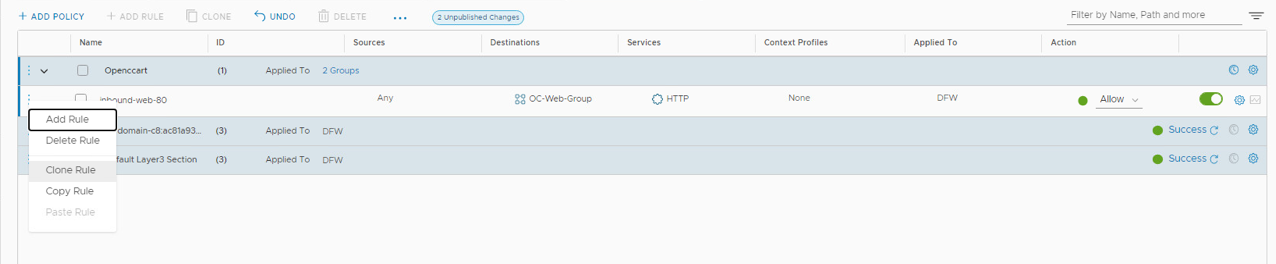

- Click on the three vertical dots on the left of the Opencart policy.

- Click Add Rule

- Click the name of the new rule and change it to inbound-web-80

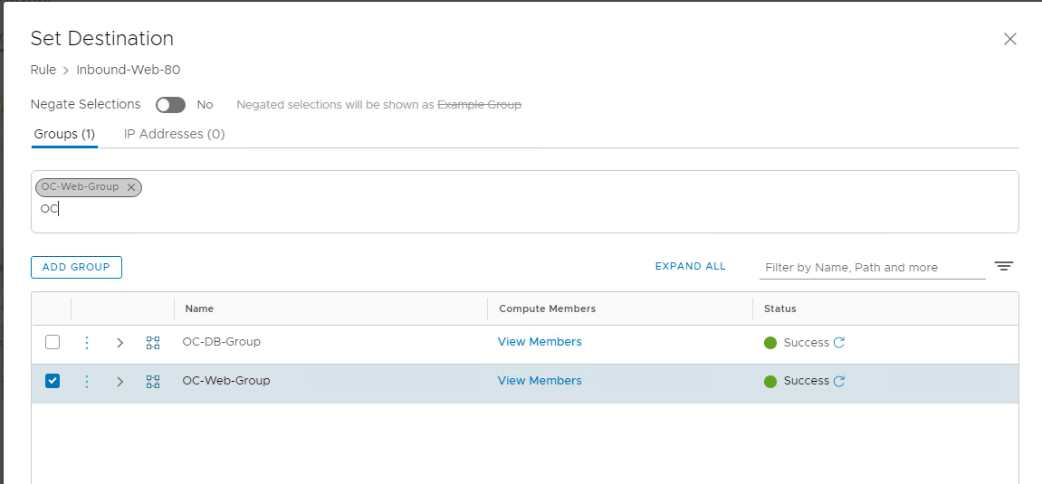

- Hover over Destinations, then click the pencil icon twice

- This brings up the Set Destination pop-up. Type OC in the search box then click on OC-Web-Group checkbox, then apply

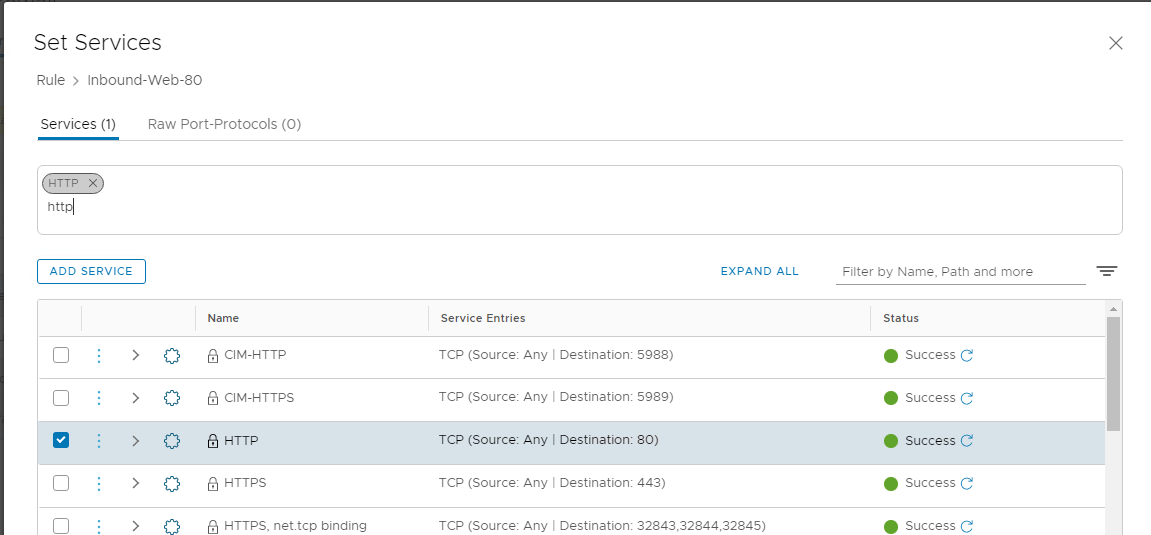

- Hover over Services, then click the pencil icon

- Type HTTP in the services area then select HTTP

- Click Apply

- The result should be the following:

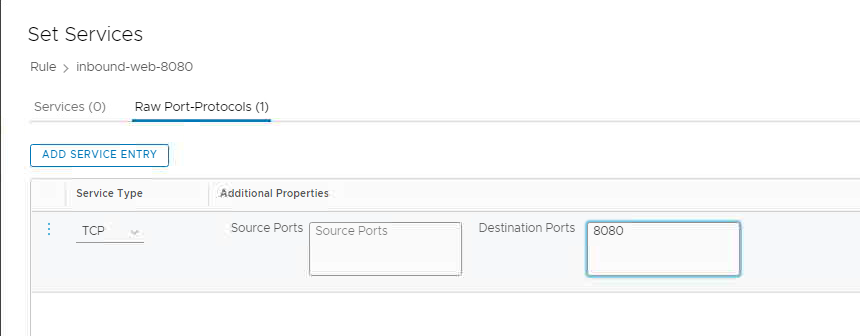

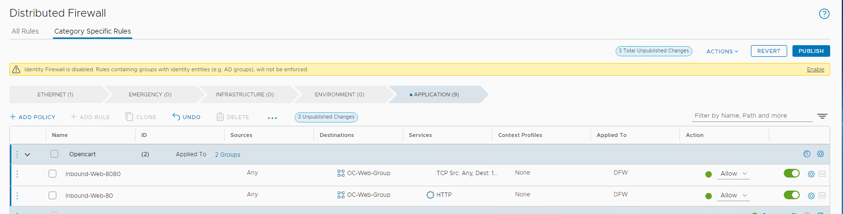

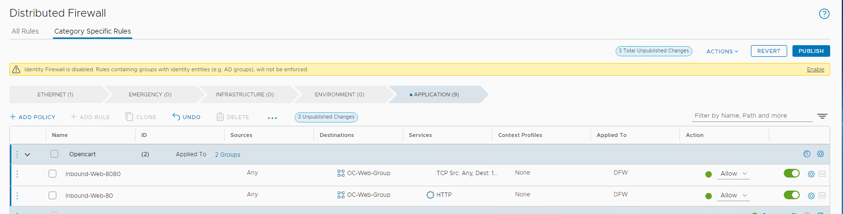

Step 3: Add inbound-web-8080 rule (clone inbound-web-80)

- Click on the three vertical dots on the left of the inbound-web-80 rule.

- Click Clone Rule

- Click on Copy of inbound-web-80 and change name to inbound-web-8080

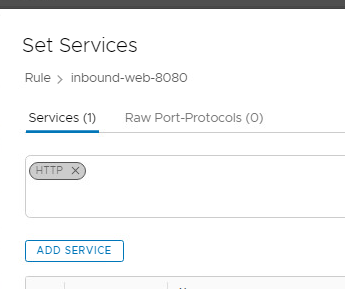

- Hover over Services, then click the pencil icon twice

- Uncheck the box for the HTTP service

- Click on Raw Port Protocols

- Click Add Service Entry

- Set Service Type to TCP and Destination Ports to 8080

- Click Apply

- The result should be the following:

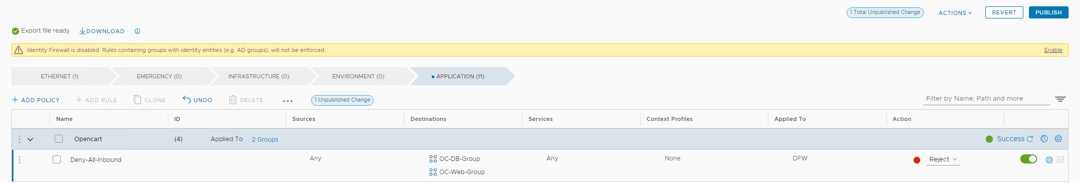

Step 4: Test inbound-web-XX rules

- Notice that at this point both rules default to Allow, and the rule changes have not yet been published. Leave them as they are for the moment. Next, we will verify that both ports currently respond on our web server

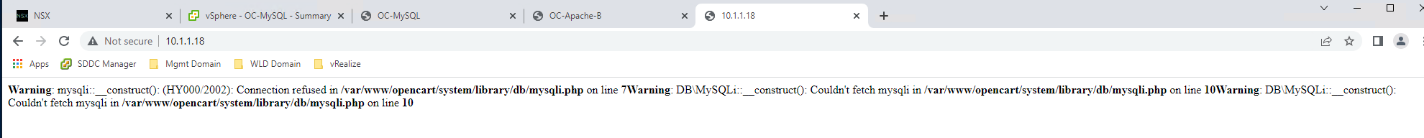

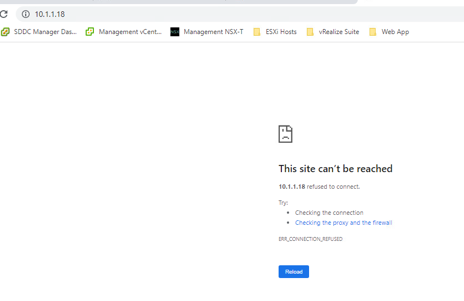

- Open a tab on the browser and connect to OC-Apache-A 10.1.1.18

- Open a second tab and connect to OC-Apache-A 10.1.1.18:8080

- Return to the NSX Manager tab. Click the arrow next to Allow on inbound-web-8080 and select Reject

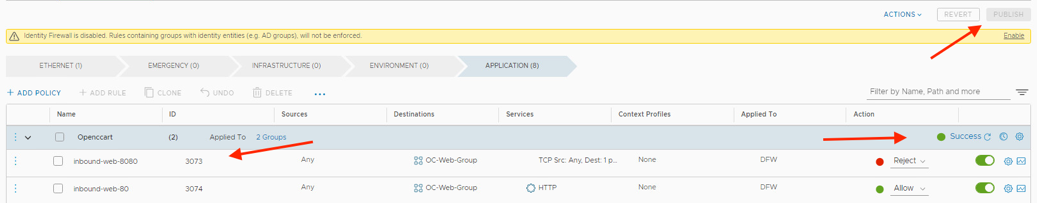

- Click PUBLISH to make the rules active

- Notice that the Publish button is greyed out, showing there are no uncommitted changes. The green Success indicator is set at the policy level, and our two rules now have ID numbers showing they have been activated. You may need to click the refresh icon next to In Progress to show success.

- Go to the OC-Apache-A 10.1.1.18 browser tab and refresh. The web page should load normally

- Go to the OC-Apache-A 10.1.1.18:8080 browser tab and refresh. The web page should fail to load

Step 5: Extend security policies to new VM based on tag

- Test operations of the OC-Apache-B 10.1.1.19 webserver on port 80

- Test operations of the OC-Apache-B 10.1.1.19 webserver on port 8080

- Observe that the Reject rule on port 8080 does not apply to OC-Apache-B, because OC-Apache-B is not yet a member of OC-Web-Group.

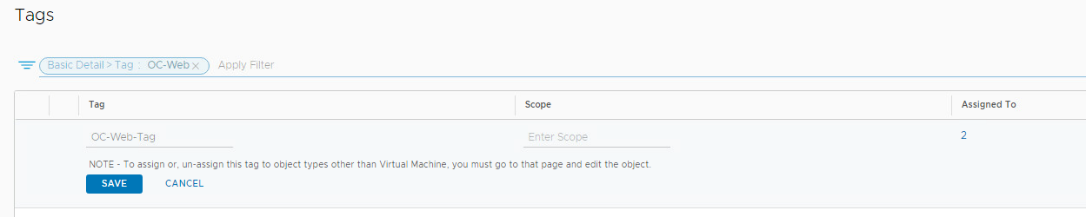

- Return to the NSX tab and click on Inventory then Tags

- Click Filter, select Tag, and type OC-Web to search by name

- Click the 3 dots next to OC-Web-Tag, then click Edit

- Click on the number 1 under Assigned To

- Search for OC-Apache and select OC-Apache-B then click Apply

- Click Save

- Test the OC-Apache-B 10.1.1.19 web server on port 8080 again. The web page should now fail to load

- Notice that as soon as the tag was applied to the OC-Apache-B VM, it immediately became subject to the Opencart policy, because it became a member of OC-Web-Group, to which the rules apply

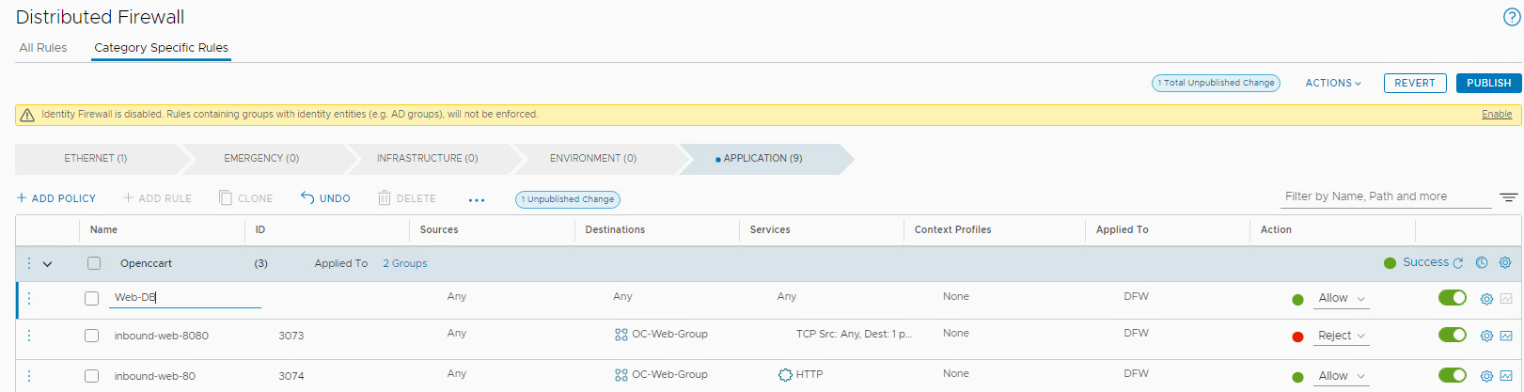

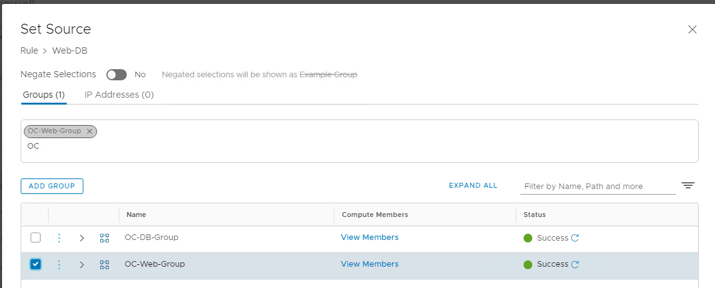

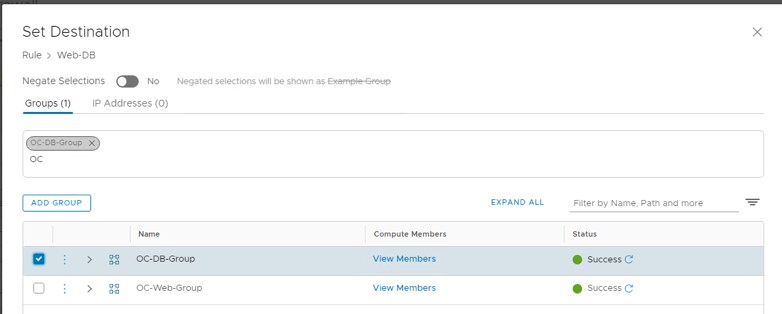

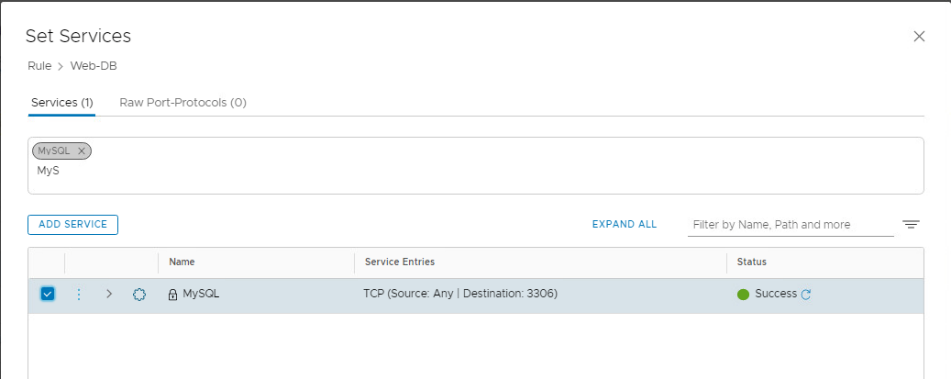

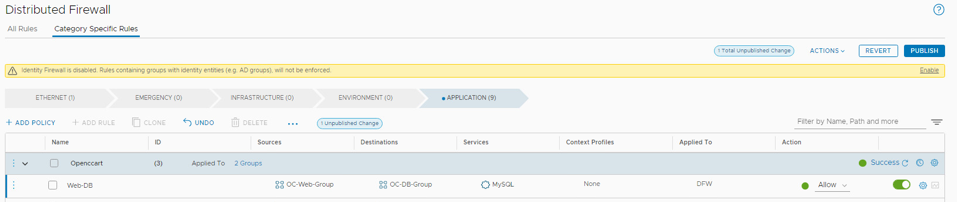

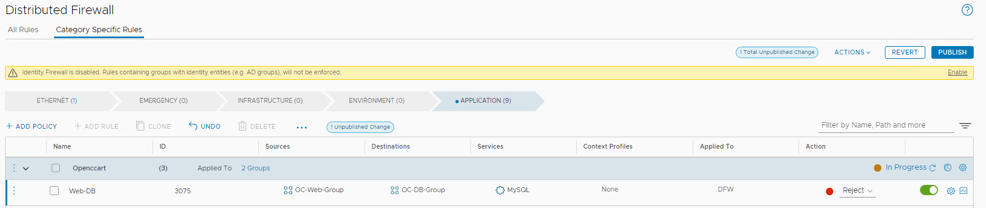

Step 6: Implement Web-DB rule

This step allows communication to MySQL only from the Apache web servers

- On the NSX Manager tab, go to Security -> Distributed Firewall

- Click on the 3 dots next to OpenCart and Add Rule

- Name the rule Web-DB.

- Set Sources to OC-Web-Group and click Apply

- Set Destinations to OC-DB-Group and click Apply

- Set Services to MySQL and click Apply

- Your rule should look like this

- Click Publish

- Test access to OC-Apache-A on 10.1.1.18. You should get a normal web page load

- Return to the NSX tab and set the Web-DB rule to Reject and click Publish (this will allow us to see the impact of the firewall blocking access from Apache to MySQL which will be useful later in the lab)


- Test access to OC-Apache-A on 10.1.1.18. Your web page load should fail

- Reset the Web-DB rule to Allow and Publish before moving on to the next step

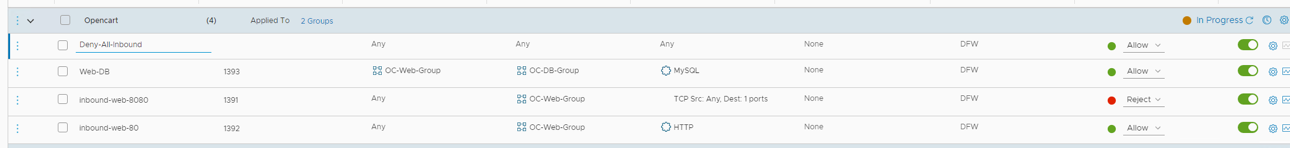

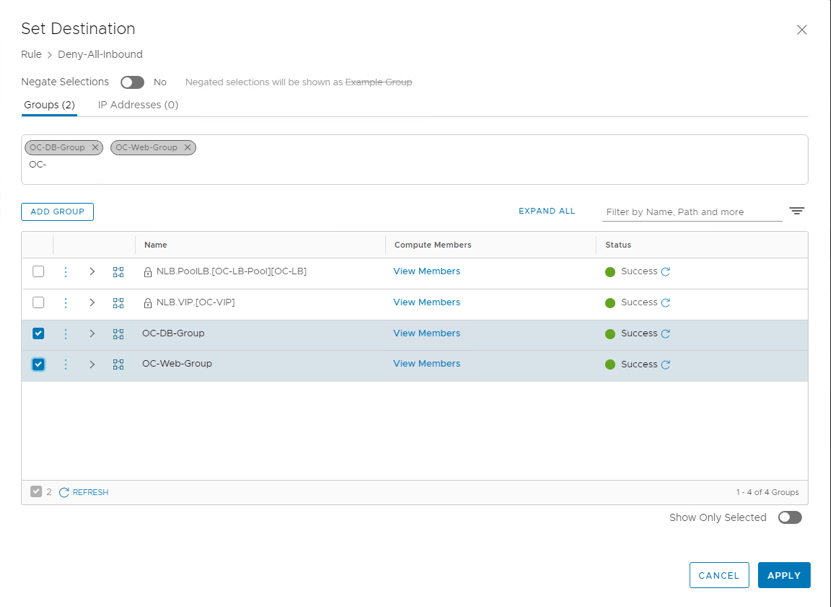

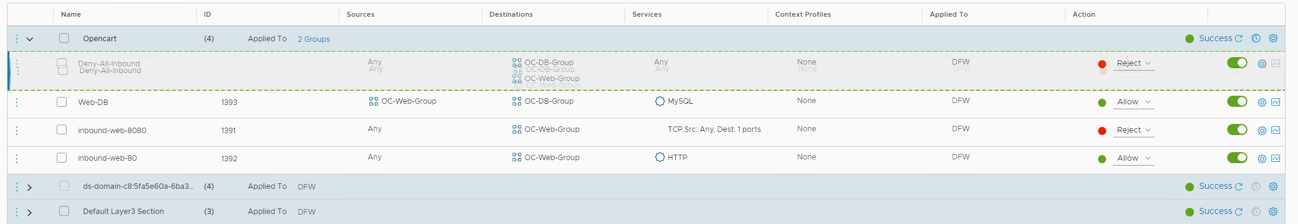

Step 7: Implement Deny All Inbound rule

This step implements a Deny-All-Inbound rule that rejects all inbound traffic we have not explicitly allowed. It also shows that rules within a security policy are evaluated in order, from top to bottom

- On the NSX Manager tab, go to Security -> Distributed Firewall

- Click on the 3 dots next to OpenCart and Add New Rule

- Name the rule Deny-All-Inbound.

- Leave Sources at Any

- Set Destinations to the groups OC-DB-Group and OC-Web-Group (hint: type OC- and press Enter to quickly show the OC groups). Check the two groups, then click Apply

- Set Action to Reject, then click Publish

- Test access to OC-Apache-A on 10.1.1.18. The web page load should fail, because the Deny-All-Inbound rule sits above the Allow rules and is therefore evaluated first

- Return to the NSX tab

- Move the Deny-All-Inbound rule down: click and hold anywhere on its row, drag it below the inbound-web-80 rule, then click Publish

- Test access to OC-Apache-A on 10.1.1.18. You should get a normal web page load

- Return to the NSX tab

- Click the three dots to the left of the inbound-web-8080 rule and select Delete Rule. With Deny-All-Inbound in place, port 8080 is blocked unless it is explicitly allowed, so this rule is no longer needed.

- Publish the rules. You may need to click Refresh on the bottom of the policy screen

- Go to the OC-Apache-A 10.1.1.18:8080 browser tab and refresh. The web page should fail to load.

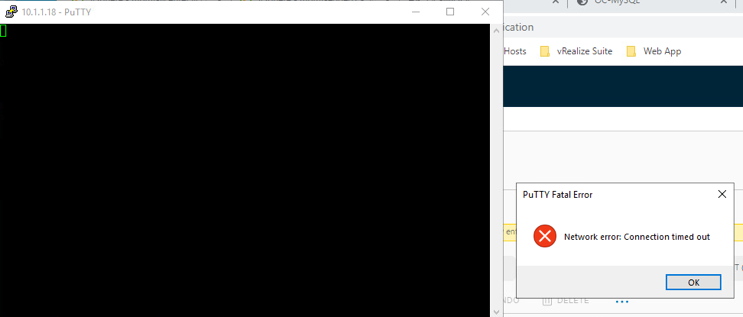

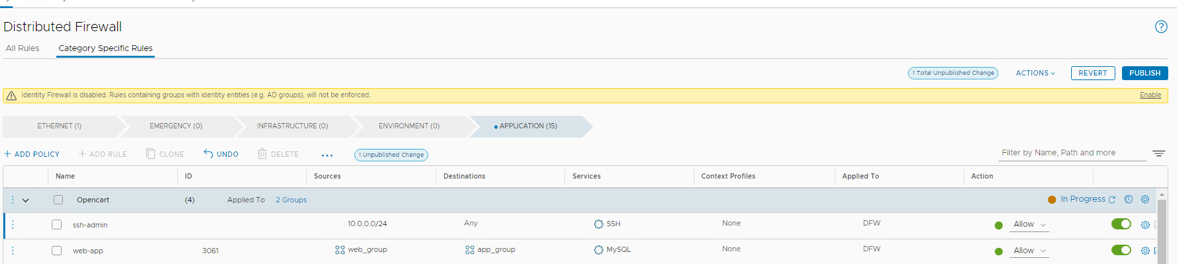

Step 8: Implement ssh rule

This step implements a rule that allows SSH connections to our Apache and MySQL VMs, but only from our internal admin network (10.0.0.0/24)

- Attempt to SSH to OC-Apache-A. Click on PuTTY and connect to 10.1.1.18

- Your connection should fail

- Return to the NSX tab

- Click on the three dots to the left of the Opencart policy and Add Rule

- Name the rule ssh-admin


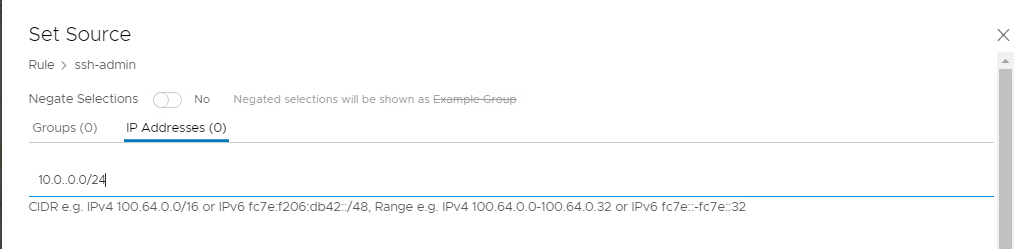

- Click the pencil icon for Sources, select IP Addresses, enter 10.0.0.0/24, then click Apply

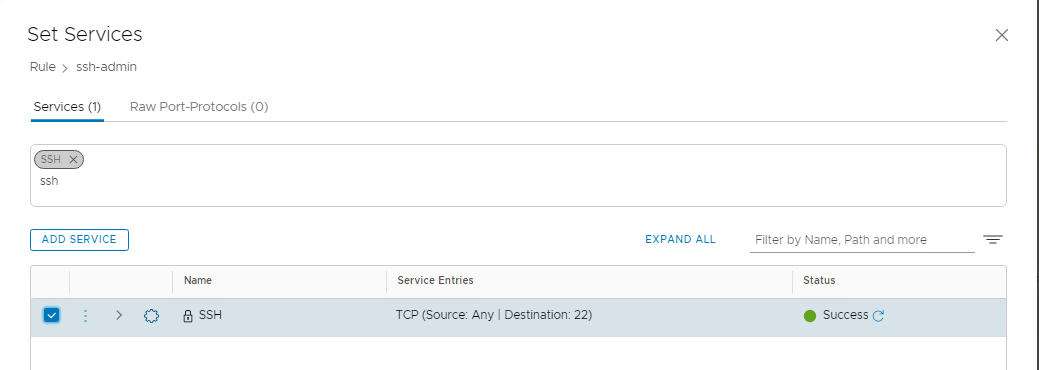

- Click on the pencil for Services. Select SSH, then click Apply

- Publish your new rule

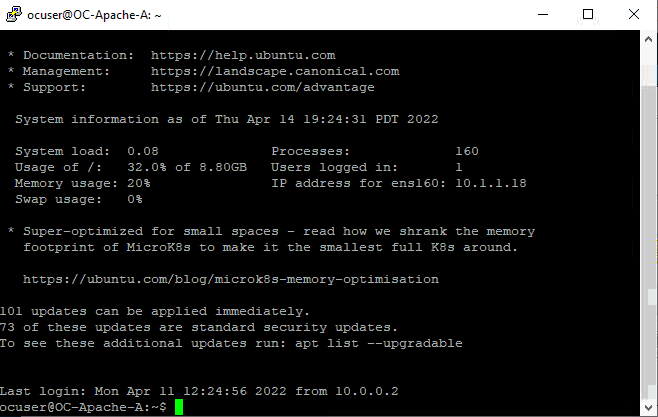

- Attempt to SSH to OC-Apache-A. Click on PuTTY and connect to 10.1.1.18

- Your connection should succeed.

- Login as ocuser, password VMware123!

- Attempt to ssh from OC-Apache-A to OC-Apache-B 10.1.1.19

- Your connection should be refused

- Observe that SSH is now blocked between the web servers but allowed from our admin network to the web servers.
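The ssh-admin source 10.0.0.0/24 matches every address whose first 24 bits are 10.0.0, that is, 10.0.0.0 through 10.0.0.255. That is why SSH from the Holo-Console on the admin network is allowed, while SSH from OC-Apache-A (10.1.1.18) falls through to Deny-All-Inbound. The standard-library sketch below shows the membership test; 10.0.0.99 is a hypothetical Holo-Console address, and `ssh_admin_allows` is a hypothetical helper.

```python
import ipaddress

ADMIN_NET = ipaddress.ip_network("10.0.0.0/24")


def ssh_admin_allows(src: str) -> bool:
    """True if src falls inside the ssh-admin rule's source network."""
    return ipaddress.ip_address(src) in ADMIN_NET


# 10.0.0.99 is a hypothetical Holo-Console address; the others are lab VMs
for src in ("10.0.0.99", "10.1.1.18", "10.1.1.19"):
    print(src, "allowed" if ssh_admin_allows(src) else "falls through to Deny-All-Inbound")

print(ADMIN_NET.num_addresses, ADMIN_NET[0], ADMIN_NET[-1])  # 256 10.0.0.0 10.0.0.255
```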

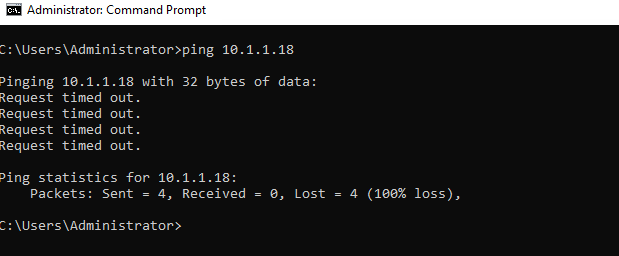

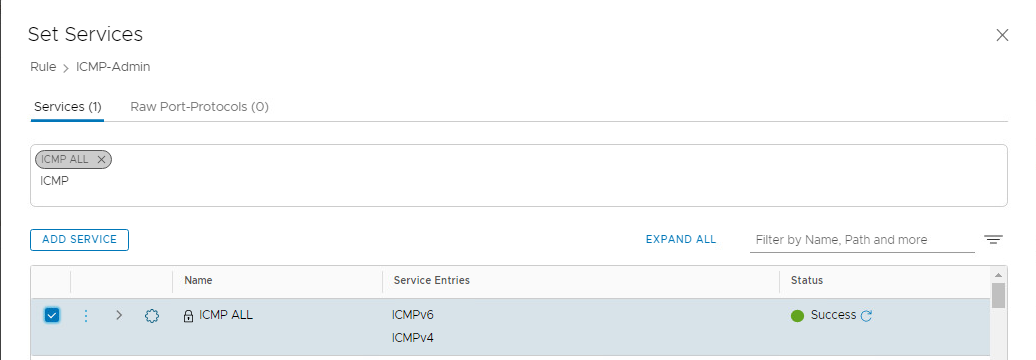

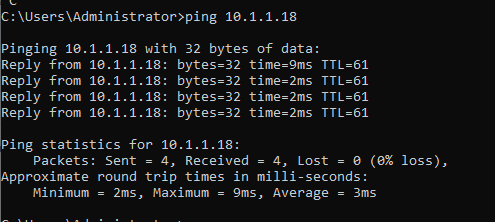

Step 9: Implement ICMP-Admin rule

This step implements a rule that allows ICMP (ping) to our Apache and MySQL VMs, but only from our internal admin network (10.0.0.0/24), because ping is commonly used to discover reachable hosts in many security threat scenarios.

- Open a Command Prompt on the Windows desktop by clicking the Windows Start icon -> Windows System -> Command Prompt

- Attempt to ping OC-Apache-A 10.1.1.18. The ping should fail

- On the NSX Manager tab, go to Security -> Distributed Firewall

- Click on the 3 dots next to Opencart and Add New Rule

- Name the rule ICMP-Admin


- Click the pencil icon for Sources, select IP Addresses, enter 10.0.0.0/24, then click Apply

- Click on the pencil for Services. Select ICMP-ALL, then click Apply

- Publish your new rule

- Return to your Windows command prompt

- Attempt to ping OC-Apache-A 10.1.1.18. The ping should succeed

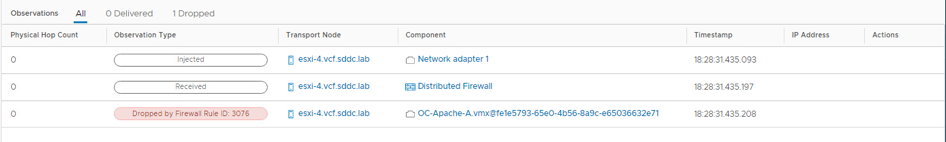

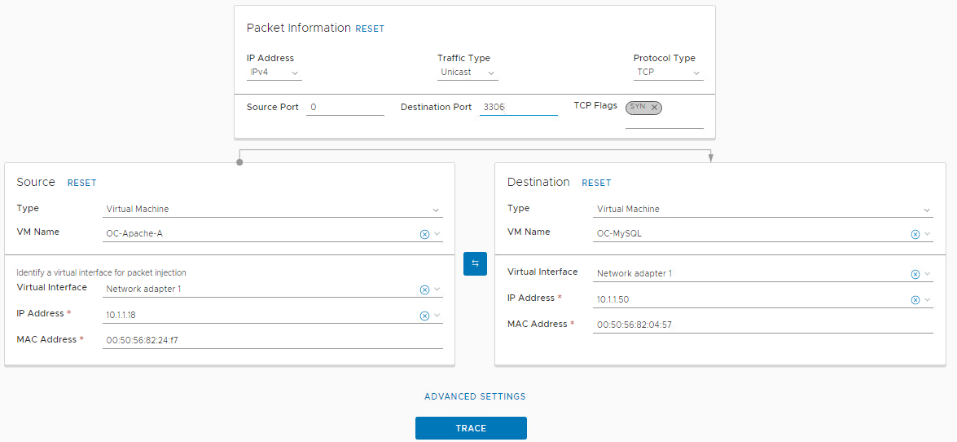

- From the NSX-T Manager interface click the Plan & Troubleshoot tab

- Click Traceflow in the left navigation panel

- Configure Traceflow from OC-Apache-A to OC-MySQL and click Trace

- Traceflow should show the ICMP packet dropped at the first firewall point (OC-Apache-A) before being placed on the segment

- Review the Observations panel and notice that the packet was dropped at OC-Apache-A, between the VM NIC and OC-Web-Segment

- On the Traceflow screen, click EDIT to reconfigure the trace then click Proceed on the warning pop up

- Change Protocol Type from ICMP to TCP, with Destination Port 3306

- Click Trace. Your Traceflow should succeed

- In the Observations panel, review the following

- One packet was delivered

- The packet was injected at the network adapter for OC-Apache-A virtual machine

- It is then received at the distributed firewall at the VDS port for OC-Apache-A

- With no rule blocking, the packet is then forwarded on from the sending VDS port

- The packet then hits OC-T1 router and gets forwarded to the OC-DB-Segment

- Since OC-Apache-A and OC-MySQL are running on different ESXi hosts, notice the physical hop between the host TEPs

- The packet is then received on the distributed firewall at the receiving VDS port for OC-MySQL

- With no rule blocking forwarding, the packet is then forwarded to the destination, the last step shows the packet being delivered to the network adapter for the OC-MySQL VM

- Notice the flexibility of Traceflow to allow us to troubleshoot our distributed firewall and distributed routing using appropriate communications protocols.

[Lab 2 Summary]

Lab 2 shows the power of the distributed firewall capability in NSX. Using tagging and grouping, we created a scalable set of rules for our OpenCart application that allows only the communication the application needs while blocking all other traffic. All of this was enforced directly at the vSphere VDS switch port level rather than on a piece of hardware elsewhere in the datacenter.

Module 3: Load Balancing

This module adds a load balancer for HTTP traffic.

Lab 1: Configure load balancer

This lab configures a Layer 4-7 load balancer on the NSX Tier-1 router created in Module 1.

Step 1: Configure OC-T1 to run on an Edge Cluster

To support stateful services such as a load balancer or gateway firewall, we need to configure OC-T1 with a Service Router (SR). This simply means associating OC-T1 with our existing NSX Edge cluster in the management domain.

- Open a new tab in the Chrome browser

- Click the Management NSX-T shortcut in the bookmark bar (click advanced / proceed to nsx-mgmt.vcf.sddc.lab, if required to accept the certificate)

- Log into NSX Manager as user: admin with the password: VMware123!VMware123!

- Navigate to Networking then click on Tier-1 Gateways

- Click the 3 dots next to OC-T1 and select edit

- Click on Select Edge Cluster and select EC-01

- Click Save

- Click Close Editing

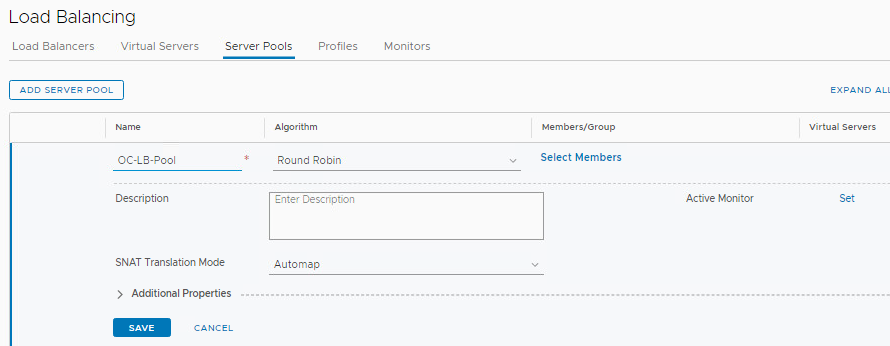

Step 2: Create Server Pool

A server pool is a set of servers that host the same content and share the incoming load.

- In NSX Manager, navigate to Networking and click on Load Balancing

- Then click the Server Pools tab and click Add Server Pool

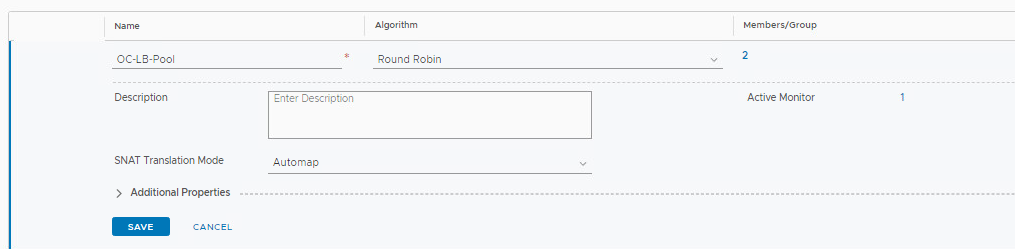

- Name the pool OC-LB-Pool

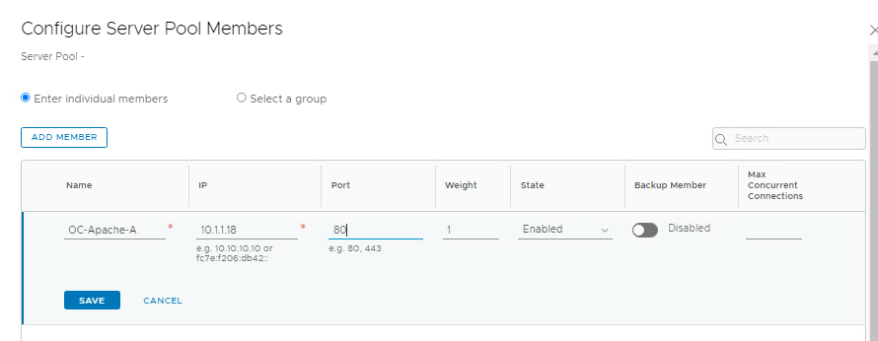

- Click Select Members

- Click Add Member

- Name OC-Apache-A, IP 10.1.1.18 Port 80

- Click Save

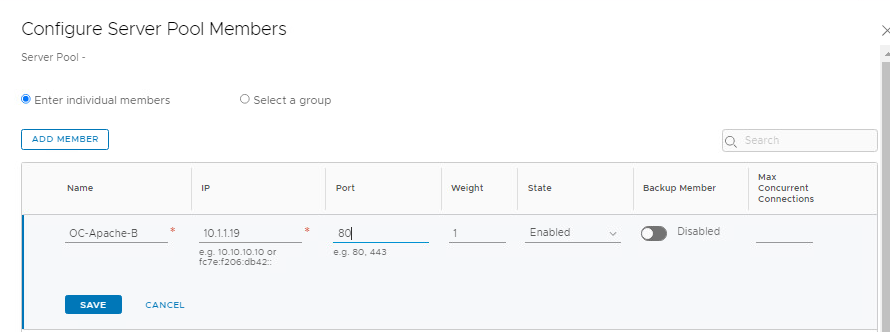

- Repeat steps for OC-Apache-B, using:

- Name OC-Apache-B, IP 10.1.1.19 Port 80

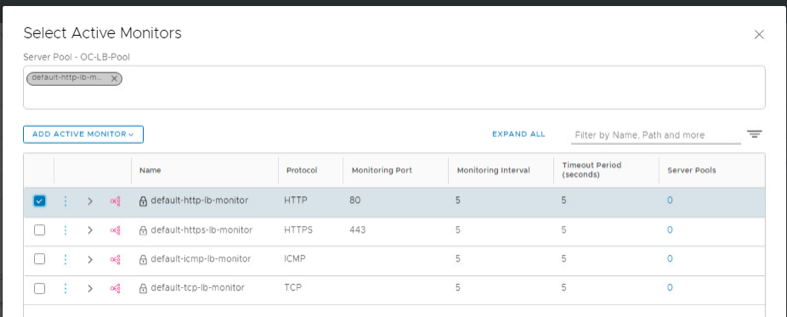

- Click Set next to Active Monitor

- Select the default HTTP Port 80 monitor, then click Apply

- Click Save
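To make the pool's behavior concrete, the toy Python model below places the two members behind a round-robin selector with an active health monitor. It is an illustrative sketch, not NSX code. It assumes round-robin selection (the lab does not change the pool's algorithm), and the `fall_count`/`rise_count` values of 3 are hypothetical, not quoted NSX defaults. With a real monitor, detection time is roughly the probe interval multiplied by the fall count.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Member:
    name: str
    ip: str
    port: int = 80
    up: bool = True
    fails: int = 0   # consecutive failed health probes
    passes: int = 0  # consecutive successful health probes


class Pool:
    """Round-robin pool with a simplified active health monitor (illustrative only)."""

    def __init__(self, members: List[Member], fall_count: int = 3, rise_count: int = 3):
        self.members = members
        self.fall_count = fall_count  # hypothetical value, not an NSX default
        self.rise_count = rise_count  # hypothetical value, not an NSX default
        self._next = 0

    def record_probe(self, member: Member, ok: bool) -> None:
        """Mark a member down after fall_count straight failures, up after rise_count straight successes."""
        if ok:
            member.passes, member.fails = member.passes + 1, 0
            if not member.up and member.passes >= self.rise_count:
                member.up = True
        else:
            member.fails, member.passes = member.fails + 1, 0
            if member.up and member.fails >= self.fall_count:
                member.up = False

    def pick(self) -> Member:
        """Return the next healthy member in round-robin order."""
        for _ in range(len(self.members)):
            member = self.members[self._next % len(self.members)]
            self._next += 1
            if member.up:
                return member
        raise RuntimeError("no healthy members in pool")


pool = Pool([Member("OC-Apache-A", "10.1.1.18"), Member("OC-Apache-B", "10.1.1.19")])
print([pool.pick().name for _ in range(4)])  # alternates OC-Apache-A, OC-Apache-B

apache_a = pool.members[0]
for _ in range(pool.fall_count):  # apache2 stopped on OC-Apache-A: its probes fail
    pool.record_probe(apache_a, ok=False)
print([pool.pick().name for _ in range(3)])  # OC-Apache-B only
```

Feeding `rise_count` successful probes for OC-Apache-A back into `record_probe` returns it to rotation, which is the recovery behavior tested in Step 5.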

Step 3: Create Load Balancer

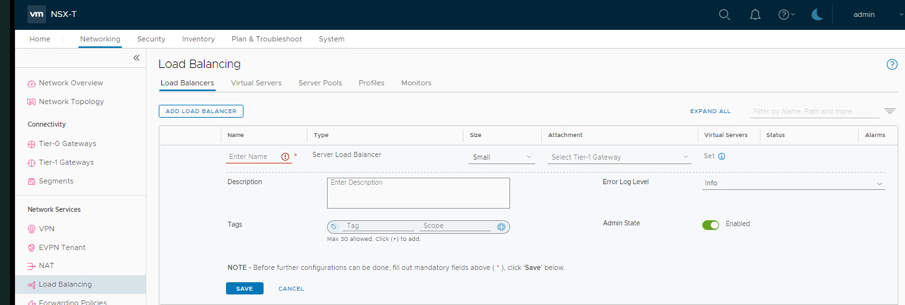

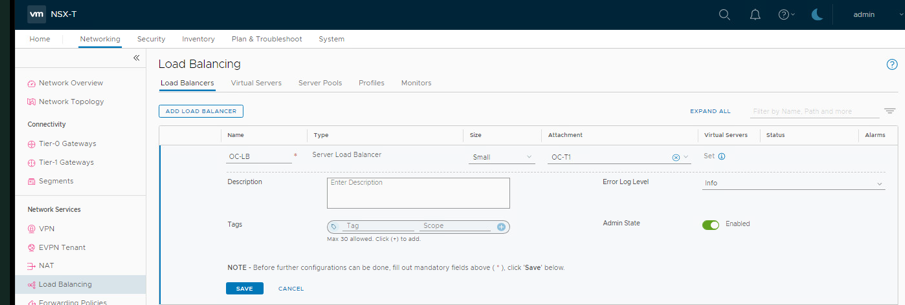

- Navigate to Networking and click Load Balancing

- Click Add Load Balancer

- Name the Load Balancer OC-LB. On Attachment select OC-T1

- Click Save

- When prompted “Want to continue configuring this Load Balancer?”, select No

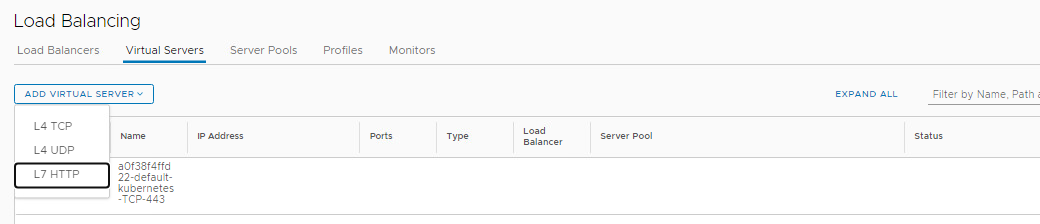

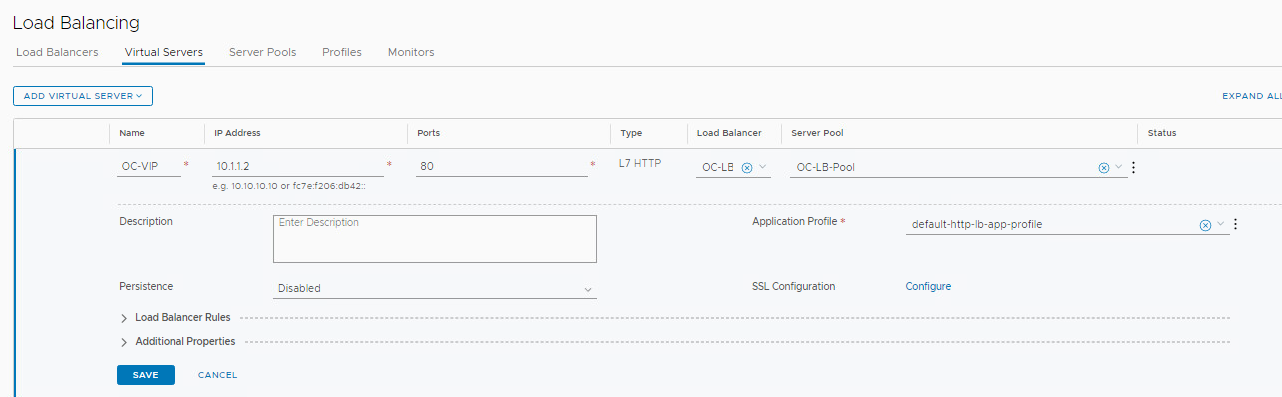

Step 4: Create Virtual Servers

A virtual server is an IP address and port (the VIP) that acts as the front end for a server pool

- Navigate to Networking and click on Load Balancing

- Click the Virtual Servers tab and then Add Virtual Server, L7 HTTP

- Name the Virtual Server OC-VIP, IP Address 10.1.1.2, Port 80

- Type OC in the Load Balancer field and it will allow you to select OC-LB

- Type OC in the Server Pool field and select OC-LB-Pool

- Click on SAVE

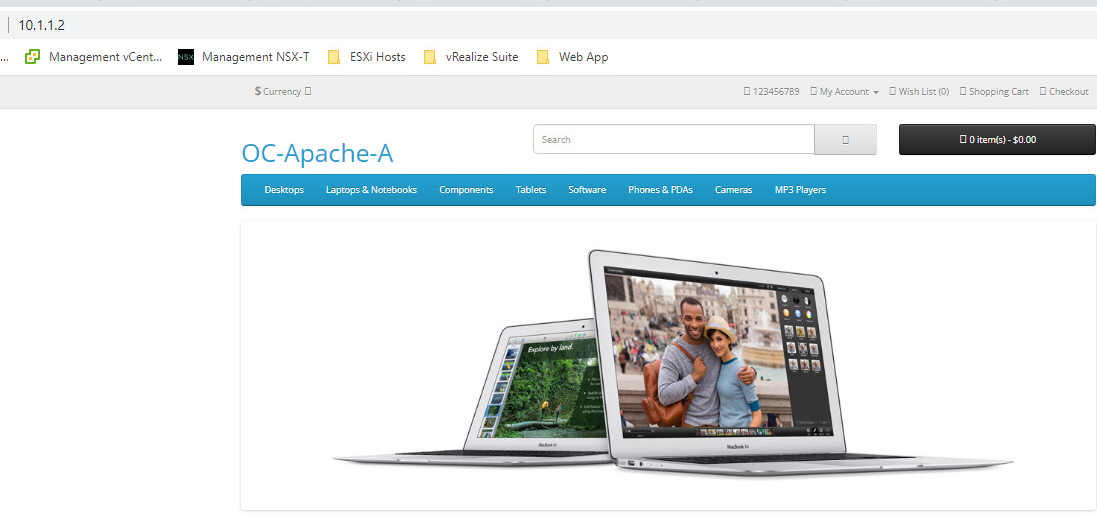

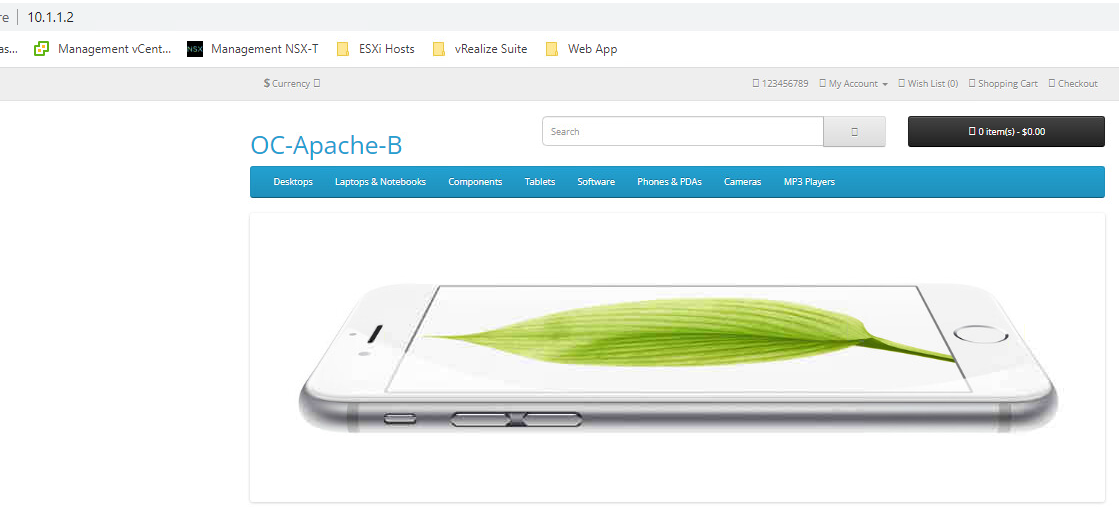

Step 5: Test Load Balancer

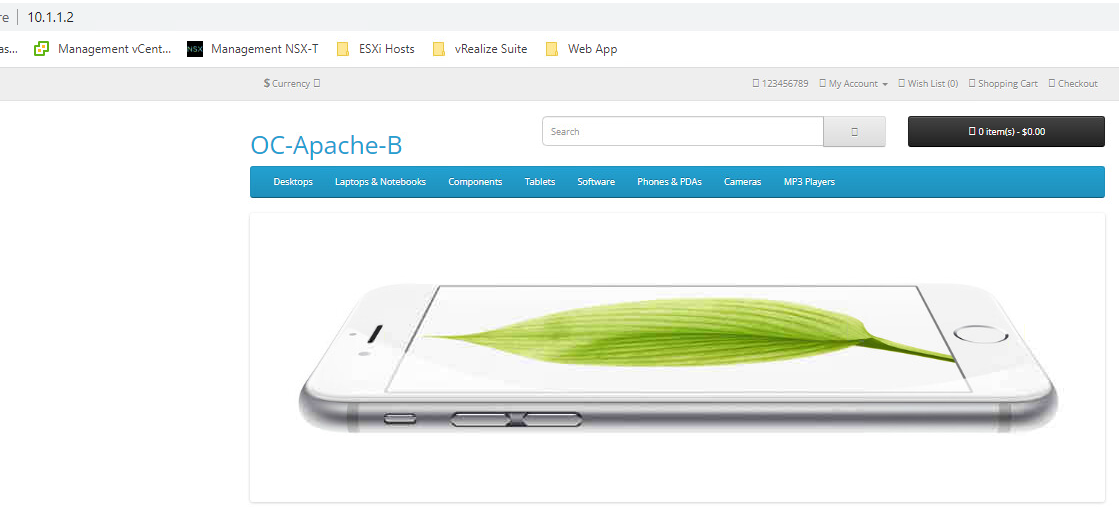

- Open a new browser tab to 10.1.1.2 and note which web server's page is displayed

- Refresh the browser for this tab. You should see the other web server

- Open a PuTTY session to OC-Apache-A, login ocuser, password VMware123!

- Run the command sudo systemctl stop apache2

- Wait approximately 30 seconds

- Refresh the browser for the 10.1.1.2 tab several times in a row. You should see only the OC-Apache-B web page, because the active monitor detects the failure of OC-Apache-A and stops sending traffic to it

- Return to the OC-Apache-A PuTTY session and run the command sudo systemctl start apache2

- Wait approx. 30 seconds

- Refresh the browser for the 10.1.1.2 tab several times in a row. You should see both the OC-Apache-A and OC-Apache-B web pages again, because the active monitor detects that OC-Apache-A has recovered and returns it to the pool
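The same refresh test can be scripted. The Python sketch below, which uses only the standard library, requests the OC-VIP address several times and counts which web server's name appears in each response. The function names are hypothetical, and it assumes each page embeds the serving host name, as in the earlier application test.

```python
import urllib.request
from collections import Counter
from typing import Iterable

VIP_URL = "http://10.1.1.2/"  # OC-VIP virtual server
HOSTS = ("OC-Apache-A", "OC-Apache-B")


def tally(pages: Iterable[str]) -> Counter:
    """Count which web server's host name appears in each page body."""
    counts: Counter = Counter()
    for html in pages:
        host = next((h for h in HOSTS if h in html), "unknown")
        counts[host] += 1
    return counts


def poll_vip(n: int = 10, timeout: float = 5.0) -> Counter:
    """Fetch the VIP n times and tally the serving hosts."""
    pages = []
    for _ in range(n):
        # urllib opens a new connection per request, so the load balancer can choose a member each time
        with urllib.request.urlopen(VIP_URL, timeout=timeout) as resp:
            pages.append(resp.read().decode("utf-8", errors="replace"))
    return tally(pages)
```

With both members healthy, `poll_vip()` should report both host names; after apache2 is stopped on OC-Apache-A and the monitor has marked it down, only OC-Apache-B should appear.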

[Lab 1 Summary]

Lab 1 shows how quickly a load balancer can be instantiated on the NSX Tier-1 router.


Default IP Addresses

The following is a quick reference for the various network addresses used within this document.

- Default VCOMS internal networks (Site-1)

- 10.0.0.0/24 - Management

- 10.0.4.0/24 – vMotion

- 10.0.8.0/24 – vSAN

- 10.1.0.0/16 – OpenCart supernet

- 10.50.0.0/24 – AVN Region segment

- 10.60.0.0/24 – AVN X-Region segment

- 10.70.0.0/24 – Tanzu Ingress

- 10.80.0.0/24 – Tanzu Egress

- 172.27.11.0/24 – Edge TEP

- 172.27.12.0/24 – Edge Uplink 1

- 172.27.13.0/24 – Edge Uplink 2

- 172.16.254.0/24 – Host TEP/DHCP
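An address plan like this is easy to sanity-check with Python's `ipaddress` module. With strict parsing, an entry whose host bits are set (such as 10.0.0.4/24) is rejected, and overlapping ranges can be detected pairwise. The sketch below assumes the vMotion, vSAN, and host TEP networks are 10.0.4.0/24, 10.0.8.0/24, and 172.16.254.0/24; the helper names are hypothetical.

```python
import ipaddress
from itertools import combinations

# Site-1 networks (vMotion, vSAN and Host TEP assumed to be 10.0.4.0/24, 10.0.8.0/24, 172.16.254.0/24)
PLAN = {
    "Management": "10.0.0.0/24",
    "vMotion": "10.0.4.0/24",
    "vSAN": "10.0.8.0/24",
    "OpenCart supernet": "10.1.0.0/16",
    "AVN Region segment": "10.50.0.0/24",
    "AVN X-Region segment": "10.60.0.0/24",
    "Tanzu Ingress": "10.70.0.0/24",
    "Tanzu Egress": "10.80.0.0/24",
    "Edge TEP": "172.27.11.0/24",
    "Edge Uplink 1": "172.27.12.0/24",
    "Edge Uplink 2": "172.27.13.0/24",
    "Host TEP/DHCP": "172.16.254.0/24",
}


def parse_plan(plan):
    # strict=True raises ValueError if host bits are set, e.g. "10.0.0.4/24"
    return {name: ipaddress.ip_network(cidr, strict=True) for name, cidr in plan.items()}


def overlaps(nets):
    """Return every pair of named networks whose ranges overlap."""
    return [(a, b) for (a, na), (b, nb) in combinations(nets.items(), 2) if na.overlaps(nb)]


nets = parse_plan(PLAN)
print("Overlapping ranges:", overlaps(nets))  # Overlapping ranges: []
# The OpenCart VMs and the load balancer VIP should all sit inside the OpenCart supernet
for ip in ("10.1.1.2", "10.1.1.18", "10.1.1.19", "10.1.1.50"):
    assert ipaddress.ip_address(ip) in nets["OpenCart supernet"], ip
```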

Default Account Information

The following is a quick reference for the account information that is used within this document.

Links to the UIs for the various components are present as bookmarks in Chrome on the Holo-Console.

- SDDC Manager

- Username: administrator@vsphere.local

- Password: VMware123!

- vCenter Server Admin Console

- Username: root

- Password: VMware123!

- vSphere Web Client

- Username: administrator@vsphere.local

- Password: VMware123!

- VMware NSX Manager

- Username: admin

- Password: VMware123!VMware123!

- vRealize Operations Manager

- Username: admin

- Password: VMware123!

- vRealize Automation Cloud Assembly

- Username: configadmin

- Password: VMware123!

- Windows Console (Jump Host)

- Username: administrator

- Password: VMware123!

- Opencart Apache and MySQL VMs

- Username: ocuser

- Password: VMware123!
