DataStax Enterprise on VMware Cloud Foundation

Executive Summary

Business Case

Organizations in every industry rely on big data applications to underpin scalable services. Whether it is transactional data for banking or inventory and recommendation systems in retail, Apache Cassandra is the de facto choice for storing critical data in distributed applications.

Organizations are betting their growth on these technologies, and they want to ensure that the full application stack can be easily managed with secure and repeatable deployments in any environment.

VMware Cloud Foundation™ is a hybrid cloud platform designed for running both traditional enterprise applications and modern applications. It is built on the proven and comprehensive software-defined VMware® stack, including vSphere with Kubernetes, VMware vSAN™, VMware NSX-T Data Center™, and VMware vRealize® Suite. Cloud Foundation provides a complete set of software-defined services for compute, storage, network security, Kubernetes management, and cloud management. The result is agile, reliable, efficient cloud infrastructure that offers consistent operations across private and public clouds.

DataStax Enterprise is a scale-out data infrastructure for enterprises that need to handle any workload in any cloud. Built on Apache Cassandra™, DataStax adds operational reliability hardened by the largest internet apps and the Fortune 100. With DataStax, your applications scale reliably and effortlessly, whether it is enormous growth, handling mixed workloads, or enduring catastrophic failure.

VMware and DataStax have partnered to offer a joint solution that provides built-in high availability and failure protection across availability zones, regions, data centers, and clouds. The solution simplifies operations and eliminates silos with consistent processes and tooling across clouds, and it offers intrinsic security for data at rest and in flight.

Purpose

This white paper demonstrates how a VMware Cloud Foundation workload domain with NSX-T serves as the infrastructure for DataStax Enterprise application workloads, delivering predictable and consistent performance and business continuity.

Scope

The white paper covers the following scenarios:

Baseline Performance Test

The test purpose is to ensure performance consistency and stability for DataStax Enterprise running on VMware Cloud Foundation.

- Baseline performance of a 90% write, 10% read workload on a DataStax Enterprise cluster placed across multiple vSphere clusters, with IOPS and latency charts collected by NoSQLBench.

- Performance test of a 50% write, 50% read workload, with IOPS and latency charts collected by NoSQLBench.

Based on the test results, we select the workload thread count to use in the failure testing.

Resiliency Test – DataStax Enterprise Business Continuity Demonstration

This test showcases how vSAN resilience protects DataStax Enterprise application workload continuity and stability. It also validates VMware vSphere® HA for fast VM failover and DRS for workload rebalancing.

- Single capacity disk failure: simulate a single capacity disk failure to demonstrate vSAN resilience. The DSE workload sees minimal impact.

- Single cache disk failure: simulate a single cache disk failure to demonstrate vSAN resilience. The DSE workload incurs only a slight degradation.

- Single host failure: simulate a single host failure to demonstrate vSAN resilience. The DSE workload incurs a slight degradation but recovers soon after the host comes back. DRS triggers VM migration back to the recovered host. The test includes the effect of a DSE node residing on the failed host.

- Single rack failure: simulate a single rack failure to demonstrate that DSE rack awareness, aligned with the vSAN clusters, still provides application availability when a whole cluster is down.

Audience

This paper is intended for DataStax Enterprise administrators and storage architects involved in planning, designing, or administering DataStax Enterprise on vSAN for production purposes.

Technology Overview

The solution technology components are listed below:

- VMware Cloud Foundation

- VMware vSphere

- VMware vSAN

- VMware NSX Data Center

- DataStax Enterprise

VMware Cloud Foundation

VMware Cloud Foundation is an integrated software stack that bundles compute virtualization (VMware vSphere), storage virtualization (VMware vSAN), network virtualization (VMware NSX), and cloud management and monitoring (VMware vRealize Suite) into a single platform that can be deployed on premises as a private cloud or run as a service within a public cloud. This documentation focuses on the private cloud use case. VMware Cloud Foundation helps to break down the traditional administrative silos in data centers, merging compute, storage, network provisioning, and cloud management to facilitate end-to-end support for application deployment.

VMware vSphere

VMware vSphere is the next-generation infrastructure for next-generation applications. It provides a powerful, flexible, and secure foundation for business agility that accelerates the digital transformation to cloud computing and promotes success in the digital economy. vSphere 6.7 supports both existing and next-generation applications through its:

- Simplified customer experience for automation and management at scale

- Comprehensive built-in security for protecting data, infrastructure, and access

- Universal application platform for running any application anywhere

With VMware vSphere, customers can run, manage, connect, and secure their applications in a common operating environment, across clouds and devices.

VMware vSAN

VMware vSAN is the industry-leading software powering VMware’s software defined storage and HCI solution. vSAN helps customers evolve their data center without risk, control IT costs and scale to tomorrow’s business needs. vSAN, native to the market-leading hypervisor, delivers flash-optimized, secure storage for all of your critical vSphere workloads, and is built on industry-standard x86 servers and components that help lower TCO in comparison to traditional storage. It delivers the agility to easily scale IT and offers the industry’s first native HCI encryption.

vSAN simplifies day-1 and day-2 operations, and customers can quickly deploy and extend cloud infrastructure and minimize maintenance disruptions. Stateful containers orchestrated by Kubernetes can leverage storage exposed by vSphere (vSAN, VMFS, NFS) while using standard Kubernetes volume, persistent volume, and dynamic provisioning primitives.

VMware NSX Data Center

VMware NSX Data Center is the network virtualization and security platform that enables the virtual cloud network, a software-defined approach to networking that extends across data centers, clouds, and application frameworks. With NSX Data Center, networking and security are brought closer to the application wherever it is running, from virtual machines to containers to bare metal. Like the operational model of VMs, networks can be provisioned and managed independent of underlying hardware. NSX Data Center reproduces the entire network model in software, enabling any network topology—from simple to complex multitier networks—to be created and provisioned in seconds. Users can create multiple virtual networks with diverse requirements, leveraging a combination of the services offered via NSX or from a broad ecosystem of third-party integrations ranging from next-generation firewalls to performance management solutions to build inherently more agile and secure environments. These services can then be extended to a variety of endpoints within and across clouds.

DataStax Enterprise

DataStax Enterprise is a scale-out data infrastructure for enterprises that need to handle any workload in any cloud. Built on Apache Cassandra™, DataStax adds operational reliability hardened by the largest internet apps and the Fortune 100. DataStax Enterprise 6.8 supports more NoSQL workloads, from graph to search and analytics, and improves user productivity with Kubernetes and APIs, all in a unified security model. With DataStax, your applications scale reliably and effortlessly, whether it is enormous growth, handling mixed workloads, or enduring catastrophic failure. DataStax also recently launched its cloud offering, DataStax Astra, a cloud-native Cassandra-as-a-Service built on Apache Cassandra. With DataStax Astra, users and enterprises can simplify cloud-native Cassandra application development and reduce deployment time from weeks to minutes.

Check out more information about DataStax Enterprise at https://www.datastax.com/.

Testing and Monitoring Tools

Monitoring tools

- vSAN performance services: vSAN Performance Service is used to monitor the performance of the vSAN environment, using the vSphere web client. The performance service collects and analyzes performance statistics and displays the data in a graphical format. You can use the performance charts to manage your workload and determine the root cause of problems.

- DataStax OpsCenter: DataStax OpsCenter is an easy-to-use visual management and monitoring solution for DataStax Enterprise. With OpsCenter, you can quickly provision, upgrade, monitor, back up, restore, and manage your DSE clusters with little to no expertise.

- NoSQLBench integrated Grafana: Grafana is a multi-platform open source solution for running data analytics, pulling up metrics that make sense of the massive amount of data, and monitoring apps through customizable dashboards. Available since 2014, the interactive visualization software provides charts, graphs, and alerts when the service is connected to the supported data sources.

Benchmark tools

- Workload testing with NoSQLBench: NoSQLBench is an open-source performance testing tool for the NoSQL ecosystem: http://docs.nosqlbench.io/#/docs/

Solution Configuration

This section introduces the resources and configurations.

System Configuration

The following sections describe how the solution components are configured.

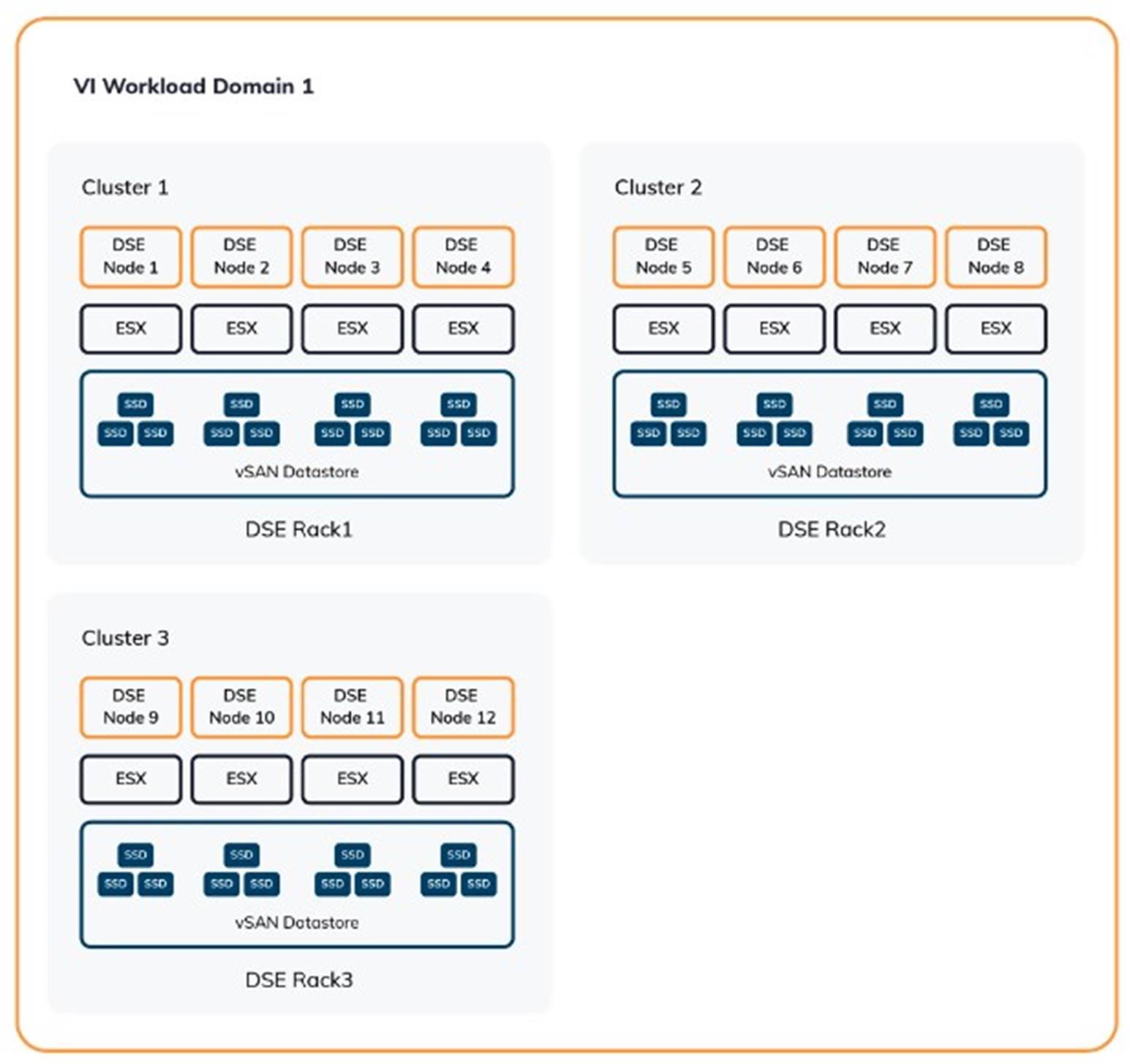

Due to hardware limitations, a 12-node DataStax Enterprise cluster is deployed on three 4-node vSAN clusters in the workload domain.

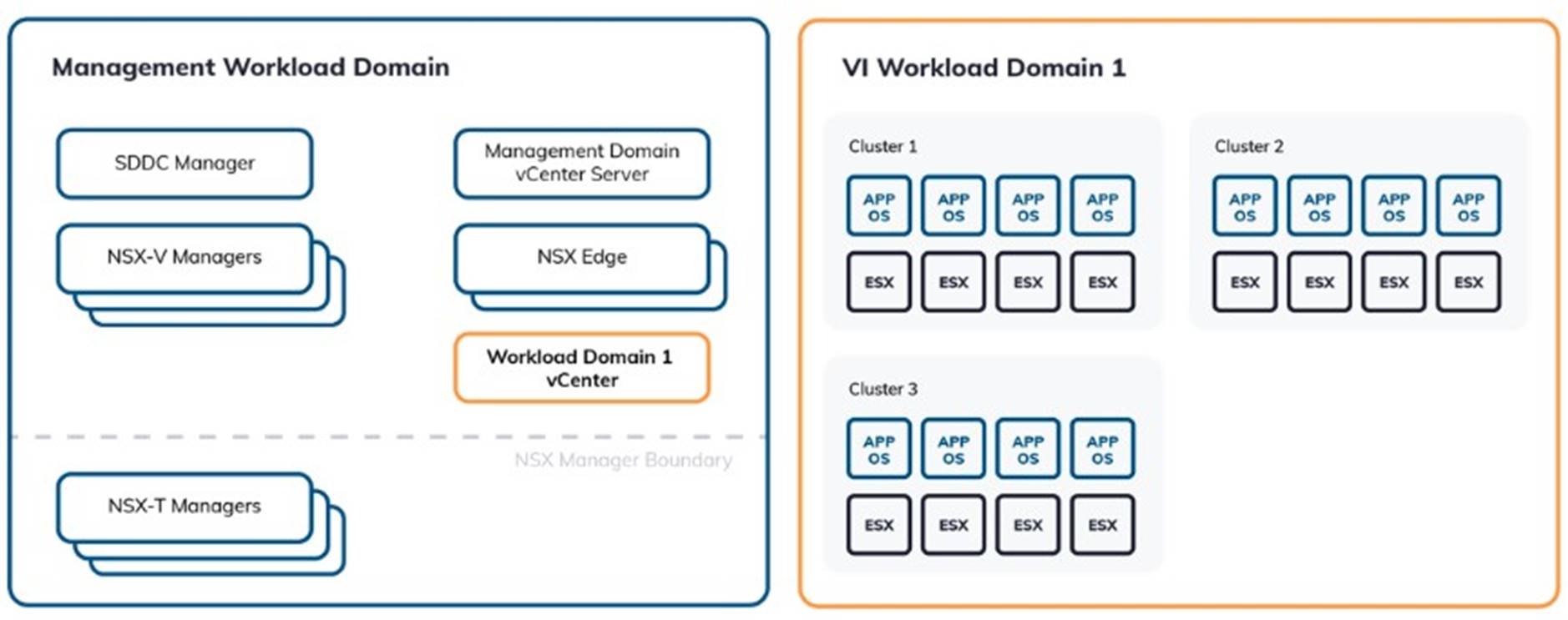

As Figure 1 shows, DataStax Enterprise is deployed on VI workload domain 1, which has three clusters of four hosts each with NSX-T. The management domain is shared.

FIGURE 1. Workload Domain in VMware Cloud Foundation

FIGURE 2. Solution Architecture

Server Configuration

Each vSAN ESXi Server in the vSAN Cluster has the following configuration.

TABLE 1. Server Configuration

| PROPERTY | SPECIFICATION |
|---|---|
| Server | DELL R630 |
| CPU and cores | 2 sockets, 12 cores each of 2.3GHz with hyper-threading enabled |
| RAM | 256GB |
| Network adapter | 2x10Gb NIC |
| Storage adapter | SAS Controller Dell LSI PERC H730 Mini |
| Disks | Cache-layer SSD: 2 x 1.6 TB SSD, Capacity-layer SSD: 8 x 80 GB SSD |

Software Configuration

TABLE 2. Software Configuration

| SOFTWARE | VERSION | PURPOSE |
|---|---|---|
| VMware vCenter Server and ESXi | 7.0 | ESXi Cluster to host virtual machines and provide vSAN Cluster. VMware vCenter Server provides a centralized platform for managing VMware vSphere environments. |
| VMware Cloud Foundation | 4.0 | Hybrid cloud platform |
| NSX-T | 2.5 | NSX datacenter |
| DataStax Enterprise | 6.7.7 | DataStax Enterprise 6.7.7 (also applies to 6.8) |
| Ubuntu | 16.04 | Ubuntu 16.04 is used as the guest operating system of all DataStax Enterprise virtual machines. |
| NoSQLBench | 3.12.67 | NoSQLBench is an open source benchmark tool developed by DataStax for benchmarking and load testing a Cassandra cluster. |

Virtual Machine Configuration

TABLE 3. DataStax Enterprise VM Configuration

| VM ROLE | INSTANCE | vCPU | MEMORY | VIRTUAL DISK | OPERATING SYSTEM |
|---|---|---|---|---|---|
| DataStax Enterprise | 12 | 8 | 54 GB | OS Disk: 10 GB, Data Disk: 2 TB, Log Disk: 100 GB | Ubuntu 16.04 |

Best practices:

- We used different virtual disks for the DataStax Enterprise data directory and the log directory. If the components of DataStax Enterprise VMs with one data disk are not fully distributed across the vSAN datastore, customers can use multiple virtual disks for the data directory to make full use of the physical disks.

- We configured a separate storage cluster on the hybrid management cluster to avoid any performance impact on the tested DataStax Enterprise cluster.

- We followed the recommended production settings for optimizing a DataStax Enterprise installation on Linux.

Test Client Configuration

TABLE 4. Test Client VM Configuration

| TEST CLIENT VM ROLE | INSTANCE | vCPU | MEMORY | VIRTUAL DISK | OPERATING SYSTEM |
|---|---|---|---|---|---|
| NoSQLBench | 1 | 12 | 32 GB | 40 GB | Ubuntu 18.04 |

VMware vSAN Cluster Configuration

A 4-node VMware vSAN cluster is deployed to support the VMware Cloud Foundation management domain. Three 4-node vSAN clusters are deployed in a workload domain to support the DataStax Enterprise environment.

vSAN can set availability, capacity, and performance policies per virtual machine if the virtual machines are deployed on the vSAN datastore. We use the default storage policy as below for DataStax Enterprise VMs.

TABLE 5. vSAN Storage Policy Configuration

| STORAGE CAPABILITY | RAID 1 SETTING |
|---|---|
| Number of Failures to Tolerate (FTT) | 1 |
| Number of disk stripes per object | 2 |
| Flash read cache reservation | 0% |
| Object Space reservation | 0% |
| Disable object checksum | No |
| Failure tolerance method | Mirroring |
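To make the capacity implication of this policy concrete, the following is a minimal sketch of the worst-case raw capacity one DataStax Enterprise VM could consume under RAID 1 mirroring with FTT=1. It assumes 1 TB = 1024 GB and ignores witness components and VM swap or namespace objects; those simplifications and the function name are illustrative and not part of the tested configuration. Because object space reservation is 0%, actual consumption depends on how much data is written.

```python
# Sketch: worst-case raw vSAN capacity for one DSE VM under a mirroring policy.
# Assumptions (not from the paper): 1 TB = 1024 GB; witness components and
# swap/namespace objects are ignored.

def raw_capacity_gb(disk_sizes_gb, failures_to_tolerate=1):
    """Return the raw capacity used if every virtual disk were fully written.

    RAID 1 mirroring keeps (FTT + 1) full copies of each object.
    """
    copies = failures_to_tolerate + 1
    return sum(disk_sizes_gb) * copies

# Virtual disk sizes from Table 3: OS 10 GB, data 2 TB, log 100 GB.
dse_vm_disks_gb = [10, 2 * 1024, 100]

per_vm = raw_capacity_gb(dse_vm_disks_gb, failures_to_tolerate=1)
per_cluster = per_vm * 4  # four DSE VMs run on each 4-node vSAN cluster

print(f"per VM: {per_vm} GB, per vSAN cluster: {per_cluster} GB")
```

The same function can be called with a different `failures_to_tolerate` value to compare mirroring policies.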

Network Configuration

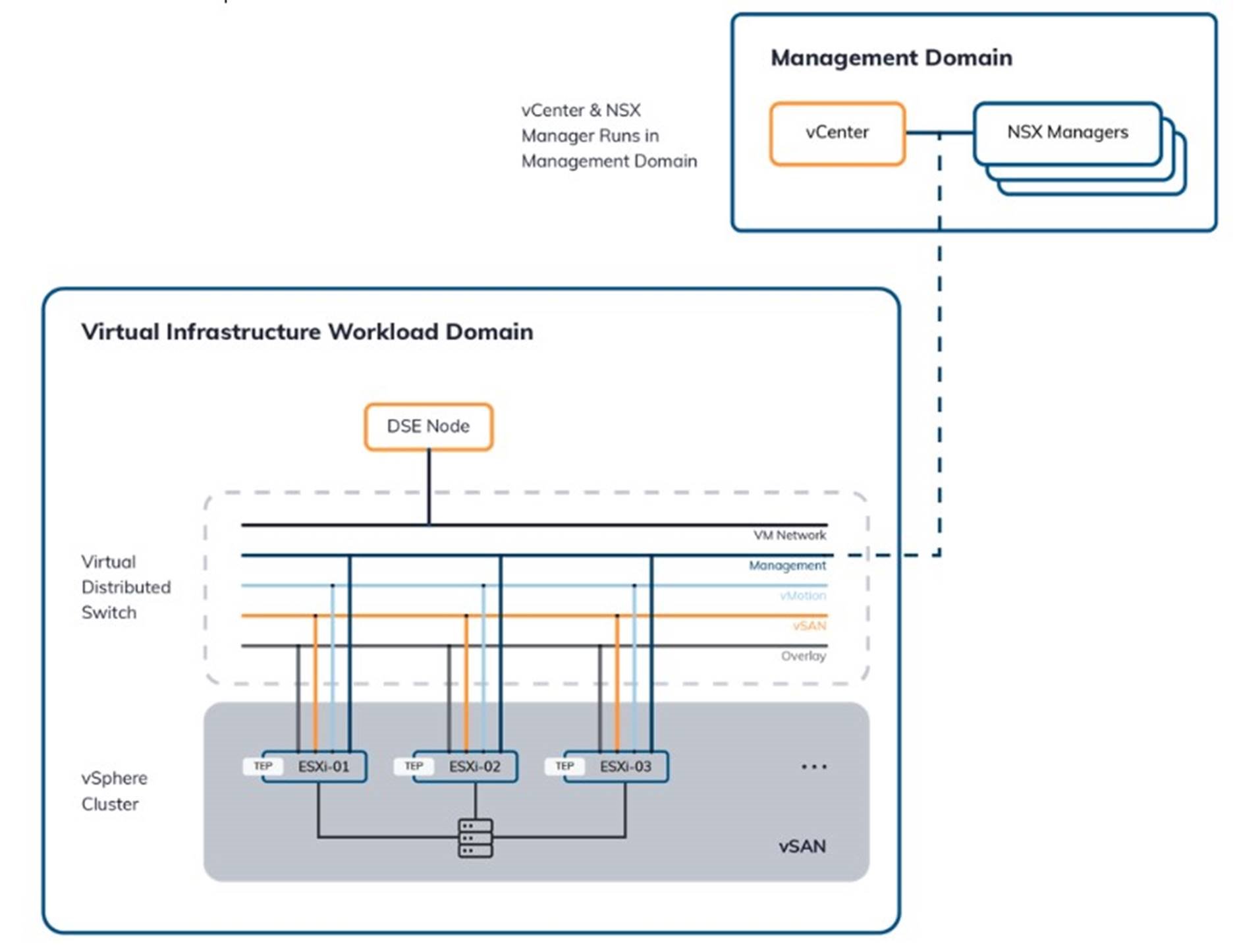

The NSX-T managers reside in the management domain. The application virtual machines use the VM network on an NSX-T segment. vSphere vMotion, vSAN, and VXLAN VTEP for NSX-T use dedicated VDS port groups.

FIGURE 3. Workload Domain Network Architecture

Solution Validation

Overview

We conducted an extensive validation to demonstrate VMware Cloud Foundation as an ideal cloud platform for globally distributed cloud applications for production environments.

Our goal was to select workloads that are typical of today’s modern applications, so we tested a typical IoT workload provided with NoSQLBench.

We used large data sets to simulate real-world cases, so that the data set on each node exceeded the RAM capacity. We loaded a base data set of at least 500 GB per node (we tested with a base data set of more than 845 GB per node). In addition, we used OpsCenter to monitor the vitals of the DataStax Enterprise cluster continuously.

In the previous DataStax Enterprise on vSAN joint solution, we already validated that the DataStax Enterprise cluster is robust and that the performance impact is negligible during normal operations such as removing or adding a DataStax Enterprise node. We also validated that the cluster scales in a near-linear fashion as nodes are added, without affecting latency.

This time we therefore ran baseline tests and focused on failure testing, the newly included NSX Data Center component for VM migration, and the multiple-cluster architecture that tolerates rack failure. We loaded the snapshot as the preloaded data set before each workload test. This solution included the following tests:

- Performance testing: to validate that the cluster functions as expected for typical workloads, with consistent performance and predictable latency in production environments.

- Resiliency and availability testing: to verify that vSAN’s storage-layer resiliency features, combined with DataStax Enterprise’s peer-to-peer design, enable this solution to meet the performance and data availability requirements of even the most demanding applications under predictable failure scenarios, with limited impact on application performance.

Baseline Performance

We did some baseline performance testing to validate the cluster functions as expected for typical workloads for performance consistency and predictable latency.

90% WRITE AND 10% READ PERFORMANCE

In this test, NoSQLBench randomly inserts and reads, running a 90% write and 10% read workload on the preloaded data set.

We initiated the test from a client VM.

During the test, the IOPS and latency were consistent from the Grafana view.

FIGURE 4. IOPS for 90% Write and 10% Read Workload

FIGURE 5. P99 Client Latency for 90% Write and 10% Read Workload

Throughput increased as the thread count increased. At thread=250, the average CPU usage was above 85% and peak CPU usage was over 90%. Table 6 lists the op rate and latency values for different thread counts.

TABLE 6. 90% Write and 10% Read Op Rate and Latencies

| THREAD | OP RATE (op/s) | LATENCY MEAN (us) | LATENCY MEDIAN (us) | LATENCY 95TH PERCENTILE (us) | LATENCY 99TH PERCENTILE (us) |
|---|---|---|---|---|---|
| 150 | 90,703 | 1,328 | 970 | 2,319 | 6,552 |
| 200 | 105,570 | 1,472 | 1,025 | 3,875 | 8,559 |
| 250 | 109,022 | 2,347 | 1,079 | 6,583 | 20,517 |

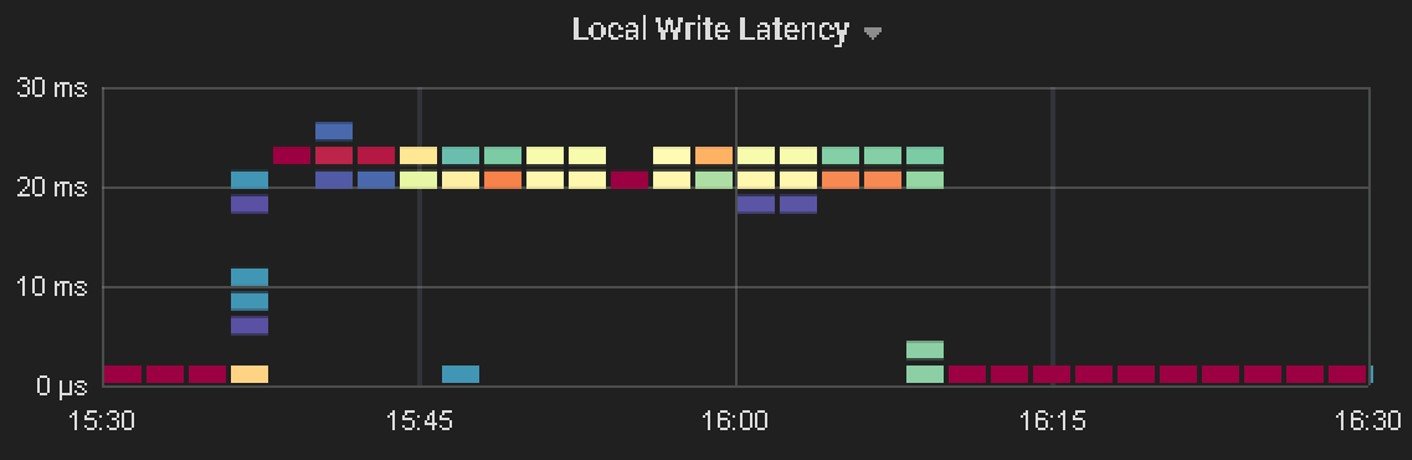

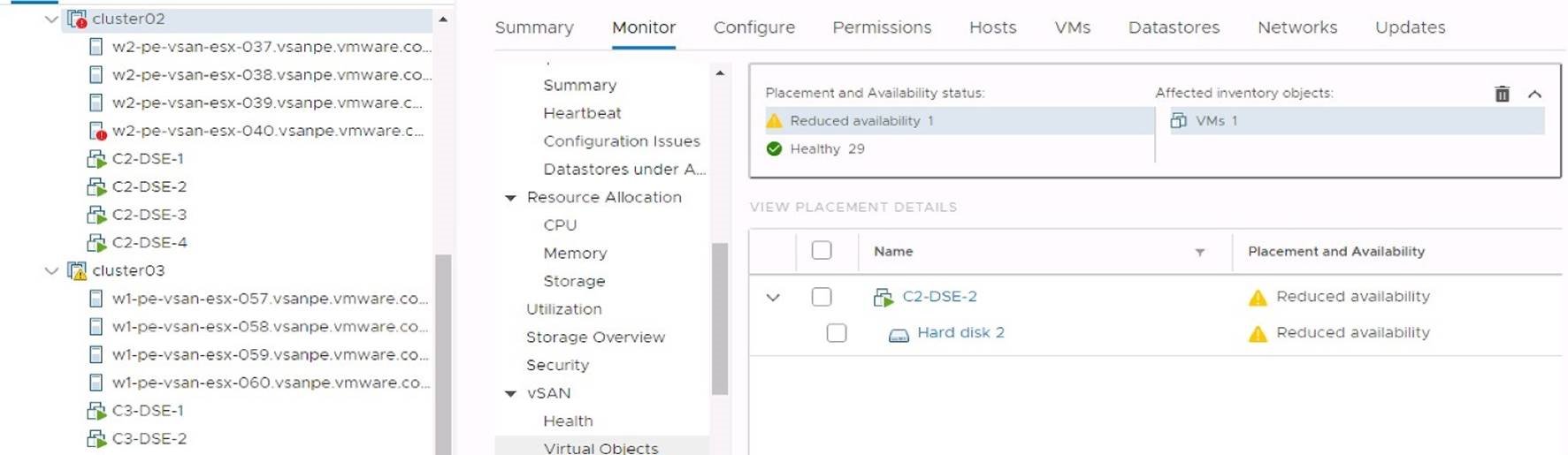

The local latency in the metrics exported by DataStax Enterprise shows that local write latency was less than 30 ms and local read latency was less than 200 ms.
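The trade-off between throughput and tail latency in Table 6 can be quantified with a short calculation. The sketch below copies the op rate and 99th percentile latency values from Table 6 and computes the relative change between consecutive thread counts; the function and variable names are illustrative only.

```python
# Values copied from Table 6 (90% write / 10% read); latencies in microseconds.
table6 = [
    # (threads, op rate in op/s, P99 latency in us)
    (150, 90_703, 6_552),
    (200, 105_570, 8_559),
    (250, 109_022, 20_517),
]

def step_changes(rows):
    """Yield (from_threads, to_threads, throughput gain %, P99 increase %)."""
    for (t0, ops0, p0), (t1, ops1, p1) in zip(rows, rows[1:]):
        yield t0, t1, 100 * (ops1 - ops0) / ops0, 100 * (p1 - p0) / p0

changes = list(step_changes(table6))
for t0, t1, gain, p99 in changes:
    print(f"{t0} -> {t1} threads: throughput {gain:+.1f}%, P99 {p99:+.1f}%")
```

On these figures, going from 150 to 200 threads adds roughly 16% throughput, while going from 200 to 250 threads adds only about 3% and more than doubles the P99 latency, which is consistent with the cluster approaching CPU saturation at thread=250.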

50% WRITE AND 50% READ PERFORMANCE

We took a snapshot of the preloaded base data set and loaded the snapshot before each test. We initiated the test from a client VM.

We started with thread=100, and then adjusted the thread count to 200 to compare the IOPS and latency.

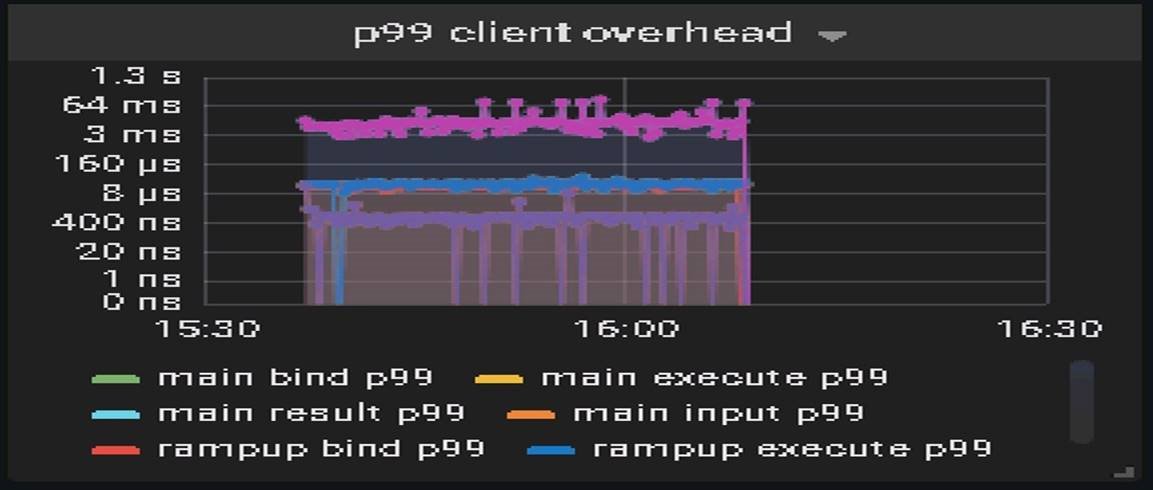

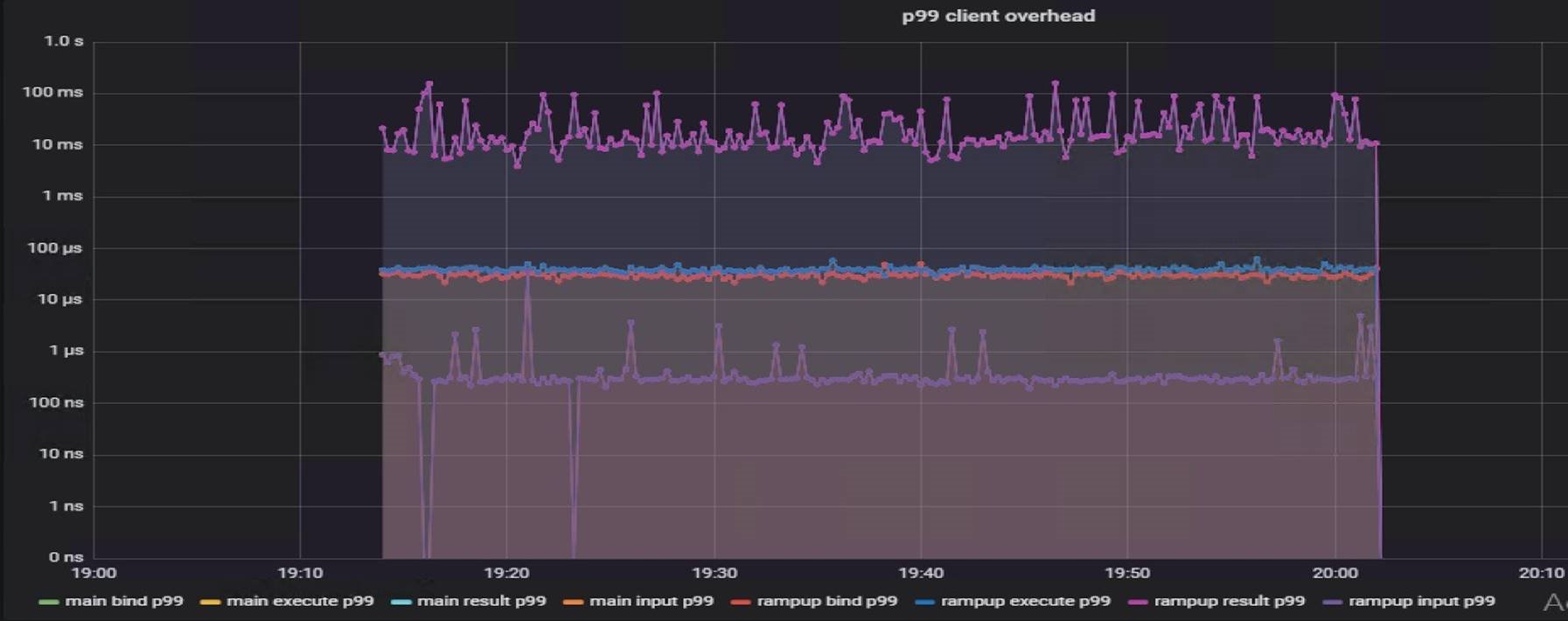

From the Grafana view, the IOPS and latency were consistent during the test.

FIGURE 6. IOPS for 50%write 50%read Workload

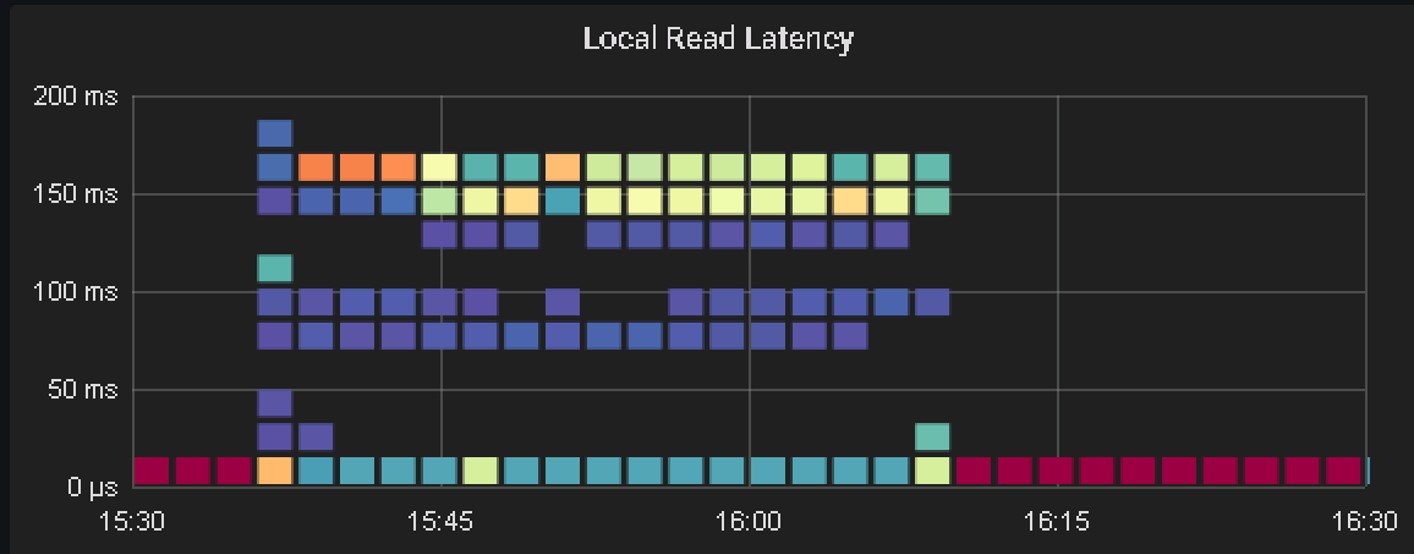

FIGURE 7. P99 Client Latency for 50%write 50%read Workload

The thread=200 test achieved higher IOPS, as shown in Table 7, but CPU usage was about 80%.

TABLE 7. 50% Write and 50% Read Op Rate and Latencies

| THREAD | OP RATE (op/s) | LATENCY MEAN (us) | LATENCY MEDIAN (us) | LATENCY 95TH PERCENTILE (us) | LATENCY 99TH PERCENTILE (us) |
|---|---|---|---|---|---|
| 150 | 71,570 | 1,385 | 2,003 | 4,335 | 8,733 |
| 200 | 76,644 | 2,432 | 1,696 | 5,932 | 9,977 |

Resiliency and Availability

These scenarios are designed to showcase vSAN’s storage-layer resiliency features combined with DataStax Enterprise’s peer-to-peer design, which together meet the data availability requirements of even the most demanding applications and maintain continuity and integrity during component failures with minimal performance impact. We chose thread=150 for the resiliency and availability tests because the average CPU usage was below 80%.

Capacity Disk Failure

A physical capacity disk failure in a vSAN datastore causes the vSAN objects residing on that disk to enter a degraded state. With the storage policy set to PFTT=1, the objects still survive and serve I/O. Storage-layer resiliency handles this failure, so from the DataStax Enterprise VMs’ perspective there is no interruption of service.

We simulated a physical disk failure by injecting a disk failure into a capacity SSD drive.

The following procedure was used:

- Run NoSQLBench performance testing with thread=150.

- When the workload is in a steady state, choose a capacity disk, record its NAA ID, and verify that there are vSAN components on it. Then inject a permanent disk failure into that disk on the selected ESXi host.

- After 15 minutes (before the 1-hour timeout, which means that no rebuilding activity occurs during this test), put the disk drive back in the host, rescan the host for new disks to make sure the NAA ID is back, and then clear any hot unplug flags set previously by using the -c option of the vsanDiskFaultInjection script. Collect and measure the performance before and after the single disk failure.
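The last step, measuring performance before and after the failure, can be sketched as a small helper that splits a series of op rate samples into the periods before, during, and after the fault window and averages each one. This is only an illustration of the comparison: the function name is hypothetical, and the sample values are placeholders, not measured results from this test.

```python
from statistics import mean

def phase_means(samples, inject_s, clear_s):
    """Average op rate before, during, and after a fault window.

    samples: iterable of (seconds_since_start, ops_per_second)
    inject_s: time the disk failure was injected
    clear_s: time the failure was cleared
    """
    phases = {"before": [], "during": [], "after": []}
    for t, ops in samples:
        if t < inject_s:
            phases["before"].append(ops)
        elif t < clear_s:
            phases["during"].append(ops)
        else:
            phases["after"].append(ops)
    return {name: mean(vals) if vals else None for name, vals in phases.items()}

# Placeholder samples for illustration only; these are NOT measured results.
samples = [(0, 100), (60, 100), (120, 90), (180, 90), (240, 100), (300, 100)]
result = phase_means(samples, inject_s=120, clear_s=240)
print(result)
```

In the procedure above, the fault window would be the 15 minutes between injecting and clearing the disk failure, with the samples exported from the benchmark metrics.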

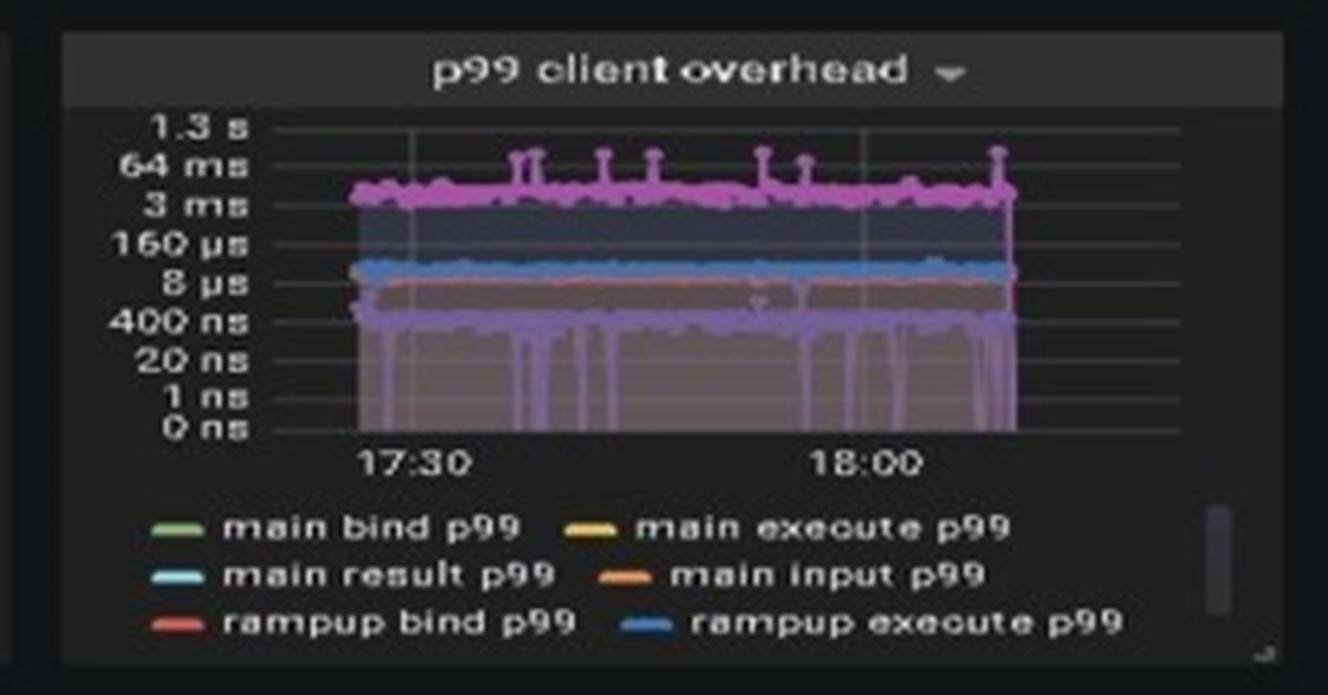

VALIDATION AND RESULT

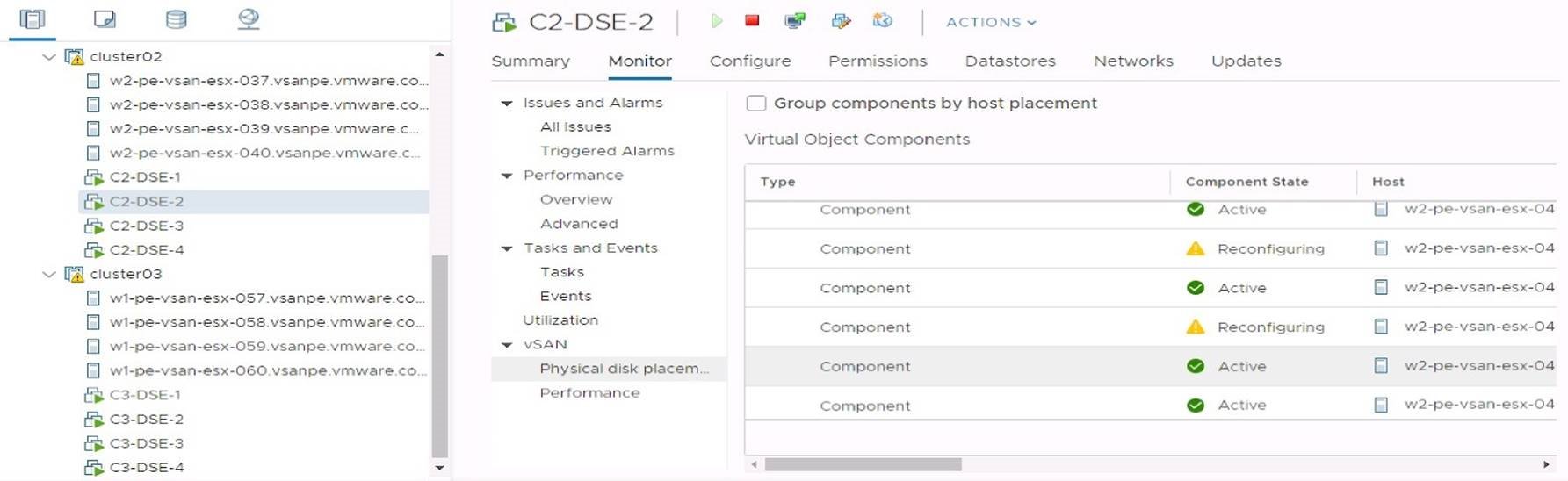

In the validation, we selected a capacity disk on host w2-pe-vsan-esx-040.vsanpe.vmware.com. After we injected a permanent disk error, the Monitor tab for vSAN Virtual Objects showed one disk with reduced availability, but the VMs were still running normally.

FIGURE 8. Impacted VM Disks

No DataStax Enterprise node was impacted, and all 12 nodes were in normal state.

After 15 minutes, the disk error was cleared, and the backend component was reconfigured and soon became active.

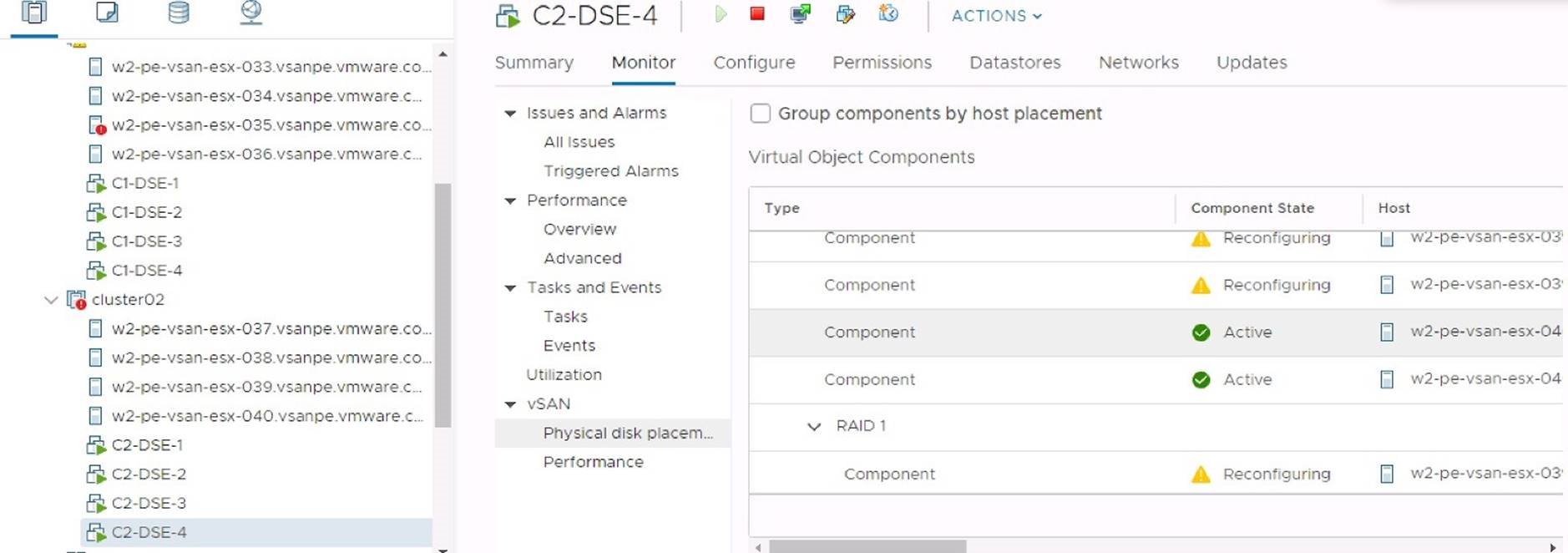

FIGURE 9. Component Reconfiguration

There was minimal impact on the application because all DataStax Enterprise nodes kept running.

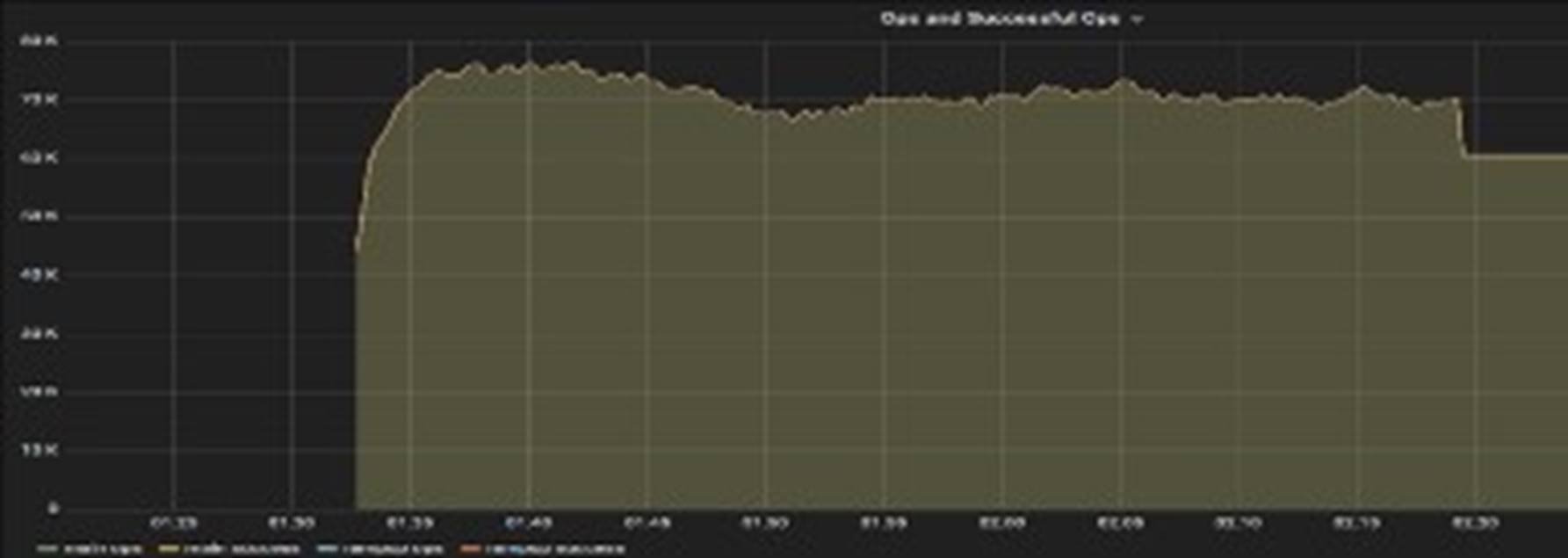

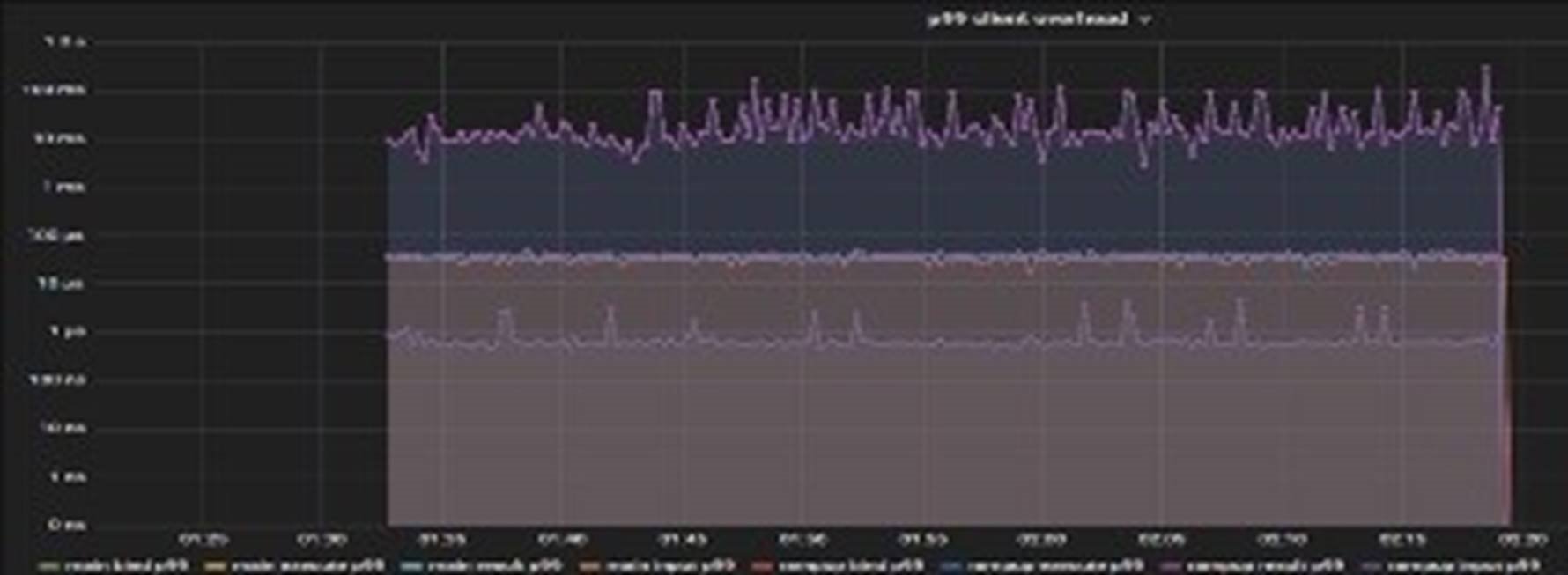

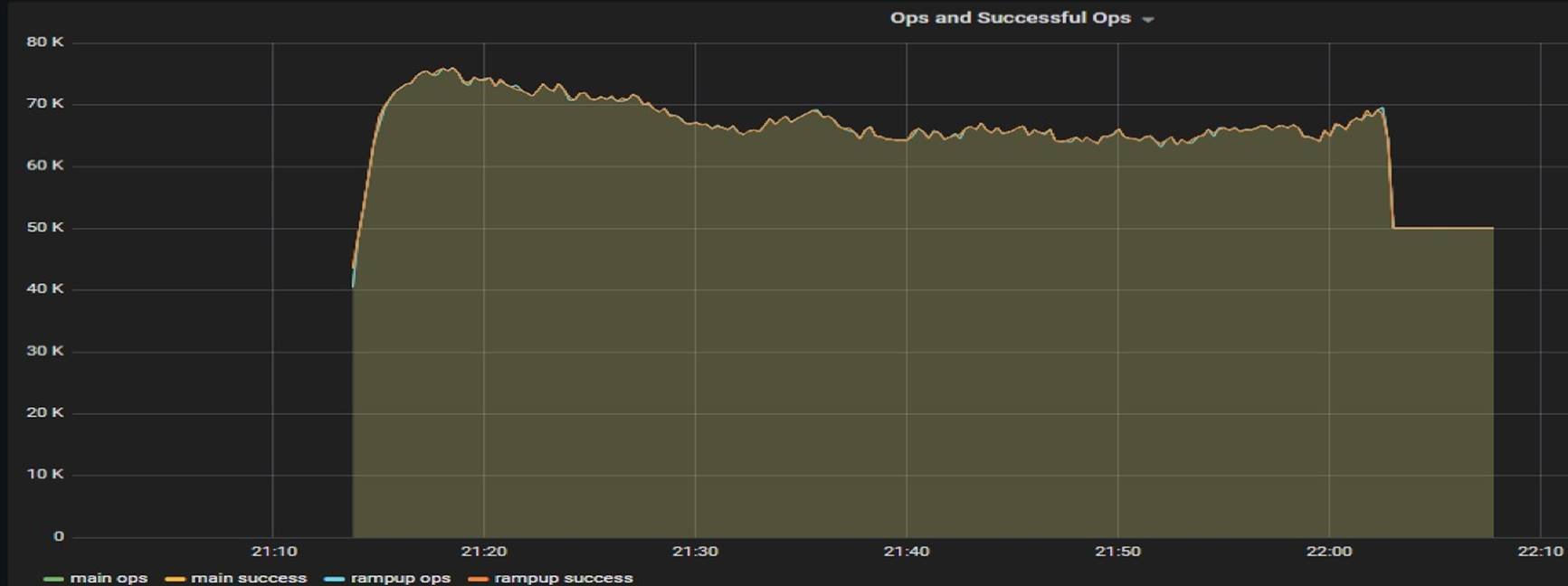

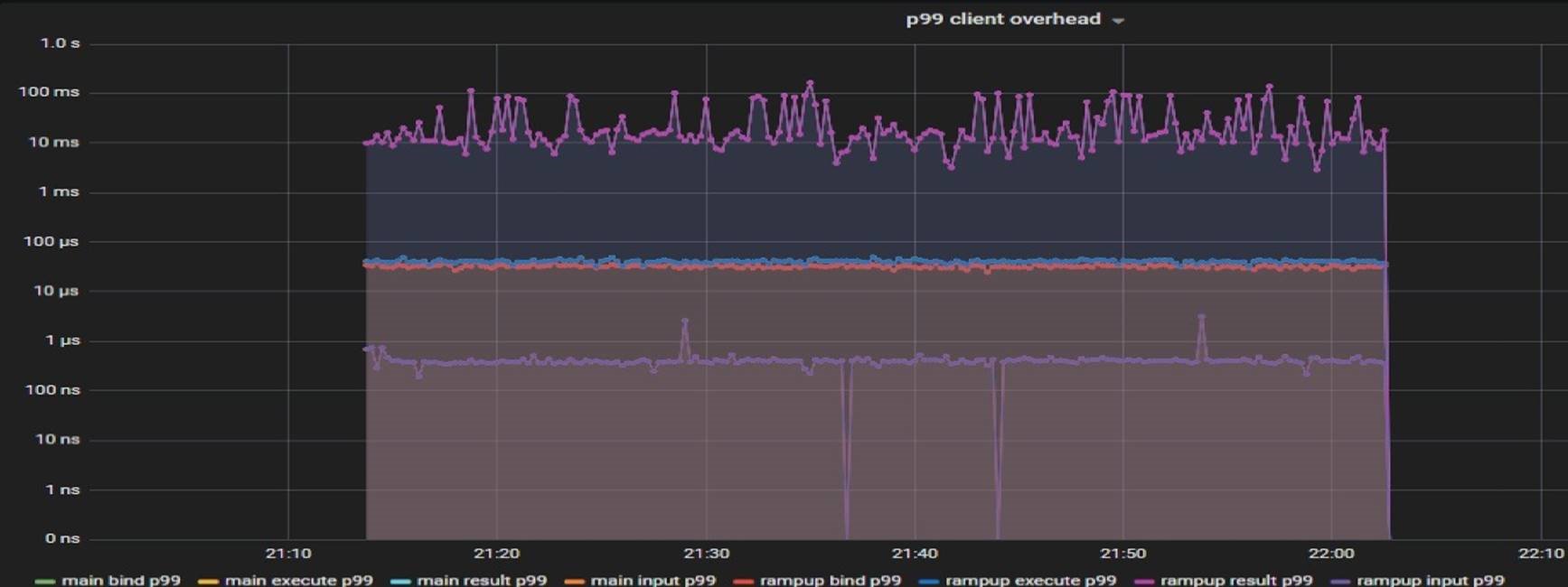

From the DataStax Enterprise Grafana report, the IOPS and latency of the performance testing were consistent during the failure, and the P99 latency level was acceptable.

FIGURE 10. IOPS during Capacity Disk Failure

FIGURE 11. P99 Latency during Capacity Disk Failure

Cache Disk Failure

A physical cache disk failure in a vSAN datastore causes the vSAN objects that depend on that disk to enter a degraded state. With the storage policy set to PFTT=1, the objects still survive and serve I/O. Storage-layer resiliency handles this failure, so from the DataStax Enterprise VMs’ perspective there is no interruption of service.

We simulated a physical disk failure by injecting a disk failure into a cache SSD drive.

The following procedure was used:

- Run NoSQLBench performance testing with thread=150.

- When the workload is in a steady state, choose a cache disk, record its NAA ID, and verify that there are affected vSAN components. Then inject a permanent disk failure into that disk on the selected ESXi host.

- After 15 minutes (before the 1-hour timeout, which means that no rebuilding activity occurs during this test), put the disk drive back in the host, rescan the host for new disks to make sure the NAA ID is back, and then clear any hot unplug flags set previously by using the -c option of the vsanDiskFaultInjection script. Collect and measure the performance before and after the single disk failure.

VALIDATION AND RESULT

In the validation, we selected a cache disk on host w2-pe-vsan-esx-040.vsanpe.vmware.com. After we injected a permanent disk error, the Monitor tab for vSAN Virtual Objects showed 7 virtual objects with reduced availability, but the VMs were still running normally.

FIGURE 12. Affected VM Disks

After bringing back the cache disk, vSAN backend reconfigured the affected components.

FIGURE 13. Component Reconfiguration

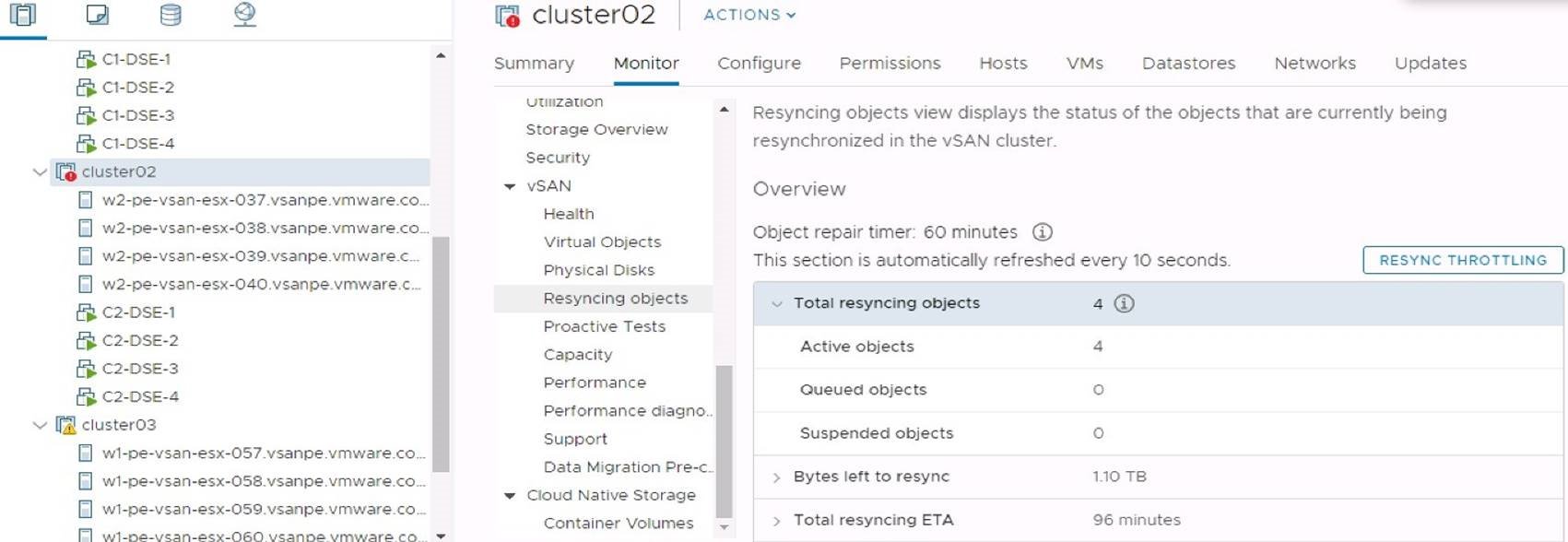

From the vSAN Resyncing Objects page, the resyncing objects and bytes to resync are displayed.

FIGURE 14. Resyncing Objects

There was very limited impact on the application because all DataStax Enterprise nodes kept running.

From the DataStax Enterprise Grafana report, the IOPS and latency of the performance testing were consistent during the failure, and the P99 latency level was acceptable.

FIGURE 15. IOPS during cache disk failure

FIGURE 16. P99 Client latency during cache disk failure

Host Failure with vSphere HA and DRS

In this scenario, we validated that DataStax Enterprise application-level availability together with vSphere HA speeds up the failover process. A physical host failure powers off all the running VMs residing on that host. In our validation, the DataStax Enterprise cluster lost one node, but the DataStax Enterprise cluster service was not interrupted and the throughput impact was limited. When a host fails, vSphere HA restarts the affected VMs on other hosts, which significantly speeds up the failover process. When the affected host is back, DRS rebalances VMs to this host. If DRS is not enabled, you can initiate vMotion manually when necessary.

Note: Set the migration threshold relatively conservatively; otherwise, DRS will trigger unnecessary vMotion operations.

We simulated a host failure by rebooting a physical ESXi host. The procedure was:

- Run NoSQLBench performance testing with thread=150.

- When the workload is in a steady state, select a host to reboot.

- Collect and measure the performance before and after the host failure.

VALIDATION AND RESULT

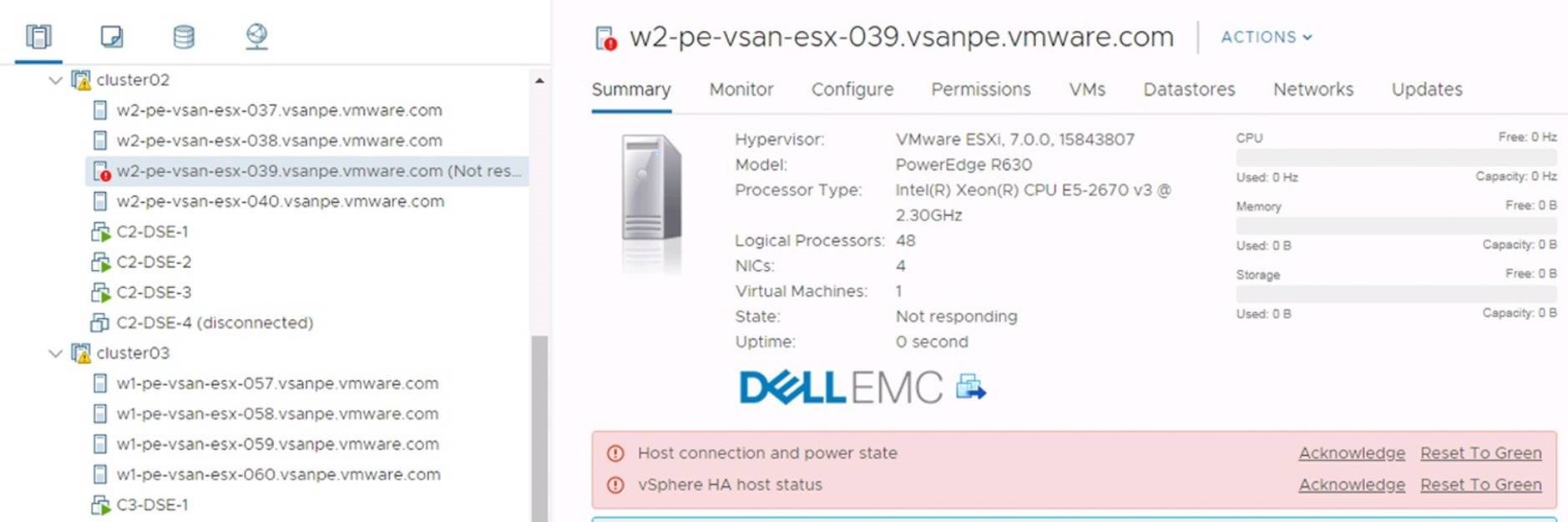

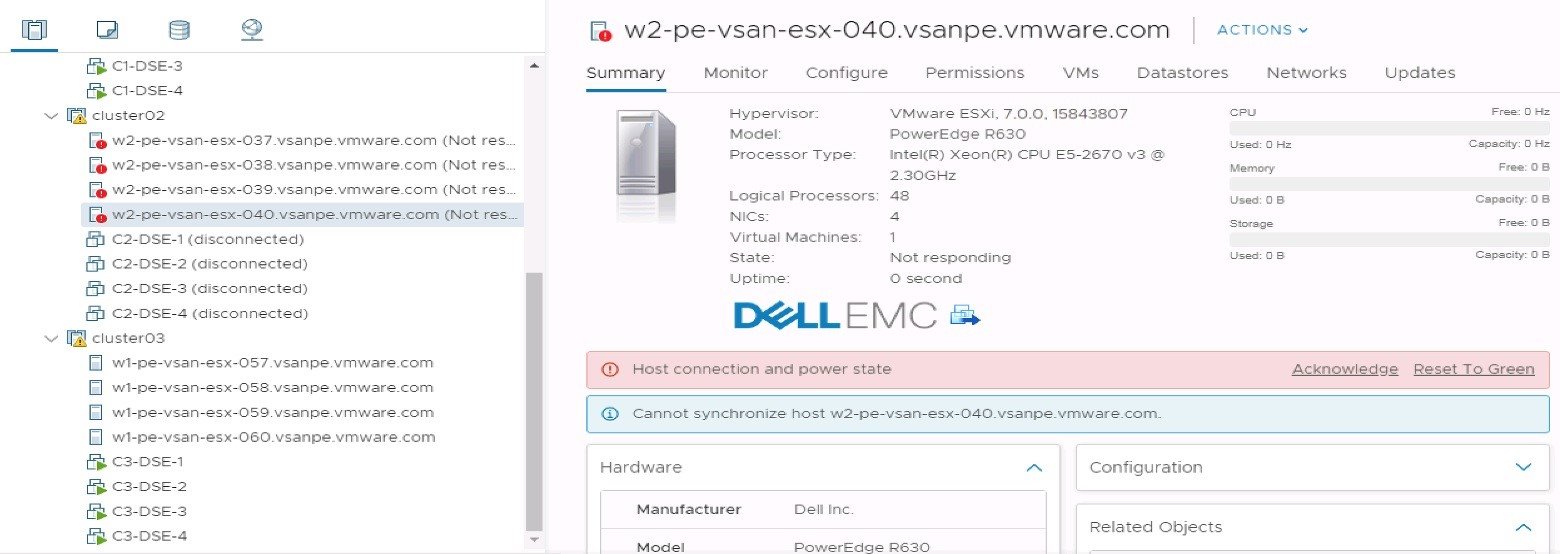

After we rebooted the host w2-pe-vsan-esx-039.vsanpe.vmware.com, the web client UI showed the host status as not responding, and the DataStax Enterprise VM “c2-dse-4” was disconnected.

FIGURE 17. Host failure

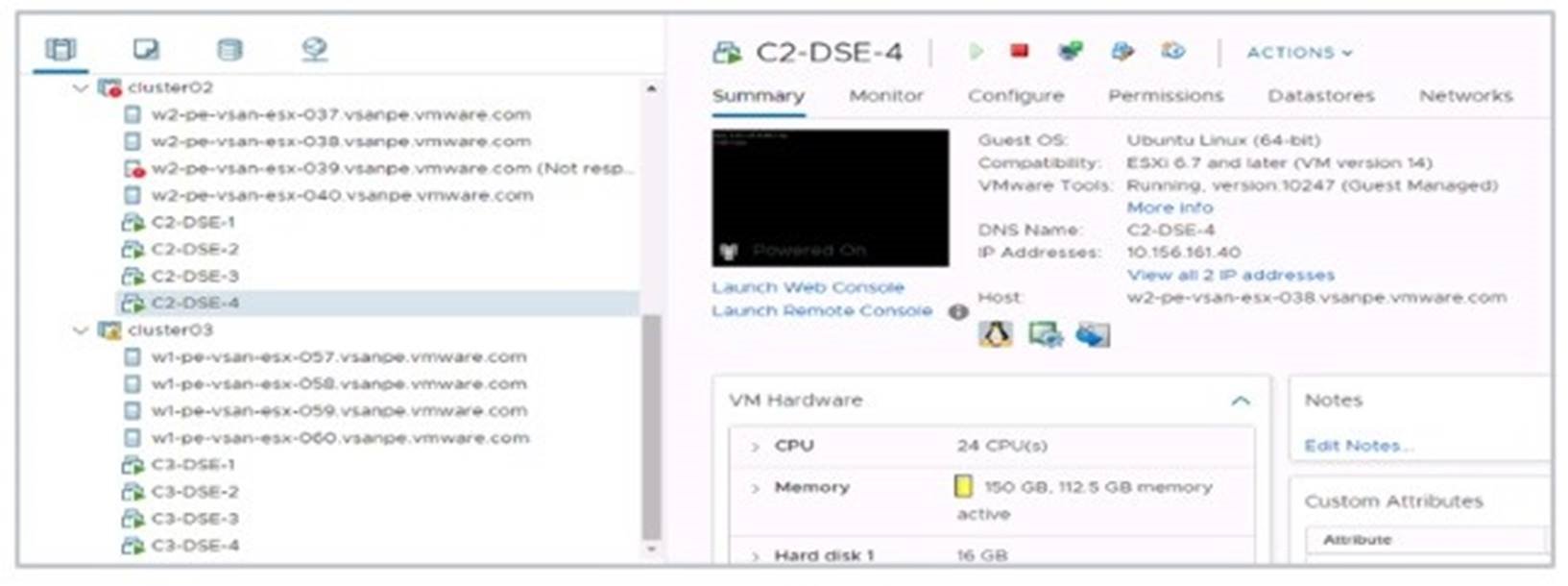

After 1-2 minutes, the DataStax Enterprise VM “c2-dse-4” was restarted by vSphere HA on another node.

FIGURE 18. VM restarted on another host by HA

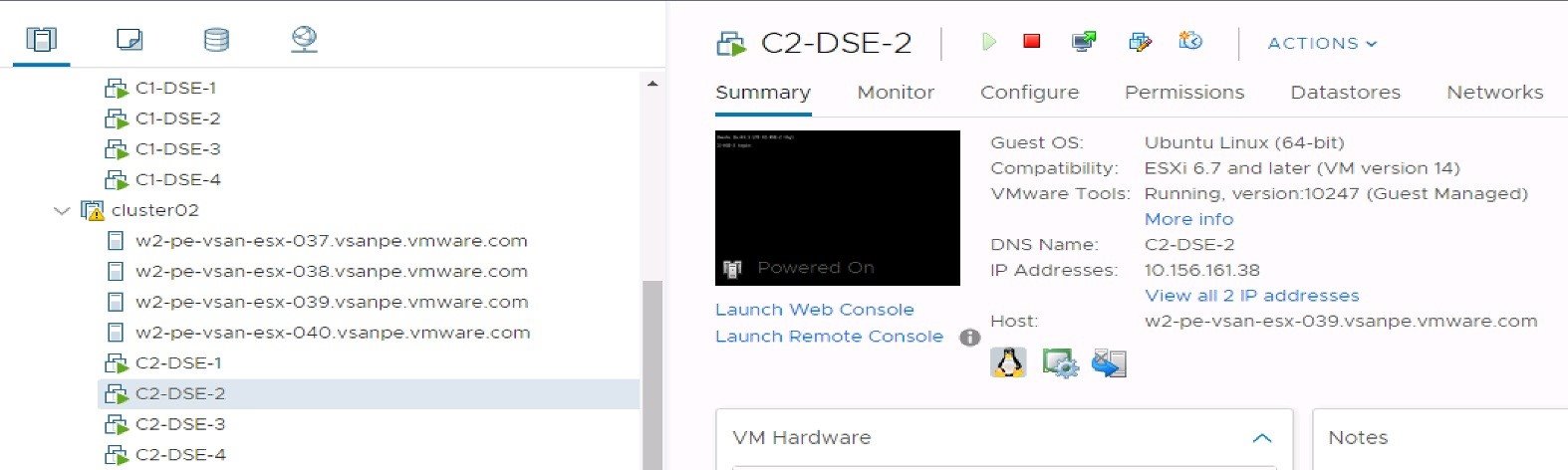

About 10 minutes later, host w2-pe-vsan-esx-039 was back online. vSphere DRS then triggered a vMotion of c2-dse-2, and the VM was migrated to this host.

FIGURE 19. DRS Triggers vMotion

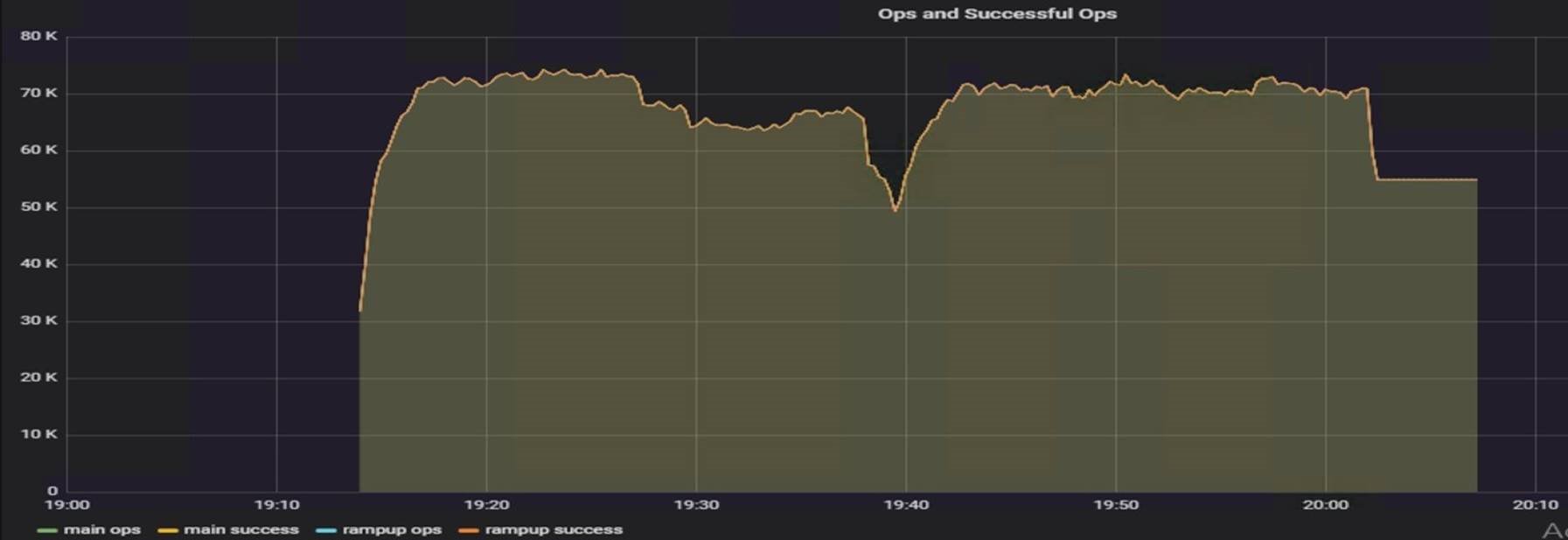

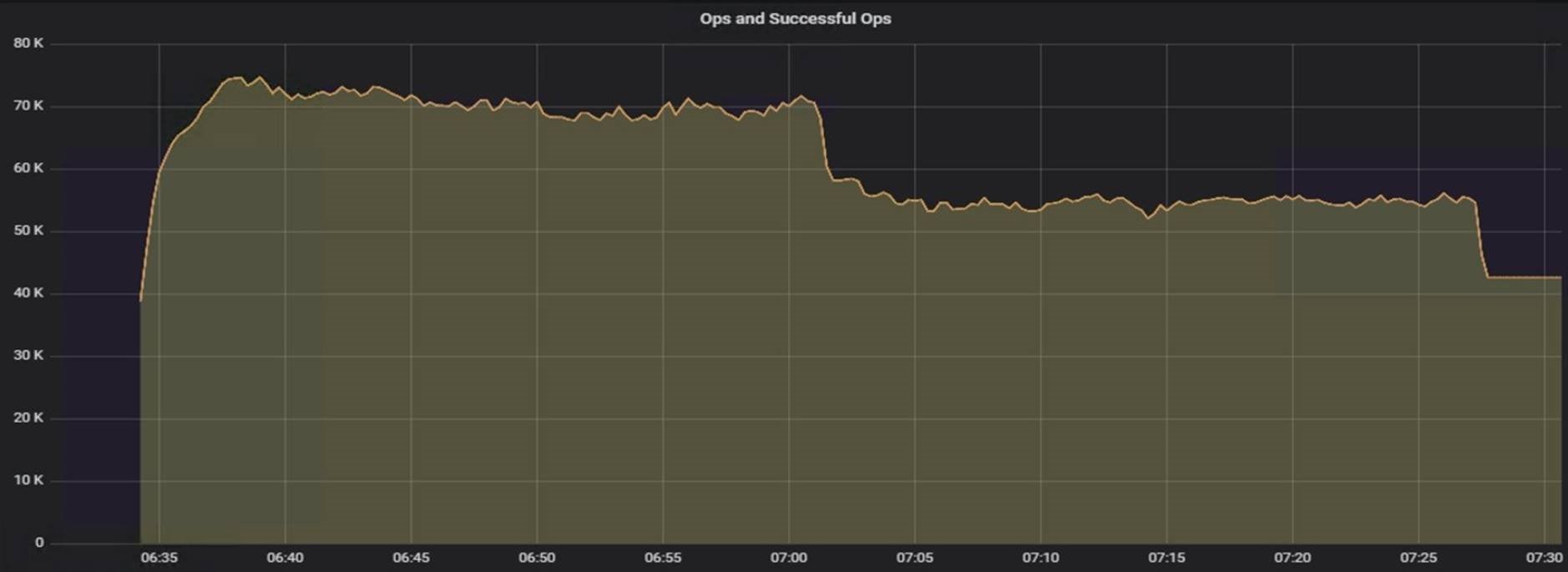

From the DataStax Enterprise Grafana report, throughput was degraded due to the loss of one DataStax Enterprise node, as expected, but service was not interrupted. HA restarted the affected DataStax Enterprise node in 1-2 minutes, and the workload returned to normal in 2 minutes. The DRS-triggered VM migration caused about 1 minute of throughput degradation, after which throughput returned to its pre-failure level. P99 latency was consistent during the testing.

FIGURE 20. IOPS during Host Failure

FIGURE 21. P99 Client Latency during Host Failure

Rack Failure

In this scenario, we validated the DataStax Enterprise application-level availability with rack awareness. With DataStax Enterprise’s rack awareness, each replica was stored in a different rack in case one rack went down. In our validation, we simulated a rack failure by taking down one vSAN cluster. Because we used a replication factor of 3 for the testing keyspace, the DataStax Enterprise cluster still had two replicas, so the DataStax Enterprise service was not interrupted.
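The reasoning behind this result can be sketched with a short calculation. The example below assumes that requests use a QUORUM consistency level, which this paper does not state, and it uses illustrative rack names; it only shows why losing one of three racks still leaves enough replicas when each replica sits in a different rack.

```python
def quorum(replication_factor):
    """Replicas that must respond for a QUORUM read or write."""
    return replication_factor // 2 + 1

def live_replicas(replica_racks, failed_racks):
    """Count replicas whose rack is still up."""
    return sum(1 for rack in replica_racks if rack not in failed_racks)

# Rack-aware placement with a replication factor of 3 over three racks:
# one replica per rack. Rack names are illustrative, not from the test bed.
replica_racks = ["rack1", "rack2", "rack3"]
rf = len(replica_racks)

alive_one_down = live_replicas(replica_racks, failed_racks={"rack2"})
alive_two_down = live_replicas(replica_racks, failed_racks={"rack1", "rack2"})

print(f"quorum needs {quorum(rf)} of {rf} replicas")
print(f"one rack down:  {alive_one_down} alive, quorum met: {alive_one_down >= quorum(rf)}")
print(f"two racks down: {alive_two_down} alive, quorum met: {alive_two_down >= quorum(rf)}")
```

Under these assumptions, a single rack failure leaves two of three replicas, which still satisfies a quorum of two, whereas losing two racks would not.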

We simulated a rack failure by powering off four physical ESXi hosts from ILO. The procedures were:

- Run NoSQLBench performance testing with thread=150.

- When the workload is in a steady state, power off 4 hosts in the same cluster.

- Collect and measure the performance before and after the rack failure.

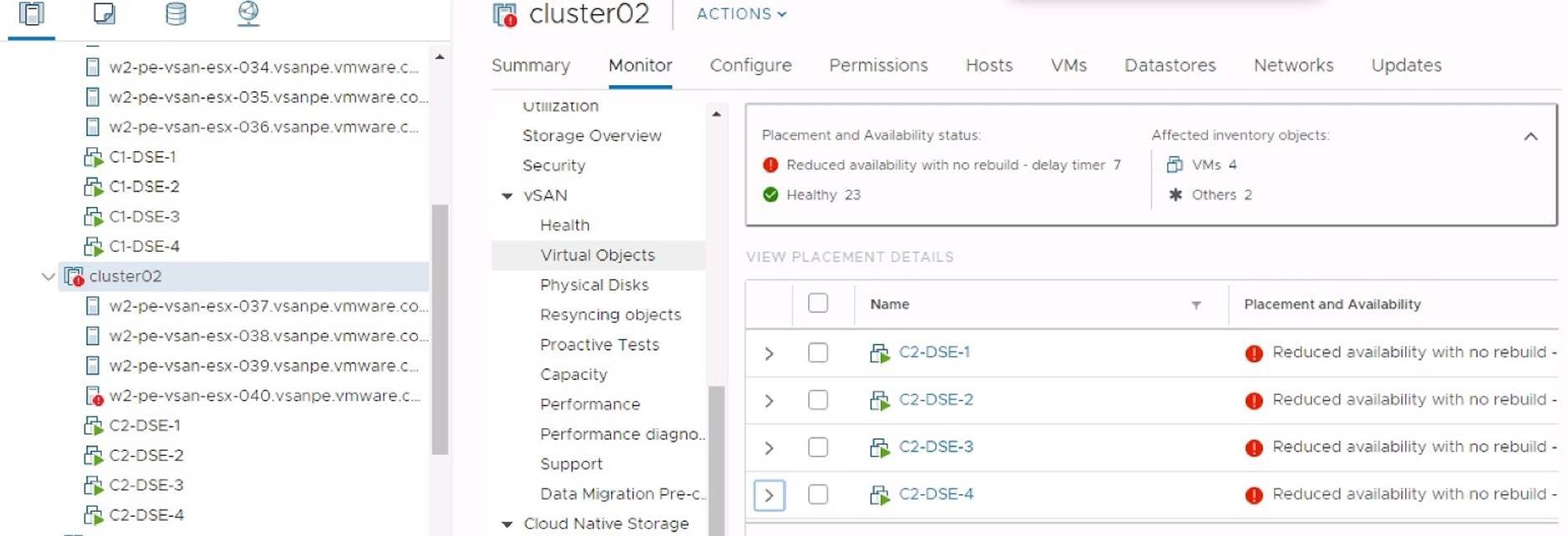

VALIDATION AND RESULT

After we powered off the hosts w2-pe-vsan-esx-037.vsanpe.vmware.com, w2-pe-vsan-esx-038.vsanpe.vmware.com, w2-pe-vsan-esx-039.vsanpe.vmware.com, and w2-pe-vsan-esx-040.vsanpe.vmware.com, the web client UI showed the host status as not responding, and the DataStax Enterprise VMs “c2-dse-1”, “c2-dse-2”, “c2-dse-3”, and “c2-dse-4” were disconnected.

FIGURE 22. Rack failure

From the DataStax Enterprise side, 4 nodes were impacted (marked as down).

The DataStax Enterprise service was not affected because the DataStax Enterprise keyspace still had two replicas. In the Grafana throughput graph, IOPS decreased as expected because four DataStax Enterprise nodes were down. Latency was consistent during the testing.

FIGURE 23. IOPS during Rack Down

FIGURE 24. Rack Failure

Best Practices

When deploying DataStax Enterprise on VMware Cloud Foundation, consider the following best practices:

- When configuring the virtual CPU and memory for DataStax Enterprise VMs, choose CPU and memory sizes that best suit the users’ requirements. The aggregated vCPU cores and memory of the VMs should not exceed the physical resources, to avoid contention.

- When configuring the network, follow the NSX Data Center for vSphere design best practices for the workload domain; see the VMware NSX for vSphere (NSX) Network Virtualization Design Guide.

- Set the DRS migration threshold to conservative to allow only essential VM movements. Alternatively, set the DRS automation level to partially automated or manual to allow only user-initiated events. While availability is not impacted by vMotion operations, they can result in a momentary drop in performance.

- Follow the VMware NSX-T Reference Design for configuring NSX-T on VMware Cloud Foundation.

- Schedule VM backups and snapshots during off-peak times.

- Follow the DataStax recommended production settings when deploying DSE on VMware Cloud Foundation.
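The sizing guidance in the first best practice can be checked with simple arithmetic. The sketch below uses the host specification from Table 1 and the DataStax Enterprise VM size from Table 3 to test how many such VMs fit on one host without exceeding its physical cores or memory. It deliberately ignores hypervisor and vSAN overhead and hyper-threading, so treat it as a rough upper bound; the function name is illustrative.

```python
HOST_CORES = 2 * 12   # Table 1: 2 sockets x 12 cores
HOST_RAM_GB = 256     # Table 1
VM_VCPU = 8           # Table 3
VM_RAM_GB = 54        # Table 3

def fits_on_host(vm_count, host_cores=HOST_CORES, host_ram_gb=HOST_RAM_GB):
    """True if vm_count DSE VMs fit without exceeding physical cores or RAM.

    Simplification: hypervisor and vSAN overhead are not accounted for.
    """
    vcpu_ok = vm_count * VM_VCPU <= host_cores
    ram_ok = vm_count * VM_RAM_GB <= host_ram_gb
    return vcpu_ok and ram_ok

for n in (1, 2, 3, 4):
    print(f"{n} DSE VM(s) per host: fits={fits_on_host(n)}")
```

With one DataStax Enterprise VM per host in normal operation, this leaves headroom for the host failure case, in which vSphere HA restarts a VM on a surviving host that then runs two DataStax Enterprise VMs.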

Conclusion

Overall, deploying, running, and managing DataStax Enterprise clusters on VMware Cloud Foundation provides predictable performance and high availability. VMware Cloud Foundation is an ideal cloud platform for DataStax Enterprise applications, powered by VMware vSphere, vSAN, NSX Data Center, and vRealize Suite. This platform allows IT administrators to enable fast cloud deployment, achieve better scalability for performance, ensure infrastructure and application security, monitor data center operational health, and lower TCO.

About the Author

Ting Yin, Senior Solutions Architect in the Solutions Architecture team of the Cloud Platform Business Unit, wrote the original version of this paper.