Exposing Hardware Accelerators using Assignable Hardware

Overview

A wide variety of modern workloads benefit greatly from hardware accelerators that offload specific functions, save CPU cycles, and improve overall performance. Consider the telco industry, for example, where Network Function Virtualization (NFV) platforms use NICs and FPGAs. Another example is customers that use GPUs for graphics acceleration in their Virtual Desktop Infrastructure (VDI) deployments. The AI/ML space is a third example, where applications use GPUs to offload computations. To use a hardware accelerator (typically a PCIe device) with vSphere, the device needs to be exposed to the guest OS running inside the virtual machine.

In vSphere versions prior to vSphere 7, a virtual machine specifies a PCIe passthrough device by its hardware address. This identifier points to a specific physical device at a specific bus location on that ESXi host, which ties the virtual machine to that particular host. The virtual machine cannot easily be migrated to another ESXi host, even one with an identical PCIe device. This can impact the availability of the application using the PCIe device in the event of a host outage: features like vSphere DRS and HA are not able to place that virtual machine on another, surviving host in the cluster. Moving that virtual machine to another host requires manual provisioning and configuration.

We do not want to compromise on application availability and ease of deployment. Assignable Hardware is a new feature in vSphere 7 that overcomes these challenges.

Introducing Assignable Hardware

Assignable Hardware in vSphere 7 provides a flexible mechanism to assign hardware accelerators to workloads. This mechanism identifies the hardware accelerator by the attributes of the device rather than by its hardware address, which adds a level of abstraction over the PCIe device. Assignable Hardware implements compatibility checks to verify that ESXi hosts have assignable devices available to meet the needs of the virtual machine.

It integrates with vSphere Distributed Resource Scheduler (DRS) for the initial placement of workloads that are configured with a hardware accelerator. Assignable Hardware also brings back the ability of vSphere HA to recover hardware-accelerator-enabled workloads, as long as assignable devices are available in the cluster. This greatly improves workload availability.
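The difference between binding by hardware address and matching by device attributes can be illustrated with a minimal Python sketch. This is not VMware's implementation: it assumes a simplified, hypothetical model in which each host reports its passthrough devices as a bus address plus PCI vendor and device IDs, and a virtual machine's request records only the IDs. The names `Device`, `Host`, `compatible_hosts`, and `place` are illustrative, and the "first fit" host choice stands in for the far richer logic DRS actually uses.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Optional


@dataclass(frozen=True)
class Device:
    # Hypothetical model of a passthrough-capable PCIe device.
    address: str    # bus location; only meaningful on a single host
    vendor_id: int  # PCI vendor ID
    device_id: int  # PCI device ID


@dataclass
class Host:
    name: str
    devices: list[Device]
    in_use: set[str] = field(default_factory=set)  # addresses already assigned
    online: bool = True

    def free_devices(self) -> list[Device]:
        return [d for d in self.devices if d.address not in self.in_use]


def matches(dev: Device, vendor_id: int, device_id: int) -> bool:
    # Attribute-based match: the bus address is deliberately ignored.
    return dev.vendor_id == vendor_id and dev.device_id == device_id


def compatible_hosts(hosts: list[Host], vendor_id: int, device_id: int) -> list[Host]:
    # Compatibility check: online hosts with at least one free matching device.
    return [
        h for h in hosts
        if h.online and any(matches(d, vendor_id, device_id) for d in h.free_devices())
    ]


def place(hosts: list[Host], vendor_id: int, device_id: int) -> Optional[tuple[str, str]]:
    # First-fit placement: reserve a matching device on the first compatible host.
    for host in compatible_hosts(hosts, vendor_id, device_id):
        for dev in host.free_devices():
            if matches(dev, vendor_id, device_id):
                host.in_use.add(dev.address)
                return host.name, dev.address
    return None


# Same GPU model at different bus addresses on two hosts (IDs are illustrative).
hosts = [
    Host("esxi-01", [Device("0000:3b:00.0", 0x10DE, 0x1EB8)]),
    Host("esxi-02", [Device("0000:af:00.0", 0x10DE, 0x1EB8)]),
]
print(place(hosts, 0x10DE, 0x1EB8))  # ('esxi-01', '0000:3b:00.0')
hosts[0].online = False              # simulate an outage of esxi-01
print(place(hosts, 0x10DE, 0x1EB8))  # ('esxi-02', '0000:af:00.0')
```

Because the request never mentions a bus address, the virtual machine can be restarted on the surviving host, which is the property that an address-based configuration lacks.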

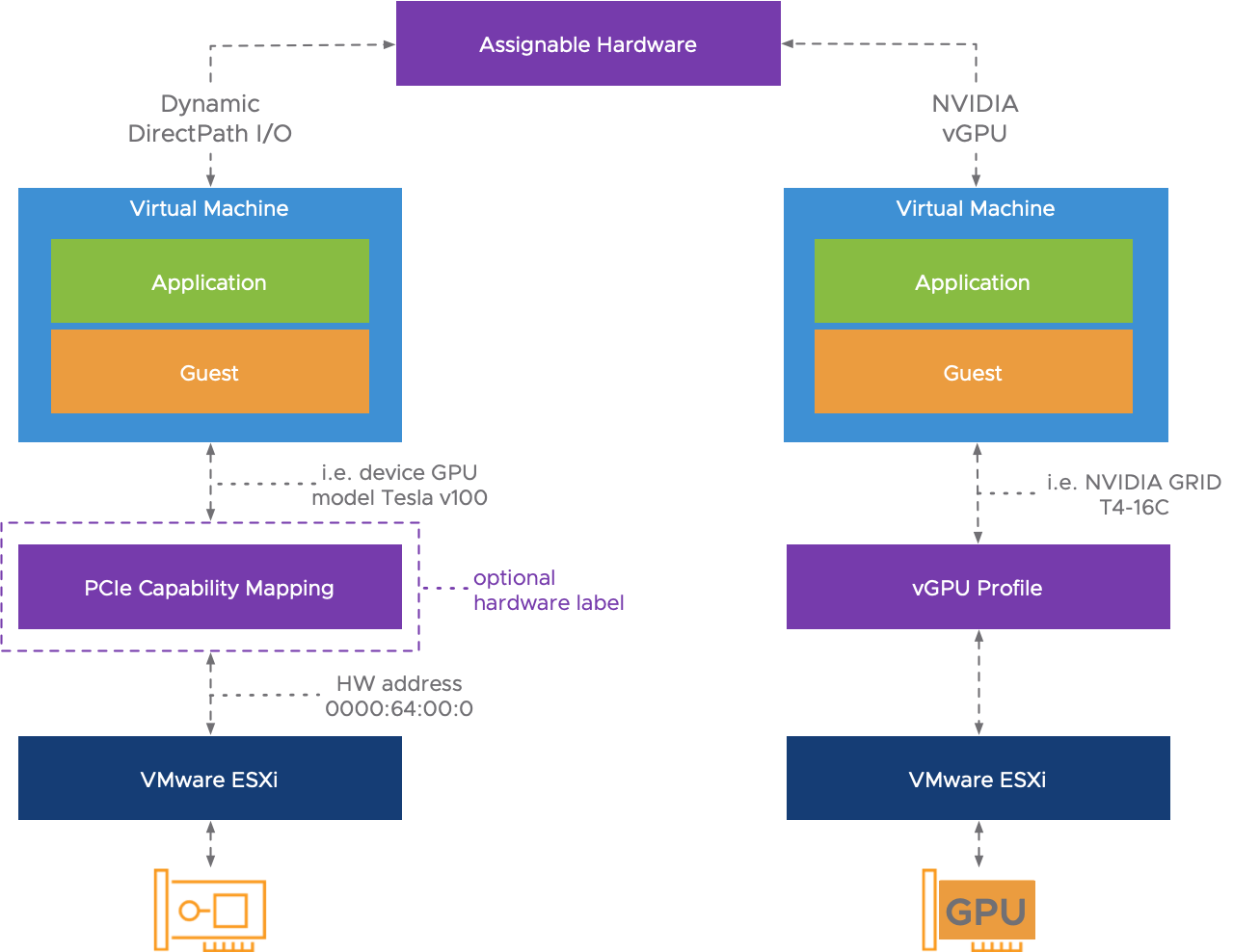

The Assignable Hardware feature has two consumers: the new Dynamic DirectPath I/O and NVIDIA vGPU.

Dynamic DirectPath I/O

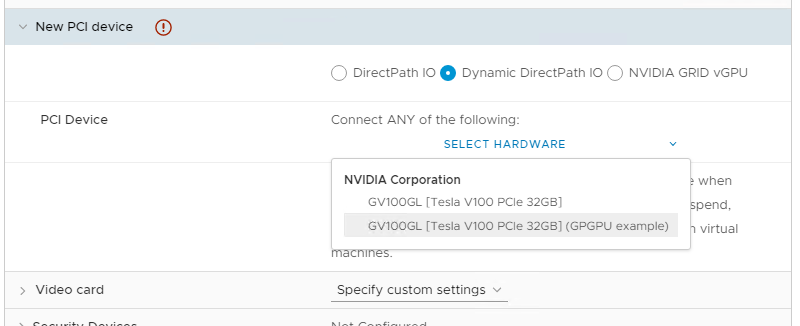

vSphere 7 introduces Dynamic DirectPath I/O as part of the Assignable Hardware framework. When configuring a virtual machine to use a PCIe device, customers are presented with three options:

- DirectPath I/O

- Dynamic DirectPath I/O

- NVIDIA vGPU

DirectPath I/O is the legacy feature that passes through a PCIe device as before, without integration with vSphere DRS and HA. Dynamic DirectPath I/O is the new solution that enables the Assignable Hardware intelligence for passthrough devices. The hardware address of the PCIe device is no longer mapped directly into the virtual machine configuration file (the .vmx file). Instead, the attributes, or capabilities, of the device are exposed to the virtual machine.

When you choose ‘Select Hardware’, all PCIe devices capable of passthrough are listed in the dropdown menu. Once a specific device is selected, Assignable Hardware, together with DRS, finds a suitable host that can satisfy the request for the configured hardware accelerator.

Hardware Labels

Notice the ‘GPGPU example’ custom label in the screenshot above? That is the optional hardware label for PCIe devices when using Dynamic DirectPath I/O. It allows for even more flexibility when assigning hardware accelerators to virtual machines. A PCIe device can be configured with one label. DRS takes care of initial placement for a virtual machine configured with a Dynamic DirectPath I/O device. Assignable Hardware finds a suitable host with a device identical to the one configured, or a host with a device that carries an identical hardware label. This could mean that a different type of PCIe device is backing that hardware label.

Customers might have heterogeneous host configurations, where ESXi hosts in a cluster are equipped with different types of GPUs. For example, using custom hardware labels, we could use either an NVIDIA T4 GPU or an RTX6000 GPU. Both are based on the same ‘Turing’ GPU architecture, so we could potentially apply a ‘Turing GPU’ hardware label to both. Using a hardware label provides the flexibility to use either GPU model for the virtual machine, as long as the label matches. We recommend adding devices to a specific label only if they provide similar hardware capabilities.
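The matching rule described above (an identical device, or a device with an identical hardware label) can be sketched in a few lines of Python. This is a hypothetical model, not the vSphere implementation: a device model name such as "T4" stands in for the real device attributes, each device carries at most one label, and device availability is ignored for brevity. The names `PassthroughDevice`, `DeviceRequest`, `satisfies`, and `candidate_hosts` are illustrative.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class PassthroughDevice:
    model: str                   # stands in for the device's real attributes
    label: Optional[str] = None  # at most one custom hardware label per device


@dataclass(frozen=True)
class DeviceRequest:
    model: str                   # device selected when configuring the VM
    label: Optional[str] = None  # optional hardware label


def satisfies(dev: PassthroughDevice, req: DeviceRequest) -> bool:
    # An identical device always matches.
    if dev.model == req.model:
        return True
    # Otherwise, fall back to an identical (non-empty) hardware label.
    return req.label is not None and dev.label == req.label


def candidate_hosts(inventory: dict[str, list[PassthroughDevice]], req: DeviceRequest) -> list[str]:
    # Hosts with at least one device that satisfies the request, sorted by name.
    return sorted(h for h, devs in inventory.items() if any(satisfies(d, req) for d in devs))


inventory = {
    "esxi-01": [PassthroughDevice("T4", label="Turing GPU")],
    "esxi-02": [PassthroughDevice("RTX6000", label="Turing GPU")],
    "esxi-03": [PassthroughDevice("V100")],
}

print(candidate_hosts(inventory, DeviceRequest("T4")))                      # ['esxi-01']
print(candidate_hosts(inventory, DeviceRequest("T4", label="Turing GPU")))  # ['esxi-01', 'esxi-02']
```

Without a label, only the host with an identical T4 qualifies; with the shared ‘Turing GPU’ label, the RTX6000 host becomes a valid target as well, which is why the label should only group devices with similar capabilities.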

How to Configure

Adding a custom hardware label is as easy as opening the Passthrough-enabled PCI Devices options, inserting a label, and configuring a virtual machine with the PCIe device. Keep in mind that VM hardware version 17 is required for Assignable Hardware and Dynamic DirectPath I/O.

NVIDIA vGPU

The other consumer of Assignable Hardware is NVIDIA vGPU. This versatile solution, co-developed with NVIDIA, allows for partial, full, or multiple GPU allocation to workloads. Please review this extensive blog post to learn how NVIDIA vGPU can be integrated on a VMware vSphere platform.

Since vSphere 6.7, the co-developed NVIDIA vGPU solution has allowed for workload portability, including live migration using vMotion as of vSphere 6.7 Update 1. With vSphere 7 and Assignable Hardware, workload placement is improved for vGPU-enabled virtual machines: Assignable Hardware verifies the availability of the vGPU profile selected for the virtual machine.
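A profile availability check of this kind can be sketched in Python under a few loudly stated assumptions; this is not how vSphere or NVIDIA implement it. The sketch assumes that a profile is defined for one physical GPU model, that each physical GPU hosts vGPUs of only one profile at a time, and that capacity is simply the GPU framebuffer divided by the per-vGPU framebuffer of the profile. The profile names and the names `VGPUProfile`, `PhysicalGPU`, `can_host`, and `hosts_for_profile` are illustrative.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class VGPUProfile:
    name: str            # illustrative profile name
    gpu_model: str       # physical GPU model the profile is defined for
    framebuffer_gb: int  # framebuffer assigned to each vGPU of this profile


@dataclass
class PhysicalGPU:
    model: str
    framebuffer_gb: int
    active_profile: Optional[str] = None  # assumption: one profile type per GPU at a time
    active_vgpus: int = 0


def can_host(gpu: PhysicalGPU, profile: VGPUProfile) -> bool:
    if gpu.model != profile.gpu_model:
        return False  # profile not defined for this GPU model
    if gpu.active_profile not in (None, profile.name):
        return False  # GPU already hosts vGPUs of a different profile
    # Enough framebuffer left for one more vGPU of this profile?
    return (gpu.active_vgpus + 1) * profile.framebuffer_gb <= gpu.framebuffer_gb


def hosts_for_profile(cluster: dict[str, list[PhysicalGPU]], profile: VGPUProfile) -> list[str]:
    # Hosts with at least one GPU that can accept another vGPU of this profile.
    return sorted(h for h, gpus in cluster.items() if any(can_host(g, profile) for g in gpus))


t4_4q = VGPUProfile("grid_t4-4q", "T4", 4)
cluster = {
    "esxi-01": [PhysicalGPU("T4", 16, active_profile="grid_t4-4q", active_vgpus=4)],  # full
    "esxi-02": [PhysicalGPU("T4", 16, active_profile="grid_t4-8q", active_vgpus=1)],  # other profile
    "esxi-03": [PhysicalGPU("T4", 16)],                                               # idle
}
print(hosts_for_profile(cluster, t4_4q))  # ['esxi-03']
```

All three hosts have the right GPU model, but only the idle host can accept another vGPU of the requested profile, which is the distinction a placement check needs to make before powering on the virtual machine.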

To Conclude

Assignable Hardware greatly improves how customers can use hardware accelerators. Bringing back vSphere HA and DRS (initial placement) capabilities for virtual machines that are configured with a PCIe device is huge! The following video also explains the Assignable Hardware concept.