Introduction to the vSphere Clustering Service (vCLS)

Overview

The vSphere Clustering Service (vCLS) is a new capability introduced in the vSphere 7 Update 1 release. Its first release provides the foundation for a decoupled and distributed control plane for clustering services in vSphere.

The challenge is that cluster services, like the vSphere Distributed Resource Scheduler (DRS), depend on vCenter Server availability for their configuration and operation. While there are ways to increase vCenter Server availability, such as vSphere High Availability (HA) and vCenter Server High Availability (VCHA), this dependency is not ideal. Also, when thinking about vCenter Server scalability in large on-prem and public clouds, we need a better solution to support clustering services. That’s why vCLS is introduced. In the first release, a subset of DRS capabilities already uses the new vCLS feature.

Basic Architecture

The basic architecture for the vCLS control plane consists of a maximum of 3 virtual machines (VMs), also referred to as system or agent VMs, which are placed on separate hosts in a cluster. These are lightweight agent VMs that form a cluster quorum. On smaller clusters with fewer than 3 hosts, the number of agent VMs is equal to the number of ESXi hosts. The agent VMs are managed by vSphere Cluster Services. Users are not expected to maintain the lifecycle or state of the agent VMs, and they should not be treated like typical workload VMs.
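The sizing rule above can be written down as a one-line calculation. The following is a minimal sketch of that rule only; the function name `expected_agent_vm_count` and the constant `MAX_AGENT_VMS` are made up for illustration and are not part of any vSphere API.

```python
# Upper bound on agent VMs per cluster, as stated in the article.
MAX_AGENT_VMS = 3


def expected_agent_vm_count(host_count: int) -> int:
    """Return how many vCLS agent VMs a cluster with `host_count` hosts should have.

    Hypothetical helper: one agent VM per host, capped at MAX_AGENT_VMS.
    """
    if host_count < 0:
        raise ValueError("host_count must be non-negative")
    return min(host_count, MAX_AGENT_VMS)


if __name__ == "__main__":
    for hosts in (1, 2, 3, 16):
        print(f"{hosts} host(s): {expected_agent_vm_count(hosts)} agent VM(s)")
```

A script that audits clusters could compare this expected count against the number of agent VMs it actually finds, rather than assuming every cluster always has 3.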

Cluster Service Health

The agent VMs that form the cluster quorum are self-correcting. This means that when the agent VMs are not available, vCLS tries to instantiate or power on the VMs automatically.

There are 3 health states for the cluster services:

- Healthy – The vCLS health is green when at least 1 agent VM is running in the cluster. To maintain agent VM availability, a cluster quorum of 3 agent VMs is deployed.

- Degraded – This is a transient state in which at least 1 of the agent VMs is not available, but DRS has not skipped its logic due to the unavailability of agent VMs. The cluster could be in this state when vCLS VMs are being re-deployed or powered on after some impact to the running VMs.

- Unhealthy – The vCLS state is unhealthy when the next run of the DRS logic (workload placement or balancing operation) is skipped because the vCLS control plane is not available (it requires at least 1 agent VM).

Agent VM Resources

The vCLS agent VMs are lightweight, meaning that resource consumption is kept to a minimum. In an existing deployment, vCLS automatically creates a maximum of 3 agent VMs per cluster when vCenter Server is upgraded to vSphere 7 Update 1. In a greenfield scenario, they are created when ESXi hosts are added to a new cluster. If no shared storage is available, the agent VMs are placed on local storage. If a cluster is formed before shared storage is configured on the ESXi hosts, as would be the case when using vSAN, it is strongly recommended to move the vCLS agent VMs to shared storage afterwards.

The agent VMs run a customized Photon OS. The resource specification per agent VM is listed in the following table:

| Property | Value |
| --- | --- |
| Memory | 128 MB |
| Memory reservation | 100 MB |
| Swap Size | 256 MB |
| vCPU | 1 |
| vCPU reservation | 100 MHz |
| Disk | 2 GB |
| Ethernet Adapter | – |
| Guest VMDK Size | ~245 MB |
| Storage Space | ~480 MB |

The 2 GB virtual disk is thin provisioned. Also, there’s no networking involved, so there’s no network adapter configured. The agent VMs are not shown in the Hosts and Clusters overview in the vSphere Client. The VMs and Templates view now contains a new folder, vCLS, that contains all vCLS agent VMs. With multiple clusters, all vCLS agent VMs will show, numbered consecutively.
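To put the per-VM figures from the table into perspective, the sketch below multiplies them out for a whole cluster. It is simple arithmetic on the published numbers; the class `AgentVmSpec` and function `cluster_footprint` are hypothetical names chosen for this example, and the storage figure is the approximate value from the table.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AgentVmSpec:
    """Per-agent-VM figures copied from the resource table in the article."""
    memory_mb: int = 128
    memory_reservation_mb: int = 100
    cpu_reservation_mhz: int = 100
    storage_mb: int = 480  # approximate ("~480 MB" in the table)


def cluster_footprint(agent_vm_count: int, spec: AgentVmSpec = AgentVmSpec()) -> dict:
    """Return the combined footprint of `agent_vm_count` agent VMs."""
    return {
        "memory_mb": agent_vm_count * spec.memory_mb,
        "memory_reservation_mb": agent_vm_count * spec.memory_reservation_mb,
        "cpu_reservation_mhz": agent_vm_count * spec.cpu_reservation_mhz,
        "storage_mb": agent_vm_count * spec.storage_mb,
    }


if __name__ == "__main__":
    # A cluster with the maximum of 3 agent VMs.
    for name, value in cluster_footprint(3).items():
        print(f"{name}: {value}")
```

For a full set of 3 agent VMs this works out to 384 MB of configured memory, 300 MB of memory reservation, 300 MHz of CPU reservation, and roughly 1.4 GB of storage per cluster.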

UI Overview

The vSphere Client contains messages and notes that show information about the vCLS agent VMs, also stating that the power state and resources of these VMs are handled by vCLS.

Operations

As stated before, the agent VMs are maintained by vCLS. There’s no need for VI admins to power off the VMs. In fact, the vSphere Client shows a warning when an agent VM is powered off.

When a host is placed into maintenance mode, the vCLS agent VMs are migrated to other hosts within the cluster like regular VMs. Customers should refrain from removing or renaming the agent VMs or their folder in order to keep the cluster services healthy.

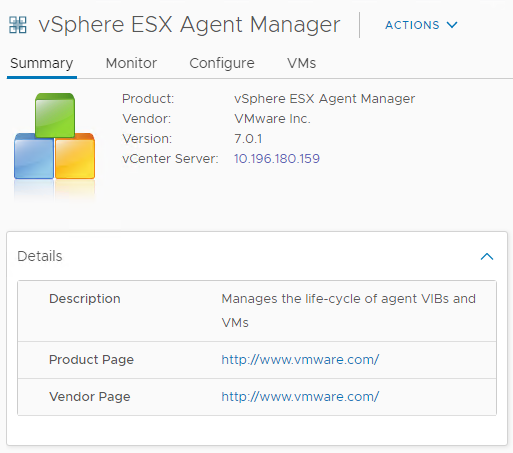

The lifecycle for vCLS agent VMs is maintained by the vSphere ESX Agent Manager (EAM). The Agent Manager creates the VMs automatically, and re-creates or powers on the VMs when users try to power off or delete them. In the example below, you’ll see a power-off and a delete operation. EAM recovers the agent VM automatically from both.

Automation and vCLS

For customers using scripts to automate tasks, it’s important to build in awareness of the agent VMs so that, for example, clean-up scripts that delete stale VMs ignore them. The vCLS agent VMs are quickly identified in the vSphere Client, where they are listed in the vCLS folder. Also, the VMs tab under Administration > vCenter Server Extensions > vSphere ESX Agent Manager lists the agent VMs from all clusters managed by that vCenter Server instance.

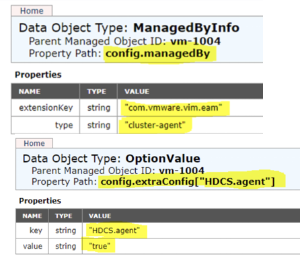

Every agent VM has additional properties so that automated tasks can recognize and ignore it. These properties can also be found using the Managed Object Browser (MOB). The specific properties include:

- ManagedByInfo
  - extensionKey == “com.vmware.vim.eam”
  - type == “cluster-agent”
- ExtraConfig keys
  - “eam.agent.ovfPackageUrl”
  - “eam.agent.agencyMoId”
  - “eam.agent.agentMoId”

vCLS Agent VMs have an additional data property key “HDCS.agent” set to “true”. This property is automatically pushed down to the ESXi host along with the other VM ExtraConfig properties explicitly set by EAM.
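As an illustration of how a clean-up script might use these properties, here is a minimal Python sketch that filters out agent VMs based on the ManagedByInfo values listed above. It assumes VM objects that expose `config.managedBy.extensionKey` and `config.managedBy.type`, which is the shape of the vSphere API's VirtualMachine object as surfaced by SDKs such as pyVmomi. The helper names `is_vcls_agent_vm` and `stale_vm_candidates` are hypothetical, and the example uses plain stand-in objects instead of a live vCenter Server connection.

```python
from types import SimpleNamespace

# Values taken from the ManagedByInfo properties listed in the article.
EAM_EXTENSION_KEY = "com.vmware.vim.eam"
CLUSTER_AGENT_TYPE = "cluster-agent"


def is_vcls_agent_vm(vm) -> bool:
    """Return True if `vm` carries the EAM cluster-agent ManagedByInfo markers.

    `vm` is assumed to look like a vSphere VirtualMachine object, with
    `vm.config.managedBy` being None for VMs not managed by an extension.
    """
    config = getattr(vm, "config", None)
    managed_by = getattr(config, "managedBy", None)
    if managed_by is None:
        return False
    return (
        managed_by.extensionKey == EAM_EXTENSION_KEY
        and managed_by.type == CLUSTER_AGENT_TYPE
    )


def stale_vm_candidates(vms):
    """Return the VMs a clean-up script may consider, skipping agent VMs."""
    return [vm for vm in vms if not is_vcls_agent_vm(vm)]


if __name__ == "__main__":
    # Stand-in objects for illustration only; names are made up.
    agent = SimpleNamespace(
        name="example-agent-vm",
        config=SimpleNamespace(
            managedBy=SimpleNamespace(
                extensionKey=EAM_EXTENSION_KEY, type=CLUSTER_AGENT_TYPE
            )
        ),
    )
    workload = SimpleNamespace(
        name="example-workload-vm", config=SimpleNamespace(managedBy=None)
    )
    print([vm.name for vm in stale_vm_candidates([agent, workload])])
```

Checking the ManagedByInfo values rather than the VM name or folder keeps the filter working even if an agent VM has been renamed or moved, which the article advises against but a script cannot rule out.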