MySQL on VMware vSAN 6.7 All-Flash

Executive Summary

This section covers the business case, solution overview, solution benefits, and key results of MySQL on VMware vSAN 6.7 solution.

Business Case

The “LAMP”, which is originally popularized from the phrase “Linux, Apache, MySQL and PHP”, refers to a generic software stack model that has dramatically accelerated the wide adoption of open source technologies. MySQL is the world’s most popular open source database management system and is a key part of the LAMP stack. MySQL offers deployment flexibility, on-demand scalability, high performance and better cost-efficiency open source database solutions in a customized LAMP stack or a Turnkey Linux environment.

VMware vSAN™ is a HyperConverged Infrastructure (HCI) solution with a VMware vSphere ® -native and high-performance architecture, which empowers simplified scalability, high availability and reduced TCO for mission-critical business applications. The all-flash vSAN provides Fault to Tolerance (FTT) method and rich data services through Storage Policy-Based Management (SPBM), which ensures data integrity and improves management agility for enterprise administrators.

This solution validates MySQL powered by VMware vSAN HCI, offering performance scalability, storage efficiency, high availability protection and failure tolerance, for MySQL based applications such as web farms or even business mission-critical OLTP transactions.

Solution Overview

This reference architecture is a showcase of using VMware vSAN all-flash as an HCI for operating and managing MySQL in a vSphere environment:

- We measure the performance scalability of MySQL running on a vSAN all-flash cluster.

- We showcase the storage efficiency of vSAN all-flash for MySQL databases.

- We illustrate the SPBM benefits for MySQL applications.

- We demonstrate the implementation of MySQL Group Replication technology on vSAN to guarantee application-level high availability.

- We show the resiliency of vSAN against different types of hardware failures, while maintaining business continuity and protecting from data loss.

Key Results

This reference architecture in this paper:

- Designs the architecture of deploying MySQL on a vSAN cluster.

- Validates predictable performance scalability of MySQL running on a vSAN all-flash cluster.

- Demonstrates the data service offering of vSAN SPBM to improve storage efficiency and data integrity and minimize the performance impact.

- Implements MySQL high availability with group replication to protect from MySQL service failures or database crashes.

- Demonstrates various vSAN fault tolerance options for business continuity of MySQL workloads.

- Provides best practices and general guidance.

Introduction

This section provides the purpose, scope, and intended audience of this document.

Purpose

This reference architecture verifies the performance and protection of running MySQL on a vSAN cluster.

Scope

The reference architecture covers the following scenarios:

- Performance scalability validation of MySQL on vSAN all-flash with optimized parameters

- vSAN SPBM featuring for MySQL deployment

- Architecting and validating MySQL group replication implementation on vSAN

- Resiliency against various vSAN hardware failures

Audience

This reference architecture is intended but not limited to the following audience:

The Small/Medium Enterprise (SME) Administrator

The SME administrator most often deals with MySQL in the form of turn-key LAMP stacks, as they are a convenient way for any administrators in a small to medium enterprise environment to deploy an application stack. Due to the deployment of LAMP stacks, these environments often have one MySQL instance to one application.

In this solution, we demonstrate that by leveraging the vSAN SPBM feature, the SME administrator has the ability to granularly adjust the performance and protection of an individual LAMP stack on a VM scale, even though it is running in a single cluster.

The Larger Enterprise Administrator

The large enterprise administrator likely has a larger set of resources added to accommodate a farm of MySQL servers. These environments have a more traditional one-to-many arrangement. Large enterprise administrators may be more focused on performance and protection, as the front-end applications or web farms using the databases are more demanding.

In this solution, we demonstrate that the scalable performance of VMware vSAN can guarantee the consistency of application performance, and can be sustained in the event of a failure condition such as a host offline.

The Database Administrator (DBA)

Dedicated DBAs, who typically exist in larger enterprise environments, will have a collection of MySQL servers that provide services to a given set of applications. They typically have a farm of MySQL servers.

In this solution, we demonstrate vSAN can offer predictable performance to meet specific workload demands or business objectives for both scale-up and scale-out considerations, as well as dev/test environment, where they can control usage by using limits.

Technology Overview

This section provides an overview of the technologies used in this reference architecture:

- VMware vSphere 6.7

- VMware vSAN 6.7

- MySQL 5.7.21

- Quanta QuantaPlex T41S-2U

VMware vSphere 6.7

VMware vSphere 6.7 is the next-generation infrastructure for next-generation applications. It provides a powerful, flexible, and secure foundation for business agility that accelerates the digital transformation to cloud computing and promotes success in the digital economy.

vSphere 6.7 supports both existing and next-generation applications through its:

- Simplified customer experience for automation and management at scale

- Comprehensive built-in security for protecting data, infrastructure, and access

- Universal application platform for running any application anywhere

With vSphere 6.7, customers can run, manage, connect, and secure their applications in a common operating environment, across clouds and devices.

VMware vSAN 6.7

VMware vSAN, the market leader HCI, enables low-cost and high-performance next-generation HCI solutions, converges traditional IT infrastructure silos onto industry-standard servers and virtualizes physical infrastructure to help customers easily evolve their infrastructure without risk, improve TCO over traditional resource silos, and scale to tomorrow with support for new hardware, applications, and cloud strategies. The natively integrated VMware infrastructure combines radically simple VMware vSAN storage, the market-leading VMware vSphere Hypervisor, and the VMware vCenter Server ® unified management solution all on the broadest and deepest set of HCI deployment options.

vSAN 6.7 introduces further performance and space efficiencies. Adaptive resync ensures fair-share of resources are available for VM IOs and resync IOs during dynamic changes in load on the system providing optimal use of resources. Optimization of the destaging mechanism has resulted in data that drains more quickly from the write buffer to the capacity tier. The swap object for each VM is now thin provisioned by default, and will also match the storage policy attributes assigned to the VM introducing the potential for significant space efficiency.

MySQL 5.7.21

MySQL is the most popular open source database system which enables the cost-effective delivery of reliable, high-performance and scalable web-based and embedded database applications. It is an integrated transaction safe, ACID-compliant database with full commit, rollback, crash recovery, and row-level locking capabilities. MySQL delivers the ease of use, scalability, and high performance, as well as a full suite of database drivers and visual tools to help developers and DBAs build and manage their business-critical MySQL applications.

InnoDB Engine

InnoDB is the default database storage engine for the latest MySQL 5.7 release, which offers a balance between high reliability and performance. InnoDB engine has the following advantages:

- Data Manipulation Language (DML) operations are fully compliant with an ACID model.

- Row-level locking and Oracle-style consistent reads increase multi-user concurrency and performance.

- InnoDB engine arranges data on disks to optimize queries based on the primary key.

- InnoDB supports foreign key constraints to maintain data integrity.

Group Replication

Group replication is a plugin for MySQL, which offers high availability technology for MySQL database replication solutions. It supports built-in conflict detection, and handling and consistency guarantees to ensure multi-primary write anywhere. The high available replica on each member of the group replication sets allows it to enable both duplicate data and writes on each MySQL server instance. Group replication supports two operation modes:

- Single primary: Only the primary instance can service read-write transactions, the rest of the replication instances are configured as read-only mode.

- Multi-primary: All the members in a group replication set can service read-write transactions.

Quanta QuantaPlex T41S-2U

Tailored for a software-defined storage solution, QuantaPlex T41S-2U features an ultra-dense design equipped with four independent nodes with device slots that can be used for vSAN, consuming just 2U of space. Customers can set up a VMware vSAN cluster in a single appliance, which greatly simplifies the complexity of building a software-defined solution.

With shared infrastructure such as cooling and power supply, and dense design that reduces space consumption, QuantaPlex T41S-2U delivers space and energy efficiency, providing an ideal choice for building solution-based appliances at a low cost.

Operated with Intel E5-2600 v3, v4 product family with maximum 18-core count CPU and DDR4 memory technology up to 1024 GB memory capacity, QuantaPlex T41S-2U improves productivity by offering superior system performance. Besides, each node supports up to six 2.5-inch hot-swap drives with HDDs up to 2 TB capacity and SSDs up to 3.84 TB. Hence, 4 nodes can have up to 76.8 TB of storage capacity using six drives. Therefore, QuantaPlex T41S-2U is an optimum option for compute-storage balance demand.

For more details of Quanta Cloud Technology and Quanta hardware platform, visit here.

Solution Configuration

This section introduces the resources and configurations for the solution including an architecture diagram, hardware and software resources and other relevant configurations.

Solution Architecture

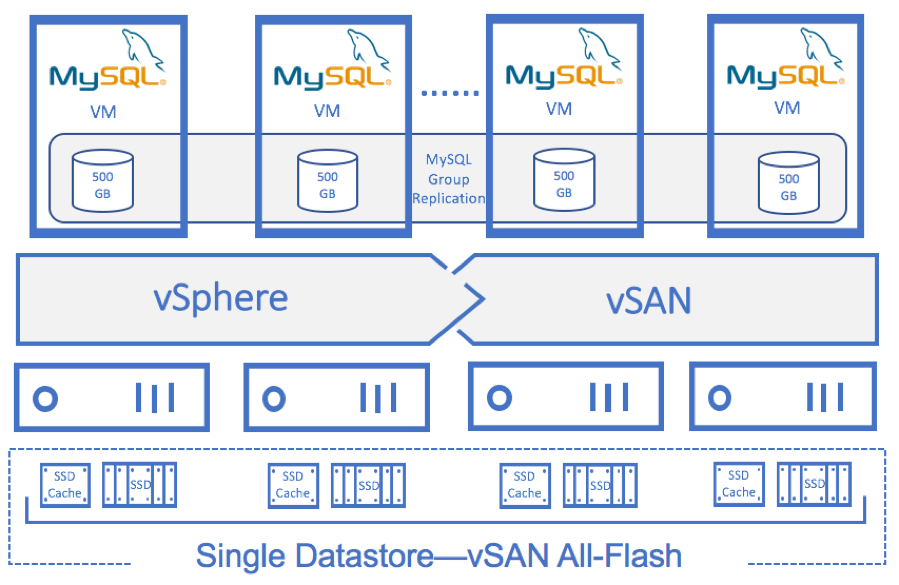

In this solution, we created a 4-node vSphere and vSAN cluster for single MySQL server instances deployment. We also configured group replication on each MySQL instance and set the running mode to single primary to protect from MySQL software failure.

For the single instance deployment, we deployed one virtual machine per ESXi host and validated the MySQL virtual machine count up to four. For those users who need different MySQL backends for separate business purposes, they can scale the MySQL workloads on demand and use vSAN to offer fault tolerance and guarantee business continuity. In addition, users could use vSAN SPBM for space efficiency, workload isolation or IOPS limitation on a virtual disk basis.

For the group replication deployment, we enabled the group replication plugin on each MySQL instance virtual machine and created up to 5-instance scale. It allows users to protect their MySQL database from application level failures for their critical MySQL applications. We evenly distributed the virtual machines participating in group replication to fully leverage the cluster resources.

The detailed solution architecture is as shown in Figure 1.

Figure 1. MySQL on vSAN 6.7 All-Flash Solution Architecture

Hardware Resources

In this solution, we used QuantaPlex T41S-2U, a 2U 4-nodes high density scalable and enterprise-class server hardware with direct-attached SSDs on ESXi hosts to provide a vSAN datastore. Each ESXi host has one disk group consisting of one cache-tier SSD and five capacity-tier SSDs.

Each ESXi server in the vSAN cluster has the following configuration:

Table 1. Hardware Configuration per ESXi Host

| PROPERTY | SPECIFICATION |

|---|---|

| Server model name | QuantaPlex T41S-2U (2U, 4-node) |

| CPU | 2 x Intel Xeon processor E5-2690 v3, 12 core each |

| RAM | 256GB |

| Network adapter | 1 x Quanta ON 82599ES dual-port 10GbE mezzanine card |

| Storage adapter | 1 x Quanta SAS 3008 12Gb |

| Disks | 1 x Intel S4600 2.5” SATA SSD 960 GB 5 x Intel S4500 2.5” SATA SSD 3.84TB |

Software Resources

Table 2 shows the software resources used in this solution.

Table 2 . Software Resources

| SOFTWARE | VERSION | PURPOSE |

|---|---|---|

| VMware vCenter Server and ESXi | 6. 7 | vSphere Cluster to host virtual machines and provide the vSAN Cluster. VMware vCenter Server provides a centralized platform for managing VMware vSphere environments |

| VMware vSAN | 6.7 | Software-defined storage solution for hyperconverged infrastructure |

| Linux operating system | Oracle Linux 7 Update 4 | Operating system for the MySQL database virtual machines and load generation virtual machines |

| MySQL | MySQL Community Server 5.7.21 | Database software |

| SysBench benchmark | 0.4.12 | Complex OLTP load generator tool |

Network Configuration

We created a vSphere Distributed Switch™ to act as a single virtual switch across all associated hosts in the data cluster.

The vSphere Distributed Switch used two 10GbE adapters for the teaming and failover. A port group defines properties regarding security, traffic shaping, and NIC teaming. To isolate vSAN, VM (node) and vMotion traffic, we used the default port group settings except for the uplink failover order. We assigned one dedicated NIC as the active link and assigned another NIC as the standby link. For vSAN and vMotion, the uplink order is reversed. See Table 3 for network configuration.

Table 3. Network Configuration

| DISTRIBUTED PORT GROUP | ACTIVE UPLINK | STANDBY UPLINIK |

|---|---|---|

| VMware vSAN | Uplink2 | Uplink1 |

| VM and vSphere vMotion | Uplink1 | Uplink2 |

NOTE: vSAN and vMotion are on separate vLANs.

MySQL Virtual Machine Configuration

We configured each MySQL virtual machine as described in Table 4 for performance tests and populated the test database using SysBench Benchmark tool. We set the SCSI controller mode for MySQL data disks to VMware Paravirtual. All the virtual disks are configured with thin provisioning.

Table 4. MySQL Virtual Machine Configuration

| MySQL VM Role | vCPU | Memory (GB) | VM Count | Virtual Disks | SCSI ID (Controller, LUN) |

SCSI Type |

|---|---|---|---|---|---|---|

| MySQL VM for SysBench complex OLTP benchmark | 16 | 64 | 4 | OS disk: 40 GB | SCSI (0, 0) | LSI Logic |

| MySQL disk: 800 GB | SCSI (1, 0) | VMware Paravirtual | ||||

| MySQL VM for group replication test | 16 | 64 | Up to 5 | OS disk: 40 GB | SCSI (0, 0) | LSI Logic |

| MySQL disk: 800 GB | SCSI (1, 0) | VMware Paravirtual |

MySQL Single Instance Deployment

In the MySQL single instance deployment, we created 4 virtual machines for SysBench complex OLTP benchmark testing. We formatted the MySQL data disk with Linux ext4 filesystem, and mounted the disk to the MySQL data directory. We customized the MySQL parameters through the configuration file for performance consideration in the solution validation part.

The test database was populated by SysBench with 250 million records and about 540 GB in total. Table 5 shows the MySQL single instance deployment profile in the following solution validation part.

Table 5. MySQL Single Instance Configuration

| Item | Test Configuration |

|---|---|

| MySQL DB engine | InnoDB |

| MySQL Database size | 540 GB, 250 million records |

| MySQL parameters | InnoDB buffer pool: We increased the buffer pool to 70-80% of the VM RAM size, and increased the buffer pool instances to improve concurrency and reduce transaction contention.

InnoDB log files: We increased the log file size to allow pre-allocation. And we changed the InnoDB flush method value to 2 for a compromise of performance and data integrity consideration.

Concurrency settings:

InnoDB flush data/log: O_DIRECT avoids double buffering between InnoDB buffer pool and the operating system file system cache, and in this case trying to operate data file directly from vSAN. Disabling InnoDB to flush neighbor pages since the seek time is not a significant factor for SSD in all-flash vSAN.

Note: The parameters above are only used for performance testing purpose. Users should fully test those parameters before applying them in their environment (especially production environment). |

| Workload | Mixed complex OLTP workload, with 75/25 read/write ratio. |

Group Replication Deployment

In the group replication deployment, we installed the group replication plugin on each MySQL instance and the sample configuration file is listed below:

# server id should be unique on each MySQL instanceserver_id=1gtid_mode=ONenforce_gtid_consistency=ONmaster_info_repository=TABLErelay_log_info_repository=TABLEbinlog_checksum=NONElog_slave_updates=ONlog_bin=binlogbinlog_format=ROWtransaction_write_set_extraction=XXHASH64# group replication group name should be uniqueloose-group_replication_group_name="1bb1b861-f776-11e6-be42-782bcb377188"loose-group_replication_start_on_boot=offloose-group_replication_local_address= "IP address1:port1"loose-group_replication_group_seeds= "IP address1:port1,IP address2:port2, … , IP addressN:portN"loose-group_replication_bootstrap_group= off

We configured the group replication to work in single primary mode. In this mode, only one MySQL instance is configured as the primary instance with read and write transactions enabled, while all other instances are only servicing read transactions. If the primary instance fails, it will:

- Automatically select a new primary instance based on each MySQL instance’s UUID.

- The new primary’s UUID will be known on the rest of the group replication instances.

- If the failed primary instance is able to rejoin, it will function only as read-only.

If a non-primary instance fails, the workload on this node can be configured to be directed to other instances in the group. Single primary mode eliminated some of the consistency checks as compared to multiple primary mode that each instance in the group can support read and write operation, thus there could be less performance compromise. We validated the group replication performance and failover conditions in the Group Replication Test chapter.

Solution Validation

In this section, we present the test methodologies and results used to validate this solution.

Test Overview

The solution validates both performance and protection for running MySQL on vSAN. And it includes the following test scenarios:

-

MySQL on vSAN scalability test

This test validates scaling performance of MySQL mixed OLTP workloads on vSAN. The default vSAN storage policy is adopted in this test scenario.

-

vSAN SPBM based test

This test validates the following SPBM features included in the vSAN storage policy:

- Changing default vSAN storage policy to checksum disabled

- Changing default vSAN storage policy to different stripe width counts

- Configuring default vSAN storage policy with deduplication and compression enabled

- Changing default vSAN storage policy to RAID 5/6 enabled

-

MySQL group replication test

This test validates MySQL group replication running on vSAN, offering additional application level protection.

-

vSAN resiliency test

This test validates the following failure scenarios and how vSAN helps to guarantee MySQL workload continuity.

- Disk failure: Simulate a capacity tier disk failure on a vSAN disk

- Disk group failure: Simulate a cache tier disk failure on a vSAN disk

- Host failure: Simulate an unplanned host failure within a vSAN cluster

Testing Tools

We used the following monitoring tools and benchmark tools in this solution testing:

-

Monitoring tools

vSAN Performance Service

vSAN Performance Service is used to monitor the performance of the vSAN environment, using the web client. The performance service collects and analyzes performance statistics and displays the data in a graphical format. You can use the performance charts to manage your workload and determine the root cause of problems.

vSAN Health Check

vSAN Health Check delivers a simplified troubleshooting and monitoring experience of all things related to vSAN. Through the web client, it offers multiple health checks specifically for vSAN including cluster, hardware compatibility, data, limits, physical disks. It is used to check the health of the vSAN before the MySQL deployment.

vRealize Operations

vSphere and vSAN 6.7 includes vRealize Operations within vCenter . This new feature allows vSphere customers to see a subset of intelligence offered up by vRealize Operations through a single vCenter user interface. Light-weight purpose-built dashboards are included for both vSphere and vSAN. It is easy to deploy, provides multi-cluster visibility, and does not require any additional licensing.

esxtop

esxtop is a command-line tool that can be used to collect data and provide real-time information about the resource usage of a vSphere environment such as CPU, disk, memory, and network usage. We measure the ESXi Server performance with this tool.

Linux iostat

The Linux iostat command is used for monitoring system input/output device loading by observing the time the devices are active in relation to their average transfer rates. The iostat command generates reports that can be used to monitor the operating status of disk devices from the OS level.

-

Database and load generation tool

SysBench Benchmark

SysBench is a modular, cross platform and multithreaded benchmark tool for evaluating OS parameters that are important for a system running a database under intensive load. The oltp test mode is used in solution validation to benchmark a real MySQL database performance.

In this solution validation, we used the SysBench OLTP library to populate MySQL test database, and generate workload for performance and protection testing.

-

Populate database example

sysbench --test=oltp --mysql-host=MySQL001 --mysql-socket=/mnt/mysqldata/mysql.sock --oltp-num-tables=10 --oltp-table-size=250000000 --mysql-db=test --mysql-user=root --mysql-password=password prepare

-

Generate workload example

sysbench --test=oltp --mysql-host=MySQL001 --mysql-socket=/mnt/mysqldata/mysql.sock --oltp-num-tables=10 --oltp-table-size=250000000 --mysql-db=test --mysql-user=root --mysql-password=password --max-time=60 --max-requests=0 --num-threads=32 run

-

MySQL on vSAN Scalability Test

Test Objective

This test is designed to showcase the performance scalability of a single all-flash vSAN cluster running a typical MySQL mixed workload.

Test Scenario

We designed two test scenarios as follows:

- Baseline test: scale with different SysBench test threads within one MySQL virtual machine

- Scale-out test: scale with different MySQL virtual machines count within one vSAN cluster

Test Procedures

The following describes the test procedures in this scenario:

- Update the MySQL “my.cnf” configuration file and restart the MySQL Service. Start the SysBench workload and run the warm-up SyBench test for one hour.

- Set the thread count to 1; run the SysBench OLTP complex read/write test for 30 minutes; collect and measure the performance result including:

- Transaction per second (TPS)

- Transaction response time: the latency monitored from the application level

- vSAN backend IOPS from vSAN Performance Services

- vSAN backend response time from vSAN Performance Services

- Set the thread count to 2, 4, 8, 16, 32 respectively, and repeat the test in step 2.

- Configure the second MySQL VM with the same “my.cnf” configuration file, restart the MySQL service and run the warm-up SysBench test for one hour.

- Set the thread count to 1 on both MySQL instances, run SysBench OLTP complex read/write test simultaneously for 30 minutes, collect and measure the performance result as described in step 2.

- Set the thread count to 2, 4, 8, 16, 32 respectively, repeat the test in step 5.

- Configure the third and fourth MySQL VM with the same “my.cnf” configuration file, restart the MySQL Service and run the warm-up SysBench test for one hour.

- Set the thread count to 1 on each MySQL instance, run the SysBench OLTP complex read/write test simultaneously for 30 minutes, collect and measure the performance result as described in step 2.

- Set the thread count to 2, 4, 8, 16, 32 respectively, and repeat the test in step 8.

Test Scenarios and Results

Figure 2 shows the MySQL scalability performance in the baseline test using various test threads for running the SysBench mixed OLTP workloads on vSAN.

We started with average 362 TPS and kept the average transaction response time of 2.75 milliseconds, and the performance boosts 8 times to 2,368 TPS with the average transaction response time kept in 13.5 milliseconds as shown in Figure 3. Overall the performance is close to linear while scaling from 1 to 32 threads.

Figure 2. SysBench Baseline Test Result with Multi-threads – Transaction per Second

Figure 3. SysBench Baseline Test Result with Multi-threads - Average Transaction Response Time in Milliseconds

Figure 4 and Figure 5 show the MySQL scalability performance in the scale-out test with two MySQL virtual machines. The workloads are identical across multiple virtual machines that are spread out on different hosts, and the workloads were run at the same time. Compared to the result in single VM, the TPS achieved in this test almost doubled for all the thread count 1 to 32, again with the maximum number achieved in 32 threads for 4,038 TPS, and the transaction response time kept only about 15 milliseconds.

Figure 4. SysBench Scale-out Test Result with 2VMs – Transaction per Second

Figure 5. SysBench Scale-out Test Result with 2VMs—Average Transaction Response Time in Milliseconds

Then we increased the VM count to four and the result is shown in Figure 6 and Figure 7. Compared to 2 VM result, the average TPS result increase over 65%. With 32 threads, it increased from 4,038 to 6,609, while the transaction response time only increased to around 19 milliseconds.

Figure 6. SysBench Scale-out Test Result with 4VMs—Transaction per Second

Figure 7. SysBench Scale-out Test Result with 4VMs—Average Transaction Response Time in Milliseconds

In summary, vSAN provided the scaling performance for MySQL mixed workloads running at the same time.

Table 5. Test Result Summary for vSAN Scalability Test

| MySQL VM | Average TPS (Maximum) |

Average Transaction Response Time (ms) |

ESXi Processor Time (%) | Aggregated vSAN Backend IOPS | vSAN Backend Response time (ms) Read/Write |

|---|---|---|---|---|---|

|

1 |

2,368 |

13.5 |

41.16/3.97/2.88/5.15 |

25,644 |

0.515/1.945 |

|

2 |

4,038 |

15.84 |

35.90/35.78/4.53/7.31 |

39,275 |

0.621/2.631 |

|

4 |

6,609 |

19.37 |

34.05/35.23/31.95/36.29 |

65,407 |

0.791/3.455 |

- Within a single VM, as the workload demands increased (thread counts), the average TPS achieved boosted 8 times and the average response time kept below 13.5 milliseconds.

- Within a single vSAN cluster, as the number of provisioned MySQL virtual machines increased, vSAN could deliver up to 6,609 average TPS with the average transaction response time kept around 19 milliseconds.

- The aggregated vSAN IOPS was over 65 thousand. The highest vSAN storage response time was kept within 1 millisecond for read performance and 3.5 milliseconds for write performance. Figure 8 shows the vSAN backend performance in the 4 virtual machines and 32 threads test from vSAN Performance Services.

Figure 8. vSAN Backend Performance

vSAN SPBM Based Test

Test Objective

This test is designed to showcase the vSAN SPBM offering for MySQL performance and capacity considerations.

Test Scenario

We designed the following test scenarios:

- Checksum test: Evaluate the performance impact over MySQL single instances of enabling/disabling checksum in the vSAN storage policy.

- Stripe width test: Evaluate the performance impact over MySQL single instances of setting different number of stripe width in the vSAN storage policy.

- Deduplication and compression test: Evaluate the performance impact over MySQL single instances of enabling deduplication and compression on all-flash vSAN, and evaluate the space efficiency before and after.

- Erasure coding test: Evaluate the performance impact over MySQL single instances of enabling erasure coding in the vSAN storage policy and evaluate the space efficiency before and after..

Test Procedures

The following describes the test procedures in this scenario:

- Create a vSAN storage policy with checksum disabled. Apply the new storage policy to the MySQL data disk on each of the MySQL virtual machine. Run SysBench complex OLTP test with 32 threads and compared the test result with baseline to evaluate the performance impact.

- Create a vSAN storage policy with stripe width set to 2, 4, 6, 8, 10, respectively, based on the vSAN default storage policy. Apply the new storage policy to the MySQL data disk on each of the MySQL virtual machine. Run SysBench complex OLTP test with 32 threads and compare the test results with the baseline to evaluate the performance impact.

- Enable deduplication and compression on the vSAN cluster. Run SysBench complex OLTP test with 32 threads and compare the test result with the baseline to evaluate the performance impact. Measure the space savings before and after enabling deduplication and compression.

- Based on step 3, create a vSAN storage policy with erasure coding enabled. Apply the new storage policy to the MySQL data disk on each of the MySQL virtual machine. Run SysBench complex OLTP test with 32 threads and compare the test results with the baseline to evaluate the performance impact. Measure the further space savings ratio before and after enabling erasure coding.

Test Results

Data integrity consideration with checksum

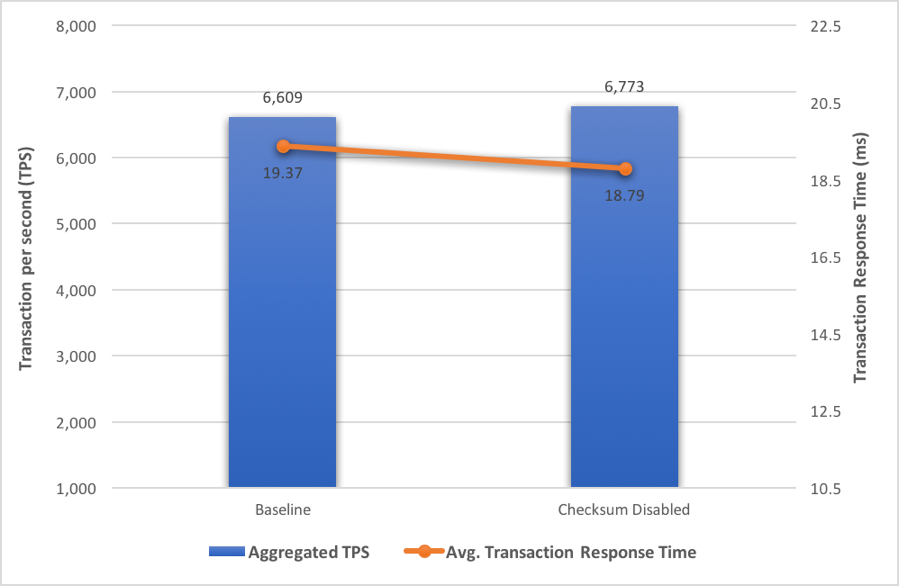

The checksum feature is enabled by default in the vSAN SPBM, which ensures the data integrity at the vSAN component and object level. In the checksum test, we ddeactivated the checksum in the default vSAN storage policy, ran the SysBench complex OLP test with 32 threads and compared the test results.

As shown in Figure 9, we observed the aggregated TPS was 6,609 in the baseline test, and 6,773 after disabling checksum, which was less than 2.5% difference. Meanwhile, the average transaction response time was 19.37 and 18.79 milliseconds respectively. And the average ESXi CPU utilization of both tests was 34.38% in baseline and 28.90% if we deactivated checksum.

In conclusion, the checksum feature provides vSAN data integrity with negligible performance compromise. The CPU offloaded software checksum operation consumed around 5% to 6% of the ESXi processor time. Therefore, we recommend keeping the vSAN checksum default, enabled, and users may deactivate checksum only if extreme performance is required for their MySQL applications.

Figure 9. vSAN Checksum Test Result

Performance consideration with stripe width

In the stripe width test, as the system contains 20 capacity SSDs in total, with vSAN default tolerance setting the maximum stripe could be set to up to 10. Figure 10 shows the stripe width test results, the aggregated TPS of four MySQL instances reached 7,040 when we configured the stripe width to 8, and the application response time was minimized to 18 milliseconds. Compared to the baseline result, the performance improvement was 6.5%.

As the stripe width increases, the MySQL virtual disk objects are more evenly distributed across the capacity disks in a vSAN cluster. Distributing objects across a larger number of capacity disks increases rate at which read requests can be executed, improving the performance of the MySQL servers. However, it is hard to determine the stripe width impact on write performance since the write operations are conducted at the cache-tier for all-flash vSAN.

In conclusion, it is wise to understand your MySQL workloads profile first and change the stripe width setting only if the MySQL application is I/O demanding, or you need stripe width setting to help spread your large MySQL virtual disks more evenly on the vSAN capacity SSDs. Otherwise, leave the default stripe width setting to 1 and adjust the value when appropriate.

Figure 10. Stripe Width Test Result

Space efficiency consideration

vSAN all-flash configuration offers deduplication and compression feature to help save capacity consumption and improve overall vSAN space efficiency. This feature is not enabled by default, but users can choose whether to enable this feature.

vSAN SPBM erasure coding settings can help further improve MySQL database space efficiency on vSAN. The default RAID level is RAID 1/0 and users can configure erasure coding in the vSAN storage policy to enable RAID 5/6 which requests at least 4 and 6 hosts in a cluster, respectively. In our test environment, RAID 5 is implemented since the cluster has four hosts.

In the baseline test, we tested with four MySQL single instances. In the SysBench 32 threads test, we achieved 6,609 TPS and kept the average transaction response time in about 19 milliseconds. The total environment space consumption was 6.18 TB with default fault tolerance level.

As we enabled deduplication and compression in the vSAN cluster, and we ran the same workload, we observed similar performance compared to the baseline as shown in Figure 11. The aggregated TPS of four MySQL single instances reached 6,530 while the average transaction response time still kept about 19 milliseconds. We also observed significant space savings on the vSAN with deduplication and compression enabled, the capacity consumption of which is 3.56 TB and reached 42% of savings.

Then we enabled erasure coding with RAID 5 on the MySQL data disks and we observed further space savings of 45% as shown in Figure 12, the final space consumption was 3.41 TB. As our workload is 75/25 in read/write ratio, RAID 5 does not help heavy write operations. We observed higher average transaction response time which reached 29 milliseconds, and the aggregated TPS was 4,382 with 30% performance downgrade.

Figure 11. Deduplication and Compression, Erasure Coding Test Result

Figure 12. Space Efficiency Summary

In conclusion, the all-flash vSAN deduplication and compression, together with vSAN erasure coding could reduce the vSAN capacity consumption and help improve the overall space efficiency. Deduplication and compression has negligible performance impact for MySQL workload. However, vSAN erasure coding is not recommended for performance demanding conditions since it may cause performance drop for write-intensive workloads. Figure 13 shows the CPU utilization of each ESXi server. The CPU overhead of both deduplication/compression and erasure coding is minimized.

Figure 13. ESXi CPU Consumption Result

Group Replication Test

Test Objective

This test is designed to showcase the application level high availability protection for MySQL workloads featuring group replication running in single-primary mode.

Test Scenario

We designed the following test scenarios:

- Group replication performance test: Evaluate the performance impact with different number of MySQL Server instances in a group replication cluster.

- Group replication failure test: Evaluate the MySQL workload continuity under certain server instance failure situations.

- Replication instance failure: shut down the service on one of the MySQL replication instances, which runs in read-only mode. Each replication instance maintained one database copy of the primary instance.

- Primary instance failure: shut down the service on the MySQL primary instance that runs in read-write mode.

Test Procedures

The following describes the test procedures in this scenario:

- Configure the MySQL group replication cluster with two instances (minimum configuration), create the same test database as in the baseline test. After the group replication syncs the database across each server instance, run a warm-up test for 1 hour.

- On the MySQL primary server instance, run the SysBench complex OLTP test with the thread count set to 8 for 30 minutes, collect and measure the performance result. Compare the test results with the baseline performance.

- Reconfigure MySQL group replication cluster with 3 and 5 server instances respectively, repeat the test step 1 and 2. The number of failures that could be tolerated and the minimum number of MySQL server instances required can be described as:

Minimum number of server instances required = 2 * Number of failures to tolerate + 1

4. In the 5-member group replication cluster, run the baseline workload against the primary instance for a certain period. Shut down the MySQL service on one of the replication instances, collect TPS results every second and measure the workload continuity.

5. In the 5-member group replication cluster, run the baseline workload against the primary instance for a certain period. Shut down MySQL service on the primary instance, measure the time of workload to recover on the new primary instance.

Test Results

Figure 14 shows the group replication test result. In the baseline, we achieved 1,300 TPS and 6.14 milliseconds transaction response time on a single MySQL instance.

After we added another MySQL instance to configure as a MySQL group replication cluster, the TPS slightly dropped to 1,089 when running the same workload. This impact is caused by IO synchronization between the primary instance and the replication instance, and the transaction response time slightly increased to 7.33 milliseconds. Notice two instances are the minimum configuration for group replication, only for testing purpose.

The recommended configuration is to set up group replication with three MySQL server instances. As indicated in the figure, the performance is about the same as the two-instance cluster. The average TPS reached 1,064 while the average transaction response time was kept below 7.51 millisecond. In this configuration, it could tolerate one instance failure in spite of a slight performance drop.

As we provisioned a 5-member group replication cluster that could tolerate two instance failures for higher fault tolerance level, the achieved average TPS was 967 and response time kept below 8.26 milliseconds.

Figure 14. Group Replication Performance Result with Different Number of MySQL Instances

Figure 15 shows the sustained TPS on the primary instance during a replication instance failure in a 5-member group replication cluster. The failure point happened in the middle of the test, we could expect a slight TPS increase after failure, and the average TPS increased from 989 to 1,058. The reason is that the group has lost one member but the origin workload is not changed. With less replication work to sync among the group, the primary instance could service more transactions.

Figure 15. Simulation of Replication Instance Failure

Figure 16 shows the sustained TPS on a replication instance during a primary instance failure in a 5-member group. The primary instance failure happened at the very beginning of the test, after that the remaining replication instances would elect a new primary instance and apply relay logs. During this time, the group would not service new transactions. After all the instances complete synchronization, the new primary instance took over and the production workload could be recovered to the original state.

Figure 16. Simulation of Primary Instance Failure

vSAN Resiliency Test

Test Objective

This test is designed to showcase how vSAN can help MySQL workloads tolerate hardware failures thus guarantee business continuity.

Test Scenario

We designed the following test scenarios:

- Disk failure: Evaluate how vSAN handles a disk failure to ensure the sustainability of MySQL workloads.

- Disk group failure: Evaluate how vSAN handles a disk group failure to ensure the sustainability of MySQL workloads.

- Host failure: Evaluate how vSAN handles a host outage to ensure the sustainability of MySQL workloads.

Test Procedures

The following describes the test procedures in this scenario:

- Running SysBench complex OLTP test with the thread count set to 8 against one MySQL server instance. Monitoring the workload and when entering steady state, manually inject a disk error on a capacity SSD that does not host the MySQL test data. Collect and measure the performance before and after the disk failure.

- Repeat the test in step 1, instead inject a disk error on a capacity SSD that hosts the MySQL test data. Collect and measure the performance before and after the disk failure.

- Repeat the test in step 1, instead inject a disk error on a cache SSD, which will cause an entire disk group failure. Collect and measure the performance before and after the disk group failure.

- Repeat the test in step 1, instead force shutdown a host in the vSAN cluster. Collect and measure the performance before and after the host outage.

Test Results

Disk failure: Evaluate how vSAN handles a disk failure to ensure sustainability of MySQL workloads

Figure 17 and Figure 18 show the failure test result for a capacity disk failure, both with and without hosting the MySQL test data. For the first situation, the sustained performance dropped about 10%, from average 1,443 to average 1,303, and could maintain in a steady state after the failure happened. For the second situation, we observed close to zero performance impact, sustained TPS was 1,483 and 1,456 before and after the failure. vSAN by default will maintain two copies of each object if the FTT setting is 1, therefore it could tolerate single disk failure and sustain MySQL production workloads steadily.

Figure 17. Simulation of vSAN Disk Failure with Data

Figure 18. Simulation of vSAN Disk Failure without Data

Disk group failure: Evaluate how vSAN handles a disk group failure to ensure sustainability of MySQL workloads

Figure 19 shows the disk group failure situation. After we manually introduce an error on a cache SSD, the vSAN disk group will fail and stop service I/O request at once. Therefore, the partially-missing vSAN object will be put in the “degraded” state which will automatically trigger object rebuild on other disk groups. The rebuild bandwidth may cause potential performance impact on the MySQL production workload.

In this case, the sustained performance started from average 1,452, and after the failure happened, it dropped to about 1,000 TPS. Then as the rebuild process was initiated, the TPS curve fluctuated for a short while, and finally reached stability at an average of 1,295 TPS.

Figure 19. Simulation of vSAN Disk Group Failure

Host failure: Evaluate how vSAN handles a host outage to ensure sustainability of MySQL workloads

Figure 20 simulates a host outage situation. Before the failure happens, the sustained TPS was 1,467 on average, and when we manually shut down a host in the vSAN cluster, there was no obvious performance drop. This is because the host outage did not trigger a vSAN object rebuild instantly, instead those objects will be placed in “absent” state, so the sustained performance kept at steady state even after failure, with the average of 1,456 TPS. Those absent objects may impact performance (read operation can only be performed upon the remaining object) or even boost the performance (write operation will only need to be done on the single remaining object), depending on the read/write pattern of the workloads.

In this test case, the performance is about the same before and after the host outage happens. Notice the rebuild process will be triggered if the host outage is not recovered after 60 minutes by default. However, if the host comes back shortly, vSAN will also automatically trigger the rebuild process if there is data inconsistency between these objects.

Figure 20. Simulation of vSAN Host Outage

Best Practices

This section provides the recommended best practices for this solution.

vSAN All-Flash Best Practices

VMware vSAN Design and Sizing Guide provides a comprehensive set of guidelines for designing vSAN. A few key guidelines relevant to MySQL design considerations are provided below:

- Maintain host symmetry: It is recommended to use similarly configured and sized ESXi hosts for the vSAN cluster to avoid imbalance and potential issues.

- Utilize SPBM features: Always use vSAN SPBM features to manage MySQL databases on a virtual disk granularity, such as checksum for vSAN data integrity, fault tolerance settings, and QoS settings.

- Improve storage efficiency: The vSAN all-flash deduplication and compression feature helps improve storage efficiency while introducing minimum performance overhead for MySQL workloads. It is recommended to enable this feature before deploying MySQL on all-flash vSAN to save potential data re-arrangement. Notice the space saving ratio is subject to fluctuate when write-intensive workloads are applied.

- Erasure coding consideration: Enabling vSAN RAID 5/6 erasure coding helps further save storage capacity on the vSAN datastore. For write-intensive or performance demanding workloads, use the default setting instead.

- Stripe width consideration: The stripe width setting may bring about performance improvement for MySQL workloads as separating the components more evenly on each vSAN disk groups. It is recommended to perform strict test since the improvement depends on multiple factors such as workload type or working set.

Refer to Performance Best Practices for VMware vSphere 6.5 for general guidance for vSphere configurations.

MySQL Parameters Best Practices

It is important to optimize the MySQL performance and protection method through configuring InnoDB parameters defined under the /etc/my.cnf configuration file.

It is recommended to test the parameters thoroughly in a test/dev environment before applying them to the MySQL production workloads.

- Plan your MySQL data directory and innodb tablespace home directory with data_dir and innodb_data_home_dir parameter, respectively.

- Set innodb_file_per_table to ON to enable InnoDB to store data and index on separate data files instead of system tables.

- For write-intensive MySQL workload, set the innodb_adaptive_flushing parameter to ON to avoid sudden dips in throughput such as sharp checkpoint.

- Increase the default MySQL innodb buffer pool with the innodb_buffer_pool_size parameter for performance consideration. The general guidance of this value is up to 70-80% of the server memory in case reserve memory overhead for OS consumption.

- Make sure each innodb buffer pool instance divided is at least 1GB to improve concurrency by setting the innodb_buffer_pool_instances parameter. If the buffer pool is less than 1 GB, set to 1 instead.

- Set innodb_log_files_in_group to default and recommended value (2). Pre-allocate the innodb log files by increasing the innodb_log_file_size parameter.

- For MySQL workload with large transactions, such as update, insert or delete many rows, increase innodb_log_buffer_size to avoid frequent log write operation to disks.

- Carefully configure the innodb_flush_log_at_trx_commit parameter. For full data integrity and transaction ACID requirements, keep the default value (1), otherwise you may set the number to (2) for better performance.

- For all-flash vSAN, set the innodb_flush_method to “O_DIRECT” may provide better performance against the default value “fsync”. Set the innodb_flush_neighbors to (1) to spread out write operations.

- For application level high availability consideration, you may start MySQL group replication deployment with three instances and add up more members as your demanding protection level grows.

For more details, please refer to InnoDB Startup Options and System Variables .

Conclusion

This section provides a summary of this reference architecture.

vSAN is optimized for modern all-flash storage with near-linear scalability, deduplication and compression for storage efficiency, compression, erasure coding capabilities and hardware fault tolerance that lower TCO while delivering mission-critical performance for MySQL workload.

In this reference architecture, we deployed both a single instance configuration and a group replication configuration for MySQL database running on a vSAN all-flash cluster.

We validated single VM scaling performance in the SysBench multi-threading test, and scale out performance for different MySQL virtual machine count. The test result showed a close-to-linearity performance capability for vSAN all-flash.

We also validated vSAN SPBM offering with data services for MySQL database. With vSAN all-flash deduplication and compression and erasure coding, users can choose to improve space efficiency with a minimized performance impact. Stripe width setting allows users to possibly improve performance with more evenly distributed MySQL virtual disk objects. What’s more, it is recommended to use CPU offloaded checksum feature to ensure MySQL data integrity on the vSAN level.

We proved that vSAN offers resiliency and high availability to Tier-1 applications with running workloads and guarantees business continuity of MySQL workloads.

Furthermore, we verified MySQL group replication feature with a single primary configuration, which helped provide application-level high availability to prevent any MySQL service failure or database software crashes.

Reference

This section provides vSAN, MySQL, and Quanta references.

See more vSAN, MySQL, and Quanta references:

- VMware vSAN Design and Sizing Guide

- VMware vSAN Network Design Guide

- MySQL

- QuantaPlex T41S-2U

About the Author and Contributors

This section provides a brief background on the authors of this solution guide.

Mark Xu, Senior Solutions Engineer in the Product Enablement team of the Storage and Availability Business Unit wrote the original version of this paper.

Catherine Xu, Senior Technical Writer in the Product Enablement team of the Storage and Availability Business Unit edited this paper to ensure that the contents conform to the VMware writing style.