Oracle RAC on VMware Cloud Foundation on Dell EMC VxRail

Executive Summary

Business Case

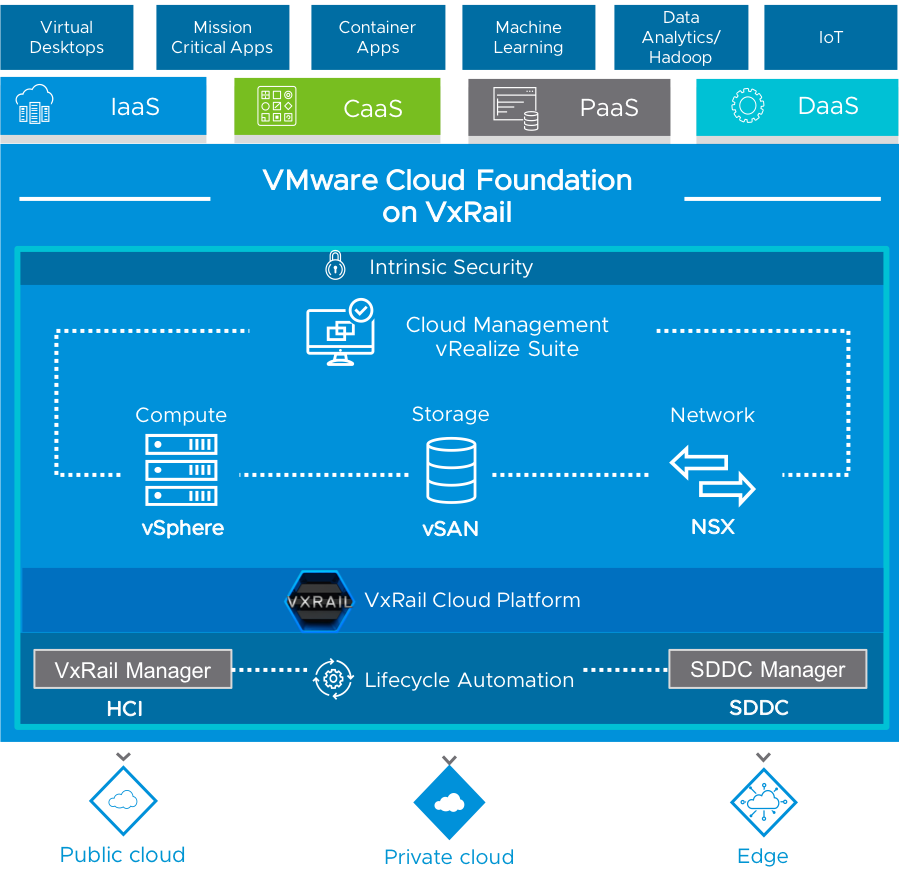

Customers who want to deploy virtualized Oracle RAC infrastructure are seeking a cost-effective, highly scalable, and easy-to-manage solution. Traditional infrastructure management involves many physical server installations, manual storage configuration, and network management. VMware Cloud Foundation instead accelerates infrastructure provisioning with a full-stack deployment of compute, storage, networking, and management. Because it deploys multiple workload domains automatically and reliably, it increases administrator productivity while reducing overall TCO and delivering a faster path to the hybrid cloud.

VMware Cloud Foundation is built on VMware’s leading hyperconverged architecture, VMware vSAN™, with all-flash performance and enterprise-class storage services including deduplication, compression, and erasure coding. vSAN implements a hyperconverged storage architecture that delivers elastic storage and simplifies storage management. VMware Cloud Foundation also delivers end-to-end security for all applications: micro-segmentation, distributed firewalls, and VPN (VMware NSX®); VM, hypervisor, and VMware vSphere® vMotion® encryption, plus AI-powered workload security and visibility (vSphere); and data-at-rest storage encryption (vSAN).

Figure 1. VMware Cloud Foundation on Dell EMC VxRail

VMware Cloud Foundation on Dell EMC VxRail™ builds upon native VxRail and Cloud Foundation capabilities with unique integration features, jointly engineered by Dell EMC and VMware, that simplify, streamline, and automate the operations of your entire SDDC from Day 0 through Day 2. The full-stack integration of Cloud Foundation on VxRail allows the lifecycles of both the HCI infrastructure layer and the VMware cloud software stack to be managed as one complete, automated, turnkey hybrid cloud experience, greatly reducing risk and increasing IT operational efficiency. This solution also allows administrators to perform cloud operations through a familiar set of tools, offering a consistent experience, a single vendor support relationship, and consistent SLAs across all cloud workloads: public cloud, private cloud, and edge deployments.

In this solution, we provide deployment procedures, design and sizing guidance, and best practices for enterprise infrastructure administrators and application owners who run virtualized Oracle RAC databases on the Cloud Foundation platform.

This solution was validated on VMware Cloud Foundation 3.8.1 and applies to VMware Cloud Foundation 3.8.1 and later.

Business Values

Here are the top five benefits of deploying Oracle databases in a VMware Cloud Foundation and VxRail environment:

- Rapid deployment and configuration: The native VxRail HCI deployment process and SDDC Manager orchestration automate the deployment of vCenter, NSX, and vRealize Suite above the ESXi and vSAN layers of VxRail, and deeply integrate day 0 to day 2 operations of the entire cloud infrastructure.

- High performance and scalable hyperconverged storage: Ensure consistent performance and predictable scalability for mission-critical Oracle OLTP workloads, which makes instance design and database sizing easier for Oracle database administrators.

- Automated Lifecycle Management and Continuously Validated States: Minimize Oracle database workload impact and downtime during necessary patching and upgrading of the full private cloud stack. VxRail HCI infrastructure Continuously Validated State update packages are combined with verified Cloud Foundation SDDC component update packages and applied using automated, self-managed services within the workload domain.

- End-to-End Security: Enhance compute, network and storage security with vSphere, NSX and vSAN technologies such as vSphere vMotion encryption, NSX micro-segmentation, and vSAN data-at-rest storage encryption for Oracle virtual machines. VxRail has security built in at every level of the integrated technology stack.

- Simple path to hybrid cloud: Connect private and public cloud seamlessly.

Key Results

This reference architecture showcases VMware Cloud Foundation on Dell EMC VxRail for operating and managing an Oracle RAC database in a fully integrated SDDC environment. The key results are as follows:

- VMware Cloud Foundation on Dell EMC VxRail simplifies and accelerates the deployment of the virtual infrastructure required to run OLTP workloads on Oracle RAC.

- VxRail provides near-linear scalability and predictable performance capability for OLTP workloads on Oracle RAC.

- Peak VM client IOPS on the storage side reached 100,000 IOPS for an 8TB RAC database, with read response times of 0.7 milliseconds or less and single-digit millisecond write response times.

- An Oracle RAC virtual infrastructure workload domain consisting of four VxRail P570F nodes can service more than one million transactions per minute with a transactional response time of 20 milliseconds or less at the database level.

- Performance remains predictable when scaling up the Oracle database size and the number of RAC nodes.

Note:

- The performance results in this solution were validated on VMware Cloud Foundation on Dell EMC VxRail, and can also be applied to VMware vSAN with similar configurations.

- Transactional response time measures end-to-end application transaction latency, including network latency, database service time, and I/O latency, rather than backend storage response time alone.

Audience

This reference architecture is intended for IT administrators, Oracle DBAs, and storage experts who are involved in the early phases of planning, design, and deployment of virtualized Oracle database workloads on VMware Cloud Foundation on Dell EMC VxRail. It assumes that the reader is familiar with the concepts and operations of Oracle Database and VMware Cloud Foundation components.

Technology Overview

VMware Cloud Foundation

VMware Cloud Foundation is an integrated software stack that bundles compute virtualization (VMware vSphere), storage virtualization (VMware vSAN), network virtualization (VMware NSX), and cloud management and monitoring (VMware vRealize Suite) into a single platform that can be deployed on premises as a private cloud or run as a service within a public cloud. This documentation focuses on the private cloud use case. VMware Cloud Foundation helps to break down the traditional administrative silos in data centers, merging compute, storage, network provisioning, and cloud management to facilitate end-to-end support for application deployment.

VMware Cloud Foundation on Dell EMC VxRail is a jointly engineered solution offering that provides best-in-class serviceability and lifecycle management capabilities for customers looking to automate the deployment and management of the full VMware Software Defined Datacenter (SDDC) stack on Dell EMC VxRail:

- VxRail integration with VCF delivers a simple and direct path to the hybrid cloud with one, complete, automated platform.

- VCF on VxRail delivers unique integrations with Cloud Foundation to offer a seamless, automated upgrade experience.

VMware vSphere

VMware vSphere is the next-generation infrastructure for next-generation applications. It provides a powerful, flexible, and secure foundation for business agility that accelerates the digital transformation to cloud computing and promotes success in the digital economy. vSphere 6.7 supports both existing and next-generation applications through its:

- Simplified customer experience for automation and management at scale

- Comprehensive built-in security for protecting data, infrastructure, and access

- Universal application platform for running any application anywhere

With VMware vSphere, customers can run, manage, connect, and secure their applications in a common operating environment, across clouds and devices.

VMware vSAN

VMware vSAN is the industry-leading software powering VMware’s software defined storage and powers VMware’s HCI solution. vSAN helps customers evolve their data center without risk, control IT costs and scale to tomorrow’s business needs. vSAN, native to the market-leading hypervisor, delivers flash-optimized, secure storage for all of your critical vSphere workloads, and is built on industry-standard x86 servers and components that help lower TCO in comparison to traditional storage. It delivers the agility to easily scale IT and offers the industry’s first native HCI encryption.

vSAN continuously delivers performance improvements and availability SLAs on all-flash configurations with deduplication enabled. Latency-sensitive applications benefit from more predictable I/O latencies and higher sequential I/O throughput. Rebuild times after disk or node failures are shorter, which improves availability SLAs. vSAN also supports Cloud Native Storage, which provides comprehensive data management for stateful applications. With Cloud Native Storage, vSphere persistent storage integrates with Kubernetes.

vSAN simplifies day-1 and day-2 operations, enabling customers to quickly deploy and extend cloud infrastructure and minimize maintenance disruptions. Stateful containers orchestrated by Kubernetes can leverage storage exposed by vSphere (vSAN, VMFS, NFS) while using standard Kubernetes volume, persistent volume, and dynamic provisioning primitives.

VMware NSX Data Center

VMware NSX Data Center is the network virtualization and security platform that enables the virtual cloud network, a software-defined approach to networking that extends across data centers, clouds, and application frameworks. With NSX Data Center, networking and security are brought closer to the application wherever it’s running, from virtual machines to containers to bare metal. Like the operational model of VMs, networks can be provisioned and managed independent of underlying hardware infrastructure. NSX Data Center reproduces the entire network model in software, enabling any network topology—from simple to complex multi-tier networks—to be created and provisioned in seconds. Users can create multiple virtual networks with diverse requirements, leveraging a combination of the services offered via NSX or from a broad ecosystem of third-party integrations ranging from next-generation firewalls to performance management solutions, to build inherently more agile and secure environments. These services can be extended to a variety of endpoints within and across clouds.

In this solution, we use VMware NSX Data Center for vSphere to form the foundation of the software-defined data center (SDDC) and make operationalizing zero-trust security for Oracle RAC database applications attainable and efficient in both private and public cloud environments.

VMware vRealize Suite

vRealize Suite is a hybrid cloud management platform that helps IT enable developers to quickly build applications in any cloud with secure and consistent operations. It provides developer-friendly infrastructure, supporting both VMs and containers, and a common approach to hybrid and multi-cloud, supporting major public clouds such as Amazon Web Services, Azure, and Google Cloud Platform.

VMware vRealize Suite delivers an enterprise-proven, hybrid cloud management platform (CMP) that includes the following products:

- vRealize Automation—Self-service hybrid clouds, multi-cloud automation and governance, DevOps service delivery and Kubernetes cluster management.

- vRealize Operations—Powered by artificial intelligence and machine learning (AI/ML), continuous performance optimization, proactive capacity, and cost management, intelligent remediation and integrated compliance.

- vRealize® Log Insight™—Real-time log management and log analysis.

- vRealize Suite Lifecycle Manager—Automated installation, configuration, upgrade, patching, configuration management, drift remediation, health and content management for vRealize Suite.

Dell EMC VxRail

VxRail systems are jointly developed by Dell EMC and VMware and are the only fully integrated, preconfigured, and tested HCI system optimized for VMware vSAN technology for software defined storage. Managed through the ubiquitous VMware vCenter Server interface, VxRail provides a familiar vSphere experience that enables streamlined deployment and the ability to extend the use of existing IT tools and processes.

VxRail systems are fully loaded with integrated, mission-critical data services from Dell EMC and VMware, including compression, deduplication, replication, and backup. VxRail delivers resiliency and centralized-management functionality, enabling faster, better, and simpler management of consolidated workloads, virtual desktops, business-critical applications, and remote office infrastructure. As the exclusive hyperconverged infrastructure system from Dell EMC and VMware, VxRail is the easiest and fastest way to stand up a fully virtualized VMware environment. VxRail is the only HCI system on the market that fully integrates Intel-based Dell EMC PowerEdge Servers with VMware vSphere and vSAN. VxRail is jointly engineered with VMware and supported as a single product, delivered by Dell EMC. VxRail seamlessly integrates with existing (and optional) VMware ecosystem and cloud management solutions, including vRealize, NSX, Horizon, Platinum, and any solution that is part of the vast and robust vSphere ecosystem.

VxRail provides an entry point to the software defined datacenter (SDDC) for most workloads. Customers of all sizes and types can benefit from VxRail, including small- and medium-sized environments, remote and branch offices (ROBO), and edge departments, as well as providing a solid infrastructure foundation for larger datacenters.

In addition, nodes are available with different compute power, memory, and cache configurations to closely match the requirements of new and expanding use cases. As requirements grow, the system easily scales out and scales up in granular increments. Finally, because VxRail is jointly engineered, integrated, and tested, organizations can leverage a single source of support and remote services from Dell EMC.

VxRail essentials:

- A fully integrated, preconfigured, and tested hyperconverged infrastructure appliance that simplifies and extends VMware environments.

- Seamless integration with existing VMware ecosystem management solutions for streamlined deployment and management in VMware environments.

- Start small, with as few as three nodes.

- Single node scaling, storage capacity expansion, and vSphere license independence enable growth that meets business demands.

- Back up distributed applications or workloads with integrated data protection options, including RecoverPoint for VMs.

- A single point of global 24x7 support for both the hardware and the software.

VxRail systems enable organizations to start small and scale out as the IT organization transforms and adapts to managing converged infrastructure rather than silos. With a rich set of data services, including data protection, tiering to the cloud, and active-active datacenter support, VxRail can be the foundational infrastructure for IT. Best of all, you can simply add new systems into existing clusters (and decommission aging systems) to provide an evergreen HCI environment, without ever again having to worry about costly SAN data migrations. As organizations continue to transform to a cloud model, integration with the VMware vRealize Suite enables full cloud automation and service delivery capabilities.

The VxRail software layers use VMware technology for server virtualization and software defined storage. VxRail nodes are configured as ESXi hosts, and VMs and services communicate using the virtual switches for logical networking. VMware vSAN technology, implemented at the ESXi-kernel level, pools storage resources. This highly efficient SDS layer consumes minimal system resources, making more resources available to support user workloads. The kernel-level integration also dramatically reduces the complexities involved in infrastructure management. vSAN presents a familiar datastore to the nodes in the cluster and Storage Policy Based Management provides the flexibility to easily configure the appropriate level of service for each VM.

VxRail HCI System Software, the VxRail management platform, is a strategic advantage for VxRail and further reduces operational complexity. VxRail HCI System Software provides out-of-the-box automation and orchestration for day 0 to day 2 system-based operational tasks, which reduces the overall IT OpEx required to manage the stack. No build-it-yourself HCI solution provides this level of lifecycle management, automation, and operational simplicity. With VxRail HCI System Software, upgrades are simple and automated with a single click. You can sit back and relax knowing you are going from one known good state to the next, inclusive of all the managed software and hardware component firmware. No longer do you need to verify hardware compatibility lists, run test and development scenarios, sequence and trial upgrades, and so on. The heavy lifting of sustaining and lifecycle management is already done for you.

Within the VxRail HCI System Software, the VxRail Manager plugin presents a simple integrated dashboard interface on vCenter Server for infrastructure monitoring and automation of lifecycle management tasks such as software upgrades. Since VxRail nodes function as ESXi hosts, vCenter Server is used for VM-related management, automation, monitoring, and security.

VxRail systems are optimized for VMware vSAN software, which is fully integrated in the vSphere kernel and provides full-featured, cost-effective software-defined storage. vSAN implements an efficient architecture built directly into the hypervisor. This distinguishes vSAN from solutions that typically install a virtual storage appliance (VSA) that runs as a guest VM on each host. Embedding vSAN in the ESXi kernel layer has advantages in performance and memory requirements. It has little impact on CPU utilization (less than 10 percent) and self-balances based on workload and resource availability. It presents storage as a familiar datastore construct and works seamlessly with other vSphere features such as VMware vSphere vMotion.

VMware Cloud Foundation on Dell EMC VxRail

VMware Cloud Foundation on Dell EMC VxRail, the foundation for Dell Technologies Cloud Platform, provides the simplest path to the hybrid cloud through a fully integrated platform that leverages native VxRail hardware and software capabilities as well as unique VxRail integrations (such as vCenter plugins and Dell EMC networking integration) to deliver a turnkey user experience with full stack integration. Full stack integration enables customers to experience both the HCI infrastructure layer and cloud software stack in one, complete, automated lifecycle, turnkey experience.

VMware Cloud Foundation on Dell EMC VxRail provides a consistent hybrid cloud experience unifying customer public and private cloud platforms under a common operating model and management framework. Customers can operate both their public and private platforms using one set of tools and processes with a single management view and provisioning experience across both platforms. Customers are able to build, run, and manage a broad set of workloads from traditional and legacy applications to virtual desktops, as well as next generation workloads from artificial intelligence and machine learning to cloud native and container-based workloads.

What allows Cloud Foundation to build a complete software-defined data center on VxRail is SDDC Manager and VxRail Manager software integration. SDDC Manager orchestrates the deployment, configuration, and lifecycle management of vCenter, NSX, and vRealize Suite above the ESXi and vSAN layers of VxRail. It enables VxRail clusters to serve as a resource platform for workload domains or as multi-cluster workload domains. It can also automatically install VMware PKS framework for container-based workloads and VMware Horizon for virtual desktop workloads. Integrated with the SDDC Manager management experience, VxRail Manager is used to deploy, configure, and lifecycle manage ESXi, vSAN and HCI infrastructure hardware firmware. VxRail lifecycle management is accomplished using fully integrated and seamless SDDC Manager orchestration that leverages VxRail Manager to execute it natively.

Through the standardized hardware and software architecture integrated into Cloud Foundation on VxRail, customers can build heterogeneous workloads. Using SDDC Manager, infrastructure building blocks based on native VxRail clusters are created enabling customers to scale up and scale out incrementally.

VxRail Manager delivers automation, lifecycle management, support, and serviceability capabilities integrated with SDDC Manager and vCenter to extend the Cloud Foundation management experience and simplify operations. VxRail Manager functionality is available in vCenter through an HTML5 plugin.

All Cloud Foundation on VxRail lifecycle patching and upgrade operations are orchestrated using SDDC Manager. As part of this orchestration, SDDC Manager automatically discovers when new VxRail and Cloud Foundation updates are available for download and proactively notifies the administrator in the user interface. All updates are scheduled and orchestrated by SDDC Manager, and may be executed by either SDDC Manager or VxRail Manager using integrated APIs.

VMware Cloud Foundation on Dell EMC VxRail can be delivered as either a cluster of nodes that leverages the customer’s existing network infrastructure or as an integrated rack system with or without integrated networking. With rack assembly services from Dell EMC, VxRail rack integrated systems can be delivered with customer-chosen rack and networking component options.

Dell EMC Services accelerates the deployment of Cloud Foundation on VxRail with a full range of integration and implementation services. Dell EMC Services helps IT organizations quickly realize the value of their investment both by deploying the hardware and software components of Cloud Foundation on VxRail, as well as achieving IaaS through integration of this integrated cloud platform into their application portfolio, operating model, and enterprise infrastructure.

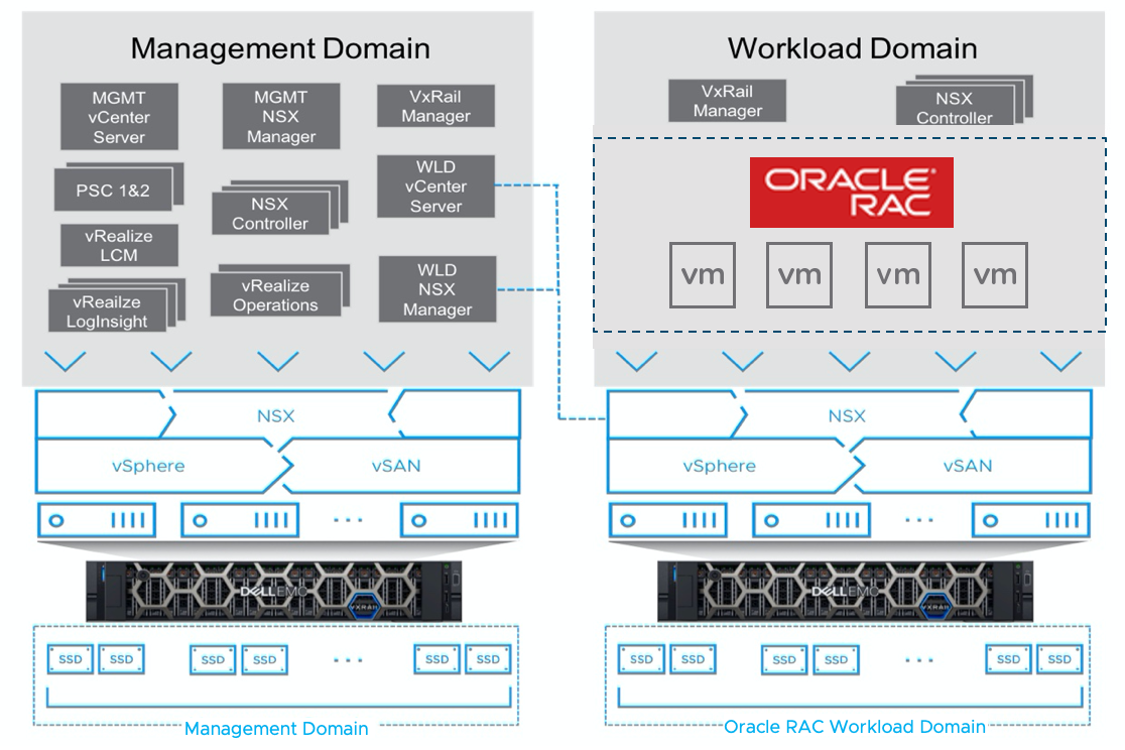

Figure 2. VxRail Manager and SDDC Manager Integration

Oracle 19c and Oracle Real Application Clusters (RAC)

Oracle Database 19c is the long-term support release of the Oracle Database 12c and 18c family of products, offering customers the best performance, scalability, reliability, and security for all their operational and analytical workloads. Oracle 19c has become a popular choice for many customers' mission-critical workloads.

Oracle RAC is an option to the award-winning Oracle Database Enterprise Edition. Oracle RAC is a cluster database with a shared cache architecture that overcomes the limitations of traditional shared-nothing and shared-disk approaches to provide highly scalable and available database solutions for all business applications.

Oracle RAC provides customers with the highest database availability by removing individual database servers as a single point of failure. In a clustered server environment, the database itself is shared across a pool of servers, which means that if any server in the server pool fails, the database continues to run on surviving servers.

Oracle RAC enables the transparent deployment of Oracle Databases across a pool of clustered servers. This enables customers to easily re-deploy their single server Oracle Database onto a cluster of database servers, and thereby take full advantage of the combined memory capacity and processing power the clustered database servers provide.

Oracle RAC provides all the software components required to easily deploy Oracle Databases on a pool of servers and take full advantage of the performance, scalability, and availability that clustering provides. Oracle RAC utilizes Oracle Grid Infrastructure as the foundation for Oracle RAC database systems. Oracle Grid Infrastructure includes Oracle Clusterware and Oracle Automatic Storage Management (ASM) that enable efficient sharing of server and storage resources in a highly available and scalable database cloud environment.

Solution Configuration

Architecture Diagram

In this solution, we deployed an Oracle 19c RAC environment on VMware Cloud Foundation on VxRail. We created a 4-node VxRail P570F cluster for the VMware Cloud Foundation management domain, running multiple virtual machines and appliances, including:

- One management vCenter Server appliance and two Platform Services Controllers

- One management NSX Manager and three NSX controllers (NSX-V)

- One VxRail Manager appliance

- One vRealize Lifecycle Manager appliance

- Two vRealize Operations appliances

- Three vRealize Log Insight appliances

- One Virtual Infrastructure (VI) workload NSX Manager

- One VI workload vCenter Server appliance

For the Oracle RAC workload domain, we created another 4-node VxRail P570F cluster and deployed four Oracle RAC virtual machines, each allocated 24 vCPUs and 192GB of memory with 100 percent memory reservation.

The OLTP workload was used for the performance test against the four Oracle RAC nodes. A VxRail Manager and three workload NSX-V controllers were also deployed in the workload domain cluster, as shown in Figure 3.
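As a rough sizing check, the sketch below computes per-host headroom for the RAC VMs using the P570F node specification in Table 1 (2 x 28 cores, 512GB RAM). It assumes one RAC VM per VxRail host, which this section does not state explicitly; the class and function names are illustrative only. The VxRail Manager and NSX-V controller VMs in the same cluster would consume part of the remaining capacity.

```python
# Hedged sketch: per-host headroom for one Oracle RAC VM.
# Assumption (not stated in the source): one RAC VM is placed on each host.
from dataclasses import dataclass


@dataclass(frozen=True)
class Host:
    physical_cores: int
    memory_gb: int


@dataclass(frozen=True)
class RacVm:
    vcpus: int
    memory_gb: int
    memory_reservation_pct: int  # 100 means the full VM memory is reserved


def host_headroom(host: Host, vm: RacVm) -> dict:
    """Return reserved/unreserved host memory and the vCPU-to-core ratio."""
    reserved_gb = vm.memory_gb * vm.memory_reservation_pct / 100
    return {
        "reserved_memory_gb": reserved_gb,
        "unreserved_memory_gb": host.memory_gb - reserved_gb,
        "vcpu_to_core_ratio": vm.vcpus / host.physical_cores,
    }


# P570F node from Table 1 and the RAC VM sizing from this section.
node = Host(physical_cores=2 * 28, memory_gb=512)
rac_vm = RacVm(vcpus=24, memory_gb=192, memory_reservation_pct=100)
print(host_headroom(node, rac_vm))
```

With a vCPU-to-core ratio below 1, the RAC VM is not oversubscribing physical cores on its host under this placement assumption.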

Figure 3. Oracle RAC on VMware Cloud Foundation

Hardware Resources

In this reference architecture, we used a total of eight VxRail P570F nodes, each configured with two disk groups; each disk group consists of a single cache-tier write-intensive SAS SSD and four capacity-tier read-intensive SAS SSDs.

Each VxRail node in the cluster had the configuration shown in Table 1.

Table 1. Hardware Configuration for VxRail P570F

| Property | Specification |
|---|---|
| VxRail model | 8 x VxRail P570F |
| CPU | 2 x Intel(R) Xeon(R) Platinum 8180M CPU @ 2.50GHz, 28 cores each |
| RAM | 512GB |
| Network adapter | Broadcom BCM57414 NetXtreme-E 10Gb/25Gb RDMA Ethernet Controller |
| Storage adapter | Dell HBA330 Adapter |
| Cache disks | 2 x 800GB Write Intensive SAS SSDs |
| Capacity disks | 8 x 3.84TB Read Intensive SAS SSDs |
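The disk layout above determines raw vSAN capacity. In an all-flash vSAN disk group the cache-tier SSD does not add datastore capacity, so only the capacity-tier drives are counted. The sketch below is a back-of-the-envelope calculation only; it ignores storage policy overhead, vSAN metadata, and any recommended slack space.

```python
# Sketch: raw capacity-tier space of the VxRail P570F configuration in Table 1.
# Cache SSDs are excluded because they do not contribute datastore capacity.

DISK_GROUPS_PER_NODE = 2
CAPACITY_SSDS_PER_GROUP = 4
CAPACITY_SSD_TB = 3.84  # decimal terabytes, as quoted for the drives


def raw_capacity_tb(nodes: int) -> float:
    """Raw capacity-tier space contributed by `nodes` hosts, in TB."""
    per_node = DISK_GROUPS_PER_NODE * CAPACITY_SSDS_PER_GROUP * CAPACITY_SSD_TB
    return nodes * per_node


print(f"per node: {raw_capacity_tb(1):.2f} TB")                 # 30.72 TB
print(f"4-node workload domain: {raw_capacity_tb(4):.2f} TB")   # 122.88 TB
```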

Software Resources

Table 2 shows the software resources used in this solution.

Table 2. Software Resources

| Software | Version | Purpose |
|---|---|---|
| VMware Cloud Foundation on Dell EMC VxRail | VCF 3.8.1, VxRail 4.7.300 | A unified SDDC platform on Dell EMC VxRail that brings together VMware ESXi, vSAN, NSX, and optionally vRealize Suite components into a natively integrated stack to deliver enterprise-ready cloud infrastructure for the private and public cloud. See the BOM of VMware Cloud Foundation on VxRail for details. |
| Oracle Linux | Enterprise Edition 7.5 | Operating system |
| Oracle Database | 19c | Database server platform |
| Swingbench | 2.6 | Oracle OLTP data population and workload generation tool |

Network Configuration

Figure 4 shows the VMware vSphere Distributed Switch configuration for the Oracle RAC VI workload domain of VMware Cloud Foundation on VxRail. Two 25 GbE vmnics were used, with different teaming policies configured per port group.

Figure 4. Virtual Distributed Switch Configuration

The NSX-V controllers resided on the management port group of the vDS, and the VxRail Manager was on a separate VxRail management port group. The Oracle RAC virtual machines were configured with a VM public network and a VM private network on the vDS. vSphere vMotion, vSAN, and the VXLAN VTEP for NSX-V each had a dedicated port group, as shown in Table 3.

Table 3. Virtual Distributed Switch Teaming Policy for 2x25 GbE Profile

| Port Group | Teaming Policy | VMNIC0 | VMNIC1 |
|---|---|---|---|
| Management network | Route Based on Physical NIC load | Active | Active[1] |
| VxRail Management | Route Based on Originating Virtual Port | Active | Standby |
| VM public network | Active and standby failover | Active | Standby |
| VM private network (RAC Interconnect) | Active and standby failover | Standby | Active |
| vSphere vMotion | Route Based on Physical NIC load | Active | Active |
| vSAN | Route Based on Physical NIC load | Active | Active |
| VXLAN VTEP | Route Based on Originating Virtual Port | Active | Active |

[1] For more details, refer to the VMware Cloud Foundation on VxRail Architecture Guide.
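One design point in Table 3 is that the RAC public network and the RAC interconnect use opposite active/standby uplinks, so in normal operation the two traffic types land on different 25 GbE ports. The sketch below encodes Table 3 as plain Python data (it is not a vSphere API object) and checks that property; the `Teaming` type and helper name are illustrative.

```python
# Hedged sketch: Table 3 as plain data, plus a steady-state uplink check.
from typing import NamedTuple


class Teaming(NamedTuple):
    policy: str
    vmnic0: str  # "Active" or "Standby"
    vmnic1: str


PORT_GROUPS = {
    "Management network": Teaming("Route Based on Physical NIC load", "Active", "Active"),
    "VxRail Management": Teaming("Route Based on Originating Virtual Port", "Active", "Standby"),
    "VM public network": Teaming("Active and standby failover", "Active", "Standby"),
    "VM private network (RAC Interconnect)": Teaming("Active and standby failover", "Standby", "Active"),
    "vSphere vMotion": Teaming("Route Based on Physical NIC load", "Active", "Active"),
    "vSAN": Teaming("Route Based on Physical NIC load", "Active", "Active"),
    "VXLAN VTEP": Teaming("Route Based on Originating Virtual Port", "Active", "Active"),
}


def active_uplinks(t: Teaming) -> set:
    """Return the vmnics marked Active for one port group."""
    states = {"vmnic0": t.vmnic0, "vmnic1": t.vmnic1}
    return {nic for nic, state in states.items() if state == "Active"}


public = active_uplinks(PORT_GROUPS["VM public network"])
interconnect = active_uplinks(PORT_GROUPS["VM private network (RAC Interconnect)"])

# Every port group should have at least one active uplink.
assert all(active_uplinks(t) for t in PORT_GROUPS.values())
print(sorted(public), sorted(interconnect), public.isdisjoint(interconnect))
```

The check covers only the steady state: if one uplink fails, both RAC networks fail over to the surviving vmnic and share it.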

VMware Cloud Foundation Management Domain Virtual Machine Configuration

Table 4 describes the VMware Cloud Foundation management domain virtual machine configuration in this reference architecture, showing how much compute capacity the management domain requires.

Table 4. Management Domain Virtual Machine Configuration

| VM Role | vCPU | Memory (GB) | VM Count |
|---|---|---|---|
| Management Domain VxRail Manager | 2 | 8 | 1 |
| Management Domain vCenter Server | 16 | 24 | 1 |
| Platform Services Controller | 2 | 4 | 2 |
| SDDC Manager | 4 | 16 | 1 |
| Management Domain NSX Manager | 4 | 16 | 1 |
| Management Domain NSX Controller | 4 | 4 | 3 |
| VI Workload Domain vCenter Server | 16 | 24 | 1 |
| VI Workload Domain NSX Manager | 4 | 16 | 1 |
| vRealize Suite Lifecycle Manager | 4 | 16 | 1 |
| vRealize Operations | 8 | 32 | 2 |
| vRealize Log Insight | 8 | 16 | 3 |
| Cloud Builder | 4 | 8 | 1 |
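To turn Table 4 into a single sizing figure, the sketch below multiplies each role's per-VM vCPU and memory by its VM count and sums the results. It counts only the VMs listed in the table and does not include hypervisor or vSAN overhead.

```python
# Sketch: total vCPU and memory for the management domain VMs in Table 4.
# Each entry is (vCPU per VM, memory GB per VM, VM count).
MGMT_VMS = {
    "Management Domain VxRail Manager": (2, 8, 1),
    "Management Domain vCenter Server": (16, 24, 1),
    "Platform Services Controller": (2, 4, 2),
    "SDDC Manager": (4, 16, 1),
    "Management Domain NSX Manager": (4, 16, 1),
    "Management Domain NSX Controller": (4, 4, 3),
    "VI Workload Domain vCenter Server": (16, 24, 1),
    "VI Workload Domain NSX Manager": (4, 16, 1),
    "vRealize Suite Lifecycle Manager": (4, 16, 1),
    "vRealize Operations": (8, 32, 2),
    "vRealize Log Insight": (8, 16, 3),
    "Cloud Builder": (4, 8, 1),
}


def totals(vms: dict) -> tuple:
    """Return (total VMs, total vCPUs, total memory in GB)."""
    count = sum(n for _, _, n in vms.values())
    vcpus = sum(cpu * n for cpu, _, n in vms.values())
    mem_gb = sum(mem * n for _, mem, n in vms.values())
    return count, vcpus, mem_gb


print(totals(MGMT_VMS))  # (18, 110, 260)
```

For comparison, the four P570F management hosts provide 224 physical cores and 2,048GB of RAM in total according to Table 1.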

vSAN SPBM Policy

vSAN provides storage-level data protection with a failures to tolerate (FTT) setting of one or more. In the SPBM policy for the shared disks (data, FRA, online redo log, and CRS), we used RAID-1 with 100 percent object space reservation, and kept the default vSAN policy for the OS disks. RAID-1 mirroring is the recommended failure tolerance method for Oracle database VMDKs in mission-critical workloads, and pre-allocating space for Oracle database files avoids out-of-space conditions. The capacity is reserved upfront from the vSAN datastore.
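Because RAID-1 with FTT=n keeps n + 1 full copies of each object, and an object space reservation of 100 percent reserves that whole footprint at provisioning time, the reserved capacity is a simple multiple of the VMDK size. The sketch below illustrates that arithmetic; it ignores witness components and vSAN metadata overhead, and the function name is hypothetical.

```python
# Hedged sketch: vSAN capacity reserved for a VMDK under RAID-1 mirroring.
# FTT=n keeps n + 1 copies; OSR is the percentage reserved up front.
# Witness components and metadata overhead are ignored.


def raid1_reserved_gb(vmdk_gb: float, ftt: int = 1, osr_pct: int = 100) -> float:
    """Capacity reserved on the vSAN datastore at provisioning time."""
    if ftt < 1:
        raise ValueError("RAID-1 mirroring requires FTT >= 1")
    copies = ftt + 1
    return vmdk_gb * copies * osr_pct / 100


# A 1024GB Oracle data VMDK with FTT=1 and OSR=100:
print(raid1_reserved_gb(1024))          # 2048.0
print(raid1_reserved_gb(1024, ftt=2))   # 3072.0
```

With OSR=0 (thin provisioning), nothing is reserved up front and capacity is consumed only as data is written, which is why pre-allocation is what protects against out-of-space conditions.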

For ASM disk group redundancy, we used external redundancy. With external redundancy, Oracle ASM does not provide any redundancy for the disk group; instead, the underlying storage (here, vSAN RAID-1) provides the redundancy.

Oracle RAC VM and Database Storage Configuration

Each Oracle RAC VM runs Oracle Enterprise Linux (OEL) 7.5 and is configured with 24 vCPUs and 192GB of memory. The SGA is set to 128GB and the PGA to 48GB.

For the large database, we created twelve 1024GB VMDKs for the data disk group, one 750GB VMDK for the FRA disk group, and one 500GB VMDK for the REDO disk group. These disks were shared by the four Oracle RAC nodes. In addition, a 3TB disk was created on one of the RAC nodes for the Oracle Data Pump export directory.

The Oracle ASM data disk group is configured with an allocation unit (AU) size of 1MB. The CRS, data, and FRA disk groups are placed on different VMware Paravirtual SCSI (PVSCSI) controllers, with the data disks split across two controllers. The redo logs are in a separate disk group that shares a controller with FRA. Table 5 shows the ASM disk group configuration.

Table 5. Oracle RAC ASM Disk Group Configuration

| ASM Disk Group Name | Paravirtual SCSI Controller | Size (GB) x Number of VMDKs | Total Storage (GB) |
|---|---|---|---|
| Oracle Grid Infrastructure CRS and voting disk | SCSI 0 | 50 x 1 | 50 |
| Database data disks* | SCSI 1 | 1024 x 6 | 6144 |
| Database data disks* | SCSI 2 | 1024 x 6 | 6144 |
| FRA | SCSI 3 | 750 x 1 | 750 |
| Online REDO | SCSI 3 | 500 x 1 | 500 |

*Note: For a 1.5TB schema, three 1TB disks in the data disk group are sufficient; increase the number of disks according to actual requirements.
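The sketch below summarizes Table 5 per ASM disk group and per PVSCSI controller, which makes it easy to see how the I/O-heavy data disks are spread across two controllers. The short disk group labels (CRS, DATA, FRA, REDO) are shorthand used only in this sketch. The 3TB Data Pump disk is not shared and is not part of Table 5, so it is excluded.

```python
# Sketch: Table 5 summarized per ASM disk group and per PVSCSI controller.
from collections import defaultdict

# (ASM disk group, PVSCSI controller, VMDK size in GB, VMDK count) from Table 5.
LAYOUT = [
    ("CRS", "SCSI 0", 50, 1),
    ("DATA", "SCSI 1", 1024, 6),
    ("DATA", "SCSI 2", 1024, 6),
    ("FRA", "SCSI 3", 750, 1),
    ("REDO", "SCSI 3", 500, 1),
]


def summarize(layout):
    """Return total GB per disk group and per controller."""
    by_group = defaultdict(int)
    by_controller = defaultdict(int)
    for group, controller, size_gb, count in layout:
        by_group[group] += size_gb * count
        by_controller[controller] += size_gb * count
    return dict(by_group), dict(by_controller)


groups, controllers = summarize(LAYOUT)
print(groups)                # {'CRS': 50, 'DATA': 12288, 'FRA': 750, 'REDO': 500}
print(controllers)           # {'SCSI 0': 50, 'SCSI 1': 6144, 'SCSI 2': 6144, 'SCSI 3': 1250}
print(sum(groups.values()))  # 13588 (GB of shared storage before mirroring)
```

Under the RAID-1, FTT=1, OSR=100 policy described in the vSAN SPBM Policy section, the reserved vSAN footprint of these shared disks would be roughly twice this total, about 27,176GB, before metadata overhead.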

Storage provisioning from vSAN storage to Oracle:

- Configure the disks to use PVSCSI controllers. In our configuration, we added three additional PVSCSI controllers to each virtual machine.

- Create a VM storage policy to apply to the virtual disks used as the RAC shared storage. In our configuration, we created a policy with 100 percent object space reservation (OSR=100).

- Create the shared disks as eager-zeroed thick. For the configuration steps, see VMware KB 2121181.

Note: Starting with VMware vSAN 6.7 Patch 01, Oracle RAC on vSAN does NOT require the shared VMDKs to be Eager Zero Thick provisioned (OSR=100) for multi-writer mode to be enabled. This reference architecture uses a vSAN version earlier than 6.7 Patch 01, so this relaxation does not apply here.

- Attach the shared disks to all the VMs, and explicitly enable the multi-writer mode for all the VMs and virtual disks.

- Apply the VM storage policy to the shared disks.
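The steps above are normally performed in the vSphere Client. To show what they produce in a VM's configuration, the sketch below emits the corresponding `.vmx` entries: `scsiX.virtualDev = "pvscsi"` for each controller and `scsiX:Y.sharing = "multi-writer"` for each shared disk. Some details are assumptions rather than values from the paper: the unit numbering, the OS disk sitting at unit 0 on SCSI 0, and the layout dictionary. Unit 7 is skipped because it is reserved for the SCSI controller itself.

```python
# Hypothetical layout (bus -> (first unit, shared VMDK count)) derived from Table 5.
# SCSI 0 is assumed to carry the OS disk at unit 0, so the shared CRS disk starts at unit 1.
SHARED_LAYOUT = {
    0: (1, 1),  # CRS and voting disk
    1: (0, 6),  # data disks
    2: (0, 6),  # data disks
    3: (0, 2),  # FRA and online REDO
}
RESERVED_UNIT = 7  # unit 7 on each SCSI bus belongs to the controller itself


def vmx_lines(layout):
    """Return .vmx entries: PVSCSI controller type plus multi-writer sharing per shared disk."""
    lines = []
    for bus, (first_unit, count) in sorted(layout.items()):
        lines.append(f'scsi{bus}.virtualDev = "pvscsi"')
        unit, placed = first_unit, 0
        while placed < count:
            if unit != RESERVED_UNIT:  # never place a disk on the controller's own unit
                lines.append(f'scsi{bus}:{unit}.sharing = "multi-writer"')
                placed += 1
            unit += 1
    return lines


if __name__ == "__main__":
    for line in vmx_lines(SHARED_LAYOUT):
        print(line)
```

The same sharing entries must be present on every RAC VM that attaches the shared disks.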

DB Size and Scale

We generated the dataset with the Swingbench Order Entry schema using a scale factor of 1TB. A schema is a collection of logical structures of data, or schema objects. Each schema is owned by a database user and has the same name as that user, and each user owns a single schema. After data loading, the schema was about 1.5TB in total. We used Oracle Data Pump to duplicate the Order Entry schema into multiple copies under different users. We then tested database performance with multiple test clients generating the workload against different schemas at the same time. We report the aggregated transactions per minute (TPM) and the average latency. As shown in Figure 5, we tested up to five schemas, about 7.5TB of schema data in a database of about 8TB.
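The schema duplication above can be sketched as one schema-mode export followed by one import per copy, using the Data Pump `REMAP_SCHEMA` parameter. Several names are assumptions and do not come from the paper: the source user `SOE` (Swingbench's usual Order Entry user), the directory object `DP_DIR` on the export disk, the copy names, and `PARALLEL=8`. The script only builds the command strings and tracks the approximate schema volume.

```python
# Sketch: build Data Pump commands that copy the Order Entry schema to new users.
# Hypothetical names: SOE (source user), DP_DIR (directory object), SOE2..SOE5 (copies).
SCHEMA_TB = 1.5   # approximate size of one Order Entry schema copy (from the text)
SOURCE = "SOE"
DIRECTORY = "DP_DIR"


def export_cmd(schema=SOURCE, directory=DIRECTORY, parallel=8):
    """Schema-mode export into multiple dump files (%U numbers the files)."""
    return (f"expdp system SCHEMAS={schema} DIRECTORY={directory} "
            f"DUMPFILE={schema.lower()}_%U.dmp PARALLEL={parallel} "
            f"LOGFILE={schema.lower()}_exp.log")


def import_cmd(target, schema=SOURCE, directory=DIRECTORY, parallel=8):
    """Import the same dump under a different user via REMAP_SCHEMA."""
    return (f"impdp system DIRECTORY={directory} "
            f"DUMPFILE={schema.lower()}_%U.dmp REMAP_SCHEMA={schema}:{target} "
            f"PARALLEL={parallel} LOGFILE={target.lower()}_imp.log")


if __name__ == "__main__":
    print(export_cmd())
    for n in range(2, 6):
        print(import_cmd(f"{SOURCE}{n}"))
    for copies in range(1, 6):
        print(f"{copies} schema(s): ~{copies * SCHEMA_TB:.1f} TB")
```

Five copies at about 1.5TB each give the roughly 7.5TB of schema data tested.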

Figure 5. Oracle RAC DB Size and Scale

Solution Validation

Solution Overview

This solution validation covers configuring and monitoring the underlying infrastructure and running workloads against the Oracle RAC database. We measured OLTP workload performance, which reflects one of the key requirements for an HCI solution, although performance was not the only purpose of the validation.

In this solution validation, we demonstrated deploying VxRail for the VMware Cloud Foundation management domain and for a virtual infrastructure (VI) workload domain dedicated to the Oracle RAC environment. We first validated OLTP performance against a database with a 1.5TB schema while scaling from one to four RAC nodes. We then validated OLTP performance while increasing the schema size linearly from 1.5TB up to 7.5TB.

In this performance validation, we measured the performance of the Swingbench OLTP (Order Entry, or OE) workload against the Oracle RAC database. We adjusted the number of Swingbench users to maximize TPM while keeping the response time at or below 20 milliseconds, our designed optimal response time.
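The tuning rule above can be sketched as follows. Among Swingbench runs at different user counts, the sketch keeps the run with the highest average TPM whose average response time stays within the 20 ms target. The `Run` type, `best_run` helper, and run values are hypothetical. They illustrate the selection logic only and are not measured results.

```python
# Sketch: pick the Swingbench user count that maximizes TPM within the latency target.
from dataclasses import dataclass
from typing import Optional, Sequence

TARGET_RT_MS = 20.0  # designed optimal response time from the text


@dataclass(frozen=True)
class Run:
    users: int
    avg_tpm: int
    avg_rt_ms: float


def best_run(runs: Sequence[Run], target_ms: float = TARGET_RT_MS) -> Optional[Run]:
    """Highest-TPM run whose average response time is within target; None if none qualify."""
    eligible = [r for r in runs if r.avg_rt_ms <= target_ms]
    return max(eligible, key=lambda r: r.avg_tpm, default=None)


if __name__ == "__main__":
    runs = [  # hypothetical values, not measured results
        Run(users=200, avg_tpm=600_000, avg_rt_ms=12.5),
        Run(users=300, avg_tpm=800_000, avg_rt_ms=17.9),
        Run(users=400, avg_tpm=880_000, avg_rt_ms=24.3),
    ]
    print(best_run(runs))  # Run(users=300, avg_tpm=800000, avg_rt_ms=17.9)
```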

Note:

- Swingbench mimics a real-world workload: its TPM reflects application transactions (OLTP workload) rather than database transactions.

- See the Appendix for the instance configuration exported from the spfile on one of the RAC nodes.

Solution Validation Tools

We used the following monitoring tools and benchmark tools in the solution testing:

- Monitoring tools

vSAN Performance Service is used to monitor the performance of the vSAN environment, using the vSphere web client. The performance service collects and analyzes performance statistics and displays the data in a graphical format. You can use the performance charts to manage your workload and determine the root cause of problems.

vSAN Health Check delivers a simplified troubleshooting and monitoring experience for everything related to vSAN. Through the vSphere web client, it offers multiple vSAN-specific health checks, including cluster, data, limits, and physical disks.

Oracle AWR reports with Automatic Database Diagnostic Monitor (ADDM)

Automatic Workload Repository (AWR) collects, processes, and maintains performance statistics for problem detection and self-tuning purposes for Oracle database. This tool can generate a report for analyzing Oracle performance.

The Automatic Database Diagnostic Monitor (ADDM) analyzes data in the Automatic Workload Repository (AWR) to identify potential performance bottlenecks. For each of the identified issues, it locates the root cause and provides recommendations for correcting the problem.

We gathered AWR reports during every test to measure KPIs, including RAC node CPU utilization.

- Database generation and workload stress test tool

Swingbench is a free load generator (and set of benchmarks) designed to stress test an Oracle database (12c, 18c, 19c). Its Order Entry benchmark is based on the "oe" schema that ships with Oracle 12c, Oracle 18c, and Oracle 19c. This benchmark is designed specifically to test and stress both interconnects and memory. It is a highly CPU-intensive OLTP benchmark that stresses CPU and memory and, in a RAC environment, the interconnects between RAC nodes.

Swingbench reports the maximum and the average transactions per minute (TPM). Average TPM is the key transaction performance metric in our tests. The number of Swingbench users can be adjusted to maximize TPM at the desired latency.

Deploy VMware Cloud Foundation on VxRail

Overview

We deployed a four-node VxRail cluster for the VMware Cloud Foundation management cluster and a second four-node VxRail cluster for the VMware Cloud Foundation workload domain dedicated to the Oracle RAC environment. We highly recommend following the steps described in the VMware Cloud Foundation on Dell EMC VxRail documentation. For more details and instructions, contact VMware and Dell EMC Customer Services or your sales representative.

Management and VI Workload Domain

SDDC Manager is the centralized management software in VMware Cloud Foundation that automates the lifecycle of components, from bring-up and configuration to infrastructure provisioning and upgrades/patches. SDDC Manager complements vCenter Server and vRealize Suite products by delivering new functionality that helps cloud administrators build and maintain the SDDC.

Figure 7 shows the management domain and VI workload domain cluster created for Oracle RAC virtual machines in the SDDC Manager.

Figure 7. Management and Virtual Infrastructure Workload Domains for Oracle RAC in the SDDC Manager

Figure 8 shows the detailed view of Virtual Infrastructure Workload Domains for Oracle RAC environment after the deployment.

Figure 8. Detailed view of Virtual Infrastructure Workload Domains for Oracle RAC

4-node Oracle RAC Performance

Overview

The scalability validation evaluated the scale-out performance of the OLTP workload from one RAC node up to four RAC nodes. We validated scalability with a 2TB database containing an Order Entry schema of about 1.5TB.

We used Swingbench to generate Oracle OLTP workload (TPC-C like workload). Oracle RAC is set up with SCAN (Single Client Access Name) with three IP addresses. SCAN provides load balancing and failover for client connections to the database. SCAN works as a cluster alias for databases in the cluster.
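From the client side, SCAN means every connection targets one name rather than individual node addresses. The sketch below builds an EZConnect string and an Oracle Net connect descriptor for that name. The SCAN name and port come from the `remote_listener` parameter in the Appendix (`scan-19c:1521`). The service name `orcl` is an assumption based on `db_name`. `scan_addresses` simply resolves the name with `socket.getaddrinfo`, and on a correctly configured network it should return the three SCAN VIPs.

```python
# Sketch: client connect strings that go through SCAN instead of individual nodes.
import socket

SCAN_HOST = "scan-19c"  # from remote_listener in the Appendix pfile
SCAN_PORT = 1521
SERVICE = "orcl"        # assumed service name (matches db_name)


def ezconnect(host=SCAN_HOST, port=SCAN_PORT, service=SERVICE):
    return f"{host}:{port}/{service}"


def tns_descriptor(host=SCAN_HOST, port=SCAN_PORT, service=SERVICE):
    return ("(DESCRIPTION="
            f"(ADDRESS=(PROTOCOL=TCP)(HOST={host})(PORT={port}))"
            f"(CONNECT_DATA=(SERVICE_NAME={service})))")


def scan_addresses(host=SCAN_HOST, port=SCAN_PORT):
    """Resolve the SCAN name; with correct DNS this yields the SCAN VIP addresses."""
    infos = socket.getaddrinfo(host, port, proto=socket.IPPROTO_TCP)
    return sorted({info[4][0] for info in infos})


print(ezconnect())       # scan-19c:1521/orcl
print(tns_descriptor())
```

The SCAN listeners, not the client, decide which instance's local listener receives each connection. That redirection is where the load balancing happens.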

Validation and Results

- 4-node RAC performance result

As shown in Table 6, Swingbench reported an average of 1,024,000 TPM and an average response time of 19.8ms when running the workload against the 1.5TB schema.

Note that the TPM of the test run was rounded to the nearest 1,000.

Table 6. Oracle RAC Database Performance in TPM and Response Time

| Transactions per Minute (TPM) | Transaction Response Time (ms) |
|---|---|
| 1,024,000 | 19.8 |

The AWR report showed an average of 52k physical reads and 23k physical writes per second. The two I/O-related wait events were “log file sync” and “db file sequential read”. The average wait time was 2.7ms for “log file sync” and 1.1ms for “db file sequential read”.
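Here is the worked arithmetic for the AWR I/O figures above: total physical IOPS and the read share of the I/O mix. The helper name is illustrative.

```python
# Worked arithmetic: total physical IOPS and read share from the AWR averages.
def io_mix(read_iops: float, write_iops: float) -> tuple[float, float]:
    """Return (total IOPS, fraction of IOPS that are reads)."""
    total = read_iops + write_iops
    return total, read_iops / total


total, read_share = io_mix(52_000, 23_000)
print(f"{total:,.0f} IOPS, {read_share:.1%} reads")  # 75,000 IOPS, 69.3% reads
```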

During this workload, the per-VM CPU utilization reported in AWR ranged from 72.3 percent to 78.6 percent, averaging 74.8 percent, as shown in Table 7.

Table 7. Average CPU Utilization in the AWR Report

| RAC Node 1 | RAC Node 2 | RAC Node 3 | RAC Node 4 | Average |
|---|---|---|---|---|
| 72.3% | 71.6% | 72.6% | 78.6% | 74.8% |

We monitored vSAN performance with the vSAN Performance Service while the Oracle database handled the OLTP workload. As shown in Table 8, read latency ranged from 0.64ms to 0.70ms and write latency from 1.54ms to 2.56ms. IOPS fluctuated during the test run, ranging from 49,800 to 55,600 for reads and from 25,100 to 26,900 for writes.

Table 8. vSAN Performance during the Test Run

| Performance Metric | vSAN Client |
|---|---|
| Read IOPS | 49,800-55,600 |
| Write IOPS | 25,100-26,900 |
| Read Latency | 0.64ms-0.70ms |
| Write Latency | 1.54ms-2.56ms |

- Oracle RAC Scalability

Figure 8 shows TPM and response time as the node count increases from one to four. Average TPM increased along a near-linear trendline (dotted line) as RAC nodes were added, while response time was maintained at 20 milliseconds or less.

Note that perfectly linear scaling cannot be achieved by adding RAC nodes, because RAC is designed primarily for high availability. Waits across RAC nodes depend on access concurrency, instances, the network, and other factors. More nodes can therefore outperform fewer nodes when there is no resource contention among them. However, RAC is not as good as some other options when linear scale-up is the goal.
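One way to put a number on "near-linear" is scaling efficiency, E(n) = TPM(n) / (n × TPM(1)), where 1.0 means perfectly linear. This metric is our choice for illustration and is not one used in the paper. The per-node-count TPM values appear only graphically in the scalability figure, so the inputs below are hypothetical placeholders and should be replaced with the measured values.

```python
# Sketch: scaling efficiency relative to perfectly linear scale-out.
# E(n) = TPM(n) / (n * TPM(1)); 1.0 means each added node contributes a full node's TPM.
def scaling_efficiency(tpm_by_nodes: dict[int, float]) -> dict[int, float]:
    base = tpm_by_nodes[1]  # single-node TPM is the reference point
    return {n: tpm / (n * base) for n, tpm in sorted(tpm_by_nodes.items())}


example = {1: 300_000, 2: 570_000, 4: 1_020_000}  # hypothetical values, not measured results
for n, eff in scaling_efficiency(example).items():
    print(f"{n} node(s): {eff:.0%} of linear")
```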

Figure 8. Oracle RAC Performance Scalability

AWR reports captured instance CPU utilization during each test run. With a single RAC node serving the database, CPU utilization exceeded 90 percent. As more RAC nodes were added, average CPU utilization dropped below 75 percent. This shows that adding RAC nodes lowers average CPU utilization and frees compute resources for additional throughput.

Figure 9. Average CPU Utilization in the AWR

Table 9 shows the vSAN client performance metrics measured by the vSAN Performance Service. More RAC nodes drove more IOPS while producing more TPM.

Table 9. vSAN Performance from One RAC Node up to Four RAC nodes

| Performance Metric | 1 RAC Node | 2 RAC Nodes | 3 RAC Nodes | 4 RAC Nodes |
|---|---|---|---|---|
| Read IOPS | 25,100-25,600 | 28,510-33,800 | 31,400-37,900 | 49,800-55,600 |
| Write IOPS | 12,000-12,500 | 15,100-17,700 | 15,900-18,800 | 25,100-26,900 |
| Read Latency | 0.42ms-0.43ms | 0.63ms-0.67ms | 0.62ms-0.65ms | 0.64ms-0.70ms |
| Write Latency | 1.36ms-1.41ms | 1.57ms-2.07ms | 1.59ms-1.65ms | 1.54ms-2.56ms |

Summary

A prime advantage of Oracle RAC is higher performance from distributing the workload across more nodes, or Oracle RAC instances. This validation shows that an Oracle RAC database can deliver predictable TPM and transactional response time. More Oracle RAC nodes provide better performance: average CPU utilization decreased while performance increased near-linearly. We also demonstrated that using 1024GB virtual disks for the RAC database simplifies management without compromising performance.

DB Scalability and Performance

Overview

We used Oracle Data Pump to export and import the Order Entry schema, duplicating it to increase the database size. For the OLTP performance tests, we used different connections to run the workload against different schemas. The baseline schema size is about 1.5TB and reaches up to 7.5TB in total, which increases the database size to about 8TB. We tested OLTP performance at each schema scale and database size.

Validation and Results

As shown in Figure 10, Swingbench reported TPM at about the same level, approximately one million, across the different schema scales. The average response time was 20 milliseconds or less when running the workloads against the various schemas.

Figure 10. Oracle RAC Performance on Different Schema Scale and DB Size

We also checked physical I/O per second in AWR for each schema size. As shown in Figure 11, combined read and write IOPS ranged from 75 thousand to 98 thousand. This drove peak vSAN backend IOPS from 109 thousand to 131 thousand. At peak workload, the reported backend latency was less than 0.7ms for writes and 0.4ms for reads.

Figure 11. IOPS in the Oracle AWR on Different Schema Scale and DB Size

We also checked vSAN performance with the 8TB database holding 7.5TB of schemas. As reported for the vSAN client, IOPS ranged from 67,500 to 69,100 for reads and from 28,700 to 30,900 for writes. Peak read latency was less than 0.7ms, and peak write latency was less than 3.2ms.

Summary

Databases of different sizes kept TPM at the same level, about one million, with response time at 20ms or less. As the database size increased, the physical read and write IOPS reported by Oracle AWR increased near-linearly. This indicates more read and write I/O requests to the backend storage under the same configuration. Even so, vSAN provided excellent storage performance: sub-millisecond read latency and single-digit-millisecond write latency, with peak client IOPS of up to 100 thousand.

Best Practices

In this solution, we validated the Oracle RAC OLTP workload and measured the performance in TPM and transactional response time metrics.

The following recommendations provide the best practices and sizing guidance to run Oracle RAC on VMware Cloud Foundation on VxRail.

- Cloud management consideration:

- See vRealize Operations Manager Best Practices for details.

- See Architecting a VMware vRealize Log Insight Solution for VMware Cloud Providers for details.

- Compute consideration:

- Follow the Oracle DB Size Template on Sizing for RAC compute resource allocation

- Network consideration:

- Place management, vSphere vMotion, vSAN, VM Network, and VXLAN VTEP traffic on separate networks with different network ranges and VLAN IDs. Also use a separate VLAN for the Oracle RAC private network.

- For NSX Data Center for vSphere design best practices for workload domain, see VMware NSX for vSphere (NSX) Network Virtualization Design Guide.

- Storage consideration:

- Follow the generic Oracle on VMware Best Practices for environment settings.

- Follow VMware knowledge base article 1033570 to configure eager-zeroed thick (EZT) disks.
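The network consideration above (one network range and VLAN ID per traffic type, plus a separate VLAN for the RAC private interconnect) can be checked mechanically before deployment. In this sketch, the traffic-type names, VLAN IDs, and subnets are hypothetical examples and do not come from the tested environment. The check flags VLAN IDs outside 1-4094, VLAN IDs reused across traffic types, and overlapping subnets.

```python
# Sketch: sanity-check that each traffic type has its own VLAN ID and subnet.
# All VLAN IDs and subnets below are hypothetical examples.
import ipaddress

NETWORKS = {
    "management": (1611, "172.16.11.0/24"),
    "vmotion": (1612, "172.16.12.0/24"),
    "vsan": (1613, "172.16.13.0/24"),
    "vm_network": (1614, "172.16.14.0/24"),
    "vxlan_vtep": (1615, "172.16.15.0/24"),
    "oracle_rac_private": (1616, "192.168.16.0/24"),
}


def separation_problems(networks):
    """Return a list of human-readable problems; an empty list means the plan is separated."""
    problems = []
    seen_vlans = {}
    for name, (vlan, _cidr) in networks.items():
        if not 1 <= vlan <= 4094:
            problems.append(f"{name}: VLAN {vlan} out of range")
        if vlan in seen_vlans:
            problems.append(f"{name}: VLAN {vlan} also used by {seen_vlans[vlan]}")
        seen_vlans[vlan] = name
    nets = {name: ipaddress.ip_network(cidr) for name, (_vlan, cidr) in networks.items()}
    names = list(nets)
    for i, a in enumerate(names):
        for b in names[i + 1:]:
            if nets[a].overlaps(nets[b]):
                problems.append(f"{a} and {b} subnets overlap")
    return problems


print(separation_problems(NETWORKS) or "no shared VLANs or overlapping subnets found")
```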

Conclusion

VMware Cloud Foundation on Dell EMC VxRail is the ideal hybrid cloud platform for running Oracle RAC databases. Powered by VMware vSphere, vSAN, NSX Data Center, vRealize Suite, and Dell EMC VxRail, this platform allows IT administrators to enable fast cloud deployment, achieve better scalability for performance, ensure infrastructure and application security, monitor data center operational health, and lower TCO.

In this solution, we validated an Oracle RAC database running on VMware Cloud Foundation powered by Dell EMC VxRail. We deployed the entire test environment with agility by using Dell EMC VxRail and VMware SDDC Manager. We validated Oracle RAC database scalability with different numbers of RAC nodes, and validated performance with linearly increasing schema and database sizes. The results showed that the HCI platform, specifically VxRail in this solution, provided near-linear scalability and predictable transactional response time for the Oracle OLTP workload as RAC nodes increase or decrease. We also achieved high TPM throughput and predictable transactional response time as the RAC database size increased linearly.

Reference

- VMware Cloud Foundation

- VMware vSphere

- VMware vSAN

- VMware NSX Data Center

- VMware vRealize Suite

- Dell EMC VxRail

- VMware Cloud Foundation on VxRail Whitepaper

- VMware Cloud Foundation on Dell EMC VxRail Admin Guide

- VMware Cloud Foundation on VxRail Architecture Guide

- Oracle Real Application Clusters on VMware Virtual SAN

- Using Oracle RAC on a vSphere 6.x vSAN Datastore (2121181)

- Oracle DB Size Template on Sizing

About the Author

Tony Wu, Senior Solutions Architect in the Solutions Architecture team of the Hyperconverged Infrastructure (HCI) Business Unit, wrote the original version of this paper.

The following reviewers also contributed to the paper contents:

- Victor Dery, Senior Principal Engineer of VxRail Technical Marketing in Dell EMC

- David Glynn, Senior Principal Engineer of VxRail Technical Marketing in Dell EMC

- William Leslie, Senior Manager of VxRail Product Management in Dell EMC

- Mark Xu, Solutions Architect in the Solutions Architecture team of the HCI Business Unit in VMware

- Chen Wei, Staff Solutions Architect in the Solutions Architecture team of the HCI Business Unit in VMware

- Dinara Alieva, Senior Product Marketing Manager of the HCI Business Unit in VMware

Appendix—Oracle pfile

```
# Oracle init.ora parameter file generated by instance (internal IP addresses manually masked)
*.__data_transfer_cache_size=0
orcl1.__db_cache_size=84G
orcl2.__db_cache_size=84G
orcl3.__db_cache_size=84G
orcl4.__db_cache_size=84G
*.__inmemory_ext_roarea=0
*.__inmemory_ext_rwarea=0
*.__java_pool_size=0
*.__large_pool_size=512M
*.__oracle_base='/u01/app/oracle' # ORACLE_BASE set from environment
*.__pga_aggregate_target=48G
*.__sga_target=96G
*.__shared_io_pool_size=256M
orcl1.__shared_pool_size=10752M
orcl2.__shared_pool_size=10752M
orcl3.__shared_pool_size=10752M
orcl4.__shared_pool_size=10752M
*.__streams_pool_size=512M
*.__unified_pga_pool_size=0
*._always_anti_join='CHOOSE'
*._always_semi_join='CHOOSE'
*._b_tree_bitmap_plans=TRUE
*._bloom_serial_filter='ON'
*._complex_view_merging=TRUE
*._compression_compatibility='19.0.0'
*._cursor_plan_hash_version=1 # kespmEvolveDrv:reset
orcl1._diag_adr_trace_dest='/u01/app/oracle/diag/rdbms/orcl/orcl1/trace'
orcl2._diag_adr_trace_dest='/u01/app/oracle/diag/rdbms/orcl/orcl2/trace'
orcl3._diag_adr_trace_dest='/u01/app/oracle/diag/rdbms/orcl/orcl3/trace'
orcl4._diag_adr_trace_dest='/u01/app/oracle/diag/rdbms/orcl/orcl4/trace'
*._disk_sector_size_override=TRUE
*._ds_xt_split_count=1
*._eliminate_common_subexpr=TRUE
*._fast_full_scan_enabled=TRUE
*._generalized_pruning_enabled=TRUE
*._gs_anti_semi_join_allowed=TRUE
*._high_priority_processes='LMS*|LM1*|LM2*|LM3*|LM4*|LM5*|LM6*|LM7*|LM8*|LM9*|LM*|LCK0|CKPT|DBRM|RMS0|LGWR|CR*|RS0*|RS1*|RS2*'
*._improved_outerjoin_card=TRUE
*._improved_row_length_enabled=TRUE
*._index_join_enabled=TRUE
*._ipddb_enable=TRUE
*._key_vector_create_pushdown_threshold=20000
*._ksb_restart_policy_times='0'
*._ksb_restart_policy_times='60'
*._ksb_restart_policy_times='120'
*._ksb_restart_policy_times='240' # internal update to set default
*._left_nested_loops_random=TRUE
*._mv_access_compute_fresh_data='ON'
*._new_initial_join_orders=TRUE
*._new_sort_cost_estimate=TRUE
*._nlj_batching_enabled=1
*._odci_index_pmo_rebuild=FALSE # domain index pmo rebuild
*._optim_enhance_nnull_detection=TRUE
*._optim_peek_user_binds=TRUE
*._optimizer_ads_use_partial_results=TRUE
*._optimizer_better_inlist_costing='ALL'
*._optimizer_cbqt_or_expansion='ON'
*._optimizer_cluster_by_rowid_control=129
*._optimizer_control_shard_qry_processing=65528
*._optimizer_cost_based_transformation='LINEAR'
*._optimizer_cost_model='CHOOSE'
*._optimizer_extended_cursor_sharing='UDO'
*._optimizer_extended_cursor_sharing_rel='SIMPLE'
*._optimizer_extended_stats_usage_control=192
*._optimizer_join_order_control=3
*._optimizer_max_permutations=2000
*._optimizer_mode_force=TRUE
*._optimizer_native_full_outer_join='FORCE'
*._optimizer_or_expansion='DEPTH'
*._optimizer_proc_rate_level='BASIC'
*._optimizer_system_stats_usage=TRUE
*._optimizer_try_st_before_jppd=TRUE
*._optimizer_use_cbqt_star_transformation=TRUE
*._or_expand_nvl_predicate=TRUE
*._ordered_nested_loop=TRUE
*._parallel_broadcast_enabled=TRUE
*._pga_max_size=2G
*._pivot_implementation_method='CHOOSE'
*._pred_move_around=TRUE
*._push_join_predicate=TRUE
*._push_join_union_view=TRUE
*._push_join_union_view2=TRUE
*._px_dist_agg_partial_rollup_pushdown='ADAPTIVE'
*._px_groupby_pushdown='FORCE'
orcl1._px_load_balancing_policy='LOAD_BALANCED'
orcl2._px_load_balancing_policy='LOAD_BALANCED'
*._px_partial_rollup_pushdown='ADAPTIVE'
*._px_shared_hash_join=FALSE
*._px_wif_dfo_declumping='CHOOSE'
*._smm_max_size=1048576
*._smm_max_size_static=1048576
*._smm_min_size=1024
*._smm_px_max_size=25165824
*._smm_px_max_size_static=25165824
*._sql_model_unfold_forloops='RUN_TIME'
*._sqltune_category_parsed='DEFAULT' # parsed sqltune_category
*._subquery_pruning_mv_enabled=FALSE
*._table_scan_cost_plus_one=TRUE
*._union_rewrite_for_gs='YES_GSET_MVS'
*._unnest_subquery=TRUE
*._use_column_stats_for_function=TRUE
*._xt_sampling_scan_granules='ON'
*.audit_file_dest='/u01/app/oracle/admin/orcl/adump'
*.audit_trail='DB'
*.cluster_database=TRUE
*.compatible='19.0.0'
*.connection_brokers='((TYPE=DEDICATED)(BROKERS=1))'
*.connection_brokers='((TYPE=EMON)(BROKERS=1))' # connection_brokers default value
*.control_files='+REDO/ORCL/CONTROLFILE/current.281.1030247839'
orcl1.core_dump_dest='/u01/app/oracle/diag/rdbms/orcl/orcl1/cdump'
orcl2.core_dump_dest='/u01/app/oracle/diag/rdbms/orcl/orcl2/cdump'
orcl3.core_dump_dest='/u01/app/oracle/diag/rdbms/orcl/orcl3/cdump'
orcl4.core_dump_dest='/u01/app/oracle/diag/rdbms/orcl/orcl4/cdump'
*.cpu_count=24
*.cpu_min_count='24'
*.db_block_size=8192
*.db_create_file_dest='+DBDATA'
*.db_create_online_log_dest_1='+REDO'
*.db_name='orcl'
*.db_recovery_file_dest='+FRA'
*.db_recovery_file_dest_size=600G
*.diagnostic_dest='/u01/app/oracle'
*.dispatchers='(PROTOCOL=TCP) (SERVICE=orclXDB)'
orcl1.instance_number=1
orcl2.instance_number=2
orcl3.instance_number=3
orcl4.instance_number=4
*.job_queue_processes=480 # job queue processes default tuning
*.listener_networks=''
orcl1.local_listener=' (ADDRESS=(PROTOCOL=TCP)(HOST=172.xxx.xxx.1)(PORT=1521))'
orcl2.local_listener=' (ADDRESS=(PROTOCOL=TCP)(HOST=172.xxx.xxx.2)(PORT=1521))'
orcl3.local_listener=' (ADDRESS=(PROTOCOL=TCP)(HOST=172.xxx.xxx.3)(PORT=1521))'
orcl4.local_listener=' (ADDRESS=(PROTOCOL=TCP)(HOST=172.xxx.xxx.4)(PORT=1521))'
*.log_buffer=228904K # log buffer update
*.nls_language='AMERICAN'
*.nls_territory='AMERICA'
*.open_cursors=300
*.optimizer_mode='ALL_ROWS'
*.pga_aggregate_target=48G
*.plsql_warnings='DISABLE:ALL' # PL/SQL warnings at init.ora
*.processes=1920
*.query_rewrite_enabled='TRUE'
*.remote_listener=' scan-19c:1521'
*.remote_login_passwordfile='EXCLUSIVE'
*.resource_manager_plan='DEFAULT_PLAN'
*.result_cache_max_size=503328K
*.sga_target=96G
orcl1.thread=1
orcl2.thread=2
orcl3.thread=3
orcl4.thread=4
orcl1.undo_tablespace='UNDOTBS2'
orcl2.undo_tablespace='UNDOTBS1'
orcl3.undo_tablespace='UNDOTBS3'
orcl4.undo_tablespace='UNDOTBS4'
```
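To compare settings across RAC instances, it helps to read a pfile like this one programmatically. The sketch below is an illustrative parser, not an Oracle tool. It separates `*.` (all instances) from per-SID prefixes such as `orcl1.` and drops trailing `# comments` that sit outside single quotes. Parameters that appear on several lines, such as `_ksb_restart_policy_times`, are collected into a list. An instance-specific setting takes precedence over the `*` default when looked up.

```python
# Sketch: parse an init.ora-style pfile into per-instance settings (illustrative only).
from collections import defaultdict
from typing import Dict, List, Optional

Params = Dict[str, Dict[str, List[str]]]


def strip_comment(line: str) -> str:
    """Drop a trailing '# comment' that is not inside single quotes."""
    in_quote = False
    for i, ch in enumerate(line):
        if ch == "'":
            in_quote = not in_quote
        elif ch == "#" and not in_quote:
            return line[:i]
    return line


def parse_pfile(text: str) -> Params:
    """Map scope ('*' or a SID such as 'orcl1') -> parameter name -> list of values."""
    params: Params = defaultdict(lambda: defaultdict(list))
    for raw in text.splitlines():
        line = strip_comment(raw).strip()
        if not line or "=" not in line:
            continue  # blank line or a line that was only a comment
        key, value = line.split("=", 1)
        key = key.strip()
        scope, name = key.split(".", 1) if "." in key else ("*", key)
        params[scope][name.lower()].append(value.strip())
    return params


def effective(params: Params, sid: str, name: str) -> Optional[List[str]]:
    """Instance-specific setting wins over the '*' default; None if unset."""
    name = name.lower()
    for scope in (sid, "*"):
        values = params.get(scope, {}).get(name)
        if values:
            return values
    return None


SAMPLE = """
*.sga_target=96G
*.pga_aggregate_target=48G
orcl1.instance_number=1
orcl2.instance_number=2
*._ksb_restart_policy_times='0'
*._ksb_restart_policy_times='60' # internal update to set default
"""

p = parse_pfile(SAMPLE)
print(effective(p, "orcl2", "instance_number"))            # ['2']
print(effective(p, "orcl1", "sga_target"))                 # ['96G']
print(effective(p, "orcl1", "_ksb_restart_policy_times"))  # ["'0'", "'60'"]
```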