Running Greenplum with VMware Cloud Foundation on Dell EMC VxRail

Executive Summary

Business Case

VMware Tanzu™ Greenplum® Database is a massively parallel processing (MPP) database server supporting next-generation data warehousing and large-scale analytics processing. By partitioning data and running parallel queries, it allows a cluster of servers to operate as a single database supercomputer, performing orders of magnitude faster than a traditional database. Greenplum supports SQL, machine learning, and transaction processing at data volumes ranging from tens of terabytes to multi-petabyte scale.

Operating Greenplum on virtualized infrastructure can be more manageable than operating it on traditional bare-metal IT infrastructure. Demand for resources fluctuates with business needs, which can leave a bare-metal Greenplum cluster either under-powered or over-provisioned. IT needs a more flexible, scalable, and secure infrastructure to handle the ever-changing demands the business places on Greenplum. With a single architecture that is easy to deploy, VMware Cloud Foundation™ can provision compute, network, and storage on demand. VMware Cloud Foundation protects the network and its data with micro-segmentation and satisfies compliance requirements with data-at-rest encryption. Policy-based management delivers business-critical performance. VMware Cloud Foundation delivers flexible, consistent, secure infrastructure and operations across private and public clouds and is ideally suited to meet the demands of Greenplum.

Dell EMC VxRail™, powered by Dell EMC PowerEdge server platforms and VxRail HCI System Software, features next-generation technology to future-proof your infrastructure and enables deep integration across the VMware ecosystem. The advanced VMware hybrid cloud integration and automation simplify the deployment of a secure VxRail cloud infrastructure.

VMware Cloud Foundation on Dell EMC VxRail, the Dell Technologies Cloud Platform, builds upon native VxRail and VMware Cloud Foundation capabilities with unique integration features, jointly engineered by Dell Technologies and VMware, that simplify, streamline, and automate the operations of your entire SDDC from Day 0 through Day 2. The full stack integration with VMware Cloud Foundation on VxRail allows both the HCI infrastructure layer and the VMware cloud software stack lifecycle to be managed as one complete, automated, and turnkey hybrid cloud experience, significantly reducing risk and increasing IT operational efficiency. This solution also lets you perform cloud operations through a familiar set of tools, offering a consistent experience, with a single vendor support relationship and consistent SLAs across all of your traditional and modernized workloads, public and private cloud, as well as edge deployments.

In this reference architecture, we provide a set of design and deployment guidelines for Greenplum on VMware Cloud Foundation on Dell EMC VxRail.

Technology Overview

Solution technology components are listed below:

- VMware Cloud Foundation

- VMware vSphere

- VMware vSAN

- VMware NSX Data Center

- VMware vRealize Suite

- Dell EMC VxRail Integrated System

- VxRail HCI System Software

- VMware Tanzu Greenplum

VMware Cloud Foundation

VMware Cloud Foundation is an integrated software stack that combines compute virtualization (VMware vSphere®), storage virtualization (VMware vSAN™), network virtualization (VMware NSX®), and cloud management and monitoring (VMware vRealize® Suite) into a single platform that can be deployed on-premises as a private cloud or run as a service within a public cloud. This paper focuses on the private cloud use case. VMware Cloud Foundation bridges the traditional administrative silos in data centers, merging compute, storage, network provisioning, and cloud management to facilitate end-to-end support for application deployment.

VMware vSphere

VMware vSphere is VMware's virtualization platform, which transforms data centers into aggregated computing infrastructures that include CPU, storage, and networking resources. vSphere manages these infrastructures as a unified operating environment and provides operators with the tools to administer the data centers that participate in that environment. The two core components of vSphere are ESXi™ and vCenter Server®. ESXi is the hypervisor platform used to create and run virtualized workloads. vCenter Server is the management plane for the hosts and workloads running on the ESXi hosts.

VMware vSAN

VMware vSAN is the industry-leading software powering VMware’s software defined storage and HCI solution. vSAN helps customers evolve their data center without risk, control IT costs, and scale to tomorrow’s business needs. vSAN, native to the market-leading hypervisor, delivers flash-optimized, secure storage for all of your critical vSphere workloads and is built on industry-standard x86 servers and components that help lower TCO in comparison to traditional storage. It delivers the agility to scale IT easily and offers the industry’s first native HCI encryption.

vSAN simplifies Day 1 and Day 2 operations, and customers can quickly deploy and extend cloud infrastructure and minimize maintenance disruptions. vSAN helps modernize hyperconverged infrastructure by providing administrators a unified storage control plane for both block and file protocols and provides significant enhancements that make it a great solution for traditional virtual machines and cloud-native applications. vSAN helps reduce the complexity of monitoring and maintaining infrastructure and enables administrators to rapidly provision a fileshare in a single workflow for Kubernetes-orchestrated cloud native applications.

VMware NSX Data Center

VMware NSX Data Center is the network virtualization and security platform that enables the virtual cloud network, a software-defined approach to networking that extends across data centers, clouds, and application frameworks. With NSX Data Center, networking and security are brought closer to the application wherever it’s running, from virtual machines to containers to bare metal. Like the operational model of VMs, networks can be provisioned and managed independently of the underlying hardware. NSX Data Center reproduces the entire network model in software, enabling any network topology—from simple to complex multitier networks—to be created and provisioned in seconds. Users can create multiple virtual networks with diverse requirements, leveraging a combination of the services offered via NSX or from a broad ecosystem of third-party integrations ranging from next-generation firewalls to performance management solutions to build inherently more agile and secure environments. These services can then be extended to a variety of endpoints within and across clouds.

Dell EMC VxRail Hyperconverged Integrated System

The only fully integrated, pre-configured, and pre-tested VMware hyperconverged integrated system optimized for VMware vSAN and VMware Cloud Foundation, VxRail transforms HCI networking and simplifies VMware cloud adoption while meeting any HCI use case, including support for many of the most demanding workloads and applications. Powered by Dell EMC PowerEdge server platforms and VxRail HCI System Software, VxRail features next-generation technology to future-proof your infrastructure and enables deep integration across the VMware ecosystem. The advanced VMware hybrid cloud integration and automation simplify the deployment of a secure VxRail cloud infrastructure.

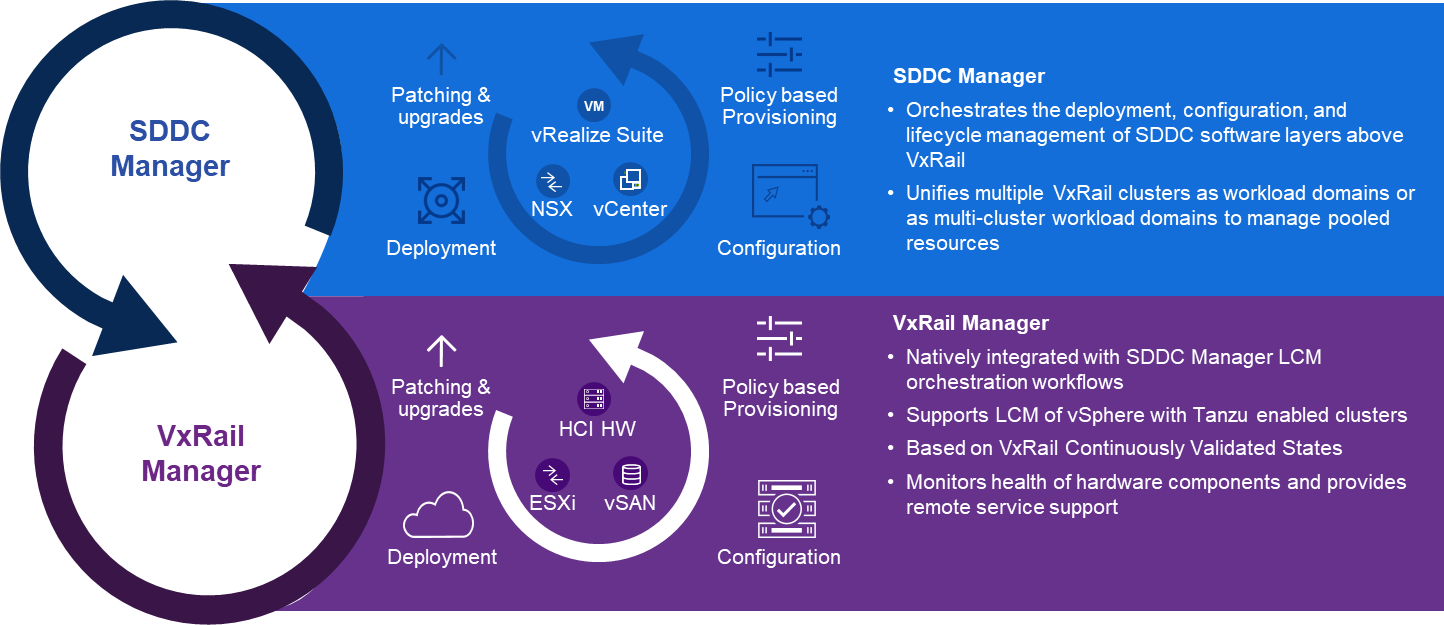

VxRail HCI System Software

VxRail HCI System Software is integrated software that delivers a seamless and automated operational experience, offering 100% native integration between VxRail Manager and vCenter. Intelligent lifecycle management automates non-disruptive upgrades, patching, and node addition or retirement while keeping VxRail infrastructure in a continuously validated state to ensure that workloads are always available. The HCI System Software includes SaaS multi-cluster management and orchestration for centralized data collection and analytics that uses machine learning and AI to help customers keep their HCI stack operating at peak performance and ready for future workloads. IT teams can benefit from the actionable insights to optimize infrastructure performance, improve serviceability, and foster operational freedom.

Figure 1. VxRail Manager and SDDC Manager Integration

VMware Tanzu Greenplum

VMware Tanzu Greenplum is based on PostgreSQL and the Greenplum Database project. It offers optional use-case specific extensions like Apache MADlib for machine learning and graph analytics, PostGIS for geospatial analysis, and Python and R for advanced analytics. These are pre-integrated to ensure a consistent experience, not a DIY open-source approach. Instead of depending on expensive proprietary databases, users can benefit from the contributions of a vibrant community of developers.

Greenplum simplifies the deployment of analytics infrastructure by providing a single, scale-out environment for converging analytic and operational workloads. It can execute low-latency point queries, fast data ingestion, data science exploration, and long-running reporting queries at high and increasing scale and concurrency. The Greenplum Command Center provides a single management console for database administrators to set up Workload Management policies, monitor system utilization, review current and historic query details, and set up database alerts to be sent proactively to administrators. Greenplum reduces data silos by integrating with Apache Kafka for streaming ingestion and Apache Spark for in-memory data science compute.

Test Tools

We leveraged the following monitoring and benchmark tools in the scope of our functional validation of Greenplum on VMware Cloud Foundation.

Monitoring Tools

vSAN Performance Service

vSAN Performance Service is used to monitor the performance of the vSAN environment through the vSphere Client. The performance service collects and analyzes performance statistics and displays the data in a graphical format. You can use the performance charts to manage your workload and determine the root cause of problems.

vSAN Health Check

vSAN Health Check delivers a simplified troubleshooting and monitoring experience of all things related to vSAN. The vSphere client offers multiple health checks specifically for vSAN, including cluster, hardware compatibility, data, limits, and physical disks. It is used to check the vSAN health before deploying the mixed-workload environment.

Workload Generation and Testing Tools

gpcheckperf

gpcheckperf verifies the baseline hardware performance of the specified hosts.

TPC-DS Based Testing

TPC-DS is one of the benchmarking tools for database systems. We used it as a basis for Greenplum database performance testing. The test kit has a collection of scripts that automatically load data into the database and measure the database performance by executing both single-user and concurrent-user queries.

Solution Configuration

This section introduces the following resources and configurations:

- Architecture diagram

- Virtual machine placement and VMware vSphere Distributed Resource Scheduler™

- VMware vSphere High Availability

- Greenplum capacity planning

- Virtual disks and segments

- Greenplum optimization

- Hardware resources

- Software resources

- Network configuration

- vSAN configuration

Architecture Diagram

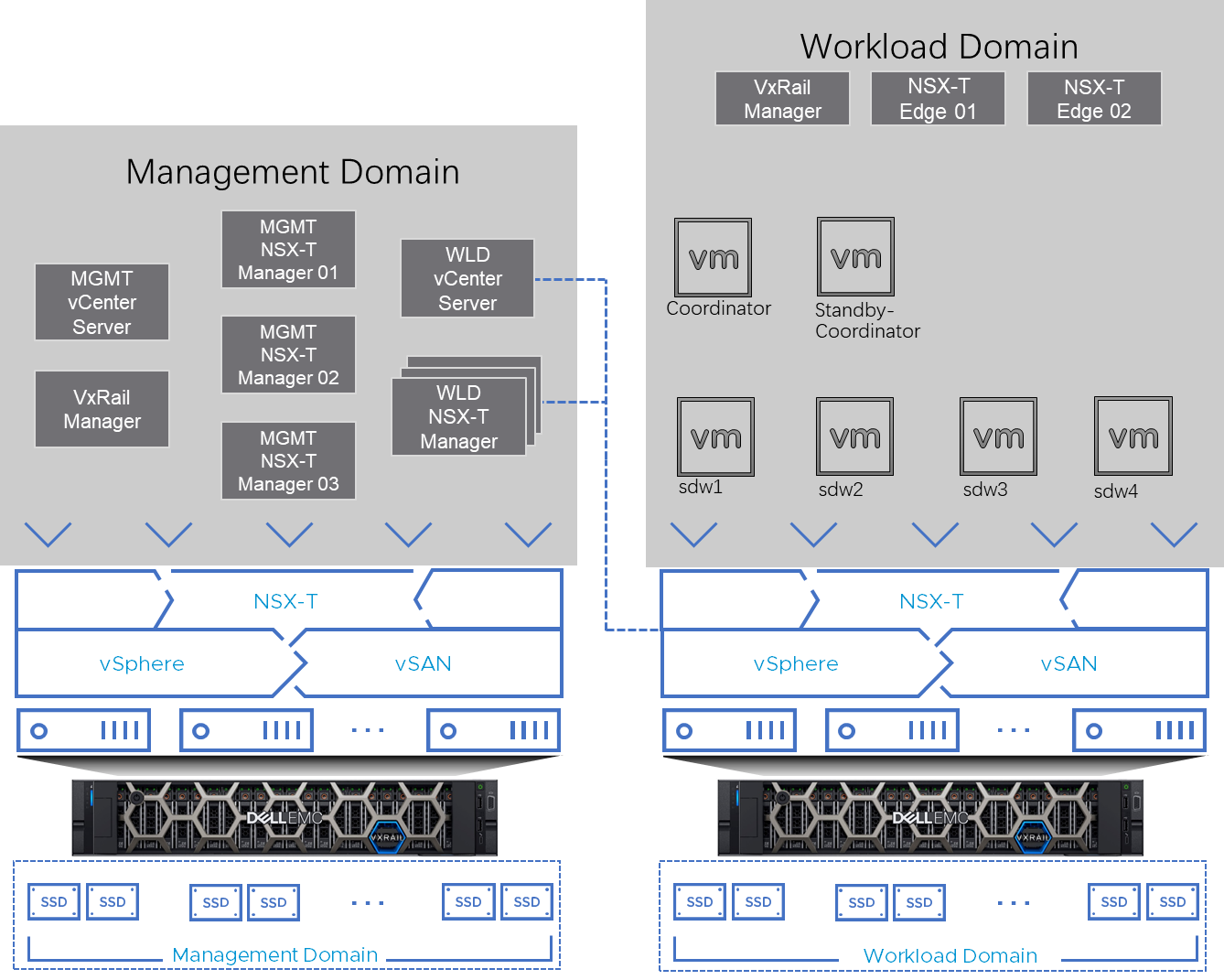

The VMware Cloud Foundation test environment was composed of a management domain and a workload domain. We deployed Greenplum in the workload domain, and all other infrastructure VMs ran in the separate management domain (Figure 2).

Figure 2. Greenplum on VMware Cloud Foundation Solution Architecture

Notation in Figure 2:

- Coordinator: Greenplum Coordinator Host

- Standby-Coordinator: Greenplum Standby-Coordinator

- Sdw1 – Sdw4: Greenplum Segment Hosts (virtual machines)

In our solution, we created a 4-node VxRail P570F cluster for the VMware Cloud Foundation management domain, running management virtual machines and appliances. The management domain can be used to create and manage other workload domains.

Table 1. Management Domain VMs

| VM Role | vCPU | Memory (GB) | VM Count |
| --- | --- | --- | --- |
| Management Domain vCenter Server | 4 | 16 | 1 |
| SDDC Manager | 4 | 16 | 1 |
| Management Domain NSX-T Manager | 6 | 24 | 3 |
| Workload Domain NSX-T Manager | 12 | 48 | 3 |
| Workload Domain vCenter Server | 8 | 28 | 1 |
| VxRail Manager Appliance | 2 | 8 | 1 |

For the workload domain, we created another 4-node VxRail P570F cluster with a separate NSX-T Fabric, deployed an NSX Edge Cluster, and deployed the Greenplum VMs in the workload domain.

Table 2 shows the deployment of the workload domain edge nodes and Greenplum VMs. For the workload domain edge node, we recommend that NSX Edge transport nodes are deployed with “Large” form factor.

Table 2. Workload Domain VMs

| VM Role | vCPU | Memory (GB) | Storage | Deployment Size | VM Count |
| --- | --- | --- | --- | --- | --- |
| Workload Domain Edge node | 8 | 32 | 200 GB | Large | 2 |
| Greenplum Coordinator Host | 8 | 32 | 200 GB for OS | n/a | 1 |
| Greenplum Standby-Coordinator | 8 | 32 | 200 GB for OS | n/a | 1 |
| Greenplum Segment Hosts | 32 | 384 | 200 GB for OS; 500 GB for each segment or mirror VMDK | n/a | 4 |

This configuration is the building block for a basic Greenplum installation with VMware Cloud Foundation on Dell EMC VxRail. Based on customer demand and database size, we can expand the workload domain to include more physical hosts. A vSAN-enabled cluster supports up to 64 physical hosts in a non-stretched configuration. Adding hosts to the vSAN cluster increases not only the CPU and memory capacity for computing but also the vSAN storage capacity, and performance increases accordingly. This is one of the benefits of hyperconverged infrastructure (HCI): compute and storage capacity grow proportionately and at the same time.

We tested expanding the workload domain from 4 nodes to 8, 16, 24, 32, and 64 nodes. In each case, the computing and storage resources grew with demand, and performance scaled nearly linearly.

Figure 3. Expanding the Workload Domain and the Greenplum Cluster

Virtual Machine Placement and vSphere DRS

VMware vSphere Distributed Resource Scheduler (DRS) is a feature included in vSphere Enterprise Plus. If DRS is enabled in the cluster, follow these guidelines:

- Place the coordinator host and standby-coordinator host on two different physical hosts to accommodate one host failure.

- Place the segment host virtual machines on different physical hosts. Each physical host can run at most one segment virtual machine.

If DRS is enabled in the cluster, we must create two DRS anti-affinity rules to keep the VMs on different physical hosts. The first rule applies to the coordinator and standby-coordinator hosts. The second rule applies to the segment host virtual machines. For details on DRS anti-affinity rules, see the DRS documentation.
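The placement guideline above can be checked before or after deployment with a few lines of Python. The following is a minimal sketch, not part of the validated solution: the rule names, VM names, and host names are hypothetical, and the function only inspects a placement map rather than calling any vSphere API.

```python
from collections import defaultdict


def anti_affinity_violations(placement, rule_groups):
    """Return (rule, host, vms) tuples for every host that runs more than
    one VM from the same anti-affinity group.

    placement:   {vm_name: host_name}
    rule_groups: {rule_name: [vm_name, ...]}
    """
    violations = []
    for rule_name, vms in rule_groups.items():
        vms_by_host = defaultdict(list)
        for vm in vms:
            vms_by_host[placement[vm]].append(vm)
        for host, colocated in sorted(vms_by_host.items()):
            if len(colocated) > 1:
                violations.append((rule_name, host, sorted(colocated)))
    return violations


# Hypothetical names that mirror the two rules described in the text.
rules = {
    "gp-coordinators": ["coordinator", "standby-coordinator"],
    "gp-segment-hosts": ["sdw1", "sdw2", "sdw3", "sdw4"],
}
good = {
    "coordinator": "esxi-1", "standby-coordinator": "esxi-2",
    "sdw1": "esxi-1", "sdw2": "esxi-2", "sdw3": "esxi-3", "sdw4": "esxi-4",
}
bad = dict(good, sdw4="esxi-3")  # two segment hosts share esxi-3

print(anti_affinity_violations(good, rules))
print(anti_affinity_violations(bad, rules))
```

An empty result means the placement satisfies both rules. In a real environment the rules themselves are defined in vCenter, and DRS enforces them.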

VMware vSphere High Availability

We recommend enabling vSphere HA for the workload domain cluster. We also recommend reserving 100% of the CPU and memory allocated to the virtual machines to avoid oversubscription of resources in case of a physical host failure.

If vSphere HA is enabled and a physical host fails, vSphere automatically powers on the impacted virtual machines on surviving hosts, provided the remaining resources (for example, a spare host) are sufficient to satisfy the resource reservations.

If a physical host fails and the remaining resources are not sufficient to satisfy the resource reservations, vSphere HA does not restart the impacted virtual machines. This is by design: forcing a virtual machine to restart on a surviving host may cause resource contention and imbalanced performance among the Greenplum segments.
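The restart behavior described above can be reasoned about with a simple capacity check. The sketch below is an illustration under simplifying assumptions, not a model of the actual vSphere HA admission control algorithm: CPU reservations are counted in vCPUs against physical cores, anti-affinity rules are ignored, and the host and VM names are hypothetical. The host and VM sizes are taken from Table 2 and Table 3.

```python
def plan_ha_restarts(hosts, vms, failed_host):
    """First-fit check of whether fully reserved VMs from a failed host
    fit on the surviving hosts.

    hosts: {host_name: (cpu_cores, memory_gb)}
    vms:   {vm_name: (host_name, vcpu, memory_gb)}, all 100% reserved
    Returns {vm_name: target_host or None} for VMs on the failed host.
    """
    # Free capacity on each surviving host after existing reservations.
    free = {name: list(cap) for name, cap in hosts.items() if name != failed_host}
    for host, vcpu, mem_gb in vms.values():
        if host != failed_host:
            free[host][0] -= vcpu
            free[host][1] -= mem_gb

    failed = [(name, spec) for name, spec in vms.items() if spec[0] == failed_host]
    plan = {}
    # Place the largest VMs first so they are not starved by small ones.
    for name, (_, vcpu, mem_gb) in sorted(failed, key=lambda item: -item[1][2]):
        target = next(
            (h for h, (cpu, mem) in sorted(free.items()) if cpu >= vcpu and mem >= mem_gb),
            None,
        )
        if target is not None:
            free[target][0] -= vcpu
            free[target][1] -= mem_gb
        plan[name] = target
    return plan


# Four hosts as in Table 3, one segment host VM per host as in Table 2.
hosts = {f"esxi-{i}": (56, 512) for i in range(1, 5)}
vms = {f"sdw{i}": (f"esxi-{i}", 32, 384) for i in range(1, 5)}

print(plan_ha_restarts(hosts, vms, "esxi-4"))             # no host has 384 GB free
hosts_with_spare = dict(hosts, **{"esxi-5": (56, 512)})   # hypothetical spare host
print(plan_ha_restarts(hosts_with_spare, vms, "esxi-4"))
```

With four fully loaded hosts, the failed segment host VM has no restart target, which matches the by-design behavior described above. Adding a spare host gives it one.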

Greenplum Capacity Planning

We recommend using Greenplum with mirrors and following the Greenplum Installation Guide to estimate the database size.

We recommend setting vSAN’s Failures to Tolerate (FTT) to 1. With RAID 1 in the vSAN policy, vSAN keeps two copies of each piece of data, so the estimated database capacity requirement should not exceed half of the vSAN’s overall capacity. With RAID 5, vSAN consumes 1.33 times the size of the stored data, and you can calculate the storage usage accordingly. If more capacity is needed, additional physical servers can be added to the cluster, and vSAN can increase the storage capacity for Greenplum online without interrupting service to Greenplum users.
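The capacity rule above reduces to a multiplication by the policy overhead. The sketch below assumes that the estimated database size already includes Greenplum mirrors and that no additional slack space is reserved in vSAN. Both are simplifications, and the function names are hypothetical. The raw capacity example uses the capacity-tier disks listed in Table 3.

```python
# Capacity consumed in vSAN per unit of stored data with FTT=1.
VSAN_OVERHEAD = {"RAID1": 2.0, "RAID5": 4.0 / 3.0}


def raw_capacity_needed_tb(estimated_db_tb, raid):
    """vSAN capacity consumed by a database of the given estimated size."""
    return estimated_db_tb * VSAN_OVERHEAD[raid]


def max_database_size_tb(raw_capacity_tb, raid):
    """Largest estimated database size that fits in the given vSAN capacity."""
    return raw_capacity_tb / VSAN_OVERHEAD[raid]


# 4 hosts x 8 capacity-tier SSDs x 3.84 TB each (Table 3).
raw_tb = 4 * 8 * 3.84

for raid in ("RAID1", "RAID5"):
    print(f"{raid}: up to {max_database_size_tb(raw_tb, raid):.2f} TB of database data")
```

In practice, leave additional headroom for vSAN rebuild operations and for database growth.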

Virtual Disks and Segments

In this solution, we enabled Greenplum mirrors for application-level high availability. We placed eight primary segments and eight mirror segments in each segment host virtual machine. Each primary or mirror segment is backed by its own virtual disk (VMDK), so apart from the OS disk, each virtual machine had sixteen VMDKs.

In vSphere, each virtual machine can have up to four virtual SCSI controllers for the VMDKs to attach to. In this solution, we used the maximum of four virtual SCSI controllers in the Greenplum segment host virtual machines, which helps prevent a virtual SCSI controller from becoming a bottleneck. Four VMDKs are attached to each virtual SCSI controller. We also set the type of the virtual SCSI controllers to 'VMware Paravirtual', which is proven to provide the best performance.

For the VMDKs attached to a virtual SCSI controller, half are used for primary segments and the other half for mirror segments. For example, on SCSI Controller-1, VMDK1 and VMDK2 are used for primary segments, and VMDK3 and VMDK4 are used for mirror segments.

Figure 4. The Virtual SCSI Controller and VMDK Architecture of a Segment Virtual Machine
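The controller and VMDK layout described above can be generated programmatically, for example as input to a provisioning script. This is a minimal sketch. The text only gives the assignment for SCSI Controller-1, so the continuous VMDK numbering across controllers 2 through 4 is an assumption, and the function name is hypothetical.

```python
def segment_disk_layout(controllers=4, disks_per_controller=4):
    """Return one entry per data VMDK: controller number, VMDK name, and role.

    On each controller, the first half of the VMDKs back primary segments
    and the second half back mirror segments.
    """
    half = disks_per_controller // 2
    layout = []
    for controller in range(controllers):
        for slot in range(disks_per_controller):
            disk_no = controller * disks_per_controller + slot + 1
            layout.append({
                "controller": controller + 1,
                "vmdk": f"VMDK{disk_no}",
                "role": "primary" if slot < half else "mirror",
            })
    return layout


for disk in segment_disk_layout():
    print(f"SCSI Controller-{disk['controller']}: {disk['vmdk']} -> {disk['role']}")
```

The defaults yield sixteen data VMDKs, eight for primary segments and eight for mirror segments, matching the configuration of each segment host virtual machine.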

Greenplum Optimization

First, follow the Configuring Your Systems section of the Greenplum Installation Guide to optimize the operating system settings.

Second, follow the Configuration Parameters section of the Greenplum Reference document. Specifically, set two parameters. Set ‘memory_spill_ratio’ to 0 so that Greenplum Database uses the ‘statement_mem’ server configuration parameter value to control the initial query operator memory amount. Then set ‘statement_mem’ to a value appropriate for your servers’ configuration, following the calculation in the ‘statement_mem’ section.
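As an illustration of the ‘statement_mem’ calculation, the sketch below applies the formula given in the Greenplum documentation as we understand it: 90% of ‘gp_vmem_protect_limit’ divided by the maximum expected number of concurrent queries. Treat the formula, the example inputs, and the generated `gpconfig` and `gpstop -u` invocations as assumptions to verify against the documentation for your Greenplum release. The commands are only printed, not executed, and the function names are hypothetical.

```python
def statement_mem_mb(gp_vmem_protect_limit_mb, max_concurrent_queries):
    """(gp_vmem_protect_limit * 0.9) / max expected concurrent queries, in MB.

    Integer arithmetic rounds the result down to a whole megabyte.
    """
    return (gp_vmem_protect_limit_mb * 9) // (10 * max_concurrent_queries)


def gpconfig_commands(statement_mem):
    """Build (but do not run) the commands that would apply both settings."""
    return [
        ["gpconfig", "-c", "memory_spill_ratio", "-v", "0"],
        ["gpconfig", "-c", "statement_mem", "-v", f"{statement_mem}MB"],
        ["gpstop", "-u"],  # reload the configuration without restarting
    ]


# Example inputs only: 8 GB vmem protect limit, 40 concurrent queries.
mem = statement_mem_mb(8192, 40)
for cmd in gpconfig_commands(mem):
    print(" ".join(cmd))
```

Note that ‘statement_mem’ cannot exceed ‘max_statement_mem’, so that parameter may need to be raised first.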

Hardware Resources

In this solution, we used a total of four VxRail P570F nodes for the Greenplum workload domain. Each server was configured with two disk groups, and each disk group consisted of one cache-tier write-intensive SAS SSD and four capacity-tier read-intensive SAS SSDs.

Each VxRail node in the cluster had the following configuration, as shown in Table 3.

Table 3. Hardware Configuration for VxRail

| Property | Specification |
| --- | --- |
| Server model name | VxRail P570F |
| CPU | 2 x Intel(R) Xeon(R) Gold 6148 CPU @ 2.40GHz, 28 cores each |
| RAM | 512GB |
| Network adapter | 2 x Broadcom BCM57414 NetXtreme-E 25Gb RDMA Ethernet Controller |
| Storage adapter | 1 x Dell HBA330 Adapter |
| Disks | Cache: 2 x 800GB Write Intensive SAS SSDs; Capacity: 8 x 3.84TB Read Intensive SAS SSDs |

Software Resources

Table 4 shows the software resources used in this solution.

Table 4. Software Resources

| Software | Version | Purpose |
| --- | --- | --- |
| VMware Cloud Foundation on Dell EMC VxRail | 4.0 | A unified SDDC platform on Dell EMC VxRail that brings together VMware vSphere, vSAN, NSX, and optionally, vRealize Suite components into a natively integrated stack to deliver enterprise-ready cloud infrastructure for the private and public cloud. See BOM of VMware Cloud Foundation on VxRail for details. |
| Dell EMC VxRail | 7.0.000 | Turnkey Hyperconverged Infrastructure for hybrid cloud |
| VMware vSphere | 7.0 | VMware vSphere is a suite of products: vCenter Server and ESXi. |
| VMware vSAN | 7.0 | vSAN is the storage component in VMware Cloud Foundation to provide low-cost and high-performance next-generation HCI solutions. |
| NSX-T | 3.0 | NSX-T is a key network component in VMware Cloud Foundation on VxRail and is deployed automatically. It is designed for networking management and operation. |
| Greenplum | 6.10 | The main Greenplum software being tested in this solution. |

Network Configuration

Figure 5 shows the VMware vSphere Distributed Switch™ network configuration for the Greenplum cluster in the workload domain of the VMware Cloud Foundation on VxRail. NSX-T is used for the Greenplum cluster networking. To enable external access for the Greenplum cluster, it is necessary to deploy an NSX-T edge cluster, and to configure BGP peering and route distribution of the upstream network. For more details, refer to VMware Cloud Foundation 4.0 on VxRail Planning and Preparation Guide.

Figure 5. The Overall NSX-T Networking Architecture

Figure 5 shows the VMware vSphere Distributed Switch configuration for both the management domain and the workload domain of VMware Cloud Foundation. For each domain, two 25 GbE vmnics were used and configured with teaming policies. The management domain can be shared among different workloads.

The NSX-T controllers resided in the management domain. The Greenplum virtual machines were configured with a VM network called ‘Virtual Machine PG’ on an NSX-T segment. VMware vSphere vMotion®, vSAN, and VXLAN VTEP for NSX-T were placed on another dedicated segment. In the workload domain’s uplink settings, we used one physical NIC as the active uplink for vSAN traffic and the other physical NIC as the standby uplink. For the Greenplum virtual machines’ traffic, the active and standby uplinks were reversed. Both vSAN and Greenplum generate heavy network traffic, so each needs its own dedicated NIC during normal operation.

Jumbo Frames (MTU=9000) were enabled on the physical switches, vSAN VMkernel, and all the virtual switches to improve performance.

The NSX-T managers and edge nodes each run more than one instance to form NSX clusters, which provides HA and better load balancing. In addition, the vCPU and memory may be adjusted based on workloads to achieve better performance. Table 5 shows the configuration of the NSX-T manager and edge node virtual machines. The NSX-T managers reside in the management domain, so they do not consume compute resources intended for the Greenplum VMs. However, the NSX-T edge nodes reside in the Greenplum workload domain and consume some CPU and memory resources. Take this into consideration when sizing the cluster before Greenplum is deployed.

Table 5. NSX-T VM Configuration

| NSX-T VM Role | Instances | vCPU | Memory (GB) | VM Name | Virtual Disk Size | Operating System |
| --- | --- | --- | --- | --- | --- | --- |
| NSX-T Manager | 3 | 12 | 48 | NSX-unified-appliance-<version> | 200GB | Ubuntu |
| NSX-T Edge Nodes | 2 | 4 | 8 | Edge-<UUID> | 120GB | Ubuntu |

vSAN Configuration

The solution validation was based on a 4-node vSAN cluster as a building block. We tested expanding the workload domain from 4 nodes to 8, 16, 24, 32, and 64 nodes. In each case, the computing and storage resources grew with demand, and performance scaled nearly linearly.

The tests were conducted using the default vSAN datastore storage policy of RAID 1 with FTT=1, checksums enabled, deduplication and compression disabled, and no encryption. The following sections explain the detailed configuration of the vSAN cluster and some items in Storage Policy Based Management (SPBM).

Deduplication and Compression

‘Deduplication and Compression’ is configured at the cluster level and can be enabled or deactivated for the whole vSAN cluster. We deactivated it in our testing. Enabling it can reduce vSAN storage usage but induces higher latencies for the Greenplum application, so customers must weigh this tradeoff.

Failures to Tolerate (FTT)

Failures to Tolerate (FTT) is a configuration item in vSAN’s storage policy. In our testing, we set FTT to 1. Never set FTT to 0 in a Greenplum with vSAN deployment, because FTT=0 can cause the data of a primary segment and the data of its mirror segment to be stored on the same physical disk. This may cause Greenplum data loss if that physical disk fails. FTT=1 is the minimum setting for this deployment.

Checksum

Checksum is a configuration item in vSAN’s storage policy. We compared Greenplum performance with checksum enabled and disabled. Disabling vSAN’s checksum yielded barely any performance improvement for Greenplum, while enabling it ensures that the data is correct at the vSAN storage hardware level. So, we recommend keeping checksum enabled, which is the default.

Erasure Coding (RAID 1 vs. RAID 5)

Erasure Coding is a configuration item in vSAN’s storage policy. It is also known as configuring RAID 5 or RAID 6 for vSAN objects. With FTT=1 and RAID 1, the data in vSAN is mirrored, and the capacity consumed is two times the size of the data. With FTT=1 and RAID 5, the data is stored with RAID 5 parity, and the capacity consumed is 1.33 times the size of the data.

In our testing, we used FTT=1 without Erasure Coding (RAID 1). Enabling Erasure Coding saves vSAN storage space but induces higher latencies for the Greenplum application. Again, customers must weigh this tradeoff.

Encryption

vSAN can perform data at rest encryption. Data is encrypted after all other processing, such as deduplication. Data at rest encryption protects data on storage devices in case a device is removed from the cluster.

Encryption was not used in our testing. Use encryption according to your company’s Information Security requirements.

Failure Testing

This section introduces the failure scenarios and the behavior of failover and failback. This section includes:

- Physical host failure

- Physical cache disk failure

- Physical capacity disk failure

Physical Host Failure

We mimicked one physical host failure by directly powering off one of the physical hosts through the VxRail server iDRAC. We observed the following:

- When a spare host was available, vSphere HA automatically powered on the impacted virtual machines on the surviving hosts. If there is no spare host, or the surviving hosts cannot satisfy the resource reservations, the failed virtual machines are not restarted until more resources are added to the cluster.

- Regardless of whether the failed virtual machines were restarted, the host failure immediately caused some of Greenplum’s primary segments to fail. The corresponding mirror segments on other hosts immediately took over the primary role, and the Greenplum service kept running.

- For data in vSAN, there were always two copies of each VMDK file, and they must reside on different hosts. If one copy of a VMDK resided on the impacted host, the VMDK was marked as degraded but kept working from the virtual machine’s perspective. All surviving virtual machines kept running even though some of their VMDKs were degraded.

- If the failed host is brought back within one hour, vSAN resyncs the newly written data from the healthy hosts to the restarted host.

- If the failed host is brought back after more than an hour, or requires more time to recover, vSAN rebuilds the data of the degraded VMDKs on healthy hosts to ensure that there are two copies of the data on the surviving healthy hosts in the case of FTT=1.
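The last two observations amount to a timer-based decision. The sketch below only restates that rule in code. The one-hour threshold comes from the observations above, the function name is hypothetical, and treating exactly 60 minutes as still within the window is an assumption.

```python
def vsan_recovery_action(host_downtime_minutes, repair_delay_minutes=60):
    """Return how vSAN restores redundancy for degraded objects.

    "resync":  the host returned within the delay, so only the data written
               while it was away is copied back to it.
    "rebuild": the delay expired, so full copies are recreated on the
               remaining healthy hosts.
    """
    if host_downtime_minutes <= repair_delay_minutes:
        return "resync"
    return "rebuild"


for minutes in (15, 60, 90):
    print(minutes, "minutes ->", vsan_recovery_action(minutes))
```

A rebuild needs enough free capacity and enough healthy hosts to place the second copy, which is one more reason to keep headroom in the cluster.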

Physical Cache Disk Failure

We mimicked a physical cache disk failure by injecting an error into one of the cache disks, following the vSAN PoC Guide.

When a cache disk fails, vSAN marks the disk group that contains the failed cache disk as failed. In our test, all the impacted data was immediately rebuilt on other healthy disk groups. In the meantime, all the virtual machines kept running without interruption because one copy of the data was still available at the vSAN storage level, and the Greenplum service was not interrupted. vSAN controlled the network traffic used for data rebuilding, so the rebuild had only minimal impact on Greenplum performance.

Physical Capacity Disk Failure

We mimicked a physical capacity disk failure by injecting an error into one of the capacity disks, using the same method as for the cache disk.

The behavior was similar to a cache disk failure. All the virtual machines kept running without interruption, and the Greenplum service was not interrupted.

If ‘Deduplication and Compression’ is enabled on the vSAN cluster, vSAN behaves exactly as it does for a cache disk failure. The vSAN disk group that contains the failed capacity disk is marked as failed, and all the impacted data is immediately rebuilt on other healthy disk groups.

If ‘Deduplication and Compression’ is deactivated on the vSAN cluster, a capacity disk failure differs from a cache disk failure in that it impacts only the data on the failed disk. Only the impacted data on the failed capacity disk is rebuilt on other healthy capacity disks.

Best Practices

- Use the same server model for the physical hosts in the workload domain.

- Set up Greenplum mirrors.

- Use DRS anti-affinity rules to separate the Greenplum coordinator and standby-coordinator onto different physical hosts.

- Use DRS anti-affinity rules to separate all the segment host virtual machines onto different physical hosts.

- Follow the 'Configuring Your Systems' guidelines from the Greenplum Installation Guide.

- Set ‘memory_spill_ratio’ to 0 and set ‘statement_mem’ to a proper value to optimize the memory utilization for spill files.

- Enable Jumbo Frames on the physical switches. Use Jumbo Frames on the vSAN VMKernel and all virtual switches.

- Set Failures-To-Tolerate (FTT) to 1 in vSAN’s storage policy.

- Enable vSAN's checksum.

- Enable vSphere HA in the cluster. Additionally, set the CPU and memory reservation to 100% to avoid resource contention in case of a physical host failure.

- Use four virtual SCSI controllers in the VMs of Greenplum's segment hosts. Set the type of virtual SCSI controllers to 'VMware Paravirtual'.

Conclusion

VMware Cloud Foundation on VxRail delivers flexible, consistent, secure infrastructure and operations across private and public clouds. It is ideally suited to meet the demands of modern applications like Greenplum running in a virtualized environment.

With VMware Cloud Foundation, we can easily manage the lifecycle of the hybrid cloud environment. We also have a unified management plane for all applications, including Greenplum. With VMware Cloud Foundation, we can leverage the leading virtualization technologies, including vSphere, NSX-T, and vSAN.

In this solution paper, we demonstrated the architecture of running Greenplum with VMware Cloud Foundation on Dell EMC VxRail. We showed the configuration details, the hardware resources, and the software resources used in the solution validation, along with the various configuration options and best practices. VxRail Manager and SDDC Manager provide lifecycle management. vSAN provides reliable, high-performance, and flexible storage to Greenplum. NSX-T provides a fine-grained, secure, and high-performance virtual networking infrastructure to Greenplum. In addition, vSphere DRS and vSphere HA provide efficient resource usage and high availability. Together, these make VMware Cloud Foundation on Dell EMC VxRail an excellent platform for running Greenplum.

References

- VMware Cloud Foundation

- What’s New with VMware Cloud Foundation 4.1

- Get the Facts of VMware Cloud Foundation – Part 6

- Dell EMC VxRail

- VMware vSphere

- VMware vSAN

- VMware NSX Data Center

- VMware Cloud Foundation on Dell EMC VxRail Admin Guide

- VMware Cloud Foundation on VxRail Architecture Guide

- Greenplum 6.10 Documentation

About the Author

Victor (Shi) Chen, Solutions Architect in the Solutions Architecture team of the Cloud Platform Business Unit at VMware, wrote the original version of this paper.

The following reviewers also contributed to this paper:

- Ka Kit Wong, Staff Solutions Architect, Solutions Architecture team of the Cloud Platform Business Unit at VMware

- Scott Kahler, Product Line Manager, Modern Application Platform Business Unit at VMware

- Ivan Novick, Senior Manager of Product Management, Modern Application Platform Business Unit at VMware

- Mike Nemesh, Platform Software Engineer, Modern Application Platform Business Unit at VMware

- William Leslie, Senior Manager of VxRail Technical Marketing at Dell Technologies

- Vic Dery, Senior Principal Engineer of VxRail Technical Marketing at Dell Technologies