Storage vMotion Improvements

Overview

The vMotion feature is heavily updated in vSphere 7. Live migrations are faster, the guest performance impact during the vMotion process is drastically lower, and the switch-over of the virtual machine (VM) between the source and destination ESXi host is far more efficient. vSphere 7 also introduces improvements for the Fast Suspend and Resume (FSR) process, as FSR inherits some of the vMotion logic.

FSR is used when live-migrating VM storage with Storage vMotion, and also for VM Hot Add. Hot Add is the capability to add vCPUs, memory and other selected VM hardware devices to a powered-on VM. When the VM is powered off, adding compute resources or virtual hardware devices is just a .vmx configuration file change. FSR is used to do the same for live VMs.

Note: using vCPU Hot Add can introduce a workload performance impact as explained in this article.

The FSR Process

FSR has a lot of similarities to the vMotion process. The biggest difference is that FSR is a local live migration, meaning it takes place within the same ESXi host. For a compute vMotion, we need to copy the memory data from the source to the destination ESXi host. With FSR, the memory pages remain within the same host.

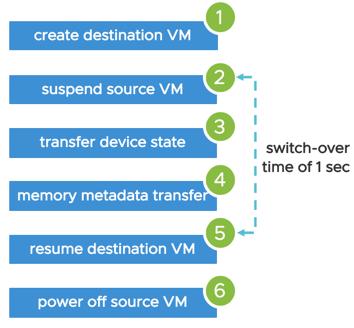

When a Storage vMotion is initiated or when Hot Add is used, a destination VM is created. This name can be misleading: it is a ‘ghost’ VM running on the same ESXi host as the source VM. When the ‘destination’ VM is created, the FSR process suspends the source VM from execution before it transfers the device state and the memory metadata. Because the migration is local to the host, there’s no need to copy memory pages, only the metadata. Once this is done, the destination VM is resumed and the source VM is cleaned up, powered off and deleted.
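To make the order of operations easier to follow, here is a minimal Python sketch of the sequence described above. It is a toy model, not ESXi code: the `VM` class, its fields and the `fast_suspend_resume` function are hypothetical names made up for illustration.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class VM:
    """Toy stand-in for a VM; all fields are illustrative assumptions."""
    name: str
    state: str = "running"  # "created", "running", "suspended" or "deleted"
    device_state: dict = field(default_factory=dict)
    memory_metadata: list = field(default_factory=list)


def fast_suspend_resume(source: VM) -> tuple[VM, list[str]]:
    """Walk through the FSR steps in order and record them in a log."""
    log = []

    # 1. The 'destination' (ghost) VM is created on the same host.
    dest = VM(name=source.name + "-dest", state="created")
    log.append("create destination VM on same host")

    # 2. The source VM is suspended; the switch-over clock starts here.
    source.state = "suspended"
    log.append("suspend source VM")

    # 3. Device state and memory metadata are handed over.
    #    Memory pages themselves are not copied.
    dest.device_state = dict(source.device_state)
    log.append("transfer device state")
    dest.memory_metadata = list(source.memory_metadata)
    log.append("transfer memory metadata")

    # 4. The destination VM resumes; the switch-over clock stops here.
    dest.state = "running"
    log.append("resume destination VM")

    # 5. The source VM is cleaned up.
    source.state = "deleted"
    log.append("power off and delete source VM")
    return dest, log


if __name__ == "__main__":
    src = VM("db01", device_state={"nic0": "up"}, memory_metadata=[7, 3, 9])
    new_vm, steps = fast_suspend_resume(src)
    for step in steps:
        print(step)
```

Everything between steps 2 and 4 counts toward the switch-over time, which is why the duration of the metadata transfer matters so much.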

As with vMotion, we need to keep the time between suspending and resuming the VM under 1 second to minimize guest OS impact. Typically, that was never a problem for smaller VM sizes. However, with large workloads (‘Monster’ VMs), the impact could be significant depending on VM sizing and workload characteristics.

How is Memory Metadata Transferred?

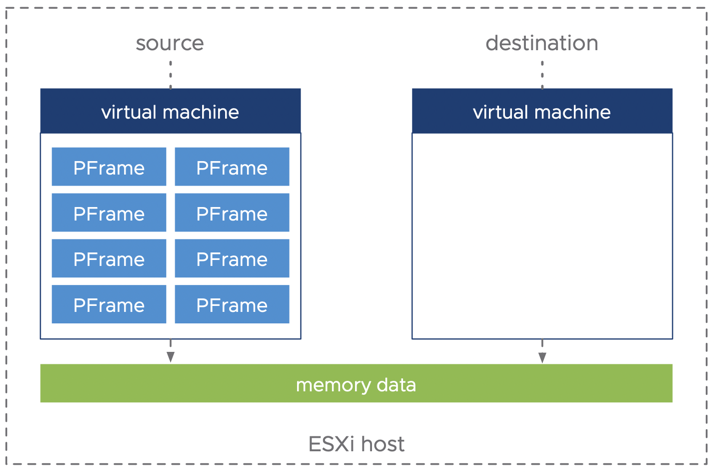

During an FSR process, most of the time is spent transferring the memory metadata. You can think of the memory metadata as pointers that tell the VM where its data is placed in global system memory. Memory metadata uses Page Frames (PFrames), which provide the mapping between the VM’s virtual memory and the actual Machine Page Numbers (MPNs), which identify the data in physical memory.

Because there’s no need to copy memory data, FSR just needs to copy over the metadata (PFrames) to the destination VM on the same host, telling it where to look for data in the system memory.
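A small sketch may help to picture why only the pointers need to move. The model below is an assumption made for illustration: host memory is a dictionary keyed by MPN, and the PFrame table is a plain list in which the index is the guest page number and the value is the MPN. Real PFrames carry more information than this.

```python
# Hypothetical host memory: MPN -> page contents. Nothing in here is
# copied or moved during the handover.
host_memory = {101: b"page A", 205: b"page B", 317: b"page C"}

# Hypothetical PFrame table of the source VM:
# index = guest page number, value = MPN backing that page.
source_pframes = [205, 101, 317]


def hand_over_metadata(pframes):
    """Copy only the pointers (the metadata), not the pages they point to."""
    return list(pframes)


def read_guest_page(pframes, memory, guest_page):
    """Resolve a guest page number to its contents through the PFrame table."""
    return memory[pframes[guest_page]]


if __name__ == "__main__":
    dest_pframes = hand_over_metadata(source_pframes)
    # The destination VM sees the same data through its own copy of the table.
    print(read_guest_page(dest_pframes, host_memory, 0))
```

The destination VM ends up with its own table, but both tables point at the same pages in host memory, so the amount of work scales with the number of PFrames rather than with the amount of data.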

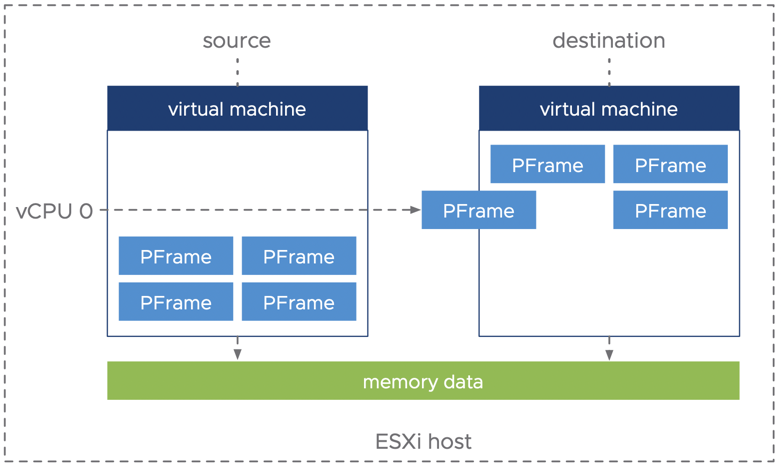

In vSphere versions prior to vSphere 7, the transfer of the memory metadata is single-threaded. Only one vCPU is claimed and used to transfer the PFrames in batches. All other vCPUs are sleeping during the metadata transfer, as the VM is briefly suspended. This method is okay for smaller VMs, but could introduce a challenge for large VMs, especially those with a large memory footprint.

The single-threaded transfer doesn’t scale with large VM configurations, potentially resulting in switch-over times of more than 1 second. So, as with vMotion in vSphere 7, there’s a need to lower the switch-over time (also known as stun time) when using FSR.

Improved FSR in vSphere 7

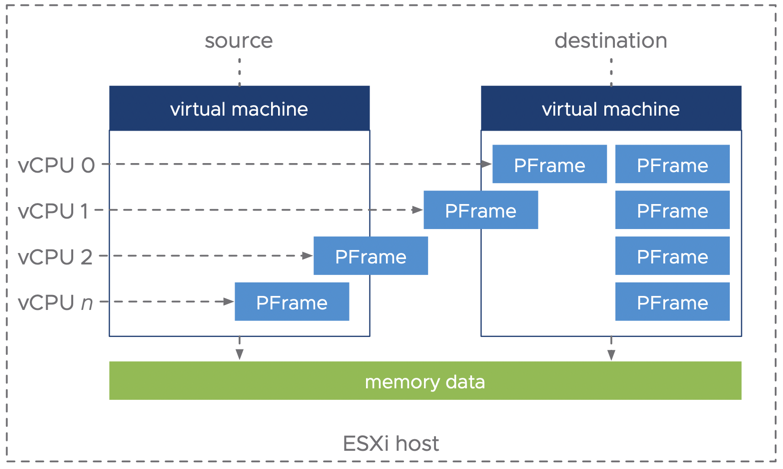

So, why not use all of the VM’s vCPUs to transfer the PFrames? Remember that the VM is suspended during the metadata transfer, so there’s no point in letting the vCPUs stay in an idle state. Let’s put them to work to speed up the transfer of the PFrames. The VM’s memory is divided into segments and each vCPU is assigned a memory metadata segment to transfer.

In vSphere 7, the FSR logic moved from a serialized method to a distributed model. The PFrames transfer is now distributed over all vCPUs that are configured for the VM, and the transfers run in parallel.
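The idea of one segment per vCPU can be sketched in a few lines of Python. This is a toy under stated assumptions: `split_into_segments` and `transfer_parallel` are hypothetical helpers, the PFrame table is a plain list, and worker threads stand in for vCPUs. How ESXi actually sizes and schedules the segments is not described here, and Python threads will not show a real speedup for this kind of work, so the sketch only illustrates the partitioning.

```python
from concurrent.futures import ThreadPoolExecutor


def split_into_segments(num_pframes, num_vcpus):
    """Split [0, num_pframes) into num_vcpus contiguous, near-equal ranges."""
    base, extra = divmod(num_pframes, num_vcpus)
    segments = []
    start = 0
    for i in range(num_vcpus):
        # The first 'extra' segments take one additional PFrame each.
        size = base + (1 if i < extra else 0)
        segments.append((start, start + size))
        start += size
    return segments


def transfer_parallel(source_pframes, num_vcpus):
    """Copy the PFrame table with one worker per (simulated) vCPU."""
    dest_pframes = [None] * len(source_pframes)

    def copy_segment(bounds):
        start, end = bounds
        # Each worker writes only its own, non-overlapping slice.
        dest_pframes[start:end] = source_pframes[start:end]

    segments = split_into_segments(len(source_pframes), num_vcpus)
    with ThreadPoolExecutor(max_workers=num_vcpus) as pool:
        # Consume the iterator so any worker exception is raised here.
        list(pool.map(copy_segment, segments))
    return dest_pframes


if __name__ == "__main__":
    print(split_into_segments(10, 4))
    table = list(range(1000))
    print(transfer_parallel(table, 48) == table)
```

Because the segments never overlap, the workers need no locking among themselves. The serialized method from earlier versions corresponds to calling `transfer_parallel` with a single worker.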

The Effect of Leveraging all vCPUs

The net result of leveraging all the vCPUs for memory metadata transfers during an FSR process is drastically lowered switch-over times. The performance team tested multiple VM configurations and workloads with Storage vMotion and Hot Add. Using a VM configured with 1 TB of memory and 48 vCPUs, they measured a switch-over time cut from 7.7 seconds using 1 vCPU for metadata transfer to 500 milliseconds when utilizing all vCPUs!
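To put those numbers in perspective, here is some back-of-the-envelope arithmetic. The measured times and the VM configuration come from the test above. The 4 KiB page size and the reading of 1 TB as 2^40 bytes are assumptions made for this estimate, as is the idea of one PFrame per page.

```python
# Figures reported for the test VM.
SERIAL_SECONDS = 7.7     # switch-over time with 1 vCPU transferring metadata
PARALLEL_SECONDS = 0.5   # switch-over time with all vCPUs transferring
NUM_VCPUS = 48

# Assumptions for this estimate, not stated in the test results.
MEMORY_BYTES = 2 ** 40   # "1 TB" read as 1 TiB
PAGE_BYTES = 4 * 1024    # 4 KiB pages, one PFrame per page


def speedup(serial, parallel):
    """How many times shorter the switch-over became."""
    return serial / parallel


def pframes_to_transfer(memory_bytes, page_bytes):
    """Number of PFrames needed to describe the VM's memory."""
    return memory_bytes // page_bytes


if __name__ == "__main__":
    factor = speedup(SERIAL_SECONDS, PARALLEL_SECONDS)
    total = pframes_to_transfer(MEMORY_BYTES, PAGE_BYTES)
    print(f"speedup: {factor:.1f}x")
    print(f"speedup per vCPU used: {factor / NUM_VCPUS:.2f}")
    print(f"PFrames in total: {total:,}")
    print(f"PFrames per vCPU: {total // NUM_VCPUS:,}")
```

Under these assumptions the switch-over is roughly 15 times shorter, not 48 times. That is to be expected, since the switch-over time also includes work that is not spread over the vCPUs, such as transferring the device state.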

The FSR improvements strongly depend on VM sizing and workload characteristics. With vSphere versions up to 6.7, staying within the 1-second SLA could be a challenge when using Storage vMotion or Hot Add operations. Running vSphere 7, customers can again feel comfortable using these capabilities because of the lowered switch-over times!