VCF 4.1 Proof Of Concept Guide

POC Guide Overview

This document is a simple guide for proofs of concept involving VMware Cloud Foundation 4.1 and the associated infrastructure tasks needed to configure and manage software-defined infrastructure.

This document is intended for data center cloud administrators who deploy a VMware Cloud Foundation system in their organization's data center. The information in this guide is written for experienced data center cloud administrators.

This document is not a replacement for VMware product documentation. It should be thought of as a guide that augments existing guidance throughout the lifecycle of a proof-of-concept exercise.

Official product documentation (https://docs.vmware.com/allproducts.html) supersedes the guidance documented here wherever the two diverge.

Before relying on any statement in this document, cross-check support capabilities, minimums, and maximums against the official VMware technical product documentation at https://docs.vmware.com/en/VMware-Cloud-Foundation/index.html and https://configmax.vmware.com/, which may contain more recent updates or amendments.

This document is divided into distinct sections so that it can be consumed according to use case and proof-of-concept scenario. It aims to offer a structured approach to evaluating VCF features.

VCF 4.1 What’s New / Overview

VCF 4.1 BOM updates and new features

Section 1 Planning / Day 0

This section covers guidance and requirements for VCF bring up and considerations such as external datacenter resources and dependencies.

Section 2 VCF Infrastructure Deployment / Day 1

Deployment of VCF infrastructure components such as Management, Workload domains and NSX-T Edge Clusters.

Section 3 vRealize Suite Install

Guidelines to deploy SDDC components such as vRealize Operations and vRealize Log Insight.

Section 4 Solution Deployment guidelines.

Proof of Concept guidelines to deploy SDDC infrastructure using specific features such as vSphere with Tanzu, Stretched Cluster, vVOLs, or vLCM.

Section 5: Day 2 Use cases

NSX-T Network validation.

Certificate Management.

Password Management

VCF Upgrades

vRealize Suite Monitoring and Alerting configuration and management

Appendices

NSX-T overview

VCF 4.1 What’s New / Overview

Cloud Foundation Bill of Materials (BOM)

For more information, please refer to the release notes in case of updates or amendments.

The table below lists the full BOM of VCF 4.1.

| Software Component | Version | Date | Build Number |
|---|---|---|---|
| Cloud Builder VM | 4.1.0.0 | 06 OCT 2020 | 16961769 |
| SDDC Manager | 4.1.0.0 | 06 OCT 2020 | 16961769 |
| VMware vCenter Server Appliance | 7.0 Update 1 | 06 OCT 2020 | 16860138 |
| VMware ESXi | 7.0 Update 1 | 06 OCT 2020 | 16850804 |
| VMware vSAN | 7.0 Update 1 | 06 OCT 2020 | 16850804 |
| VMware NSX-T Data Center | 3.0.2 | 17 SEP 2020 | 16887200 |
| VMware vRealize Suite Lifecycle Manager | 8.1 Patch 1 | 25 AUG 2020 | 16776528 |
| Workspace ONE Access | 3.3.2 | 14 APR 2020 | 15951611 |
| vRealize Automation | 8.1 Patch 2 | 28 JUL 2020 | 16633378 |
| vRealize Log Insight | 8.1.1 | 28 MAY 2020 | 16281169 |
| vRealize Log Insight Content Pack for NSX-T | 3.9.0 | n/a | n/a |
| vRealize Log Insight Content Pack for Linux | 2.1 | n/a | n/a |
| vRealize Log Insight Content Pack for Linux - Systemd | 1.0 | n/a | n/a |
| vRealize Log Insight Content Pack for vRealize Suite Lifecycle Manager 8.0.1+ | 1.0 | n/a | n/a |
| vRealize Log Insight Content Pack for VMware Identity Manager | 2.0 | n/a | n/a |
| vRealize Operations Manager | 8.1.1 | 09 JUL 2020 | 16522874 |
| vRealize Operations Management Pack for VMware Identity Manager | 1.1 | n/a | n/a |

VCF 4.1 Summary update

For more information, please review:

- Release Notes

- VCF 4.1 Blog: https://blogs.vmware.com/cloud-foundation/2020/09/15/whats-new-with-vmware-cloud-foundation-4-1/

- VCF Remote Clusters: Extends VCF capabilities to ROBO and edge sites.

- ESXi Cluster-Level and Parallel Upgrades: Enables administrators to update vSphere software on multiple clusters in the management domain or a workload domain in parallel.

- NSX-T Data Center Cluster-Level and Parallel Upgrades: Enables administrators to upgrade all Edge clusters in parallel, and then all host clusters in parallel.

- Support for ESXi hosts with external CA-signed certificates: VCF supports APIs to perform bring-up of hosts with certificates generated by an external CA.

- Read-only access and local accounts: Cloud admins can now create “Viewer” users that have read-only access to VCF. They can also create a local account for use in break-glass scenarios where a remote identity provider is unreachable.

- Backup Enhancements: SDDC Manager backup-recovery workflows and APIs have been improved to add capabilities such as backup management, backup scheduling, retention policy, on-demand backup, and automatic retries on failure.

- vVOLs as Principal Storage in VCF Workload Domains: VMware Cloud Foundation now supports vVOLs, providing a common storage management framework for external storage and automation for pre-defined storage, including volume management and provisioning.

- VMware Skyline Support for VMware Cloud Foundation: VMware Skyline brings proactive intelligence to VMware Cloud Foundation by identifying management and workload domains, and proactively surfacing VMware Cloud Foundation solution findings.

- Object Rename Support: Customers can now change object names after initial deployment.

- vCLS Support: The vSphere Clustering Service (vCLS) is a new capability introduced in the vSphere 7 Update 1 release and included in the VCF 4.1 release.

- Increased pNIC Support: VI workload domains can have hosts with multiple pNICs and vSphere Distributed Switches (vDS) that can scale up to the vSphere maximums supported in the vSphere version included in the BOM.

Section 1 VCF Deployment Planning & Bring Up / Day 0

A successful VCF POC depends on a considerable number of external requirements being met before deployment begins.

The key to a successful plan is to use a reasonable hardware configuration that resembles what you plan to use in production.

Physical Network and External services

Certain prerequisites, such as routable VLANs, MTU settings, and DNS and DHCP services, must be in place. In summary:

- Top of Rack switches are configured. Each host and NIC in the management domain must have the same network configuration.

- IP ranges, subnet mask, and a reliable L3 (default) gateway for each VLAN.

- Note that it is not strictly required to have an L3 gateway for vSAN; however, Cloud Builder validation will post a warning that validation of the L3 gateway failed.

- At minimum, an MTU of 1600 is required on the NSX-T Host Overlay (Host TEP) and NSX-T Edge Overlay (Edge TEP) VLANs end-to-end through your environment.

- VLANs for management, vMotion, vSAN, NSX-T Host Overlay (Host TEP), NSX-T Edge Overlay (Edge TEP), and NSX uplink networks are created and tagged to all host ports. Each VLAN is 802.1q tagged. The NSX-T Host Overlay (Host TEP) VLAN and the NSX-T Edge Overlay (Edge TEP) VLAN are routed to each other.

- Management IP is VLAN-backed and configured on the hosts. vMotion and vSAN IP ranges are configured during the bring-up process.

- DHCP with an appropriate scope size (one IP per physical NIC per host) is configured for the NSX Host Overlay (Host TEP) VLAN.
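The DHCP sizing rule in the list above (one IP per physical NIC per host) reduces to simple arithmetic. The following is a minimal sketch of that calculation; the function name and the optional `spare` headroom parameter are illustrative assumptions, not part of any VCF tooling.

```python
def host_tep_scope_size(host_count: int, pnics_per_host: int, spare: int = 0) -> int:
    """Return the number of DHCP leases needed on the Host TEP VLAN.

    The guide asks for one address per physical NIC per host. `spare` is a
    hypothetical allowance for hosts that may be added later.
    """
    if host_count < 1 or pnics_per_host < 1 or spare < 0:
        raise ValueError("host and NIC counts must be >= 1 and spare must be >= 0")
    return host_count * pnics_per_host + spare


if __name__ == "__main__":
    # Four management hosts with two physical NICs each.
    print(host_tep_scope_size(4, 2))
```

Sizing the scope with some headroom avoids having to revisit the DHCP server when hosts are added to the domain later.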

Application Virtual Networks (AVNs) are optional to configure but are highly recommended when evaluating vRealize Suite and vSphere with Tanzu.

To use Application Virtual Networks (AVNs) for vRealize Suite components you also need:

- Top of Rack (ToR) switches configured with the Border Gateway Protocol (BGP), including Autonomous System (AS) numbers and BGP neighbor passwords, and interfaces to connect with NSX-T Edge nodes.

- Two VLANs configured and presented to all ESXi hosts to support the uplink configuration between the (ToR) switches and NSX-T Edge nodes for outbound communication.

Physical Hardware and ESXi Hosts

Refer to the VMware vSAN Design and Sizing Guide for information on design configurations and considerations when deploying vSAN. Be sure the hardware you plan to use is listed on the VMware Compatibility Guide (VCG). BIOS updates, and firmware and device driver versions should be checked to make sure these aspects are updated according to the VCG.

- Identical hardware (CPU, Memory, NICs, SSD/HDD, and so on) within the management cluster is highly recommended. Refer to vSAN documentation for minimum configuration.

- Hardware and firmware (including HBA and BIOS) is configured for vSAN.

- Physical hardware health status is "healthy" without any errors.

- ESXi is freshly installed on each host.

- Each ESXi host is running a non-expired license. The bring-up process will configure the permanent license.

Software and Licenses

- The ESXi version matches the build listed in the Cloud Foundation Bill of Materials (BOM). See the VMware Cloud Foundation Release Notes for the BOM.

- VCF Cloud Builder OVA

- Adequate licenses for the VCF components and for the number of workload domains planned for deployment

Further online resources

- VMware Cloud Foundation Deployment guide - https://docs.vmware.com/en/VMware-Cloud-Foundation/4.1/com.vmware.vcf.ovdeploy.doc_41/GUID-F2DCF1B2-4EF6-444E-80BA-8F529A6D0725.html

- Planning and Preparation Workbook https://docs.vmware.com/en/VMware-Validated-Design/6.1/vmware-validated-design-61-vmware-cloud-foundation-41-sddc-planning-and-preparation-workbook.zip

This workbook supports both VMware Cloud Foundation 4.1 and VMware Validated Design 6.1. It is a Microsoft Excel workbook that helps you gather the inputs required for deploying Cloud Foundation (known as bring-up), VI workload domains, Workload Management, and vRealize Suite Lifecycle Manager. It also provides guidance on the requirements for additional components that you can add to your Cloud Foundation environment, such as vRealize Log Insight, vRealize Operations Manager, vRealize Automation, and VMware Workspace ONE Access.

- Cloud Foundation Tech Zone collateral and interactive demos https://core.vmware.com/

- Parameter worksheet and bring up process https://core.vmware.com/deploying-cloud-foundation

- Deploying vRealize Suite on VMware Cloud Foundation https://core.vmware.com/deploying-vrealize-suite-vmware-cloud-foundation

- Step-by-step overview of enabling Kubernetes on VMware Cloud Foundation https://core.vmware.com/delivering-developer-ready-infrastructure#step_by_step_guide_to_deploying_developer_ready_infrastructure_on_cloud_foundation_isim_based_demos

Management Workload Domain Overview

SDDC Manager and other vSphere, vSAN, and NSX components that form the core of VMware Cloud Foundation are initially deployed to an environment known as the Management workload domain. This is a special-purpose grouping of systems devoted to managing the VMware Cloud Foundation infrastructure.

Each Cloud Foundation deployment begins by establishing the Management workload domain, which initially contains the following components:

- SDDC Manager

- vCenter Server with integrated Platform Services Controller

- vSAN

- NSX-T

Management Workload Domain Logical View:

In addition to the Cloud Foundation components that are provisioned during the bring-up process, additional virtual machine workloads may be deployed to the Management workload domain if required. These optional workloads may include third party virtual appliances or other virtual machine infrastructure workloads necessary to support a particular Cloud Foundation instance.

The vCenter with internal Platform Service Controller instance deployed to the Management workload domain is responsible for SSO authentication services for all other workload domains and vSphere clusters that are subsequently deployed after the initial Cloud Foundation bring-up is completed.

Additional details regarding the configuration and usage of Cloud Foundation workload domains may be found in the following section of this guide, Workload Domain Creation.

Prerequisites and Preparation

VMware Cloud Foundation (VCF) deployment is orchestrated by the Cloud Builder appliance, which builds and configures VCF components. To deploy VCF, a parameter file (in the form of an Excel workbook or JSON file) is used to set deployment parameters such as host name, IP address, and initial passwords. Detailed descriptions of VCF components may be found in the VCF Architecture and Deployment Guide.

The Cloud Builder appliance should be deployed on an existing vSphere cluster, a standalone host, or a laptop (which requires VMware Workstation or VMware Fusion). The Cloud Builder appliance should have network access to the Management Network segment defined in the parameter file to enable connectivity to the ESXi hosts composing the management workload domain.

There are specific requirements that need to be fulfilled before the automated build process, or ‘bring-up’, may begin. For instance, DNS records for the hosts, vCenter, NSX Manager, etc. should already be configured. Before starting, download the parameter spreadsheet to support planning and configuration of deployment prerequisites.

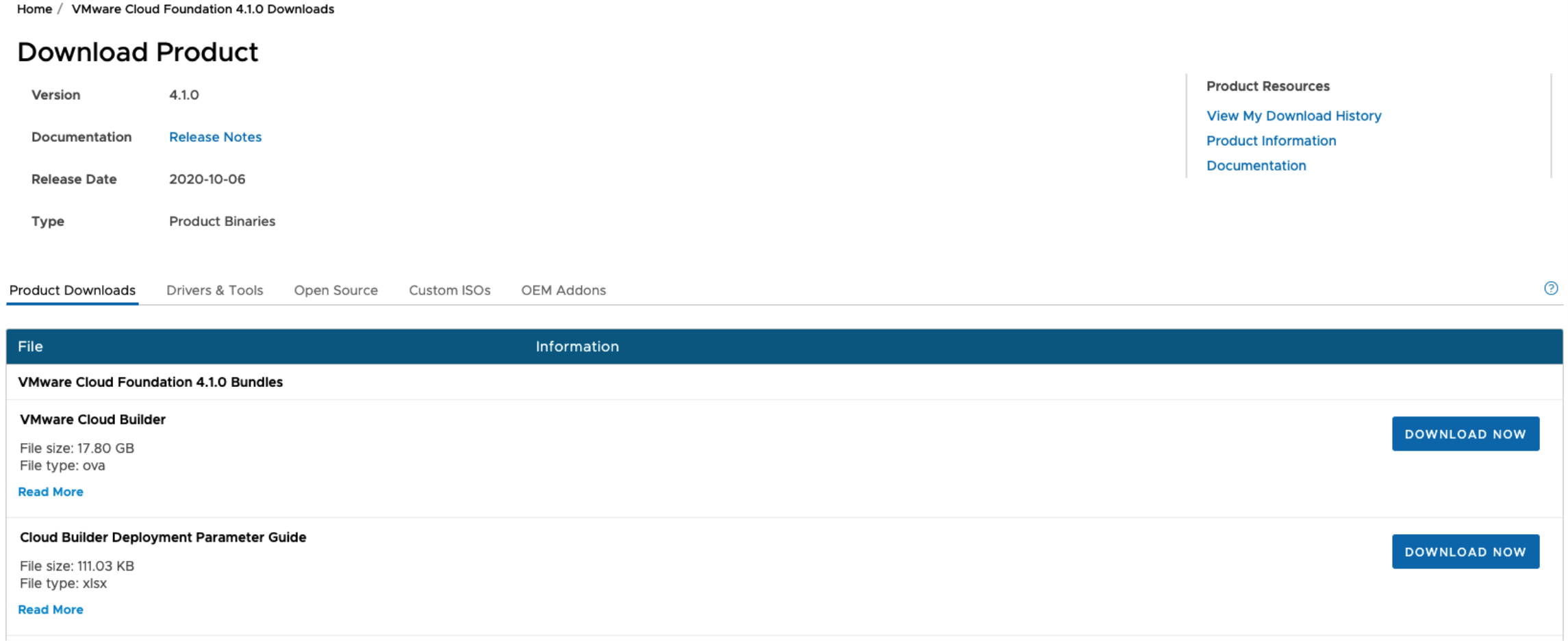

The OVA for Cloud Builder appliance and parameter workbook (Cloud Builder Deployment Parameter Guide) for version 4.1 can be found at:

https://my.vmware.com/web/vmware/downloads/info/slug/datacenter_cloud_infrastructure/vmware_cloud_foundation/4_1_0

Alternatively, the parameter workbook may also be downloaded from the Cloud Builder appliance after it has been deployed.

Once the workbook has been completed, the file should be uploaded to the appliance, whereupon a script converts the Excel workbook to a JSON file. This JSON file is then validated and used in the bring-up process.

The VMware Cloud Foundation YouTube channel is a useful resource to reference alongside this guide.

Deploy Cloud Builder Appliance

Download the Cloud Builder appliance and import the OVA. Once the OVA has been imported, complete the appliance configuration:

The ‘Deployment Architecture’ should be set to ‘vcf’ (default).

Enter credentials for the admin and root accounts, the hostname and IP address of the appliance, and the gateway, DNS, and NTP details.

Bring-Up Parameters

Parameters required for configuring VCF during the bring-up process are entered into an Excel workbook, which may be downloaded from the Cloud Builder download page or from the appliance itself. Each version of VCF has a specific version of the parameter workbook associated with it.

There are several worksheets within the Excel workbook. Certain fields are subject to validation based on inputs elsewhere in the workbook. Care should be taken not to copy/paste cells, or otherwise alter the structure of the spreadsheet.

‘Prerequisite Checklist’: This worksheet lists deployment prerequisites. Mark the ‘Status’ column for each row ‘Verified’ when each prerequisite is satisfied.

‘Management Workloads’: Shows the VMs deployed on the management domain. Only licenses (column L) should be populated on this worksheet. For the current version of VCF (4.1), leave the SDDC Manager Appliance license empty. Entering information into the ‘Management Workload Domain Calculations’ section will help in understanding resource usage after deployment.

‘Users and Groups’: Enter a password for each service account. Ensure that each password entered meets the cell validation requirements.

‘Hosts and Networks’: VLANs, IP addresses/gateways, and management workload domain hostnames should be entered in this worksheet. If the ‘Validate ESXi Thumbprints’ option is set to ‘No’, then the respective host SSH fingerprints will be ignored. Any native VLAN should be marked with a zero (0). In many cases, and especially for POC deployments, the vSAN and vMotion networks may be non-routable and not have a gateway. In this case, enter a gateway value within the respective subnet range, but not used by any device (this will produce a warning on bring-up which may be ignored).
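The placeholder-gateway guidance above (a value inside the subnet range) can be sanity-checked before the workbook is uploaded. The sketch below uses Python's standard `ipaddress` module; the function name and the sample addresses are illustrative assumptions, and the check cannot tell whether an address is already in use by a device, which still has to be confirmed on the network.

```python
import ipaddress


def gateway_in_subnet(gateway: str, cidr: str) -> bool:
    """Return True if `gateway` is an assignable host address inside `cidr`."""
    network = ipaddress.ip_network(cidr, strict=False)
    address = ipaddress.ip_address(gateway)
    # The network and broadcast addresses cannot be used as a gateway.
    reserved = (network.network_address, network.broadcast_address)
    return address in network and address not in reserved


if __name__ == "__main__":
    # Hypothetical non-routable vSAN subnet for a POC.
    print(gateway_in_subnet("172.16.12.253", "172.16.12.0/24"))
    print(gateway_in_subnet("172.16.13.1", "172.16.12.0/24"))
```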

Note: The MTU used here is not reflective of a production environment; it was chosen because of internal lab restrictions when creating this document. Supported MTU sizes for NSX-T based traffic are 1600 - 9000.

‘Deploy Parameters’: This worksheet contains the bulk of the information required. Here, infrastructure dependencies such as DNS and NTP are specified, along with hostnames and IP addresses of management components.

NSX-T Details: These are covered in more detail in the Application Virtual Networks section later in this document.

SDDC Manager Details

Specifications related to host network configurations, as well as object names within the vSphere hierarchy are also specified within this worksheet.

To view an interactive demonstration of this process with step-by-step instructions, please visit Deployment Parameters Worksheet in the VCF resource library on core.vmware.com.

Network and VLAN Configuration

There are several VLANs that must be configured for the management domain:

- Management (for hosts and management VMs)

- vMotion

- vSAN

- NSX-T Host Overlay

- NSX-T Edge Overlay

- NSX-T Edge Uplinks (2)

In addition, for VCF version 4.x, two uplink VLANs are required for BGP peering between the NSX-T Edge VMs and the top of rack switch (see below).

For initial host bring-up, it is important to note that the default ‘VM Network’ port group should be on the same VLAN as the Management port group. The Cloud Builder appliance and SDDC Manager should be deployed to the same VLAN.

Jumbo frames are required for NSX / VTEP (MTU of at least 1600) and recommended for other VLANs (MTU 9000). Configure the network infrastructure to facilitate frames of 9000 bytes.

Note: In the above example, the VLAN was marked zero (0) due to internal lab restrictions. As mentioned previously, any native VLAN should be marked with a zero (0).

Also note that the MTU used here is not reflective of a production environment; it was chosen because of internal lab restrictions when creating this document. Supported MTU sizes for NSX-T based traffic are 1600 - 9000.
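The MTU guidance in this section (at least 1600 on the NSX-T overlay VLANs, 9000 recommended elsewhere) can be applied to a planned VLAN layout before bring-up. The following is a minimal sketch under those two stated thresholds; the VLAN names and the plan dictionary are illustrative assumptions, not fields from the parameter workbook.

```python
from typing import Dict, List, Set

OVERLAY_MIN_MTU = 1600   # minimum stated in this guide for NSX-T overlay VLANs
RECOMMENDED_MTU = 9000   # recommended in this guide for the other VLANs


def check_mtu(plan: Dict[str, int], overlay_vlans: Set[str]) -> List[str]:
    """Return one finding per VLAN whose planned MTU falls short."""
    findings = []
    for name, mtu in plan.items():
        if name in overlay_vlans and mtu < OVERLAY_MIN_MTU:
            findings.append(f"ERROR: {name} MTU {mtu} is below {OVERLAY_MIN_MTU}")
        elif mtu < RECOMMENDED_MTU:
            findings.append(f"NOTE: {name} MTU {mtu} is below the recommended {RECOMMENDED_MTU}")
    return findings


if __name__ == "__main__":
    # Hypothetical lab plan; names are placeholders.
    plan = {"host_overlay": 1500, "edge_overlay": 1600, "vmotion": 9000, "vsan": 1500}
    for finding in check_mtu(plan, {"host_overlay", "edge_overlay"}):
        print(finding)
```

Separating hard errors from notes mirrors the distinction the guide draws between the required overlay minimum and the jumbo-frame recommendation.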

Finally, a DHCP server is required on the Host Overlay VLAN to issue addresses on each host. If there is no DHCP server available, there will be warnings during the bring-up process. To bypass this issue for the purpose of a POC, static IP addresses may be assigned directly to the newly created VMkernel ports on each host. The bring-up process may then be resumed/restarted.

Application Virtual Networks

To support Application Virtual Networks (AVNs), BGP peering between the NSX-T Edge nodes and the upstream network switches is required for the management domain.

The diagram below shows an overview of the BGP AS setup between the two NSX-T Edges deployed with VCF and the physical top of rack switches:

Inside the rack, the two NSX-T edges form one BGP AS (autonomous system). Upstream, we connect to two separate ToR switches, each in their own BGP AS. The two uplink VLANs connect northbound from each edge to both ToRs.

The BGP configuration is defined in the parameter spreadsheet, in the 'Deploy Parameters' tab, under the section 'Application Virtual Networks'. We define the ToR details (as per the diagram above), with the respective IP address, BGP AS and password:

To complete the peering, the IP addresses of the two edges, together with their ASN, should be configured on the ToR switches as BGP neighbors.

Note: BGP Password is required and cannot be blank. NSX-T Supports a maximum of 20 characters for the BGP password.
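The BGP constraints noted above (a non-blank password of at most 20 characters) are easy to verify before entering ToR details in the workbook. The sketch below is illustrative only: the `BgpPeer` shape, the sample values, and the additional AS-number range check (the generic 32-bit BGP range, not a limit stated in this guide) are assumptions.

```python
import ipaddress
from dataclasses import dataclass
from typing import List

MAX_BGP_PASSWORD_LENGTH = 20  # NSX-T limit stated in this guide


@dataclass
class BgpPeer:
    """One top-of-rack neighbor (hypothetical shape, not the workbook schema)."""
    ip: str
    asn: int
    password: str


def validate_peer(peer: BgpPeer) -> List[str]:
    """Return a list of problems found in the peer definition."""
    problems = []
    try:
        ipaddress.ip_address(peer.ip)
    except ValueError:
        problems.append(f"{peer.ip!r} is not a valid IP address")
    # Generic 32-bit AS number range; an assumption, not taken from this guide.
    if not 1 <= peer.asn <= 4294967295:
        problems.append(f"ASN {peer.asn} is outside the 32-bit AS number range")
    if not peer.password:
        problems.append("BGP password must not be blank")
    elif len(peer.password) > MAX_BGP_PASSWORD_LENGTH:
        problems.append(f"BGP password exceeds {MAX_BGP_PASSWORD_LENGTH} characters")
    return problems


if __name__ == "__main__":
    print(validate_peer(BgpPeer("192.168.16.10", 65001, "")))
```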

The Cloud Builder appliance must be able to resolve and connect to the NSX-T edges in order to validate the BGP setup.

Note that for the purposes of a POC, virtual routers (such as Quagga or VyOS) could be used as BGP peers. In this case, make sure that northbound communication for NTP and DNS is available.

Installing ESXi Software on Cloud Foundation Servers

Hardware components should be checked to ensure they align with the VMware vSphere Compatibility Guide (VCG). Drives and storage controllers must be vSAN certified, and firmware/drivers must be aligned with those specified in the VCG. See section 4.1.1 for a full list of host requirements.

Note that VCF requires identical hardware and software configuration for each ESXi host within a given workload domain, including the Management workload domain.

ESXi should be installed on each host. Hosts must match the ESXi build number specified in the VCF Bill of Materials (BOM) for the version of VCF being deployed. Failure to do so may result in failures to upgrade ESXi hosts via SDDC Manager. It is permissible to use a custom image from a hardware vendor as long as the ESXi build number still matches the VCF BOM. The BOM may be located within the Release Notes for each version of VCF.

The release notes for VCF are located at: https://docs.vmware.com/en/VMware-Cloud-Foundation/4.1/rn/VMware-Cloud-Foundation-41-Release-Notes.html

From here, we can see that the ESXi build number should be 16850804.

Therefore, ensure the correct version and build of ESXi is installed on the hosts:

https://my.vmware.com/en/web/vmware/downloads/info/slug/datacenter_cloud_infrastructure/vmware_vsphere/7_0
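The build comparison described above can be scripted once the version string has been collected from each host. The sketch below assumes the string has the form `VMware ESXi 7.0.1 build-16850804` (for example, as collected from the ESXi shell); that input format and the function names are assumptions, while the expected build number comes from the BOM in this guide.

```python
import re
from typing import Optional

BOM_ESXI_BUILD = "16850804"  # ESXi build listed in the VCF 4.1 BOM


def esxi_build(version_string: str) -> Optional[str]:
    """Extract the build number from a string containing 'build-<digits>'."""
    match = re.search(r"build-(\d+)", version_string)
    return match.group(1) if match else None


def matches_bom(version_string: str, expected_build: str = BOM_ESXI_BUILD) -> bool:
    """Return True when the host's build equals the BOM build."""
    return esxi_build(version_string) == expected_build


if __name__ == "__main__":
    # Assumed input format; replace with the string gathered from each host.
    print(matches_bom("VMware ESXi 7.0.1 build-16850804"))
```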

After ESXi has been installed, log in to the host client on each host and ensure that:

- The login password is the same as on the parameter spreadsheet

- The correct management IP address and VLAN (as per the parameter spreadsheet) have been configured

- Only one physical adapter is connected to the Standard Switch

- No vSAN configuration is present, and all disks (other than the boot disk) have no partitions present

- NTP is configured with the IP address or hostname of the NTP server

- Both the SSH and NTP services are started and their policy is changed to ‘Start and stop with host’

Finally, ensure that the hosts are not in maintenance mode.

DNS Configuration

Every IP address and hostname combination defined in the parameter workbook (i.e., hosts, NSX Manager, vCenter, etc.) must have forward and reverse entries in DNS before bring-up.

Ensure entries are correct and accounted for before starting the bring-up process and test each DNS entry for forward and reverse lookup.

Bring-up is likely to fail if DNS is not configured correctly.

Post bring-up tasks such as creating new VI Workload domains, new clusters, adding hosts, etc. also require creating forward and reverse DNS lookup entries for associated components.
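The forward and reverse lookup test described in this section can be automated for every entry in the parameter workbook. The following is a minimal sketch using Python's standard `socket` module; the record dictionary and hostnames are hypothetical, and the resolver functions are passed in as parameters so the check can be exercised without a live DNS server.

```python
import socket
from typing import Callable, Dict, List


def check_dns(
    records: Dict[str, str],
    forward: Callable[[str], str] = socket.gethostbyname,
    reverse: Callable[[str], str] = lambda ip: socket.gethostbyaddr(ip)[0],
) -> List[str]:
    """Check each FQDN -> IP pair in both directions and return the problems."""
    problems = []
    for fqdn, expected_ip in records.items():
        # Forward lookup: the name must resolve to the planned address.
        try:
            resolved_ip = forward(fqdn)
        except OSError as exc:
            problems.append(f"{fqdn}: forward lookup failed ({exc})")
        else:
            if resolved_ip != expected_ip:
                problems.append(f"{fqdn}: resolves to {resolved_ip}, expected {expected_ip}")
        # Reverse lookup: the address must resolve back to the same name.
        try:
            resolved_name = reverse(expected_ip)
        except OSError as exc:
            problems.append(f"{expected_ip}: reverse lookup failed ({exc})")
        else:
            if resolved_name.rstrip(".").lower() != fqdn.lower():
                problems.append(f"{expected_ip}: resolves to {resolved_name}, expected {fqdn}")
    return problems


if __name__ == "__main__":
    # Hypothetical entries; use the names and addresses from your workbook.
    planned = {"sddc-manager.example.local": "10.0.0.4"}
    for problem in check_dns(planned):
        print(problem)
```

Running the same check again before creating workload domains or adding hosts covers the post bring-up tasks mentioned above.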

SDDC Bring-Up

Once each host has been configured, DNS entries confirmed, and networks set up, verify that the parameter workbook is complete, then begin the bring-up process.

Deploy and power on the Cloud Builder appliance. If configured correctly, the appliance will boot to a console displaying the IP address of the appliance:

To start the bring-up process, navigate to the Cloud Builder in a web browser and log in with the credentials that were provided during the OVA import.

Select ‘VMware Cloud Foundation’ as the platform.

Next, review the bring-up checklist to ensure all steps have been completed:

On the next page, we are given the option to download the parameter spreadsheet and upload a completed file for validation. If needed, download the Deployment Parameter Spreadsheet.

After the spreadsheet has been completed, upload it to Cloud Builder.

Introduced in VCF 3.9.1, Application Virtual Networks (AVNs) are the network foundation for supporting workload mobility in applications such as VMware vRealize Automation, VMware vRealize Operations Manager, and VMware vRealize Orchestrator. VMware recommends enabling AVNs from the beginning: configuring AVNs later is possible, but it is a manual process.

The configuration for AVNs can be found in the deployment spreadsheet:

Once the parameter spreadsheet has been uploaded, click on ‘Next’ to begin the validation process.

Once the process has completed, review any errors and warnings. Pay close attention to any password, DNS, or network warnings (note that in many cases, especially for POCs, both vSAN and vMotion networks may not be routable – and therefore the gateway for that network may show as unreachable).

Once satisfied that the issues have been addressed, click Next:

Click on “Deploy SDDC” to begin the deployment process:

During the bring-up process, periodically monitor the running tasks. Filter for ‘In-progress’ to see the current task. The deployment of VCF usually completes in 2-4 hours:

To monitor progress with greater visibility, use tail to display the bring-up logs on the Cloud Builder appliance: open an SSH session to the appliance and log in using the admin account. Run the command below to tail the bring-up logs. Note that there will be a considerable number of messages:

tail -fqn0 /var/log/vmware/vcf/bringup/* | grep -v "handling get all"

It may also be useful to log in to the deployed vCenter instance (check the status messages to determine when it is available) to monitor bring-up progress.

To view an interactive demonstration of this process with step-by-step instructions, please visit VCF4 Bringup without AVN or VCF4 Bringup with AVN in the VCF resource library.

Once all tasks have been finished, the appliance will indicate that the SDDC setup has been successfully completed:

Bring-up is complete, and the Cloud Builder appliance may be powered off.

Post Bring-Up Health Check

After the bring-up process has finished, log in to SDDC Manager. Upon login, a dashboard shows an overview of the VCF environment.

All VCF management activities are accomplished through SDDC Manager – no configuration changes should be made to any of the deployed components, including vCenter.

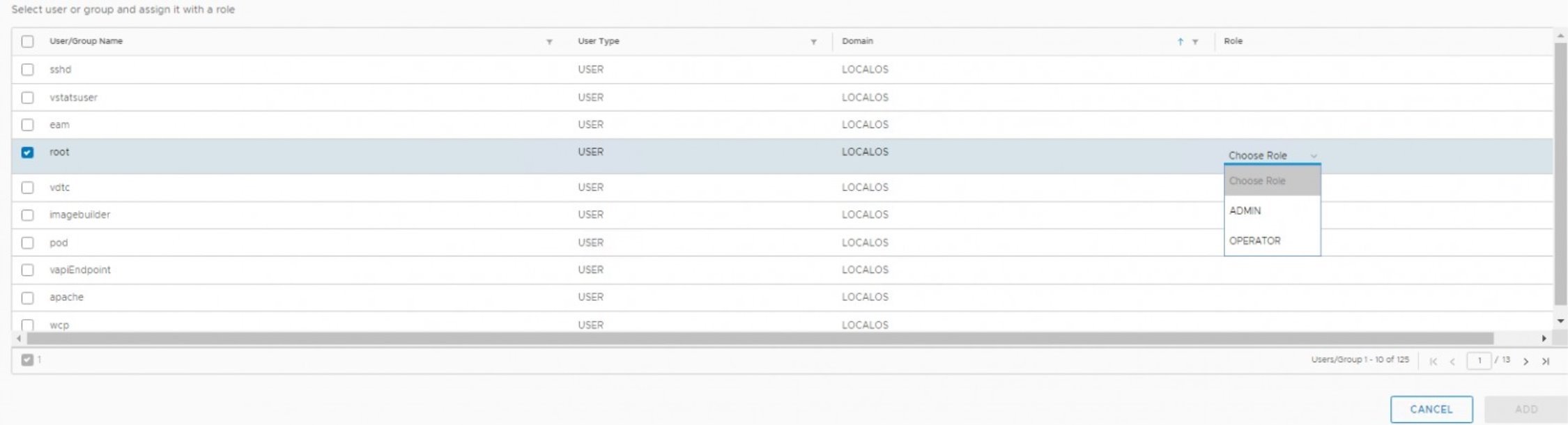

The ‘Users’ panel on the left of the interface shows a list of users inherited from vCenter. To add a user or group, click on ‘+ User or Group’.

As such, identity sources from Active Directory, LDAP, and OpenLDAP added to vCenter will appear here. Note that there are three roles defined in SDDC Manager: ADMIN, OPERATOR, and VIEWER.

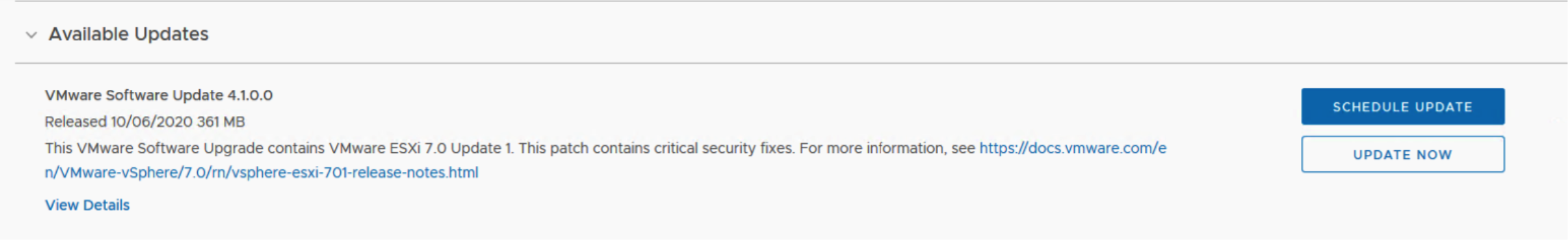

SDDC Manager: Repository Settings

Once SDDC Manager is set up, users are required to enter ‘My VMware’ account details to enable software bundle downloads. This may require configuration of a proxy in some environments.

Navigate to the ‘Repository Settings’ panel on the left of the interface and enter your My VMware account details:

Once bundles are available to download, the ‘Bundles’ panel will populate:

See the section on ‘LCM Management’ for further information on managing bundles.

SDDC Manager: Backup Configuration

It is recommended that the NSX managers are backed up to an external destination (currently SFTP is supported). Navigate to ‘Backup Configuration’ on the panel on the left and click on ‘Register External’:

Enter the IP address, port, user credentials, etc. for the external destination:

Section 2 VCF Infrastructure Deployment / Day 1.

Workload Domain Creation

Workload Domain Overview

In VMware Cloud Foundation, a “workload domain” (or WLD) is a policy-based resource container with specific availability and performance attributes that combines compute (vSphere), storage (vSAN, NFS, VMFS or vVOLs), and networking (NSX-T) into a single consumable entity. Each workload domain may be created, expanded, and deleted as part of the SDDC lifecycle operations, and may contain one or more clusters of physical hosts.

Every Cloud Foundation deployment begins with provisioning a management workload domain, which hosts SDDC components necessary for Cloud Foundation to function. After the management workload domain is successfully deployed, SDDC Manager may be used to deploy additional Virtual Infrastructure (VI) workload domains to host VM and container workloads. Each VI workload domain is managed by a corresponding vCenter instance, which resides within the VCF management domain; other management-related workloads associated with each workload domain instance may also be deployed within the management domain.

Cloud Foundation uses and is validated against vSAN, NFS v3, and VMFS on FC for principal storage. The management domain requires vSAN for principal storage. You can use vSAN, NFS v3, or VMFS on FC for principal storage with VI workload domains. The type of principal storage used by the initial vSphere cluster in a VI workload domain is defined when the VI workload domain is created. For VI workload domains, you can add vSphere clusters that use a different type of principal storage than the type selected for the initial cluster when the VI workload domain was created. After a vSphere cluster has been deployed you cannot change to another storage type.

Each VCF workload domain requires a minimum of three (3) hosts. Exact requirements vary depending on the workload domain type the host resides in. See the table below for details.

| Component | Requirements |
|---|---|
| Servers | • For vSAN-backed VI workload domains, three (3) compatible vSAN ReadyNodes are required. For information about compatible vSAN ReadyNodes, see the VMware Compatibility Guide. • For NFS-backed workload domains, three (3) servers compatible with the vSphere version included with the Cloud Foundation BOM are required. For information about the BOM, see the Cloud Foundation Release Notes. For compatible servers, see the VMware Compatibility Guide. • For VMFS on Fibre Channel backed workload domains, three (3) servers compatible with the vSphere version included with the Cloud Foundation BOM are required. For information about the BOM, see the Cloud Foundation Release Notes. In addition, the servers must have supported Fibre Channel (FC) cards (Host Bus Adapters) and drivers installed and configured. For compatible servers and Fibre Channel cards, see the VMware Compatibility Guide. Servers within a cluster must be of the same model and type. |
| CPU, Memory, and Storage | • For vSAN-backed VI workload domains, supported vSAN configurations are required. • For NFS-backed VI workload domains, configurations must be compatible with the vSphere version included with the Cloud Foundation BOM. For more information about the BOM, see the Cloud Foundation Release Notes. • For VMFS on Fibre Channel backed workload domains, configurations must be compatible with the vSphere version included with the Cloud Foundation BOM. For information about the BOM, see the Cloud Foundation Release Notes. |
| NICs | • Two 10GbE (or faster) NICs. Must be IOVP certified. • (Optional) One 1GbE BMC NIC |

In this proof-of-concept guide, we will focus on configuration of workload domains with vSAN-backed storage. For configuration of NFS or FC-backed storage, please consult the Cloud Foundation documentation in conjunction with documentation from the NFS or FC storage array vendor.

- To commission a host in SDDC manager, navigate to the Inventory > Hosts view, and select ‘Commission Hosts’ at the top right of the user interface.

- Verify that all host configuration requirements have been met, then click ‘Proceed’.

- On the next screen, add one or more hosts to be commissioned. These may be added via the GUI interface, or alternatively may be added through a bulk import process. To add hosts via the GUI, ensure the ‘Add new’ radio button has been selected, and fill in the form. Then, click ‘Add’.

- Alternatively, to bulk import hosts, click the ‘JSON’ hyperlink to download a JSON template for entering host information. After entering host details into the .JSON file, save it locally and select the ‘Import’ radio button. Then, click ‘Browse’ to select the .JSON file, and click ‘Upload’ at the lower right to upload the file to SDDC Manager.

- When all hosts for commissioning have been added, confirm the host fingerprints: for each host in the ‘Hosts Added’ table, click the grey circle with a checkmark beside the fingerprint in the ‘Confirm Fingerprint’ column. When the circles turn green, click the ‘Validate All’ button located near the upper right corner of the table.

- After clicking ‘Validate All’, wait for the host validation process to complete. This may take some time. When the validation process completes, verify that all hosts have validated successfully, then click ‘Next’ to advance the wizard. On the final screen of the wizard, review the details for each host, then click ‘Commission’ to complete the process.
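For the bulk import path described above, the host entries can be generated rather than typed by hand. The following is a minimal sketch only: the key names (`hostsSpec`, `hostfqdn`, `username`, `password`, `storageType`, `networkPoolName`), the storage type values, and the sample host and pool names are assumptions for illustration. The JSON template downloaded from the Commission Hosts wizard is the authoritative schema, so align the keys with it before importing.

```python
import json

# Assumed storage type values; check them against the downloaded template.
ASSUMED_STORAGE_TYPES = {"VSAN", "NFS", "VMFS_FC"}


def build_commission_payload(fqdns, username, password, storage_type, network_pool):
    """Return a dict describing hosts to commission (hypothetical schema)."""
    if storage_type not in ASSUMED_STORAGE_TYPES:
        raise ValueError(f"unexpected storage type: {storage_type}")
    return {
        "hostsSpec": [
            {
                "hostfqdn": fqdn,
                "username": username,
                "password": password,
                "storageType": storage_type,
                "networkPoolName": network_pool,
            }
            for fqdn in fqdns
        ]
    }


if __name__ == "__main__":
    payload = build_commission_payload(
        ["esxi-05.vcf.sddc.lab", "esxi-06.vcf.sddc.lab"],  # hypothetical hosts
        "root",
        "example-password",  # placeholder, not a real credential
        "VSAN",
        "wld01-np01",  # hypothetical network pool name
    )
    print(json.dumps(payload, indent=2))
```

Generating the file from a single list of FQDNs keeps the storage type and network pool consistent across all hosts in the batch.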

To configure a new VI workload domain, a minimum of three unused vSphere hosts must be available in the Cloud Foundation inventory.

Further, the host management interfaces should be accessible by SDDC Manager, and appropriate upstream network configurations should be made to accommodate vSphere infrastructure traffic (i.e., vMotion, vSAN, NSX-T, management traffic, and any required VM traffic).

If available hosts that meet requirements are not already in the Cloud Foundation inventory, they must be added to the inventory via the Commission Hosts process. Hosts that are to be commissioned should not be associated with a vCenter and should not be a member of any cluster. Additionally, prior to commissioning, each host must meet certain configuration prerequisites:

- Hosts for vSAN-backed workload domains must be vSAN compliant and certified per the VMware Hardware Compatibility Guide. BIOS, HBA, SSD, HDD, etc. must match the VMware Hardware Compatibility Guide.

- Host has a standard virtual switch backed by two (2) physical NIC ports with a minimum 10 Gbps speed. NIC numbering should begin with vmnic0 and increase sequentially.

- Host has the drivers and firmware versions specified in the VMware Compatibility Guide.

- Host has ESXi installed on it. The host must be preinstalled with supported versions listed in the BOM. SSH and syslog are enabled on the host.

- Host is configured with a DNS server for forward and reverse lookup of its FQDN. The hostname should be the same as the FQDN.

- Management IP is configured on the first NIC port. In a multi-NIC environment, you can manually specify the NICs used via the API. For the management domain, you can configure this in the Cloud Builder bring-up JSON.

- Ensure that the host has a standard switch and the default uplinks with 10Gb speed are configured starting with traditional numbering (e.g., vmnic0) and increasing sequentially.

- Host hardware health status is healthy without any errors. All disk partitions on HDD / SSD are deleted.

- Ensure required network pool is created and available before host commissioning.

- Ensure hosts to be used for a vSAN workload domain are associated with a vSAN-enabled network pool. Ensure hosts to be used for an NFS workload domain are associated with an NFS-enabled network pool.

- Ensure hosts to be used for a VMFS on FC workload domain are associated with an NFS-enabled or vMotion-only network pool.
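The forward and reverse DNS prerequisite in the list above is a common cause of failed host validation, so it can be worth checking before commissioning. The following is a minimal sketch using only the Python standard library. The function name and the injectable `forward` and `reverse` parameters are illustrative choices, not part of any VMware tooling.

```python
import socket


def dns_consistent(fqdn, forward=socket.gethostbyname, reverse=socket.gethostbyaddr):
    """Return True when fqdn resolves to an IP whose PTR record maps back to fqdn."""
    try:
        ip = forward(fqdn)
        name, _aliases, _addresses = reverse(ip)
    except OSError:
        # socket.gaierror and socket.herror are both subclasses of OSError.
        return False
    return name.rstrip(".").lower() == fqdn.rstrip(".").lower()


if __name__ == "__main__":
    # Hypothetical host names; replace with the hosts to be commissioned.
    for host in ["esxi-05.vcf.sddc.lab", "esxi-06.vcf.sddc.lab"]:
        print(host, "OK" if dns_consistent(host) else "CHECK DNS")
```

Run it from a machine that uses the same DNS servers as SDDC Manager, otherwise the result may not reflect what the validation step sees.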

Workload Domain Creation Steps

- To create a VI workload domain, navigate to the Workload Domains inventory view. Then, at the top right of the screen, click “+Workload Domain”, then select VI – Virtual Infrastructure from the dropdown.

- Choose the storage type to use for this workload domain: vSAN, NFS, or VMFS on Fibre Channel (this cannot be changed later).

- Enter configuration details for the workload domain. Note that this information will be used to provision a new instance of vCenter. This instance’s VM resides within the Management workload domain and manages the clusters associated with its respective VI workload domain. Please ensure that valid forward and reverse DNS entries for the vCenter FQDN are configured, then click ‘Next’.

- On the next screen, choose the version of NSX to deploy, and enter the deployment parameters. Ensure that forward and reverse DNS entries for the NSX Manager FQDNs are configured, and that the correct NSX software bundles have been downloaded on SDDC Manager. Then, click ‘Next’.

- On the fourth screen in the wizard, configure the vSAN default Failures To Tolerate (FTT). Enabling the Dedupe and Compression feature for all-flash clusters is optional. Then, click ‘Next’.

- The next step requires selecting available hosts from inventory to add to the workload domain. If there are no hosts available, please follow the instructions above for commissioning hosts within SDDC Manager. VMware recommends deploying no fewer than four hosts per workload domain in order to ensure that compliance with vSAN FTT=1 policy may be maintained if a vSAN cluster host is offline. However, in cases where hosts available for the POC are limited, it is acceptable to construct a workload domain with the minimum three (3) required hosts, then later add an additional host for the purposes of demonstrating workload domain expansion functionality. For clusters supporting vSAN FTT policies greater than one (1) (i.e., FTT=2 or FTT=3), it is recommended to deploy at least one additional host above the minimum required for policy compliance. See the vSAN Design and Sizing guide for additional details. After selecting the hosts to be added, click ‘Next’.

- Now, choose licenses available within SDDC Manager for the workload domain. If no applicable licenses are present, please add them to SDDC Manager (Administration > Licensing) then click ‘Next’.

- Finally, review the deployment summary, then click ‘Finish’ to launch the deployment.
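The host-count guidance in the host selection step can be expressed as simple arithmetic. The sketch below assumes RAID-1 mirroring, where tolerating n failures needs 2n + 1 hosts, and adds the one spare host recommended above. Erasure coding policies have different minimums, so treat this as an illustration and confirm the numbers against the vSAN Design and Sizing guide.

```python
def vsan_host_counts(ftt):
    """Return (minimum, recommended) host counts for a RAID-1 mirroring policy.

    minimum     = 2 * FTT + 1 (data copies plus witness components)
    recommended = minimum + 1 (one spare host to keep policy compliance
                  while a host is offline)
    """
    if ftt not in (1, 2, 3):
        raise ValueError("FTT must be 1, 2 or 3 for mirroring")
    minimum = 2 * ftt + 1
    return minimum, minimum + 1


if __name__ == "__main__":
    for ftt in (1, 2, 3):
        minimum, recommended = vsan_host_counts(ftt)
        print(f"FTT={ftt}: minimum {minimum} hosts, recommended {recommended}")
```

For FTT=1 this gives the three-host minimum and four-host recommendation stated in the step above.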

To view an interactive demonstration of this process with step-by-step instructions, please visit Create Workload Domain (NSX-T and vSAN) in the VCF resource library on TechZone.

Review Workload Domain Components

Components deployed during the workload domain creation process may be viewed within SDDC Manager. To view these components, navigate to Inventory > Workload Domains within SDDC Manager, then click the name of the workload domain you would like to inspect.

To view an interactive walk-through of VCF SDDC components, please visit the Review Workload Domain Components demonstration https://core.vmware.com/?share=isim_demo2129 in the VCF resource center on core.vmware.com.

Expand Workload Domain Cluster

To expand the host resources available within a workload domain, the SDDC Manager interface is used to move one or more unused hosts from the SDDC Manager inventory to a workload domain cluster.

Before attempting to add additional hosts to a workload domain, verify that ‘Unassigned’ hosts are available in the SDDC Manager Inventory. If no hosts are presently ‘Unassigned’, please follow the host commissioning process to make one or more hosts available for use.

To view an interactive demonstration of this process with step-by-step instructions, please visit the Expand Cluster demonstration https://core.vmware.com/?share=isim_demo2127 in the VCF resource center on core.vmware.com.

NSX Configuration Overview: VI Workload Domain(s)

When creating a VI workload domain, NSX-T is deployed to support its networking stack. There are prerequisites for deploying NSX-T; please refer to the VCF Product Documentation for details.

A cluster of three NSX-T Manager nodes is deployed by default when an NSX-T based workload domain is created. On the workload domain page, select the Summary view.

If an NSX-T Edge Cluster is also created, it will be visible and associated to the workload domain instance of NSX-T.

Click the FQDN of the NSX-T Cluster. This will open a new browser tab and automatically log into one of the NSX-T Manager instances.

Confirm that the NSX-T management cluster is in ‘STABLE’ state. Also verify that the Cluster Connectivity for each node is ‘Up’:

To review the configured transport zones, select System > Fabric > Transport Zones.

There are two Overlay transport zones and one VLAN transport zone.

Hosts associated with this workload domain are connected to the default NSX-T overlay for the workload domain, in this case four hosts.

Select System > Fabric > Nodes, select the Host Transport Nodes tab, and choose the vCenter instance associated with the workload domain in the “Managed by” drop-down list to show all transport nodes in the cluster.

Ensure the ‘Configuration’ is set to ‘Success’ and “Node Status” is ‘Up’ for each node:

vCenter

All NSX Managers for new workload domains are deployed on the Management workload domain resource pool. From the management vCenter, go to Hosts and Clusters and expand the resource pool mgmt-rp

vSphere Networking

With previous versions of NSX-T, installation required setting up an N-VDS and migrating pNICs to it from the vDS. With NSX-T 3.0 and vSphere 7, a single vSphere Distributed Switch can be used for both NSX-T and vSphere networking. NSX-T 3.0 can run on top of the existing vDS without needing to move pNICs to an N-VDS.

Note: When NSX-T is associated with the vSphere vDS, the vDS summary page is updated to show that it is managed by the NSX-T instance.

NSX-T Edge Cluster Deployment

You can add multiple NSX-T Edge clusters to workload domains for scalability and resiliency. However, multiple Edge clusters cannot reside on the same vSphere cluster.

NSX-T Data Center supports a maximum of 16 Edge clusters per NSX Manager cluster and 8 Edge clusters per vSphere cluster.

The north-south routing and network services provided by an NSX-T Edge cluster created for a workload domain are shared with all other workload domains that use the same NSX Manager cluster.

For more information, please review VCF documentation https://docs.vmware.com/en/VMware-Cloud-Foundation/4.1/com.vmware.vcf.admin.doc_41/GUID-8FA66DA3-3166-426B-84A8-C45FA7651658.html

In this POC guide, an additional workload domain has already been deployed.

The purpose of this section is to walk through the configuration, understand the network requirements, and finally check and validate that the Edge nodes were deployed successfully.

Prerequisites

The prerequisites listed below are taken from the product documentation.

Note: Please refer to official documentation for detailed steps on https://docs.vmware.com/en/VMware-Cloud-Foundation/4.1/com.vmware.vcf.admin.doc_41/GUID-D17D0274-7764-43BD-8252-D9333CA7415A.html. Official documentation should supersede if it differs from guidance documented here.

Below is a guided deployment with screenshots to augment the deployment.

- Separate VLANs and subnets are available for NSX-T Host Overlay (Host TEP) VLAN and NSX-T Edge Overlay (Edge TEP) VLAN. A DHCP server must be configured on the NSX-T Host Overlay (Host TEP) VLAN.

- You cannot use DHCP for the NSX-T Edge Overlay (Edge TEP) VLAN.

- NSX-T Host Overlay (Host TEP) VLAN and NSX-T Edge Overlay (Edge TEP) VLAN are routed to each other.

- For dynamic routing, set up two Border Gateway Protocol (BGP) Peers on Top of Rack (ToR) switches with an interface IP, BGP autonomous system number (ASN), and BGP password.

- Reserve a BGP ASN to use for the NSX-T Edge cluster’s Tier-0 gateway.

- DNS entries for the NSX-T Edge nodes are populated in the customer-managed DNS server.

- The vSphere cluster hosting an NSX-T Edge cluster must include hosts with identical management, uplink, host TEP, and Edge TEP networks (L2 uniform).

- You cannot deploy an Edge cluster on a vSphere cluster that is stretched. You can stretch an L2 uniform vSphere cluster that hosts an Edge cluster.

- The management network and management network gateway for the Edge nodes must be reachable.

- Workload Management supports one Tier-0 gateway per transport zone. When creating an Edge cluster for Workload Management, ensure that its overlay transport zone does not have other Edge clusters (with Tier-0 gateways) connected to it.

Deployment Planning

As a proof of concept, we will deploy a new Edge Cluster to an already deployed workload domain called wld01.

- We will deploy a small Edge Node Form Factor: 4 GB memory, 2 vCPU, 200 GB disk space. The NSX Edge Small VM appliance size is suitable for lab and proof-of-concept deployments.

Note: You cannot change the size of the edge form factor after deployment. Only use SMALL for labs or proofs of concept. For Tanzu (Kubernetes), please use LARGE.

- We will deploy two Edges in Active-Active High Availability mode. In active-active mode, traffic is load balanced across all members; by contrast, in active-standby mode all traffic is processed by an elected active member and, if that member fails, another member is elected to be active.

We have gathered the following details prior to deployment

- ASN: 65004 for Tier-0 BGP

- ToR Switch IP addresses and subnets

- NSX-T Overlay VLAN 1252 (routable to host overlay)

- Static IP addresses for Edge Overlay VLAN 1252

- Edge Uplinks VLAN 2081 and 2082 (for connectivity to Top of Rack Switch)

- Static IP addresses for VLAN 2081 and 2082
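Before starting the wizard, the gathered values can be sanity-checked in a few lines. The sketch below is an illustration only: the `EdgeClusterPlan` class and its field names are hypothetical, and the private-ASN check reflects a lab assumption (the 16-bit private range 64512 to 65534) rather than a VCF requirement.

```python
from dataclasses import dataclass

# 16-bit private-use ASN range: 64512 to 65534 inclusive.
PRIVATE_ASN_16BIT = range(64512, 65535)


@dataclass(frozen=True)
class EdgeClusterPlan:
    """Hypothetical container for the values gathered before deployment."""
    tier0_asn: int
    edge_overlay_vlan: int
    uplink_vlans: tuple

    def problems(self):
        """Return a list of human-readable issues; empty when the plan looks sane."""
        issues = []
        if self.tier0_asn not in PRIVATE_ASN_16BIT:
            issues.append(f"ASN {self.tier0_asn} is outside the private 16-bit range")
        for vlan in (self.edge_overlay_vlan, *self.uplink_vlans):
            if not 1 <= vlan <= 4094:
                issues.append(f"VLAN {vlan} is not a valid VLAN ID")
        if len(set(self.uplink_vlans)) != len(self.uplink_vlans):
            issues.append("uplink VLANs must be distinct")
        if self.edge_overlay_vlan in self.uplink_vlans:
            issues.append("Edge overlay VLAN must differ from the uplink VLANs")
        return issues


if __name__ == "__main__":
    plan = EdgeClusterPlan(tier0_asn=65004, edge_overlay_vlan=1252, uplink_vlans=(2081, 2082))
    print(plan.problems() or "plan looks consistent")
```

The values in the usage example are the ones listed above for this POC.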

NSX-T Edge deployment Step by Step Procedure

From SDDC Manager, click Workload Domains, select a workload domain, then click Actions and choose Add Edge Cluster.

Click Begin to walk through the wizard.

To understand the process, review the Add NSX-T Edge Cluster walk-through demo at https://core.vmware.com/?share=isim_demo2119

Validation of NSX-T Edge Cluster

From SDDC Manager UI the new edge cluster is listed on the Workload Domain summary

Validation is also covered in the Add NSX-T Edge Cluster walk-through demo at https://core.vmware.com/?share=isim_demo2119. Once the demo is started, navigate to step 47.

From the SDDC Manager shortcut, launch the NSX-T web interface.

Click System > Fabric > Nodes > Edge Transport Nodes to see the edge node details. We can see edge01-wld01.vcf.sddc.lab and edge02-wld01.vcf.sddc.lab deployed.

From the top menu, click Networking to view the Tier-0 and Tier-1 dashboards.

The dashboard shows the Tier-0 gateway, which is responsible for North-South routing. We can see BGP is enabled and a peer is configured. The Tier-1 gateway is used for East-West traffic.

To view the topology layout between Tier-1, Tier-0 and the outside physical infrastructure, select Network Topology.

We can see that 192.168.17.4/24, 192.168.16.4/24, 192.168.17.5/24 and 192.168.16.5/24 are the IP addresses on the edges that are peered to the top of rack AS 65001.

To verify BGP connectivity and peering status to the top of rack switches

- Navigate back to Network Overview, select Tier-0 Gateways, select the Tier-0 Edge, edge01-t0, to expand on the details

- Click on BGP to expand

- Click on 2 for BGP neighbor details (as we have two neighbors configured)

We can see the status of both Top of Rack BGP Peers. Status of Success indicates peering has been successfully established.
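If the neighbor details are exported or collected through an API rather than read in the UI, the same check can be scripted. The sketch below only processes data that has already been retrieved. The record shape (`neighbor_address`, `connection_state`) and the `ESTABLISHED` value are assumptions about how such a status payload might look, and the peer addresses are hypothetical, so adapt the keys to whatever the NSX-T instance actually returns.

```python
def neighbors_not_established(statuses):
    """Return the addresses of BGP neighbors whose session is not established.

    `statuses` is a list of dicts with assumed keys `neighbor_address` and
    `connection_state`; this is a hypothetical shape for illustration.
    """
    return [
        entry.get("neighbor_address", "unknown")
        for entry in statuses
        if str(entry.get("connection_state", "")).upper() != "ESTABLISHED"
    ]


if __name__ == "__main__":
    sample = [  # hypothetical top-of-rack peers
        {"neighbor_address": "192.168.16.1", "connection_state": "ESTABLISHED"},
        {"neighbor_address": "192.168.17.1", "connection_state": "IDLE"},
    ]
    down = neighbors_not_established(sample)
    print("all peers established" if not down else f"check peers: {down}")
```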

vSphere

The NSX-T Edge Cluster will be deployed on the associated workload domain. An Edge Cluster resource pool is created, and the edges are deployed onto the workload domain cluster, in this case wld01.

Note: the vCenter and NSX-T unified controllers are deployed on the Management Domain vSphere Cluster

To view the edges from a vSphere perspective, login to the vSphere Client, navigate from host and clusters to the vCenter instance associated with the workload domain, expand the cluster and resource pool to inspect the NSX-T Edges.

vSphere Networking and vDS details

Two additional vDS port groups are created on the workload domain vDS.

From the vSphere Web Client, navigate to vSphere Networking, the workload domain vCenter, and the associated vDS to inspect the edge port groups.

Edge vNICs

Each Edge will have a similar VM networking configuration

- Network Adapter 1 is for management network connectivity (MGMT VLAN 0)

- Network Adapter 2 is associated with the first Edge Uplink (VLAN 2081)

- Network Adapter 3 is associated with the second Edge Uplink (VLAN 2082)

This configuration can be explored on the summary of the Edge Virtual Machine appliance.

Reusing an existing NSX-T manager for a new workload domain

If you already have an NSX Manager cluster for a different VI workload domain, you can reuse that NSX Manager cluster.

In order to share an NSX Manager cluster, the workload domains must use the same update manager mechanism. The workload domains must both use vSphere Lifecycle Manager (vLCM), or they must both use vSphere Update Manager (VUM).

Note: Do not share an NSX Manager cluster between workload domains catering to different use cases that would require different NSX-T Edge cluster specifications and configurations.

Please review the click-through demo that complements this guide, Add Workload Domain with NSX Manager Reuse https://core.vmware.com/?share=isim_demo2181. The demo first reviews an existing workload domain and then walks through deploying a new workload domain. Here we go through only the main parts of the demo.

From SDDC Manager, start the deployment wizard for a new VI (Virtual Infrastructure) workload domain.

Once new workload domain wizard is launched, add the entries for the workload domain name and new vCenter instance

Instead of deploying a brand new NSX-T Instance, we will Re-use the NSX-T Instance associated with an existing workload domain, in our case wld01

The VLAN ID for the pre-existing NSX-T host overlay needs to be validated

All NSX-T entries are greyed out as we are using the NSX-T instance associated with wld01 which SDDC Manager is already aware of

The following resources will be prefixed with the workload domain name wld01:

- vSphere Distributed Switch

- Resource Pool

- Distributed port-group vSAN

- Distributed port-group vMotion

- vSAN Datastore

Once the Workload domain has been deployed it will simply appear as a new workload domain on SDDC Manager but associated with the NSX-T instance belonging to wld01.

From a vSphere perspective, a new vCenter Server is deployed, a new datacenter and cluster object is created, and hosts added and configured

We can also observe the vCenter server appliance vcenter-wld02.vcf.sddc.lab is hosted on the management workload domain with no further additional NSX-T instances.

vSphere Networking comprises a vDS and three port groups for management, vSAN and vMotion.

NSX-T

The vCenter Server is registered as an additional compute manager to the existing NSX-T instance (as specified on the new workload domain wizard)

The vSphere hosts are configured as Host Transport nodes associated with that vCenter

However, they are added to the same transport zone as the transport Nodes in the first workload domain, wld01, i.e., overlay-tx-nsx-wld01.vcf.sddc.lab

Section 3 vRealize Suite Deployment

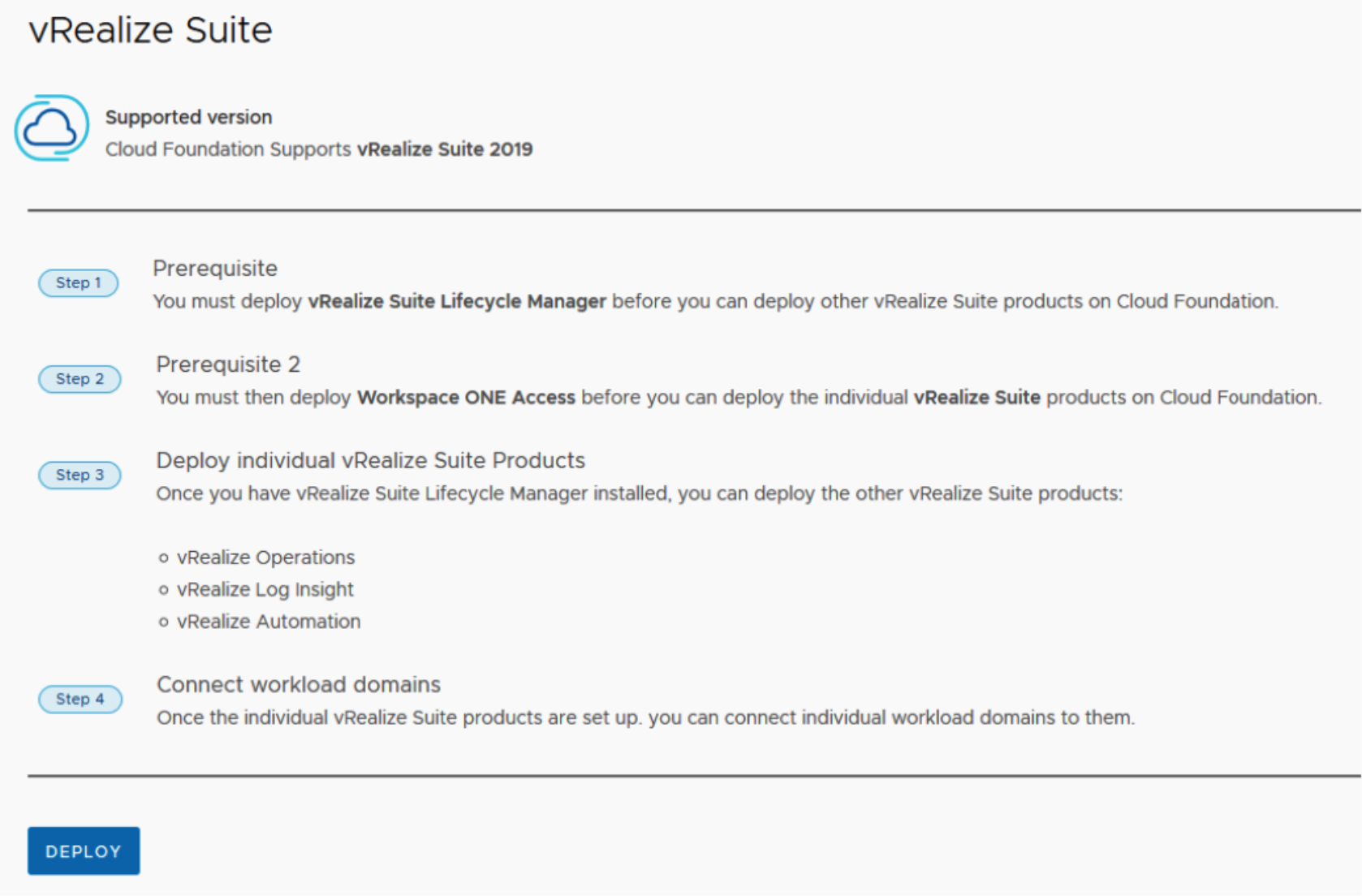

VCF 4.1 now supports vRealize Suite 2019

VMware Cloud Foundation 4.1 introduces an improved integration with vRealize Suite Lifecycle Manager. When vRealize Suite Lifecycle Manager in VMware Cloud Foundation mode is enabled, the behavior of vRealize Suite Lifecycle Manager is aligned with the VMware Cloud Foundation architecture.

Note: Please refer to official documentation for detailed steps on https://docs.vmware.com/en/VMware-Cloud-Foundation/4.1/com.vmware.vcf.admin.doc_41/GUID-8AD0C619-6DD9-496C-ACEC-95D022AE6012.html. Official documentation should supersede if it differs from guidance documented here.

Below is a guided deployment with screenshots to augment the deployment.

Prerequisites

- You must deploy vRealize Suite Lifecycle Manager before you can deploy other vRealize Suite products on Cloud Foundation

- You must then deploy Workspace ONE Access before you can deploy the individual vRealize Suite products on Cloud Foundation.

Once you have vRealize Suite Lifecycle Manager installed, you can deploy vRealize Suite products such as

- vRealize Operations

- vRealize Log Insight

- vRealize Automation

Once deployed, you can connect individual workload domains to them.

For the purposes of this POC Guide we will cover

- vRealize Suite Lifecycle Manager

- Workspace ONE Access

- vRealize Operations

- vRealize Log Insight

Deploying vRealize Suite Life Cycle Manager

vRealize Suite Lifecycle Manager introduces a functionality where you can enable VMware Cloud Foundation mode in vRealize Suite Lifecycle Manager 8.1.

Any operation triggered through vRealize Suite Lifecycle Manager UI is aligned with the VMware Cloud Foundation architecture design.

When a VMware Cloud Foundation admin logs in to vRealize Suite Lifecycle Manager, they can perform regular operations like any vRealize Suite Lifecycle Manager user. The VMware Cloud Foundation user can view applications such as User Management, Lifecycle Operations, Locker, Marketplace, and Identity and Tenant Management, but with some limitations.

You can perform the same set of operations with limited access to the latest version of the vRealize Suite products. To perform a regular operation, you have to specify the license and certificate settings using the Locker in vRealize Suite Lifecycle Manager UI.

The following vRealize Suite Lifecycle Manager features are used by VMware Cloud Foundation:

- Binary mapping. vRealize Suite Lifecycle Manager in VMware Cloud Foundation mode has a sync binary feature that polls the binaries from the VMware Cloud Foundation repository and maps the source automatically in vRealize Suite Lifecycle Manager.

- Cluster deployment for a new environment. You can deploy vRealize Automation, vRealize Operations Manager, and vRealize Log Insight in clusters, whereas VMware Identity Manager can be deployed either as a cluster or as a single node that is later expanded to a cluster.

- Product Versions. You can only access the versions for the selected vRealize products that are specifically supported by VMware Cloud Foundation itself.

- Resource Pool and Advanced Properties. The resources in the Resource Pools under the Infrastructure Details are blocked by the vRealize Suite Lifecycle Manager UI, so that the VMware Cloud Foundation topology does not change. Similarly, the Advanced Properties are also blocked for all products except for Remote Collectors. vRealize Suite Lifecycle Manager also auto-populates infrastructure and network properties by calling VMware Cloud Foundation deployment API.

vRSLCM Prerequisites

To deploy vRSLCM you will need

- vRSLCM downloaded via SDDC Manager.

- AVN networks, with routing between the AVNs and the management networks functioning correctly.

- IP address and DNS record for vRealize Suite Life Cycle Manager.

- Free IP address in AVN Segment for Tier 1 gateway.

- DNS and NTP services available from AVN Segments.


Step by Step Deployment

From SDDC Manager, select vRealize Suite and click Deploy.

AVN Network Segment, Subnet, gateway, DNS and NTP settings should be prepopulated by SDDC Manager.

Click Next

For the NSX-T Tier 1 Gateway, enter a free IP address on the AVN segment. It must not be in use by anything else on the AVN segment.

The default System Administrator user ID is vcfadmin@local

vRSLCM Deployment task can be monitored via SDDC Manager Tasks

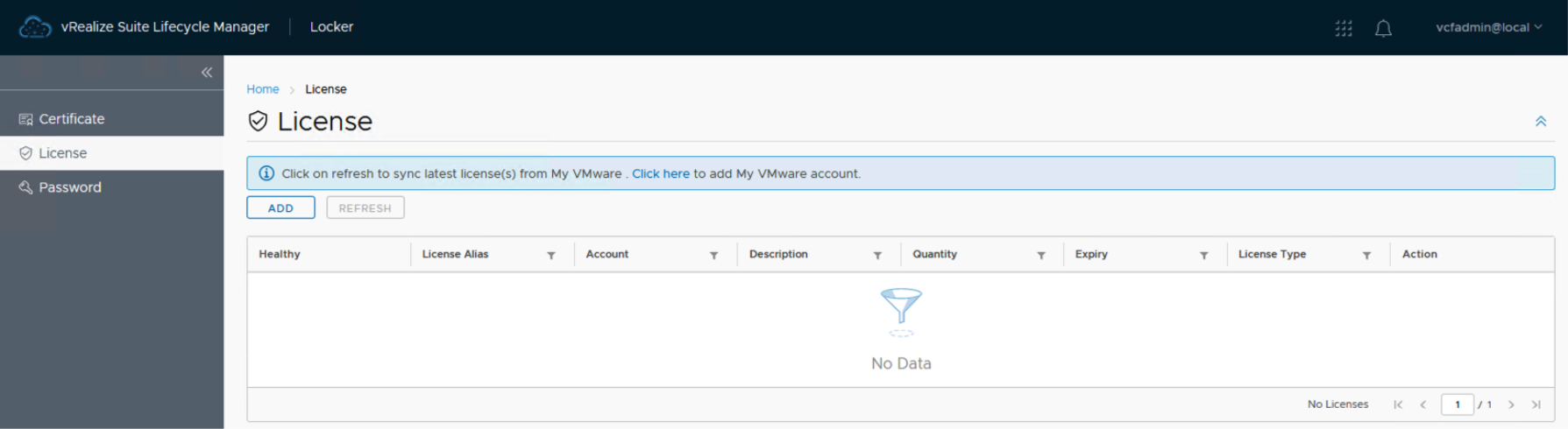

Once vRSLCM is deployed successfully the next step is to license vRealize Suite

Add vRealize Suite License key.

Add license key to vRSLCM

- Log in to vRSLCM with vcfadmin@local (you may have to change the password on initial login)

- Navigate to Locker and select License.

- Select Add License and validate. Once validated, select Add.

Deploying VMware Identity Manager

You must deploy Workspace ONE Access via vRealize Suite Lifecycle Manager.

Requirements are

- Workspace ONE Access software bundle is downloaded under Bundle Management on SDDC Manager

- vRealize Suite License key

- Five static IP addresses with FQDNs (forward and reverse lookup)

- CA signed certificate or self-signed certificate

- vcfadmin@local password


In this POC scenario we will deploy a clustered Workspace ONE Access instance, so we will require an IP address for the Cluster VIP, an IP address for the database, three IP addresses (one for each cluster member), and a certificate that includes the FQDNs and IP addresses of each member, for example:

| IP (AVN Segment) | FQDN | Purpose |
| --- | --- | --- |
| 192.168.11.13 | m01wsoa.vcf.sddc.lab | Cluster VIP |
| 192.168.11.14 | m01wsoa1.vcf.sddc.lab | Cluster Node 1 |
| 192.168.11.15 | m01wsoa2.vcf.sddc.lab | Cluster Node 2 |
| 192.168.11.16 | m01wsoa3.vcf.sddc.lab | Cluster Node 3 |
| 192.168.11.17 | n/a | Database IP |
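An address plan like the one in the table above can be checked for duplicates and for addresses that fall outside the AVN segment before it is typed into the wizard. The sketch below uses the standard `ipaddress` module. The `/24` prefix length is an assumption, since the guide lists the addresses but not the subnet mask, and the function name is illustrative.

```python
import ipaddress


def check_ip_plan(plan, subnet):
    """Return a list of issues for a {purpose: ip} plan within the given subnet."""
    network = ipaddress.ip_network(subnet)
    issues = []
    seen = set()
    for purpose, ip in plan.items():
        address = ipaddress.ip_address(ip)
        if address not in network:
            issues.append(f"{purpose}: {ip} is outside {network}")
        if address in seen:
            issues.append(f"{purpose}: {ip} is used more than once")
        seen.add(address)
    return issues


if __name__ == "__main__":
    wsa_plan = {
        "Cluster VIP": "192.168.11.13",
        "Cluster Node 1": "192.168.11.14",
        "Cluster Node 2": "192.168.11.15",
        "Cluster Node 3": "192.168.11.16",
        "Database IP": "192.168.11.17",
    }
    # Assumed AVN subnet; replace with the real segment definition.
    print(check_ip_plan(wsa_plan, "192.168.11.0/24") or "plan looks consistent")
```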

Ensure binaries are downloaded via SDDC Manager. vRSLCM should map product binaries to the SDDC Manager repository as part of deployment.

To verify, in the navigation pane, select Lifecycle management > Bundle management.

Click the Bundles tab, locate the Workspace ONE Access install bundle. Click Download bundle if not present

Login to vRSLCM with vcfadmin@local

Navigate to Lifecycle Operations > Settings > Binary Mappings

Ensure Workspace ONE OVA is present. You may have to synch binaries from SDDC Manager if OVA is not present

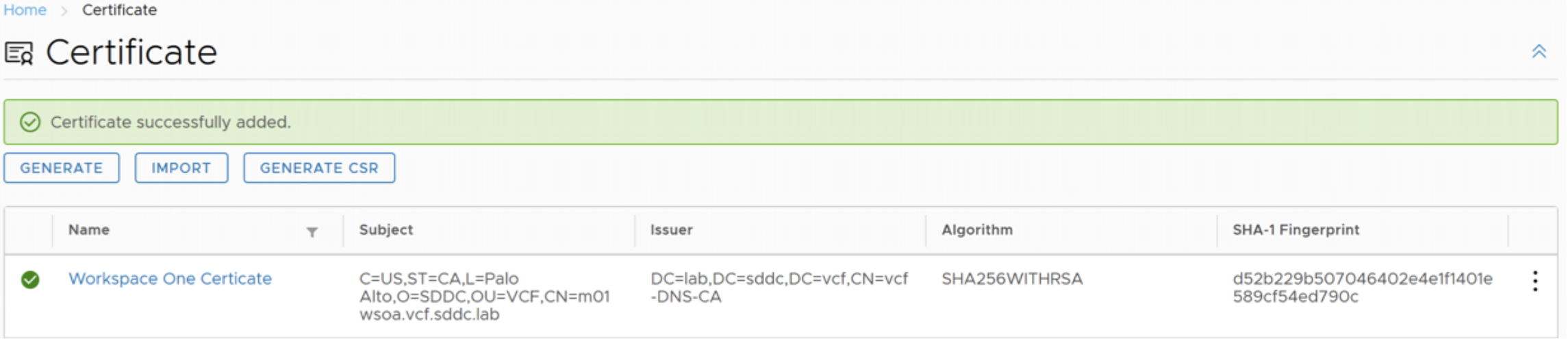

Add Workspace ONE Certificate

You can generate a self-signed certificate or Generate CSR for an external CA

In this scenario we are going to generate a CSR to create a CA signed certificate.

Login to vRSLCM with vcfadmin@local

Navigate to Locker, Select Certificate and Generate.

Add a Name and a CN (this is the Workspace ONE cluster IP)

If Workspace ONE is going to be deployed in cluster mode add Cluster IP, and cluster members (FQDN) and IP addresses for each member.

It is also possible to generate a certificate signing request to submit to an external CA. Click Generate CSR.

You will be prompted to download and save the .pem file which includes private key and signing request once all fields have been filled out

Save the file, so it can be retrieved later

The CSR can be signed by an appropriate CA. If using an internal Microsoft CA, paste the CSR PEM file contents to the CA to generate a new certificate request.

Download Certificate and Certificate chain and use Base 64 Encoding

Once Certificate chain is generated and downloaded (Base 64 encoded), return to vRSLCM > Lifecycle Operations > Locker > Certificate and Click Import

To import the generated certificate, provide a Name (e.g., Workspace ONE Certificate), paste the Private Key from the CSR request, and paste the certificate chain generated earlier in the procedure.

Note: The Private Key and Certificate can be combined into a single file to simplify the process.

Add the Private Key first then append the certificate chain to a file
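Combining the two pieces in the order described in the note can be scripted. The following is a minimal sketch that concatenates PEM text, private key first and certificate chain second. It does not validate the cryptographic content, and the file names in the usage example are hypothetical.

```python
def combine_pem(private_key_pem, cert_chain_pem):
    """Return one PEM string: the private key first, then the certificate chain."""
    key = private_key_pem.strip()
    chain = cert_chain_pem.strip()
    if "PRIVATE KEY-----" not in key:
        raise ValueError("first argument does not look like a PEM private key")
    if "-----BEGIN CERTIFICATE-----" not in chain:
        raise ValueError("second argument does not look like a PEM certificate chain")
    return key + "\n" + chain + "\n"


if __name__ == "__main__":
    # Hypothetical file names; use the files saved earlier in the procedure.
    with open("wsa-private-key.pem") as key_file, open("wsa-chain.pem") as chain_file:
        combined = combine_pem(key_file.read(), chain_file.read())
    with open("wsa-combined.pem", "w") as out_file:
        out_file.write(combined)
```

Keep the combined file protected in the same way as the private key itself, since it contains the key in clear text.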

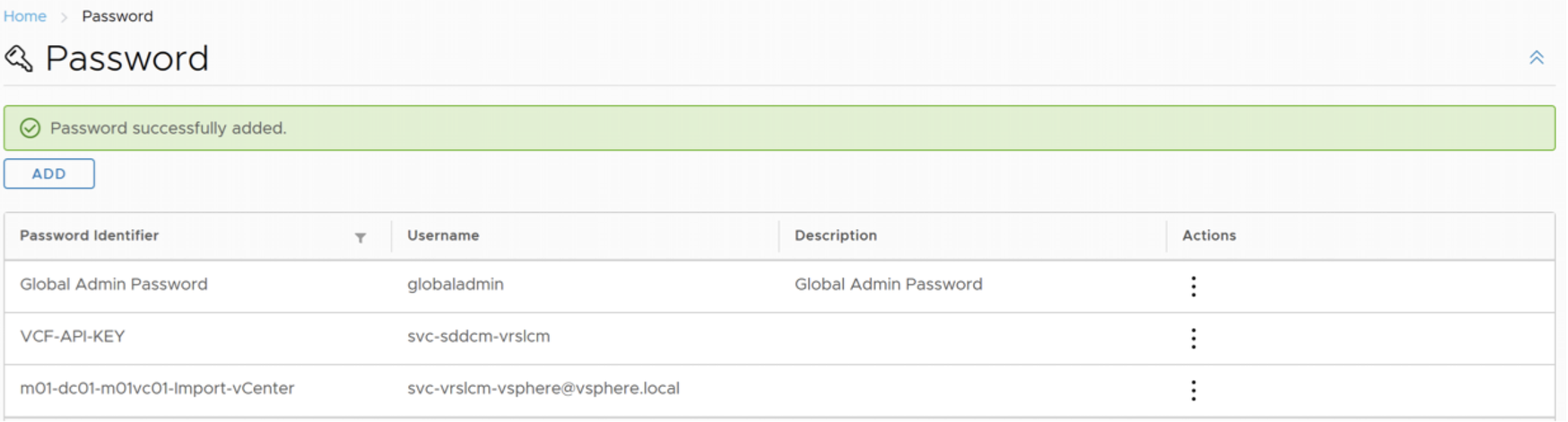

vRealize Suite Lifecycle Manager 8.x stores all the passwords that are used across vRealize Suite Lifecycle Manager in the Locker. You can configure a password at the Locker level and retrieve it from the UI.

Login to vRSLCM with vcfadmin@local

Navigate to Locker, and Select password, and select Add

The default password must be a minimum of eight characters.

Add the details for the Password alias, the password itself, the Description, and the Username.

Install Identity Manager

Login to vRSLCM with vcfadmin@local

Navigate to Lifecycle Operations, Create Environment. Use the global environment, as it is already populated with the vCenter details.

Add Administrator email and Default Password.

Note: if Password is not already configured in Locker, a new password can be created by clicking on the “Plus” Sign to add

Select Datacenter, which should already be populated from the Management workload domain from drop down list

Opt in or out of VMware Customer Experience Improvement program and click Next

Select VMware Identity Manager. In this scenario we are selecting clustered mode, which means we will need five IP addresses in the AVN network segment; ensure the corresponding FQDNs are created. Click Next to continue.

Accept the EULA, click Next.

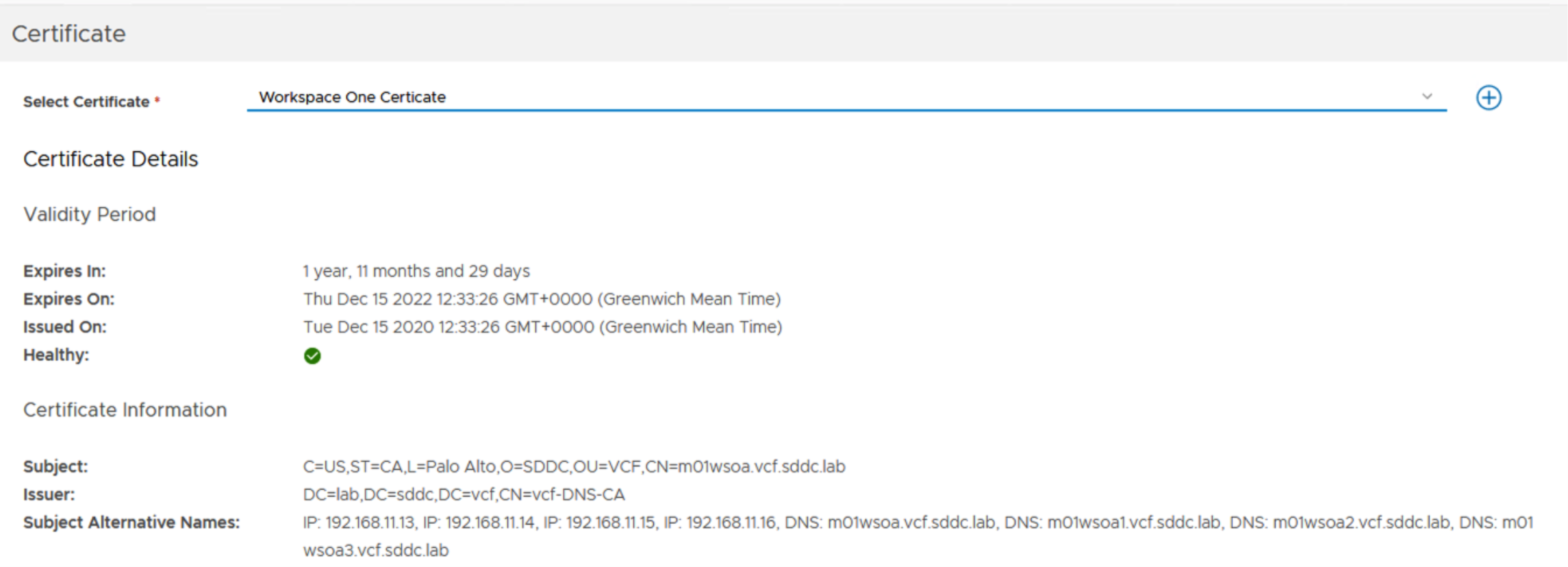

Select the Certificate that was created earlier (or create a new certificate).

Infrastructure details should already be populated from SDDC Manager. Since we are deploying to vSAN, choose Thin mode, which means the appliances will be deployed thinly provisioned using the default vSAN Storage Policy, then click Next.

Network Details should also be pre-populated from AVN details, click Next

VMware Identity Manager Details must be entered for certificate, password, Cluster IP, Database IP, and Cluster members.

Below are screenshots for each screen to illustrate the number of inputs.

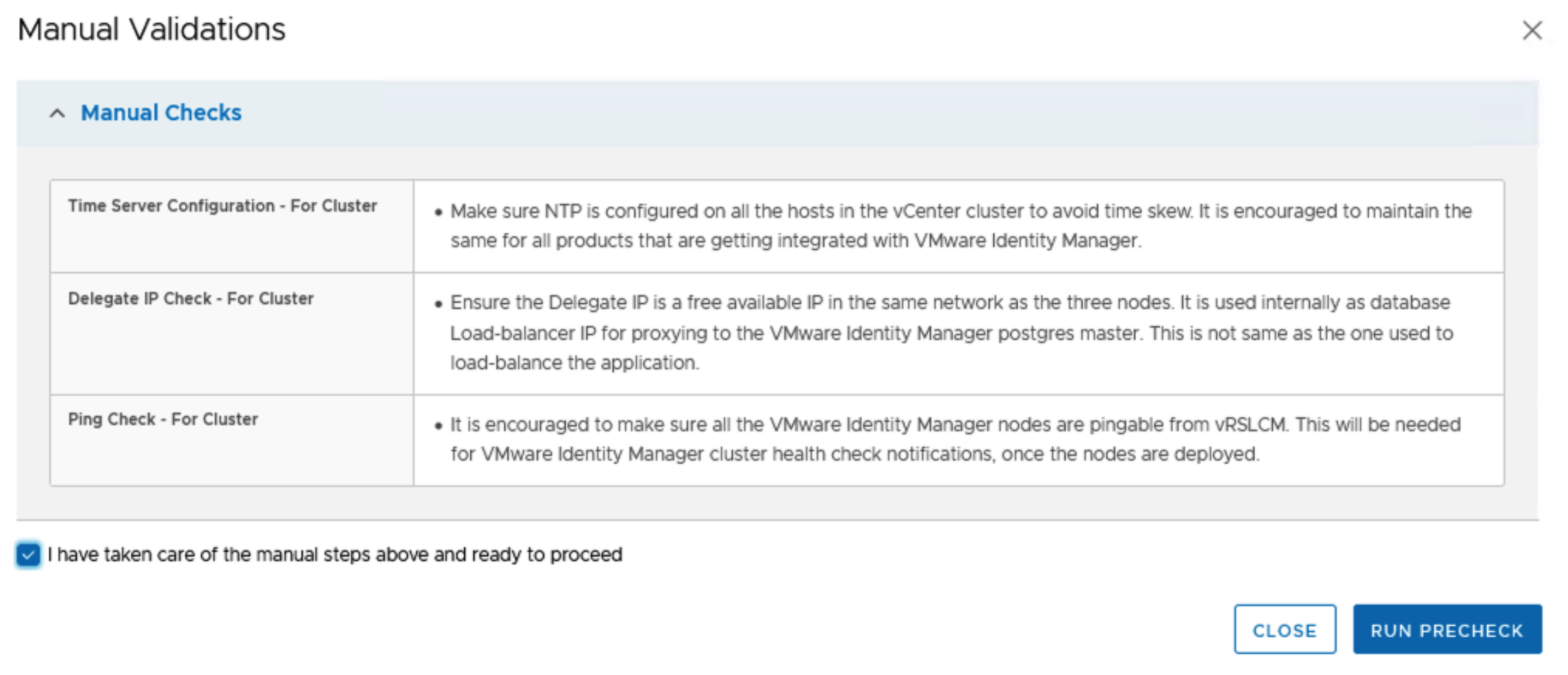

Run Pre-check before deployment to validate inputs and infra.

Ensure all pre-checks pass validation

The pre-check report can be downloaded in PDF format or pre-check can be re-run.

Click Next.

At this point you are ready to submit. A JSON file can be downloaded to deploy programmatically, the pre-check can be re-run, or progress can be saved to submit later. Review the settings and click Submit.

Deployment progress can be monitored from vRSLCM, SDDC manager and vCenter

SDDC Manager

An NSX-T load balancer will be deployed from SDDC Manager as part of the deployment. This can be monitored in SDDC Manager tasks.

vCenter

Workspace ONE OVAs will be deployed on the management cluster.

Once Deployed Successfully the task should be marked as complete from vRSLCM > Life Cycle Operations > Requests.

Verify Workspace ONE Identity Manager

Navigate to vRSLCM > Lifecycle Operations > Environments

Click on global environment and View details

Trigger an inventory sync from vRSLCM to VIDM and to SDDC Manager.

This task can be monitored from Requests on SDDC Manager.

From the SDDC Manager Dashboard, navigate to vRealize Suite. Details of vRSLCM and Workspace ONE Access are registered.

Connect to Workspace ONE Access and log in using the credentials specified during install.

Deploying vRealize Operations

With vRSLCM and Workspace ONE deployed, you are now able to deploy vROPS.

In this POC scenario we will deploy a three-node vROPS cluster (Master, Replica, and Data node).

Note: Please refer to official documentation for detailed steps. Official documentation should supersede if it differs from guidance documented here.

vROPS Requirements

- vRealize Operations Manager binaries

- vRealize Operations Manager bundle synched to vRSLCM product binaries

- at least 4 IP addresses for vROPS cluster IP, Master, Replica and data node.

- Appropriate vRealize License Key

- Certificate (self-signed or signed by CA)

- Password setup

For example, we need the following IP addresses with FQDNs (forward and reverse lookups):

| IP (AVN Segment) | FQDN | Purpose |
| --- | --- | --- |
| 192.168.11.18 | m01vrops.vcf.sddc.lab | Cluster VIP |
| 192.168.11.19 | m01vropsmaster.vcf.sddc.lab | Master vROPS Node |
| 192.168.11.20 | m01vropsreplica.vcf.sddc.lab | vROPS Replica Node |
| 192.168.11.21 | m01vropsdata1.vcf.sddc.lab | Data Node |

vROPS Bundle Mapping

Verify VROPS 8.1.1 bundle has been downloaded on SDDC manager

If product binaries are not displayed on vRSLCM, a manual sync may be necessary.

Connect to vRSLCM and login with vcfadmin@local

Navigate to Lifecycle Operations > Settings > Binary Mappings

Similar to Workspace One, we may need to create a default password credential and Certificate for the vROPS Cluster

vROPS Default Password

From vRSLCM, Navigate to Locker > Password. Click Add

Below is a sample value for vROPS Passwords

| Setting | Value |
| --- | --- |
| Password Alias | vrops-root |
| Password | vrops-root-password |
| Confirm Password | vrops-root-password |
| Password Description | vROPS Root user |
| Username | root |

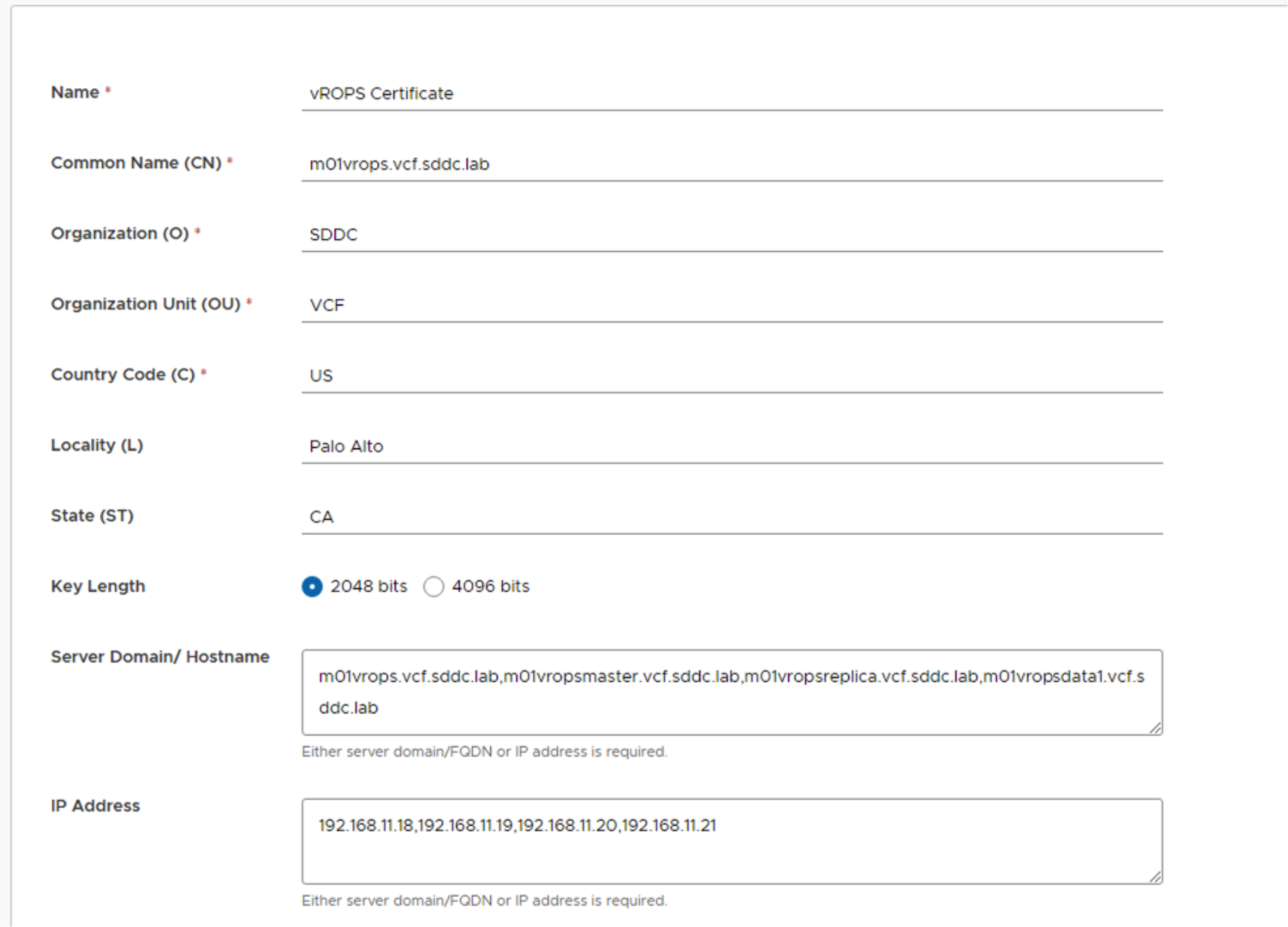

vROPs Certificate

Again, as with Workspace ONE, we can generate a self-signed certificate or a CA-signed certificate.

From vRSLCM, Navigate to Locker > Certificate > Generate for self-signed or Generate CSR for external CA

In our case as we already have an external CA, we will generate a CSR

Ensure the CN matches the cluster VIP FQDN, and add the master, replica, and data nodes in the hostname and IP fields.

Here is a worked example

Click Generate if generating self-signed or Generate CSR

In this example we are generating a CSR.

Once the CSR is generated, sign with external CA and import certificate
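
The CN and hostname/IP guidance above can be prepared ahead of time. The following is a minimal sketch that assembles the values to enter in the CSR form from the sample vROPS addresses. The function name `csr_fields`, the dictionary keys, and the comma-separated layout are illustrative assumptions, not a vRSLCM API or a documented input format. Repeating the cluster VIP in the hostname and IP lists is also an assumption.

```python
from typing import Dict


def csr_fields(vip_fqdn: str, vip_ip: str, nodes: Dict[str, str]) -> Dict[str, str]:
    """Build the values to enter in the CSR form.

    nodes maps each node FQDN to its IP address, in the order to be listed.
    """
    # Assumption: the VIP is repeated in the hostname and IP lists, since many
    # clients validate against subject alternative names rather than the CN.
    hostnames = [vip_fqdn, *nodes.keys()]
    addresses = [vip_ip, *nodes.values()]
    return {
        "common_name": vip_fqdn,  # CN matches the cluster VIP FQDN
        "hostnames": ",".join(hostnames),
        "ip_addresses": ",".join(addresses),
    }


# Sample lab values from the vROPS address table.
fields = csr_fields(
    "m01vrops.vcf.sddc.lab",
    "192.168.11.18",
    {
        "m01vropsmaster.vcf.sddc.lab": "192.168.11.19",
        "m01vropsreplica.vcf.sddc.lab": "192.168.11.20",
        "m01vropsdata1.vcf.sddc.lab": "192.168.11.21",
    },
)
for name, value in fields.items():
    print(f"{name}: {value}")
```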

Create Environment

We are going to set up a new environment for vROPS. This is in addition to the “globalenvironment” already created.

On vRSLCM dashboard click Lifecycle operations > Create Environment

In this case we will call the environment VCF-POC with default password of vrops-root we created earlier

The datacenter will be from the management workload domain

Select vROPS, New install with size of medium, and 3 nodes

For Product details, enter the following settings, as per VVD guidance:

| Setting | Value |
| --- | --- |
| Disable TLS version | TLSv1, TLSv1.1 |
| Certificate | vROPS Certificate |
| Anti-affinity / affinity Rule | Enabled |
| Product Password | vrops-root |
| Integrate with Identity Manager | Selected |

Select and Validate your license

Select the vROPS Certificate created earlier


vCenter infrastructure details are pre-filled and displayed for acknowledgement. Set Disk Mode to Thin and click Next.

As with Workspace One, networking details are pulled from SDDC Manager to reflect AVN networks, Click Next

For Cluster VIP add vROPS cluster FQDN

For Master Node component add FQDN (m01vropsmaster.vcf.sddc.lab) and IP address details.

The VM name can be changed to match particular naming conventions.

Click on advanced settings (highlighted) to review NTP and time zone Settings

For Replica Node component add Replica FQDN (m01vropsreplica.vcf.sddc.lab) and IP details

Click on advanced configuration Icon to add timezone details

For Data Node component add Data Node FQDN (m01vropsdata1.vcf.sddc.lab) and IP details

Click on advanced configuration Icon to add or check time zone details.

Click Next to continue, then click RUN PRECHECK

Address any errors on Precheck and ensure all validations succeed

Review Summary and submit vROPS Deployment

Progress can be tracked from Lifecycle Operations > Requests

Progress can also be tracked from SDDC Manager Tasks

As part of the deployment, vROPS is automatically configured to begin monitoring the VCF management domain, which includes vCenter, vSAN and Workspace One.

Once deployed, the environment can be viewed from Lifecycle Operations > Environments

Progress

Click on view details to see the details

Clicking on TRIGGER INVENTORY SYNC will rediscover inventory of VCF management Domain.

Deploying vRealize Log Insight

Similar to vROPS, we can now deploy vRealize Log Insight in a new environment on vRSLCM

In this POC scenario we will deploy a 3 node vRealize Log Insight (vRLI) Cluster (one vRLI Master and two worker nodes)

Note: Please refer to official documentation for detailed steps. Official documentation should supersede if it differs from guidance documented here.

vRealize Log Insight Requirements

- vRealize Log Insight Binaries downloaded on SDDC Manager

- vRealize Log Insight bundle synched to vRSLCM product binaries

- At least 4 IP addresses: vRLI cluster IP, Master, and two worker nodes

- Appropriate vRealize License Key

- Certificate (self-signed or signed by CA) added to vRSLCM Locker

- Password added to vRSLCM locker

For example, the vRLI cluster needs the following IP addresses with FQDNs (forward and reverse lookups):

| IP (AVN Segment) | FQDN | Purpose |
| --- | --- | --- |
| 192.168.11.22 | m01vrli.vcf.sddc.lab | vRLI Cluster IP |
| 192.168.11.23 | m01vrlimstr.vcf.sddc.lab | vRLI Master Node |
| 192.168.11.24 | m01vrliwrkr01.vcf.sddc.lab | Worker Node 1 |
| 192.168.11.25 | m01vrliwrkr02.vcf.sddc.lab | Worker Node 2 |

vRealize Log Insight Bundle Download

Ensure install bundle for vRealize Log Insight 8.1.1 is downloaded on SDDC Manager and binaries are synched to vRSLCM

From vRealize Suite Lifecycle Manager, navigate to Lifecycle Operations > Settings > Binary Mappings

Ensure binaries are synched once vRealize Log Insight 8.1.1 has been downloaded to SDDC manager

vRealize Log Insight Default Password.

From vRSLCM, Navigate to Locker > Password. Click Add

| Setting | Value |
| --- | --- |
| Password Alias | vrli-admin |
| Password | vrli-admin-password |
| Confirm Password | vrli-admin-password |
| Password Description | Log Insight admin password |
| Username | admin |

vRealize Log Insight Certificate

Again, as per Workspace One and vROPS we can generate a self-signed certificate or a CA signed certificate

Since this is a cluster, we need a certificate for the following hostnames.

This IP range is based on the “Region A – Logical Segment” as part of VCF bring up using AVNs.

| IP (AVN Segment) | FQDN |
| --- | --- |
| 192.168.10.22 | m01vrli.vcf.sddc.lab |
| 192.168.10.23 | m01vrlimstr.vcf.sddc.lab |
| 192.168.10.24 | m01vrliwrkr01.vcf.sddc.lab |
| 192.168.10.25 | m01vrliwrkr02.vcf.sddc.lab |

This maps to Segment in NSX-T Logical Networks for the management domain
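
Because the certificate addresses must fall inside the AVN segment, it can help to confirm this before generating the certificate. The following is a minimal sketch using Python's standard `ipaddress` module. The function name `outside_segment` is hypothetical, and the segment CIDR `192.168.10.0/24` is an assumption for illustration; use the subnet actually configured for the segment in NSX-T.

```python
import ipaddress
from typing import Dict, List

# Addresses from the certificate table above.
VRLI_RECORDS: Dict[str, str] = {
    "m01vrli.vcf.sddc.lab": "192.168.10.22",
    "m01vrlimstr.vcf.sddc.lab": "192.168.10.23",
    "m01vrliwrkr01.vcf.sddc.lab": "192.168.10.24",
    "m01vrliwrkr02.vcf.sddc.lab": "192.168.10.25",
}


def outside_segment(records: Dict[str, str], segment_cidr: str) -> List[str]:
    """Return the FQDNs whose planned IP address is not inside the segment subnet."""
    network = ipaddress.ip_network(segment_cidr)
    return [
        fqdn
        for fqdn, ip in records.items()
        if ipaddress.ip_address(ip) not in network
    ]


# Assumed segment subnet (hypothetical); an empty list means every address fits.
print(outside_segment(VRLI_RECORDS, "192.168.10.0/24"))
```

The same check can be run against the addresses planned in the requirements table, so that any entry that does not belong to the chosen segment is caught before the precheck.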

From vRSLCM, Navigate to Locker > Certificate > Generate for self-signed or Generate CSR for external CA

Either Generate a new certificate or import a certificate

vRealize Log Insight Create Environment

From vRSLCM dashboard go to Lifecycle Operations, then Create Environment

Add VCF POC Log Insight

| Setting | Value |
| --- | --- |
| Environment name | VCF POC vRli |
| Administrator email | |
| Default Password | Global Admin Password |
| Select Datacenter | m01-dc01 |

Select vRli with deployment type of Cluster

Click Next and Accept the EULA.

Select license, click Validate Association, and click Next

Select vRealize Log Insight Certificate that was created earlier and click next.

Verify infrastructure details, click next.

Note: The NSX-T Segment should match the VCF deployment.

Verify Network Details

Install Log Insight

For the purposes of this POC document we will select the “Small” form factor for Node Size

Select Certificate, DRS Anti-affinity rule and integrate with Identity Manager

Add the IP addresses and FQDNs for the Cluster VIP, Master, and two worker nodes

Run Precheck once all IP addresses and FQDN have been entered.

Address any issues and re-run pre-check

Once all prechecks are validated, review the configuration and initiate deployment

Deployment can be monitored by vRSLCM, vCenter and SDDC manager.

Once vRLI has been deployed, Navigate to SDDC Manager – vRealize Suite and verify vRealize Log Insight has been registered to VCF

Verify vRealize Log Insight connection to vRealize Operations Integration

Using a web browser navigate to vRli master node FQDN

Login as “admin”

Navigate to Administration > Integration > vRealize Operations

Ensure vROPs hostname and password are pointing to vROPS instance.

Click Test to verify setting

If not already enabled, enable alert management, launch in context and metric calculation.

To update content packs, navigate to Content Packs and then Updates.

Click Update All.

Section 4 Solution Deployment guidelines

Deploying vSphere 7.0 with Tanzu on VCF

vSphere with Tanzu provides the capability to create upstream compliant Kubernetes clusters within dedicated resource pools by leveraging Tanzu Kubernetes Clusters. Another advantage of vSphere with Tanzu is the ability to run Kubernetes workloads directly on ESXi hosts (vSphere Pods).

vSphere with Tanzu brings Kubernetes awareness to vSphere and bridges the gap between IT Operations and Developers. This awareness fosters collaboration between vSphere Administrators and DevOps teams as both roles are working with the same objects.

IT Operators continue to provision, view and monitor their virtual infrastructure as they have always done, but now with the Kubernetes awareness and insight that has eluded them in the past.

Developers can now deploy K8s and container-based workloads directly on vSphere using the same methods and tools they have always used in the public cloud. VMware vSphere with Tanzu provides flexibility as developers can choose to run pods native to ESXi (native pods) or inside purpose-built Kubernetes clusters hosted on top of namespaces configured on the vSphere clusters (Tanzu Kubernetes Clusters).

Both teams benefit by being able to use their existing tools; nobody has to change the way they work, learn new tools, or make concessions. At the same time, both teams have a consistent view and are able to manage the same objects.

Running vSphere with Tanzu on VMware Cloud Foundation (VCF) provides a best-in-class modern hybrid cloud platform for hosting both traditional and modern application workloads. VMware Cloud Foundation is a proven, prescriptive approach for implementing a modern VMware based private cloud. One of the key benefits of VCF is the advanced automation capabilities to deploy, configure, and manage the full VMware SDDC software stack including products such as vSphere with Tanzu, vSAN, and NSX among others.

Enabling vSphere with Tanzu

In order to enable vSphere with Tanzu it is necessary to complete a set of tasks. vSphere with Tanzu will be deployed in a Virtual Infrastructure Workload Domain; however, there is also an option to deploy vSphere with Tanzu on a Consolidated VCF deployment (Management Domain). For more information about vSphere with Tanzu supportability on VCF Management Domain please refer to this Blog Post and this White Paper. An NSX-T Edge Cluster will be required as well as tasks including enabling Workload Management, creating a content library, creating a namespace, deploying harbor, obtaining CLI Tools, creating guest clusters and deploying containers.

This is a workflow overview of the procedure from a two-persona perspective (IT Operator and Developer).

vSphere with Tanzu Requirements

The requirements are as below; a VI workload domain needs to be created with at least three hosts, backed by an NSX-T edge cluster.

vSphere with Tanzu on Consolidated Architecture Requirements

This is a special case whereby a K8s cluster can be stood up with just four hosts in total. In order to achieve this, an NSX Edge cluster must be created for the Management domain. Application Virtual Networks (AVNs) are now supported on the management domain together with K8s. The requirements are:

- Cloud Foundation 4.X deployed with one vSphere cluster on the management domain

- NSX-T configured (edge cluster (large form factor) created, hosts added, etc.)

- Enough capacity on the vSAN datastore for all components

NOTE: vSphere with Tanzu on consolidated architecture requires some important steps to be followed. Please refer to this document for step-by-step instructions: https://blogs.vmware.com/cloud-foundation/files/2020/05/VMW-WP-vSphr-KUBERNETES-USLET-101-WEB.pdf

See this blog post for more information: https://cormachogan.com/2020/05/26/vsphere-with-kubernetes-on-vcf-4-0-consolidated-architecture/

Creating VI Workload Domain

Creating a VI Workload Domain (VI WLD) falls in the IT Operator persona. The IT Operator will create a new VI WLD from SDDC Manager by following the steps from the corresponding POC section. However, there are a few aspects that should be taken into consideration when creating a VI WLD for the vSphere with Tanzu use case.

Note that the VI WLD for Kubernetes should be created using VUM (as opposed to vLCM):

Requirements:

- Minimum of 3 hosts; 4 or more hosts recommended

- Licensed for vSphere with Tanzu

- New NSX-T Fabric

- VI WLD with VUM enabled (no vLCM)