vSAN RA - Target Workloads - Delphix Dynamic Data Platform on VMware vSAN

Executive Summary

This section covers the business case, solution overview, solution benefits, and key results of the Delphix Dynamic Data Platform on VMware vSAN solution.

Business Case

In today’s digital economy, data is essential to a company’s success and competitive advantage. From bottom-line efficiency to top-line growth and innovation, turning data into action requires fast and secure access to data across the company.

Delphix addresses this need with DataOps, an approach to optimizing data workflows across a business. Delphix solutions meet changing data management requirements by transforming the decades-old, traditional approach to data cloning, data security, and data refresh.

Having high-performance and cost-effective infrastructure hardware and software is critical to delivering a Delphix solution for agile mission-critical workloads.

Companies can accelerate their infrastructure modernization by using VMware vSAN™ together with the Delphix Dynamic Data Platform. The combination provides a comprehensive approach to DataOps, makes IT strategic and cost-effective, and helps customers evolve their data center without risk, control IT costs, and scale to meet tomorrow’s business needs.

Why Delphix on VMware vSAN?

VMware vSAN and Delphix are market leaders in their respective domains, and many enterprise customers have asked for solutions to run the Delphix Dynamic Data Platform on vSAN with VMware-certified x86 servers. Combining vSAN and Delphix provides the following benefits:

- Traditional IT silos of compute, storage, and networking are eliminated.

- All storage management moves into a single software stack, taking advantage of the security, performance, scalability, operational simplicity, and cost-effectiveness of vSAN.

- Workloads can be easily migrated from traditional storage arrays to a modern, dynamic, and consolidated hyperconverged infrastructure based on vSAN.

Delphix solutions have been hosted and proven on the VMware vSphere platform for many years. Because vSAN is natively integrated with vSphere, hosting Delphix on vSAN storage is a familiar deployment model for vSphere customers.

Solution Overview

This joint solution showcases VMware vSAN as a platform for deploying the Delphix Dynamic Data Platform in a VMware vSphere® environment, and provides validated test results for a TPC-C like workload under varying configuration parameters.

All storage management moves into a single software stack, thus taking advantage of the security, operational simplicity, and cost-effectiveness of vSAN.

Workloads can be easily migrated from bare-metal configurations to a modern, dynamic, and consolidated hyperconverged infrastructure based on vSAN.

vSAN is natively integrated with vSphere, which helps to provide smarter solutions to reduce the design and operational burden of a data center.

Key Results

The technical white paper:

- Provides the solution architecture for deploying Delphix in a vSAN Cluster for development.

- Measures the baseline performance when running TPC-C like workloads on a Delphix Dynamic Data Platform.

- Evaluates the impact of different parameter settings on performance.

- Provides best practice guidance.

Audience

This reference architecture is intended for virtualization administrators, Delphix administrators, and storage architects involved in planning, designing, or administering the Delphix Dynamic Data Platform on vSAN.

Terminology

This paper uses the following terms.

Table 1. Terminology [1]

| Term | Definition |
|---|---|
| Delphix Engine or Delphix VM | A virtual machine containing a Delphix installation. It uses the storage allocated to the VM to store compressed copies of source data, and supplies data to target VMs over NFS and iSCSI. |
| dSource | The virtualized representation of a database that is created by the Delphix Engine. As a virtualized representation, it cannot be managed, manipulated, or examined by database tools. |
| VDB (virtual database) | A database provisioned from either a dSource or another VDB, which is a full read/write copy of the source data. A VDB is created and managed by the Delphix Engine. |
| DxFS | The file system used by the Delphix Engine. It stores and manages application data and is responsible for optimizing storage and performance. |
| SnapSync | The standard process for importing data from a linked source into the Delphix Engine. An initial SnapSync creates a copy of the data on the Delphix Engine; incremental SnapSyncs update that copy. |
| Source database | The original (sometimes physical) database, usually the production database at a site, although it can be any database that the user designates as a source. Delphix creates a dSource from the source database. |
| Target VM | The VM to which the Delphix Engine provisions VDBs. |

Technology Overview

This section provides an overview of the technologies used in this solution:

- VMware vSphere 6.5

- VMware vSAN 6.6

- Delphix Dynamic Data Platform

VMware vSphere 6.5

VMware vSphere 6.5 is the infrastructure for next-generation applications. It provides a powerful, flexible, and secure foundation for business agility that accelerates the digital transformation to cloud computing and promotes success in the digital economy.

vSphere 6.5 supports both existing and next-generation applications through its:

- Simplified customer experience for automation and management at scale

- Comprehensive built-in security for protecting data, infrastructure, and access

- Universal application platform for running any application anywhere

With vSphere 6.5, customers can run, manage, connect, and secure their applications in a common operating environment, across clouds and devices.

VMware vSAN 6.6

VMware vSAN, the market leader in hyperconverged infrastructure (HCI), enables low-cost, high-performance next-generation HCI solutions. It converges traditional IT infrastructure silos onto industry-standard servers and virtualizes physical infrastructure, helping customers evolve their infrastructure without risk, improve TCO over traditional resource silos, and scale to tomorrow with support for new hardware, applications, and cloud strategies. The natively integrated VMware infrastructure combines radically simple VMware vSAN storage, the market-leading VMware vSphere Hypervisor, and the VMware vCenter Server® unified management solution, all on the broadest and deepest set of HCI deployment options.

See VMware vSAN 6.6 Technical Overview [1] for details.

Figure 1. vSAN HCI Advantages

Delphix Dynamic Data Platform

The Delphix Dynamic Data Platform provides a comprehensive approach to DataOps, enabling companies to easily deliver and secure data, wherever it exists. With Delphix, businesses manage data distribution and access with the speed, simplicity, and level of security required to drive digital transformation.

The Delphix Dynamic Data Platform reduces data friction by providing a collaborative platform for data operators (such as DBAs, InfoSec, and IT Operations teams) and data consumers (such as developers, QAs, analysts, and data scientists), ensuring that sensitive data is secured and that the right data is made available to the right people, when and where they need it.

Delphix bridges the gap between people and data through five key steps:

- Connect: Non-disruptively collect data from databases, applications, and file systems. After compressing this data, the platform stays synchronized with sources by recording all changes over time.

- Virtualize: Through intelligent data block sharing, create virtual copies of the sources that are space-efficient, portable, and fully readable/writeable.

- Secure: Discover sensitive data and automatically apply data masking or tokenization—with the flexibility to define custom algorithms—to scramble confidential information in copies. Define policies that set privileges and integrate access control into data governance workflows.

- Manage: Quickly provision secure data copies to users in their target environments with functionality to audit, monitor, and report against access and usage.

- Self-service: Provide developers, testers, analysts, data scientists, or other users with controls to manipulate data at will. Users can refresh data to reflect the latest state of production, rewind environments to a prior point in time, bookmark data copies for later use, branch data copies to work across multiple releases, or easily share data with other users.

Solution Configuration

This section introduces the resources and configurations for the solution, including an architecture diagram, hardware and software resources, and other relevant configurations.

Overview

This section introduces the component configuration for the solution including:

- Solution architecture

- Hardware resources

- Software resources

- Network configuration

- vSAN policy

- Delphix, test and development database VM configuration

- Delphix VM

- Oracle database configuration

Solution Architecture

To ensure continued data protection and availability during planned or unplanned downtime, a minimum of four nodes is recommended for the vSAN Cluster. In this reference solution, a 4-node vSAN Cluster was used to validate cluster functions for typical Delphix workloads and the scaling of test/dev environments using the Delphix Dynamic Data Platform.

A typical configuration includes two or more disk groups per node. Custom vSAN storage policies can be created for different use cases to satisfy performance, resource commitment, failure tolerance, checksum, and quality-of-service requirements in an application-centric way. An all-flash configuration is recommended for high-performance requirements.

Figure 2. Solution Architecture

The vSAN-enabled vSphere Cluster in the Delphix testing environment consists of four DELL R630 servers hosting the Delphix Engine and the test/dev VMs that host the VDBs. This is an all-flash vSAN and vSphere Cluster. The production database ran on a separate 4-node vSAN and vSphere Cluster and was used for the initial copy to the Delphix Engine and for subsequent incremental copies intelligently managed by Delphix. The production database (also called the source database) is used to create the dSource on the Delphix Engine. Because all testing was run on the test/dev vSphere Cluster, the details of the production vSphere Cluster are not significant to this reference architecture and are not provided here. The hardware and software configurations below are for the vSphere Cluster hosting the Delphix Engine and the test/dev VMs.

Hardware Resources

Table 2. Hardware Resources

| Property | Specification |
|---|---|
| Server | DELL R630 |
| CPU cores | 2 sockets, 12 cores each at 2.3GHz, hyper-threading enabled |
| RAM | 256GB |
| Network adapter | 2 x 10Gb NIC, 1 x 1Gb NIC |
| Storage adapter | SAS Controller Dell LSI PERC H730 Mini |
| Disks | Cache-layer SSD: 2 x 400GB SATA SSD Intel S3710; Capacity-layer SSD: 8 x 400GB SATA SSD Intel S3610 |
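The disk layout in Table 2 determines the raw vSAN capacity of the cluster. The sketch below works it out, assuming that each cache SSD fronts its own disk group (vSAN uses exactly one cache device per disk group) and that the capacity SSDs are split evenly between the two groups. Cache-tier devices do not add to datastore capacity, and the figures are raw, before any storage policy overhead or slack space.

```python
# Raw vSAN capacity implied by Table 2.
# Assumptions: one disk group per cache SSD, capacity SSDs split evenly
# across disk groups, decimal GB as printed on the drives.
HOSTS = 4
CACHE_SSDS_PER_HOST = 2      # Intel S3710, cache tier
CAPACITY_SSDS_PER_HOST = 8   # Intel S3610, capacity tier
SSD_SIZE_GB = 400

disk_groups_per_host = CACHE_SSDS_PER_HOST               # one cache device per disk group
capacity_ssds_per_group = CAPACITY_SSDS_PER_HOST // disk_groups_per_host
raw_per_host_gb = CAPACITY_SSDS_PER_HOST * SSD_SIZE_GB   # cache tier excluded
raw_cluster_gb = HOSTS * raw_per_host_gb

print(f"{disk_groups_per_host} disk groups per host, "
      f"{capacity_ssds_per_group} capacity SSDs per group")
print(f"raw capacity: {raw_per_host_gb} GB per host, {raw_cluster_gb} GB per cluster")
```

Under these assumptions each host has two disk groups of four capacity SSDs, for 3,200GB of raw capacity per host and 12,800GB per cluster, which fits the "two or more disk groups per node" guidance.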

Software Resources

Table 3. Software Resources

| Product/Software Name | Version |
|---|---|
| VMware ESXi | 6.5.0d |
| VMware vSAN | 6.6 |
| Delphix | 5.1.7 |
| Oracle Database | 12.1.0.2.0 |
| Database VM operating system | Oracle Linux 7.3 |
| Oracle TPC Workload Generator | Swingbench 2.6 (TPC-C like workload) |

Network Configuration

A VMware vSphere Distributed Switch™ (VDS) acts as a single virtual switch across all associated hosts in the data cluster. This setup allows virtual machines to maintain a consistent network configuration as they migrate across hosts. The vSphere Distributed Switch uses two 10GbE adapters per host. Figure 3 shows the VDS configuration for one of the ESXi hosts. Link Aggregation Control Protocol (LACP) is used to combine the two 10GbE network connections. When NIC teaming is configured with LACP, vSAN network traffic is load balanced across multiple uplinks; however, this balancing happens at the network layer, not within vSAN. The physical network switch is also configured with LACP so that a link aggregation group (LAG) is formed. For details about LAG for vSAN, refer to the VMware vSAN Network Design guide.

Figure 3. Distributed Switch with Port Groups
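LACP spreads traffic by hashing each flow's header fields onto one member link, so a single flow always uses the same uplink and a small number of heavy flows can load the uplinks unevenly. The sketch below illustrates the idea only: CRC32 is a stand-in for the real hash, which is selected in the VDS LAG load-balancing settings, and the IP addresses, ports, and the `pick_uplink` helper are hypothetical. This per-flow behavior may help explain the unequal vmnic1 and vmnic2 utilization reported in the baseline test.

```python
import zlib

UPLINKS = ["vmnic1", "vmnic2"]   # the two 10GbE LAG members

def pick_uplink(src_ip: str, dst_ip: str, src_port: int, dst_port: int) -> str:
    """Map a flow to one LAG member by hashing its header fields.

    CRC32 is a stand-in; the real hash is whatever load-balancing mode
    is configured on the VDS LAG and the physical switch.
    """
    key = f"{src_ip}|{dst_ip}|{src_port}|{dst_port}".encode()
    return UPLINKS[zlib.crc32(key) % len(UPLINKS)]

# Hypothetical NFS flows (port 2049) between six target VMs and the Delphix VM.
flows = [(f"192.168.10.{i}", "192.168.10.100", 40000 + i, 2049) for i in range(1, 7)]
for flow in flows:
    print(flow[0], "->", pick_uplink(*flow))
```

Because the mapping depends only on the flow's header fields, adding uplinks raises aggregate bandwidth for many flows but does not speed up any single flow.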

A port group defines properties regarding security, traffic shaping, and NIC teaming. Jumbo frames (MTU=9000 bytes) were enabled on the vSAN interface, and the default port group settings were used. Figure 3 shows the distributed switch with the port groups created for different functions. Two port groups were created:

- VM management port group for VMs (Delphix VM and target VM for test/dev)

- vSAN port group for the kernel port used by vSAN traffic

vSAN Policy

We used vSAN policies to set the availability, capacity, and performance policy on a per-VM or per-object basis when a VM is deployed on the vSAN datastore. The key policy parameters discussed in this solution include:

- Failure tolerance method: Specifies whether the data replication method optimizes for performance or capacity. RAID 1 (Mirroring) uses more disk space to place the components of objects but provides better performance for accessing them. The alternative, RAID 5/6 (Erasure Coding), optimizes for capacity: it uses less disk space, but performance is reduced.

- Primary level of Failures to Tolerate (PFTT): Defines the number of host and device failures that a virtual machine object can tolerate. The greater the PFTT, the higher the availability and the more space the objects consume on the vSAN datastore.
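The capacity and host-count trade-off between the two failure tolerance methods can be made concrete. The sketch below uses the standard vSAN rules: RAID 1 keeps PFTT + 1 full replicas and needs 2 x PFTT + 1 hosts, RAID 5 (3 data + 1 parity) covers PFTT=1 on at least 4 hosts, and RAID 6 (4 data + 2 parity) covers PFTT=2 on at least 6 hosts. Witness components and slack space are ignored, and the `policy_footprint` helper is hypothetical. It is applied to the 2.1TB (12 x 175GB) of Delphix data disks used in this paper.

```python
from fractions import Fraction

def policy_footprint(method: str, pftt: int) -> tuple[Fraction, int]:
    """Return (raw capacity multiplier, minimum hosts) for a vSAN policy.

    Witness components and slack space are not included.
    """
    if method == "RAID-1":
        return Fraction(pftt + 1), 2 * pftt + 1   # pftt + 1 full replicas
    if method == "RAID-5/6":
        if pftt == 1:
            return Fraction(4, 3), 4              # RAID 5: 3 data + 1 parity
        if pftt == 2:
            return Fraction(6, 4), 6              # RAID 6: 4 data + 2 parity
    raise ValueError(f"unsupported combination: {method}, PFTT={pftt}")

DELPHIX_DATA_GB = 12 * 175   # the Delphix Engine data disks
for method in ("RAID-1", "RAID-5/6"):
    mult, hosts = policy_footprint(method, pftt=1)
    print(f"{method}: {float(mult):.2f}x -> "
          f"{float(DELPHIX_DATA_GB * mult):.0f} GB raw, min {hosts} hosts")
```

With PFTT=1, mirroring the 2,100GB of data consumes about 4,200GB of raw capacity, while RAID 5 consumes about 2,800GB but needs at least four hosts, which the 4-node cluster in this paper satisfies.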

Delphix, Test and Development Database VM Configuration

In the 4-node vSphere Cluster, the Delphix VM was placed on one ESXi host and the test/dev VMs were spread across the other three ESXi hosts. Hosting the test/dev VMs and Delphix on the same vSphere Cluster made efficient use of the compute and storage available in the cluster. vSphere HA was enabled so that VMs would be restarted on other available hosts in the cluster if a host failed.

Figure 4. vSAN Datastore

Delphix VM

The Delphix VM was configured with different CPU and memory settings to understand its behavior during the workload tests. Although the recommended minimum memory for the Delphix VM is 64GB, it was purposely configured low (16GB and 32GB) so that the workload was disk IO bound, generating more IOPS on the vSAN Cluster and fewer cache hits. In a real-world scenario, depending on the application and its requirements, the memory allocated to the Delphix VM may be much higher to meet high-performance requirements.

Choose the size of the data disks based on the storage capacity required for the Delphix Engine, and use multiple virtual disks to help improve performance by reducing the inherent limitations of SCSI, such as per-disk queuing. When choosing disk sizes, keep in mind that the maximum number of vSCSI targets supported per VM is 60. In a large Delphix environment, the disks allocated may be significantly larger. In this reference architecture, 2.1TB is allocated to the Delphix Engine: Table 4 shows how twelve 175GB data disks were allocated to the Delphix Engine and evenly balanced across four LSI Logic Parallel SCSI controllers. Only the data disks were considered when balancing across the vSCSI controllers; the Delphix system disk was not.

All these disks were provisioned eager zeroed thick (EZT), as recommended by Delphix. Unlike on a VMFS datastore, an EZT disk created on a vSAN datastore is not zeroed at creation; however, it reserves space in the vSAN datastore and can provide consistent performance.

Delphix creates its own file system, DxFS, on the assigned storage. DxFS handles snapshots, cloning, and compression automatically and transparently to users.

Table 4. Data Disk Allocation

| Name | SCSI Type | SCSI ID (Controller, LUN) | Size (GB) |
|---|---|---|---|
| Delphix system disk | LSI Logic | SCSI (0:0) | 300 |
| Data disk 1 | LSI Logic | SCSI (0:1) | 175 |
| Data disk 2 | LSI Logic | SCSI (1:0) | 175 |
| Data disk 3 | LSI Logic | SCSI (2:0) | 175 |
| Data disk 4 | LSI Logic | SCSI (3:0) | 175 |
| Data disk 5 | LSI Logic | SCSI (0:2) | 175 |
| Data disk 6 | LSI Logic | SCSI (1:1) | 175 |
| Data disk 7 | LSI Logic | SCSI (2:1) | 175 |
| Data disk 8 | LSI Logic | SCSI (3:1) | 175 |
| Data disk 9 | LSI Logic | SCSI (0:3) | 175 |
| Data disk 10 | LSI Logic | SCSI (1:2) | 175 |
| Data disk 11 | LSI Logic | SCSI (2:2) | 175 |
| Data disk 12 | LSI Logic | SCSI (3:2) | 175 |
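The layout in Table 4 follows a simple round-robin rule: data disk n goes to controller (n - 1) mod 4 and takes the next free unit number on that controller, with unit 0 on controller 0 already used by the system disk. The sketch below reproduces the table under that reading; the `layout_data_disks` helper is hypothetical, and it also skips unit 7, which vSphere reserves for the SCSI controller itself.

```python
CONTROLLERS = 4
MAX_TARGETS_PER_VM = 60          # 4 vSCSI controllers x 15 devices

def layout_data_disks(count: int, size_gb: int) -> list[tuple[str, str, int]]:
    """Assign data disks round-robin across vSCSI controllers (as in Table 4)."""
    next_unit = [1] + [0] * (CONTROLLERS - 1)   # (0:0) holds the system disk
    layout = []
    for i in range(count):
        ctrl = i % CONTROLLERS
        if next_unit[ctrl] == 7:                # unit 7 is reserved for the controller
            next_unit[ctrl] += 1
        layout.append((f"Data disk {i + 1}", f"SCSI ({ctrl}:{next_unit[ctrl]})", size_gb))
        next_unit[ctrl] += 1
    return layout

disks = layout_data_disks(count=12, size_gb=175)
assert len(disks) + 1 <= MAX_TARGETS_PER_VM     # +1 for the system disk

for name, scsi_id, size in disks:
    print(f"{name:<13} {scsi_id:<11} {size}")
print("total data capacity:", sum(size for _, _, size in disks), "GB")
```

Each controller ends up with three data disks, and the total is the 2,100GB allocated to the Delphix Engine.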

Two different vSAN policy settings were tested in this solution as shown in Table 5.

Table 5. vSAN Policy Used in this Solution

| vSAN Policy Name | Protection | Object Space Reservation (OSR) |
|---|---|---|
| R1 (Default Policy) | Mirror (PFTT=1) | 100% |
| R5 | Erasure Coding (R5) | 100% |

All other vSAN policy parameters were left at their default values: the stripe width was 1 and checksum was enabled. Because the Delphix data disks are created with the EZT option, the object space reservation (OSR) is 100% by default even if it is not explicitly set.

Oracle Database Configuration

Virtual databases (VDBs) were configured as follows:

- System Global Area (SGA) was set to 5GB, Program Global Area (PGA) was set to 1GB

- Three redo log groups, each 2GB

- Archive log enabled

The database cache was configured low so that the workload was disk IO bound, which helped push physical IOs to the vSAN layer. The primary purpose of this exercise was to validate vSAN storage IO capability for Delphix workloads, not to cover the CPU and memory capability of vSphere in detail. Delphix on vSphere is deployed at many customers and has been running successfully for many years, and its CPU and memory requirements are well documented. For details, refer to Architecture Best Practices for Delphix on ESXi Host.

Solution Validation

In this section, we present the test methodologies and results used to validate this solution.

Test Overview

We validated the health and suitability of the vSAN-enabled vSphere Cluster for Delphix using Delphix HostChecker, the Delphix IO report card, and the Delphix Network Performance tool. Once the environment was verified, the following high-level steps were performed to make it ready for testing.

Delphix supports different types of databases; an Oracle database was used in this testing, and the source database size is 450GB. First, register the source database server in the Delphix Engine. Then link the source database on that server to the Delphix Engine. Linking performs the initial full backup of the source database onto Delphix through Recovery Manager (RMAN) APIs. The RMAN APIs run automatically in the background and are not visible to Delphix users. This full backup of the source database, created on the Delphix Engine, is a virtualized representation of the database called a dSource. Subsequent syncs from the source database to the dSource are incremental, using the SnapSync mechanism. A dSource cannot be managed, manipulated, or examined by database tools; instead, VDBs are used to create and distribute copies of the dSource.

In our testing, multiple VDBs were created from a single dSource using the Delphix user interface. Provisioning each VDB from the dSource took approximately 2 to 3 minutes. The VDBs were provisioned to target (test/dev) VMs over NFS, with each VDB hosted on a separate VM. These target VMs ran on three ESXi hosts (ESXi 2, ESXi 3, and ESXi 4). Three or six VMs were used to mount the VDBs, depending on the number of VDBs provisioned during the test.

We then ran the following tests to generate the workload and observe performance:
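Provisioning a VDB in minutes rather than copying 450GB is possible because VDBs share unchanged data blocks with the dSource they come from. The toy model below illustrates that copy-on-write idea only: the `Snapshot` and `VirtualCopy` classes are hypothetical, and the model is not a description of how DxFS is implemented.

```python
class Snapshot:
    """Toy dSource snapshot: a read-only map of block number -> contents."""
    def __init__(self, blocks: dict[int, bytes]):
        self.blocks = dict(blocks)

class VirtualCopy:
    """Hypothetical VDB that shares unchanged blocks with its parent snapshot."""
    def __init__(self, parent: Snapshot):
        self.parent = parent
        self.private: dict[int, bytes] = {}    # only blocks this copy has written

    def read(self, block: int) -> bytes:
        # Prefer this copy's own version; otherwise use the shared parent block.
        if block in self.private:
            return self.private[block]
        return self.parent.blocks[block]

    def write(self, block: int, data: bytes) -> None:
        self.private[block] = data             # the shared parent block is untouched

# Full initial sync of a (toy) 1,000-block source database into a snapshot.
snapshot = Snapshot({n: f"row-{n}".encode() for n in range(1000)})

# Provisioning copies no data blocks, which is why it is fast.
vdbs = [VirtualCopy(snapshot) for _ in range(3)]
vdbs[0].write(42, b"test data")

print("shared blocks:", len(snapshot.blocks))
print("private blocks per VDB:", [len(v.private) for v in vdbs])
```

Only the block written by the first VDB consumes new space; the other two VDBs still read the original block from the shared snapshot.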

- Baseline testing: Read-intensive TPC-C like workload on Delphix VDBs

- Increasing the vCPUs allocated to the Delphix VM and rerunning the baseline test

- Increasing the memory allocated to the Delphix VM and rerunning the baseline test

- Scaling the number of VDBs and rerunning the baseline test

- Write-intensive TPC-C like workload with different vSAN policies

Workload Generator

Swingbench is an Oracle workload generator designed to test an Oracle database. It is a free tool and provides options to run different benchmarks such as order entry (TPC-C like), sales history, and TPC-DS like. We used Swingbench order entry to generate Oracle TPC-C like workload for the testing.

The Swingbench VM was set up to generate two different TPC-C like workloads:

- Read-intensive workload: Primarily Browse Products, Browse Orders, and Sales Rep Query transactions.

- Write-intensive workload: Primarily Customer Registration, Update Customer Details, Order Products, and Process Orders transactions, with a few Browse Products and Sales Rep Query transactions.

Both workloads were set up to generate high IO throughput at the VDB level, which was also observed at the vSAN level. All tests were run for one hour, and the metrics shown are averages over this period unless otherwise mentioned.

Health and Performance Data Collection Tools

We used the following vSphere, vSAN, and Delphix performance data collection tools and configuration checking tools to check the readiness before and during running workload tests:

- vSAN Performance Service: vSAN Performance Service is used to monitor the performance of the vSAN environment, using the web client. The performance service collects and analyzes performance statistics and displays the data in a graphical format. You can use the performance charts to manage your workload and determine the root cause of problems.

- vSAN Health Check: vSAN Health Check delivers a simplified troubleshooting and monitoring experience for all things related to vSAN. Through the web client, it offers multiple health checks specifically for vSAN, including cluster, hardware compatibility, data, limits, and physical disks. It was used to check vSAN health before deploying Delphix. See vSAN Health Check Information for more information.

- esxtop utility: esxtop is a command-line utility that provides a detailed view of the ESXi resource usage. Refer to the VMware Knowledge Base Article 1008205 for more information.

- Delphix IO report card: Delphix IO report card is a fio-based storage performance tool that executes a synthetic workload to evaluate the performance characteristics of the storage assigned to the Delphix Engine. The Storage Performance Tool is a feature that is only available from the command-line interface (CLI). Because the test is destructive, the Storage Performance Tool can only be run before setting up the Delphix Engine, or when adding new storage devices.

- Delphix HostChecker: The HostChecker is a standalone program that validates whether host machines are configured correctly before the Delphix Engine uses them as data sources and provision targets.

- Delphix Network Performance tool: This iperf-based Network Performance Test Tool executes a synthetic workload on the network to evaluate the performance characteristics available between the Delphix Engine and the Target servers.

- Oracle AWR reports with Automatic Database Diagnostic Monitor (ADDM): Automatic Workload Repository (AWR) collects, processes, and maintains performance statistics in an Oracle database for problem detection and self-tuning. It can generate reports for analyzing Oracle performance.

Note: Any vSAN performance data is a result of the combination of hardware configuration, software configuration, test methodology, test tool, and workload profile used in the testing.

Baseline Testing: Read-Intensive TPC-C Like Workload on Delphix VDBs

In this test, a read-intensive TPC-C like workload was run on three VDBs using Swingbench. The Delphix VM was configured with 24 vCPUs and 16GB of memory. The test was run for one hour using the R1 (Mirror) vSAN storage policy.

The AWR reports of the three VDBs were captured, and a snippet of the physical IO is shown in Figure 5. At the VDB level, there were approximately 43,000 read IOPS and 340MBps of IO throughput on each VDB. The aggregate IOPS and IO throughput were 129,000 and 1,020MBps, respectively. Some of these physical IOs were masked from vSAN by caching at the Delphix level.

Note: The figures in the AWR chart are in bytes; we converted them to MB in the text for readability.

Figure 5. Oracle AWR Snippet from VDBs

The IO metrics were also captured at the vSAN level using the vSAN Performance Service. Figure 6 provides the storage metrics of the Delphix VM, which is the only VM on host “ESXi 1” (w2-pe-vsan-esx-045). The workload was steady throughout the one-hour test period. As shown in the vSAN Performance Service graph, the total disk IOPS and throughput observed on the Delphix VM were steady at around 100,000 IOPS and 910MBps, respectively. The read latency was around 1.44ms.

Figure 6. vSAN Performance Service Graph
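The VDB-level and vSAN-level figures above can be reconciled with simple arithmetic. The sketch assumes 1MB = 1,024KB and treats the gap between the aggregate VDB read IOPS and the IOPS seen at the Delphix VM as a rough indication of IO absorbed by Delphix caching; the two tools measure at different layers, so the result is only an estimate.

```python
VDBS = 3
VDB_READ_IOPS = 43_000   # per VDB, from the AWR reports
VDB_MBPS = 340           # per VDB, from the AWR reports
VSAN_IOPS = 100_000      # Delphix VM, vSAN Performance Service
VSAN_MBPS = 910          # Delphix VM, vSAN Performance Service

agg_iops = VDBS * VDB_READ_IOPS
agg_mbps = VDBS * VDB_MBPS
avg_io_kb = VDB_MBPS * 1024 / VDB_READ_IOPS   # assumes 1 MB = 1,024 KB
absorbed = 1 - VSAN_IOPS / agg_iops           # rough share not reaching vSAN

print(f"aggregate: {agg_iops:,} IOPS, {agg_mbps:,} MBps")
print(f"average VDB read size: {avg_io_kb:.1f} KB")
print(f"IOs not reaching vSAN: {absorbed:.0%}")
```

This gives about 8.1KB per read, in line with Oracle's default 8KB block size (the block size used in this test is not stated), and suggests that roughly 22% of the VDB-level reads were served before reaching vSAN.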

Because there is significant network traffic from both Delphix and vSAN, network utilization is a key metric to observe during this workload: at the Delphix level for serving database files over NFS, and at the vSAN level for storage traffic. Figure 7 shows the aggregate network traffic at the ESXi 1 host (w2-pe-vsan-esx-045) level, which includes both vSAN and Delphix (VM) traffic. The total network throughput was 1,850MBps, with vmnic1 utilization at 1,150MBps and vmnic2 utilization at 700MBps.

Figure 8 shows the network traffic at the Delphix VM level, which is hosted on ESXi 1 (w2-pe-vsan-esx-045). The total network utilization was 1,170MBps with vmnic1 utilization at 780MBps and vmnic2 utilization at 390MBps.


Figure 7. ESXi 1 Host Level (Includes vSAN and Delphix Traffic)


Figure 8. Delphix VM Level (Only Delphix Traffic)
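Converting the measured throughput to link utilization shows why network sizing matters on vSAN. The sketch uses decimal units (1MBps = 8Mbps) and attributes all host traffic that is not Delphix VM traffic to vSAN, which is an approximation.

```python
LINK_GBPS = 10                                 # each vmnic is a 10GbE uplink
HOST_MBPS = {"vmnic1": 1_150, "vmnic2": 700}   # ESXi 1: vSAN + Delphix traffic
DELPHIX_MBPS = {"vmnic1": 780, "vmnic2": 390}  # Delphix VM traffic only

def to_gbps(mbytes_per_s: float) -> float:
    """Convert MB/s to Gbit/s (decimal units, 8 bits per byte)."""
    return mbytes_per_s * 8 / 1000

for nic, mbps in HOST_MBPS.items():
    gbps = to_gbps(mbps)
    print(f"{nic}: {gbps:.1f} Gbps = {gbps / LINK_GBPS:.0%} of link")

host_total = sum(HOST_MBPS.values())
non_delphix = host_total - sum(DELPHIX_MBPS.values())   # mostly vSAN traffic
print(f"host total {host_total} MBps, of which ~{non_delphix} MBps is not Delphix VM traffic")
```

On these numbers vmnic1 runs at about 9.2Gbps, roughly 92% of a 10GbE link, and about 680MBps of the host's traffic is attributable to vSAN rather than to the Delphix VM.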

Increasing Delphix VM vCPU

Increasing the vCPUs allocated to the Delphix VM and running the same TPC-C like workload increased the IO workload, as long as other host resources (network and disk IO) were available. Figure 9 shows the IO workload during the TPC-C like run on three VDBs with two different Delphix vCPU configurations. The memory allocated was 16GB, and the rest of the configuration and workload were the same as in the previous test. We used the R1 storage policy in both tests.

Figure 9. IO Workload with Different Delphix vCPU Configuration

Increasing Delphix VM Memory

Increasing the memory allocated to the Delphix VM reduced the disk-bound IO. Figure 10 shows the IO workload during the same TPC-C like run on three VDBs with two different Delphix memory configurations (16GB and 32GB). 24 vCPUs were allocated, and the rest of the configuration and workload were the same as in the previous tests. We used the R1 storage policy in both tests.

Figure 10. Workload with Different Delphix VM Memory

Scaling the Number of VDBs

Increasing the number of VDBs and running the same workload on all of them would ideally drive more disk IO. However, if the Delphix VM does not have sufficient vCPU resources, contention increases, as shown in Figure 11. In this test, 8 vCPUs were allocated to the Delphix VM and the workload was run on 3 and 6 VDBs. The rest of the configuration was the same as in the earlier tests. We used the R1+C storage policy in both tests. vCPU utilization during the three-VDB test was already 100%, so increasing the number of VDBs to six did not increase IOPS because of the CPU bottleneck.

Figure 11. Workload with Scaling VDBs

Write-Intensive TPC-C Like Workload with Different vSAN Policies

In this test, a write-intensive TPC-C like workload was run on Delphix VDBs. The Delphix VM was configured with 16 vCPUs and 16GB of memory, and the Swingbench write-intensive workload was run on 6 VDBs. Different vSAN storage policies were compared, including the R1 storage policy used in the previous tests.

As shown in Figure 12 and Figure 13, vSAN Mirror provides better performance than RAID 5 (Erasure Coding). IO throughput decreased and write latency increased during RAID 5 testing because of the large-block, write-intensive workload. The vSCSI traces of this run show that 50% of write IOs were 128KB and the mean write IO size was 64KB. For write-intensive, latency-sensitive workloads, consider using Mirror instead of RAID 5. Delphix already provides storage efficiency in the form of highly compressed virtualized data copies, so choosing erasure coding may not be worthwhile in some cases because of its additional write penalty.

Figure 12. IOPS under Different vSAN Policy

Figure 13. IO Throughput and Latency under Different vSAN Policies
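The extra write penalty of erasure coding can be approximated with the standard backend IO model: with PFTT=1, a RAID 1 write becomes two replica writes, while a RAID 5 write that does not cover a full stripe becomes a read-modify-write of two reads and two writes. The sketch treats every RAID 5 write as partial-stripe and sizes every backend IO at the front-end write size; both are simplifying assumptions, the `backend_load` helper is hypothetical, and the 1,000 write IOPS input is illustrative (the 64KB size is the mean observed in the vSCSI traces).

```python
# Backend IOs per front-end write with PFTT=1 (textbook model; every RAID 5
# write is treated as a partial-stripe read-modify-write).
BACKEND_IOS_PER_WRITE = {
    "RAID 1": {"reads": 0, "writes": 2},   # one write to each mirror replica
    "RAID 5": {"reads": 2, "writes": 2},   # read old data + parity, write new data + parity
}

def backend_load(write_iops: int, write_kb: float, policy: str) -> tuple[int, float]:
    """Return (backend IOPS, backend MB/s), sizing every backend IO at write_kb."""
    ios = BACKEND_IOS_PER_WRITE[policy]
    backend_iops = write_iops * (ios["reads"] + ios["writes"])
    return backend_iops, backend_iops * write_kb / 1024

for policy in BACKEND_IOS_PER_WRITE:
    iops, mbps = backend_load(write_iops=1000, write_kb=64, policy=policy)
    print(f"{policy}: {iops} backend IOPS, {mbps:.0f} MB/s")
```

Under this model RAID 5 doubles the backend IO generated by each front-end write compared with mirroring, which is consistent with the lower throughput and higher write latency observed with the R5 policy.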

Best Practices

A well-designed and well-deployed vSAN is key to a successful implementation of a Delphix solution. Delphix solutions have been hosted and proven on the VMware vSphere platform for many years, and the best practices for Delphix on vSphere apply to vSAN implementations as well. For best practice information about setting up the Delphix Engine on vSphere, refer to Architecture Best Practices for Hypervisor Host and VM Guest. The design highlights for Delphix on vSAN are listed below, along with some differences between Delphix on vSphere with traditional storage and Delphix on vSphere with vSAN storage.

- vSAN

vSAN is a distributed object-store datastore formed from locally attached devices from the ESXi host. It uses disk groups to pool together flash devices as single management constructs. Therefore, it is recommended to use similarly configured and sized ESXi hosts for the vSAN Cluster to avoid the imbalance. This ensures a balance of virtual machine storage components across the cluster of disks and hosts.

This reference architecture shows one of the ways to use the CPU and storage capacity efficiently. The Delphix VM is in one of the 4-node vSphere Clusters, and the other three ESXi hosts contain the test/dev VMs (target VMs). While the Delphix VM is CPU and storage heavy, the target VM is just going to use CPU with the minimal native vSAN storage footprint (root disk) as Delphix provisions VDBs to target VMs through NFS. Hosting the target VMs and Delphix on the same vSphere Cluster efficiently utilizes the compute and storage available in the cluster.

Due to the nature of vSAN datastore (distributed object-store) for maximum performance, it is recommended to have multiple VMDKs, so vSAN objects are naturally spread across the cluster of disks and hosts. Therefore, provision multiple data disks to Delphix VM and balance them across the four vSCSI controllers. After provisioning data disks if the objects are not spread across the host's disk, consider increasing the vSAN stripe width from the default value of 1 to a higher value. vSphere web client and vSAN performance service can help identify the object balance.
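A rough count of data components shows why stripe width matters for spreading the Delphix data disks. The sketch counts data components only (witnesses ignored), uses 2 components per stripe for RAID 1 with PFTT=1 and, approximately, 4 for RAID 5 (3 data + 1 parity), and compares the total with the 32 capacity SSDs in this cluster. The 175GB disks are below vSAN's 255GB maximum component size, so no component is split for size. Actual placement is decided by vSAN, so the idle-device figure is a pigeonhole lower bound, not a prediction; the `data_components` helper is hypothetical.

```python
CAPACITY_DEVICES = 4 * 8   # 4 hosts x 8 capacity SSDs (Table 2)
DATA_VMDKS = 12            # Delphix data disks (Table 4), 175 GB each

def data_components(vmdks: int, pieces_per_stripe: int, stripe_width: int) -> int:
    """Data components across all VMDK objects, ignoring witnesses.

    pieces_per_stripe: 2 for RAID 1 with PFTT=1, 4 for RAID 5 (3 data + 1 parity).
    """
    return vmdks * pieces_per_stripe * stripe_width

for sw in (1, 2, 3):
    comps = data_components(DATA_VMDKS, pieces_per_stripe=2, stripe_width=sw)
    idle = max(CAPACITY_DEVICES - comps, 0)   # pigeonhole lower bound
    print(f"stripe width {sw}: {comps} data components; "
          f"at least {idle} of {CAPACITY_DEVICES} capacity SSDs hold none")
```

With RAID 1 and the default stripe width of 1, the 12 data disks produce only 24 data components, so at least 8 of the 32 capacity SSDs carry no Delphix data; a stripe width of 2 raises the count to 48.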

As suggested in Delphix Best Practices for Storages , provision disks with EZT option. Unlike VMFS datastore in vSAN datastore when creating EZT disk, it is not zeroed automatically. However, it pre-allocates spaces in vSAN datastore and can provide consistent performance. Because the Delphix data disks are created with the EZT option, the object space reservation (OSR) is set to 100% by default even if it is not explicitly set.

In a latency-sensitive environment, use RAID 1 (Mirror) for Delphix data disks. Otherwise, use RAID 5 (erasure coding) for providing the balance between space efficiency and performance. Use Delphix IO report card and vSAN Performance Service to analyze the IO metrics.

For information about setting up a vSAN cluster, refer to the VMware vSAN Design and Sizing Guide.

- Network

The Delphix VM presents VDBs to the target servers through NFS or iSCSI, so the native Delphix workload is very network-intensive. When Delphix runs in a vSphere environment with a traditional storage array (three-tier architecture), storage IO traffic travels across an isolated fabric to the array. In a vSphere environment with vSAN storage (HCI), this traffic runs on the IP network and most likely shares bandwidth with VM traffic. It is therefore critical to plan and size the additional bandwidth required for vSAN distributed storage traffic. With this in mind, add network ports to the hosts or consider higher-bandwidth ports, such as 25 Gbps or above. For more information about planning the network in a vSAN environment, refer to the VMware vSAN Network Design Guide.
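A rough way to size host uplinks is to add up the expected traffic classes and divide by the usable bandwidth per port. The Python sketch below does this. All demand figures are hypothetical placeholders to be replaced with measured values. The 70% utilization ceiling and the minimum of two ports for redundancy are assumptions made for this example, not VMware guidance. The sketch also ignores how NIC teaming actually distributes flows.

```python
import math


def ports_needed(demand_gbps: dict[str, float], port_speed_gbps: float,
                 max_utilization: float = 0.7, min_ports: int = 2) -> int:
    """Estimate host uplinks needed so combined traffic stays under a target
    utilization per port; min_ports keeps a second uplink for redundancy."""
    usable_per_port = port_speed_gbps * max_utilization
    return max(min_ports, math.ceil(sum(demand_gbps.values()) / usable_per_port))


# Hypothetical peak per-host demand in Gbps; measure your own environment.
demand = {"delphix_nfs": 6.0, "vsan_backend": 8.0, "vm_traffic": 2.0}
print(ports_needed(demand, port_speed_gbps=10))  # 3
print(ports_needed(demand, port_speed_gbps=25))  # 2
```

With these placeholder numbers, moving from 10 Gbps to 25 Gbps ports brings the estimate down to the redundancy minimum. This reflects the guidance above to add ports or use larger-bandwidth ports.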

- Space efficiency

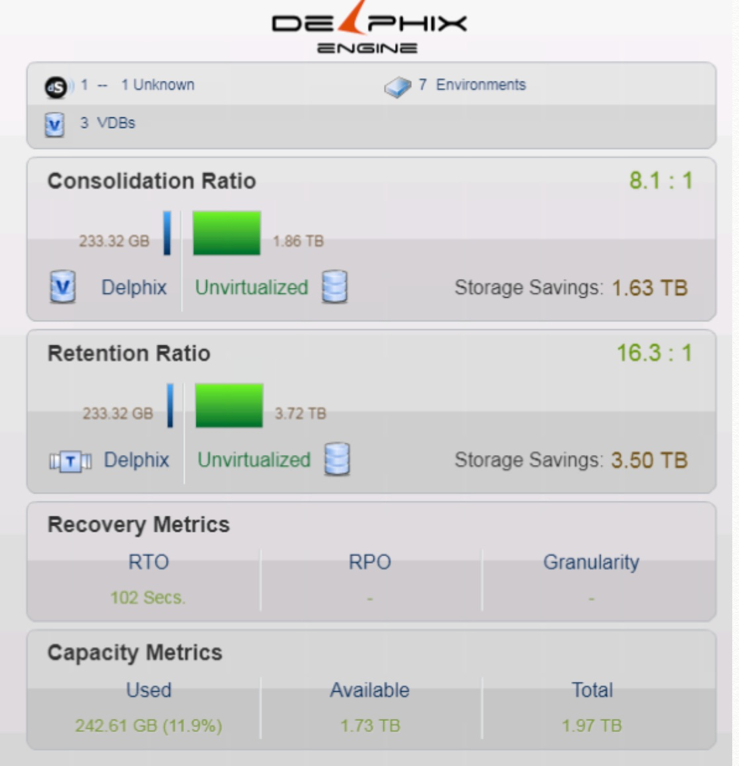

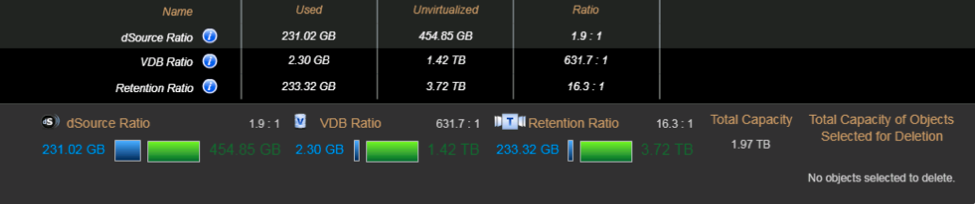

The data virtualization tier of the Delphix application stack improves data storage and retrieval through data compression and consolidation. The consolidation ratio is a useful metric for understanding the capacity savings provided by Delphix.

The consolidation ratio compares the amount of space that dSources and VDBs occupy with the amount that traditional physical databases would occupy.

Figure 14 and Figure 15, captured from the Delphix Engine, show the consolidation ratio. In this case, one dSource is created from a 450 GB database, three VDBs are provisioned, and the consolidation ratio of unvirtualized to Delphix space is 8.1:1. Other capacity metrics show the savings in greater detail. For more information, refer to Delphix Overview of Capacity and Performance Information.
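To make the 8.1:1 figure concrete, the Python sketch below treats the consolidation ratio as unvirtualized space divided by the space Delphix actually uses. It then computes the Delphix footprint that such a ratio would imply. The sketch assumes that the unvirtualized footprint is four full copies of the 450 GB database (one dSource plus three VDBs). The Delphix Engine's own accounting may count space differently, so the result is an illustration rather than a figure reported by the engine. Both helper names are hypothetical.

```python
def consolidation_ratio(unvirtualized_gb: float, delphix_gb: float) -> float:
    """Space physical copies would need divided by space Delphix actually uses."""
    return unvirtualized_gb / delphix_gb


def implied_delphix_gb(unvirtualized_gb: float, ratio: float) -> float:
    """Delphix footprint implied by a given unvirtualized size and ratio."""
    return unvirtualized_gb / ratio


# Assumption: the unvirtualized footprint is four full copies of the
# 450 GB database (one dSource plus three VDBs).
unvirtualized = 4 * 450
print(round(implied_delphix_gb(unvirtualized, 8.1), 1))  # 222.2
```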

Figure 14. Delphix Engine

Figure 15. dSource Ratio

Because the data is already consolidated and compressed at the Delphix level, vSAN deduplication and compression may not provide additional space savings.

- Day 2 operations and management

Use the Delphix UI, the Delphix network tests, and the vSphere Web Client to monitor the performance and health of the environment. Common host metrics include CPU, RAM, disk, and network utilization. Disk and network utilization are critical for Delphix workloads.

Keep operational flexibility in mind when sizing a large Delphix Engine from the storage and compute perspectives. As the environment grows, implement multiple Delphix Engines by line of business instead of a single large engine. This may require sacrificing some centralization of Delphix provisioning and management.

vMotion can be used to migrate Delphix from other storage to vSAN. For more details and other migration options, refer to the Delphix Storage Migration documentation.

Summary

VMware vSphere, vSAN, and vCenter Server collectively power the best HCI solution for deploying, running, and managing mission-critical database applications that require predictable performance and high availability. The integration of vSAN with vSphere simplifies the operational management with a single software stack.

While VMware HCI offers an efficient, high-performance, and cost-effective platform, Delphix empowers data operators to ensure that sensitive data is secured and the right data is made available to the right people, when and where they need it.

The combined solution provides the agility and speed to satisfy new DataOps requirements. It greatly improves on traditional data cloning, security, and refresh methods, as well as on the decades-old way of deploying complex and costly IT infrastructure.

Learn More

See more vSAN and Delphix references:

- VMware vSAN Design and Sizing Guide

- VMware vSAN Network Design Guide

- Solution Overview Delphix on VMware vSAN

- Delphix Architecture Best Practices for ESXi Hypervisor Host and VM Guest

- Delphix Performance Tuning, Configuration and Analytics

See Storage Hub for additional vSAN technical documentation.

About the Author and Contributors

Palanivenkatesan Murugan, Solutions Architect in the Product Enablement team of the Storage and Availability Business Unit, wrote the original version of this paper.

Raji Sabbagh, Senior Solutions Engineer at Delphix, worked with Palani as a secondary author on this paper.

Catherine Xu, Senior Technical Writer in the Product Enablement team of the Storage and Availability Business Unit, edited this paper to ensure that the contents conform to the VMware writing style.