vVols Getting Started Guide

Introduction

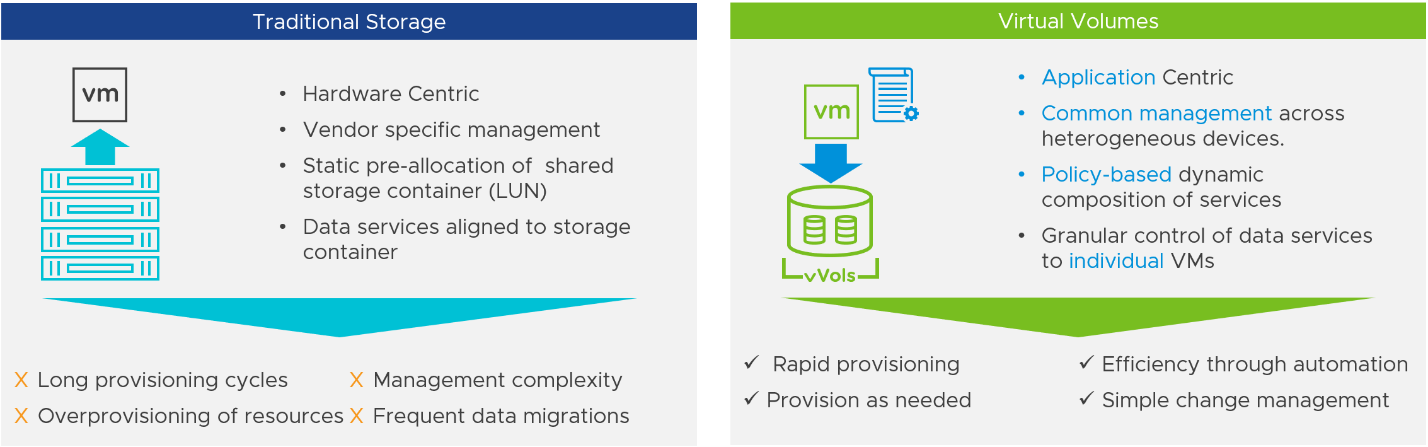

vSphere Virtual Volumes or vVols implements the core tenets of the VMware Software-Defined Storage vision to enable a fundamentally more efficient operational model for external storage in virtualized environments, centering it on the application instead of the physical infrastructure.

Overview

vVols enables application-specific requirements to drive storage provisioning decisions while leveraging the rich set of capabilities provided by existing storage arrays. Some of the primary benefits delivered by vVols are focused on operational efficiencies and flexible consumption models.

- vVols simplifies storage operations by automating manual tasks and reducing operational dependencies between the vSphere Admin and the Storage Admin. By using policy-driven automation as the operations model, provisioning and change management are simplified and expedited.

- vVols simplifies the delivery of storage service levels to applications by providing administrators with finer control of storage resources and data services at the VM level, which can be dynamically adjusted in real time.

- vVols improves resource utilization by enabling more flexible consumption of storage resources, when needed and with greater granularity. The precise consumption of storage resources eliminates overprovisioning. The Virtual Datastore defines capacity boundaries and access logic, and exposes a set of data services accessible to the virtual machines provisioned in the pool.

- Virtual Datastores are purely logical constructs that can be configured on the fly, when needed, without disruption, and don’t require formatting with a file system.

Historically, vSphere storage management has been based on constructs defined by the storage array: LUNs and filesystems. A storage administrator would configure array resources to present large, homogeneous storage pools that would then be consumed by the vSphere administrator.

Since a single, homogeneous storage pool would potentially contain many different applications and virtual machines, this approach resulted in needless complexity and inefficiency. vSphere administrators could not easily specify requirements on a per-VM basis.

Changing service levels for a given application usually meant relocating the application to a different storage pool. Storage administrators had to forecast well in advance what storage services might be needed in the future, usually resulting in the overprovisioning of resources.

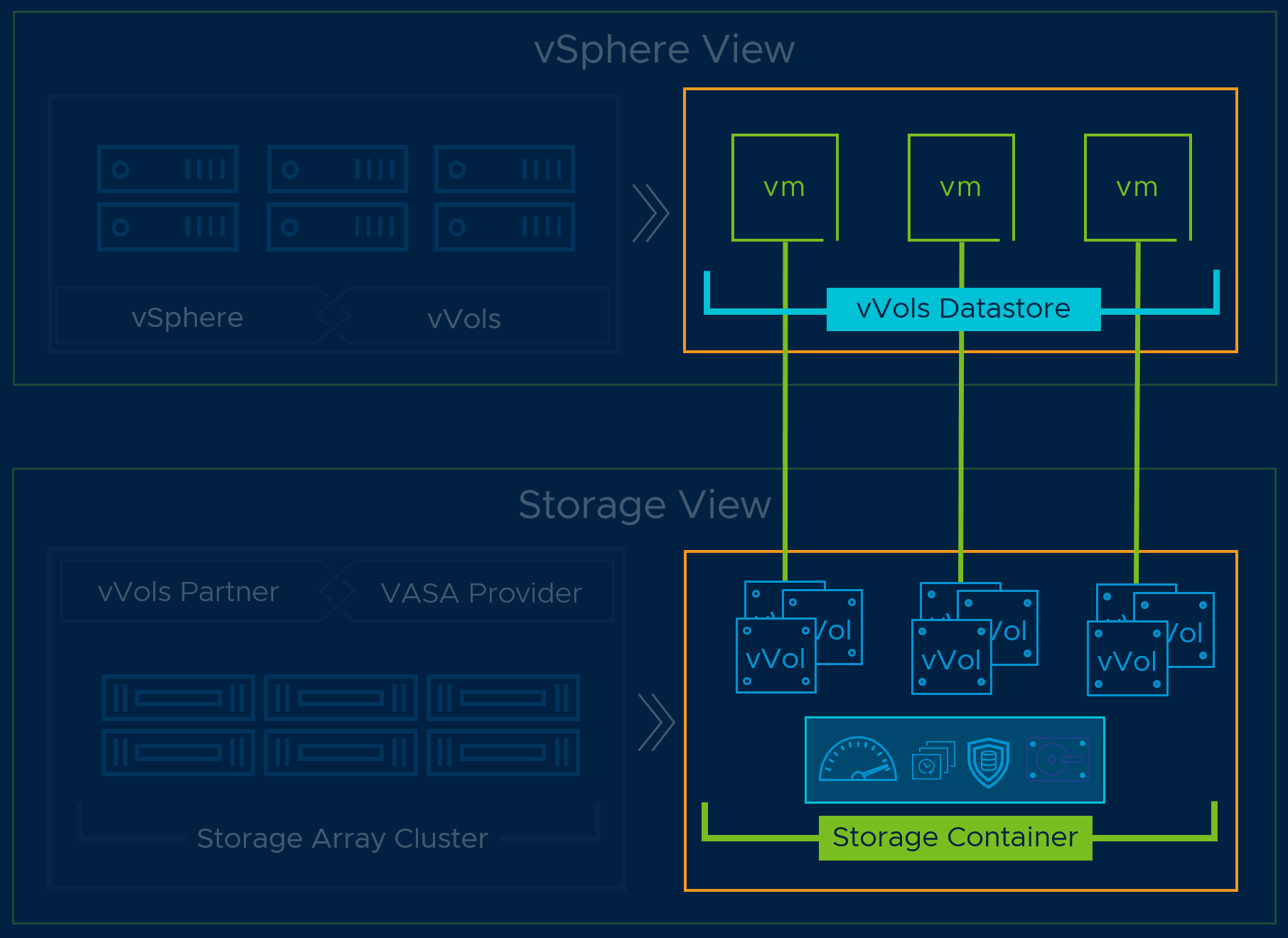

With vVols, this approach is fundamentally changed. vSphere administrators use policies to communicate application requirements to the storage array. The storage array responds with an individual storage container that precisely maps to application requirements and boundaries.

Typically, the Virtual Datastore is the lowest granular level at which data management occurs from a storage perspective. However, a single Virtual Datastore contains multiple virtual machines, which might have different requirements. With the traditional approach, differentiation on a per virtual machine level is difficult. The vVols functionalities allow for the differentiation of virtual machine services on a per-application level by offering a new approach to storage management.

Rather than arranging storage around features of a storage system, vVols arranges storage around the needs of individual virtual machines, making storage virtual machine-centric. vVols map virtual disks and their respective components directly to objects, called vVols, on a storage system. This mapping allows vSphere to offload intensive storage operations such as snapshots, cloning, and replication to the storage system. It is important to familiarize yourself with the concepts that are relevant to vVols and their functionality. This document provides a summarized description and definitions of the key components of Virtual Volumes.

vSphere Virtual Volumes Components

The following is a summarized description and definition of the key components of vVols:

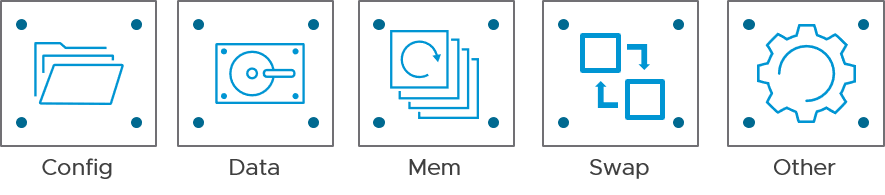

vVols are a new type of virtual machine object, created and stored natively on the storage array. vVols are stored in storage containers and mapped to virtual machine files/objects such as VM swap, VMDKs, and their derivatives.

There are five different types of vVols, and each of them maps to a different and specific virtual machine file.

- Config – VM Home, Configuration files, logs

- Data – Equivalent to a VMDK

- Memory – Snapshots

- SWAP – Virtual machine memory swap

- Other – vSphere solution specific object
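The list above can be captured as a small lookup. The following is a minimal sketch, not a VMware API: the `VvolType` enum and the `vvols_for_vm` helper are hypothetical names, and the helper assumes a VM without snapshots whose swap vVol exists only while the VM is powered on.

```python
from enum import Enum
from typing import List


class VvolType(Enum):
    """The five vVol types and the virtual machine content each one holds."""
    CONFIG = "VM home, configuration files, logs"
    DATA = "Equivalent to a VMDK"
    MEMORY = "Snapshots"
    SWAP = "Virtual machine memory swap"
    OTHER = "vSphere solution specific object"


def vvols_for_vm(disk_count: int, powered_on: bool) -> List[VvolType]:
    """Hypothetical helper: list the vVols backing a VM that has no snapshots."""
    # One Config vVol per VM, plus one Data vVol per virtual disk.
    vvols = [VvolType.CONFIG] + [VvolType.DATA] * disk_count
    if powered_on:
        # Assumption: the swap vVol exists only while the VM is powered on.
        vvols.append(VvolType.SWAP)
    return vvols


if __name__ == "__main__":
    for vvol in vvols_for_vm(disk_count=2, powered_on=True):
        print(vvol.name, "-", vvol.value)
```

Under these assumptions, a powered-on VM with two virtual disks is backed by four vVols: one Config, two Data, and one Swap.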

Vendor Provider or VASA Provider (VP)

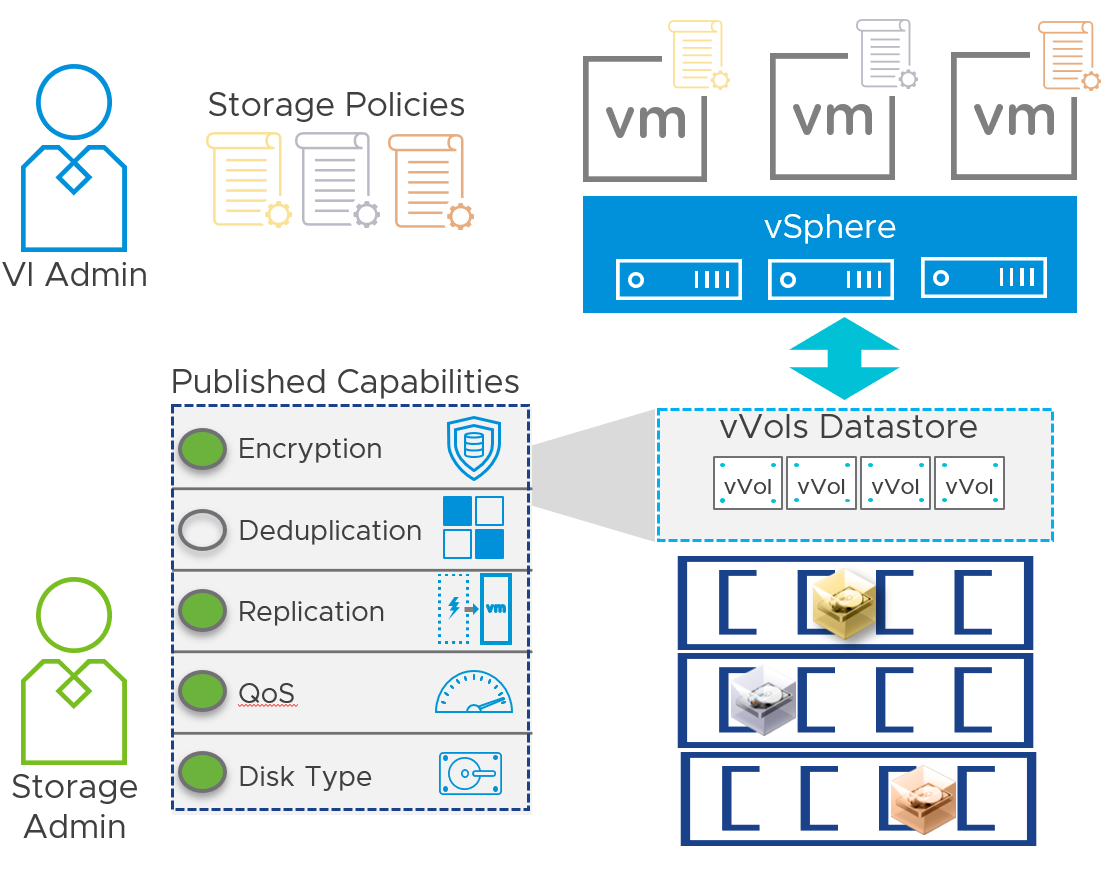

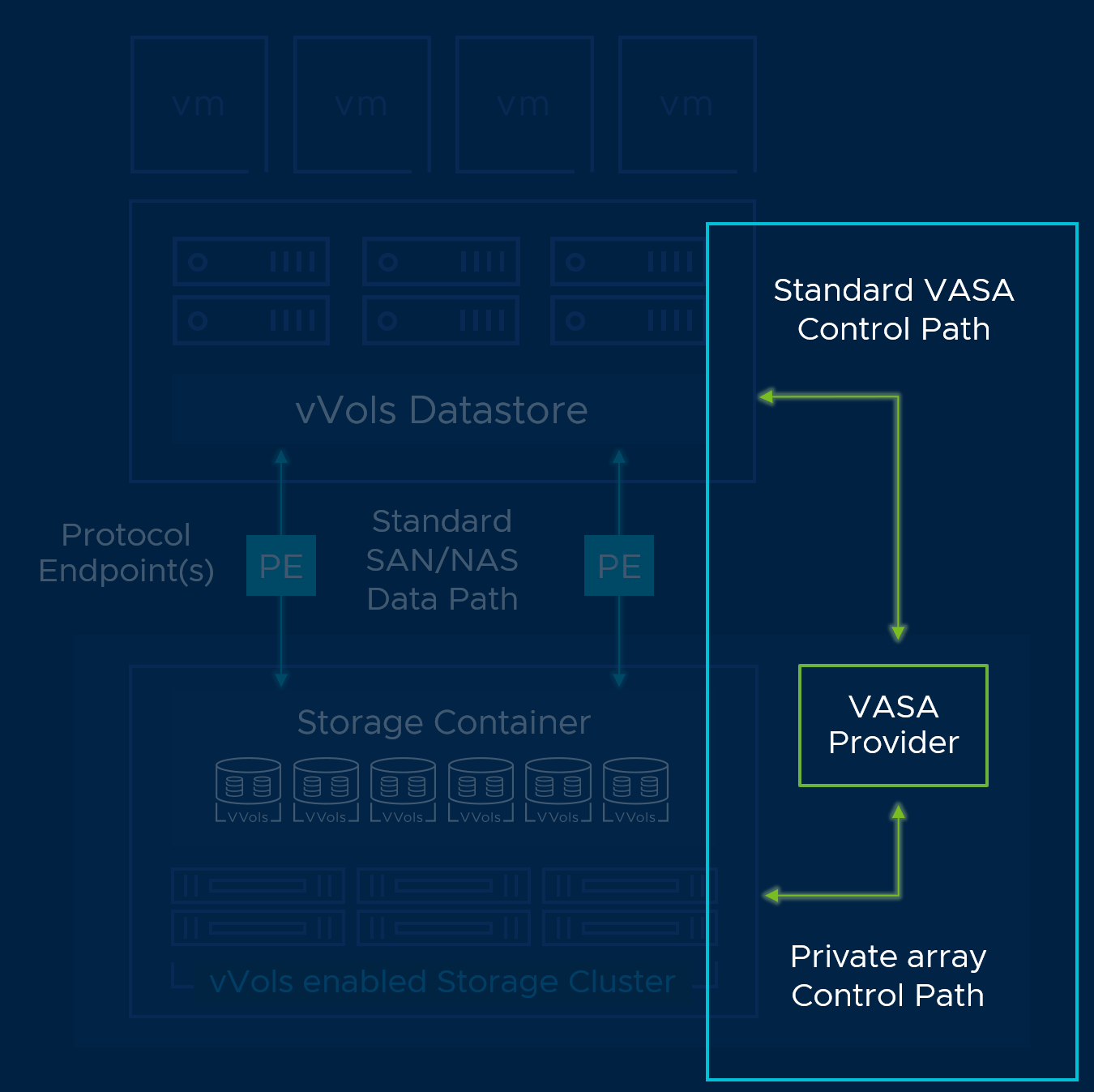

The vendor provider, also known as the VASA provider, is a storage-side software component that acts as a storage awareness service for vSphere and mediates out-of-band communication between vCenter Server and vSphere hosts on one side and a storage system on the other. Storage vendors exclusively develop VASA providers.

vSphere hosts and vCenter Server connect to the VASA Provider and obtain information about available storage topology, capabilities, and status.

Subsequently, vCenter Server provides this information to vSphere clients, exposing the capabilities around which the administrator might craft storage policies in SPBM.

VASA Providers are typically set up and configured by the vSphere administrator in one of two ways:

- Automatically via the array vendor's plug-in

- Manually through the vCenter Server

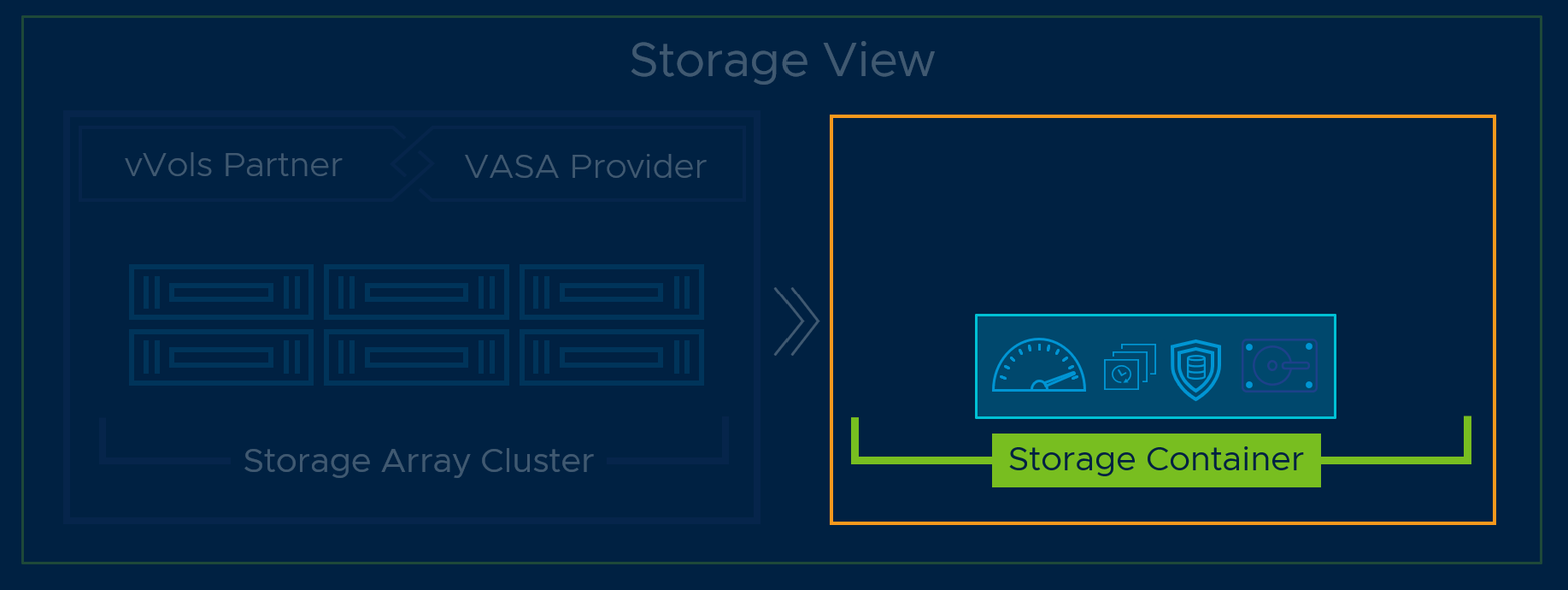

Storage Container (SC)

Unlike traditional LUN or NFS based vSphere storage, the vVols functionality does not require pre-configured volumes on the storage side.

Instead, vVols uses a storage container, which is a pool of raw storage capacity and/or an aggregation of storage capabilities that a storage system can provide to vVols.

Depending on the storage array implementation, a single array may support multiple storage containers. Storage containers are typically set up and configured by the storage administrator.

Containers are used to define:

- Storage capacity allocations and restrictions

- Storage policy settings based on data service capabilities on a per virtual machine basis

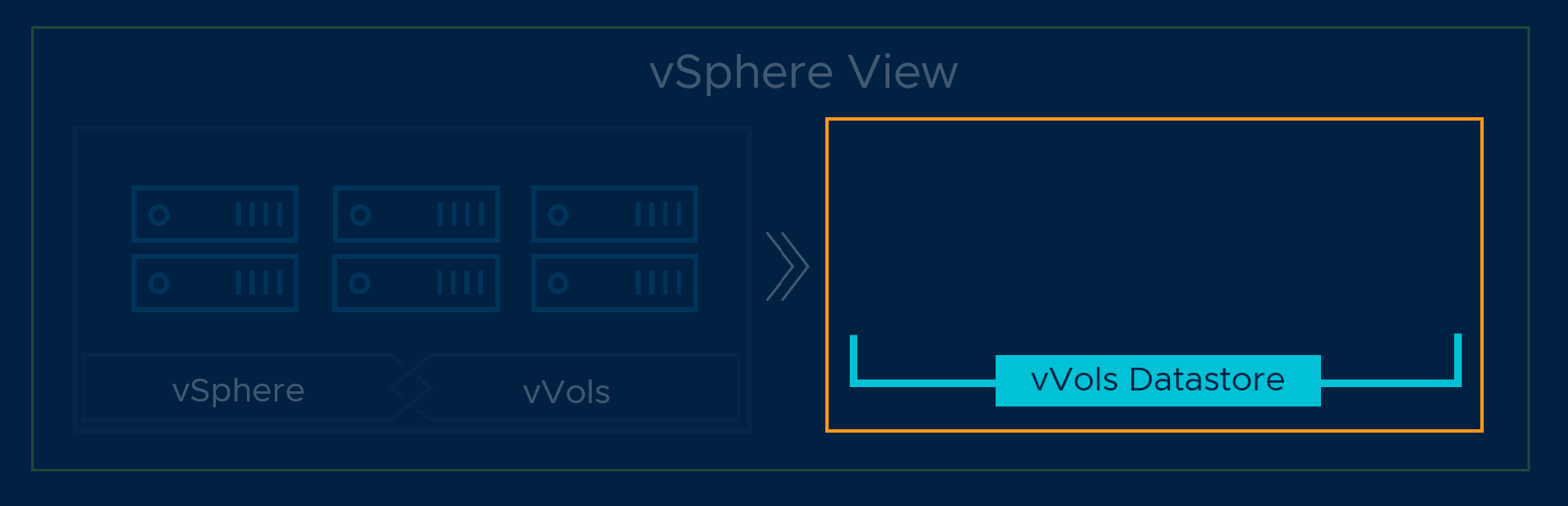

Virtual Datastore

A Virtual Datastore represents a storage container in a vCenter Server instance and the vSphere Client. A vSphere Virtual Datastore represents a one-to-one mapping to the storage system’s storage container.

The storage container (or Virtual Datastore) represents a logical pool where individual vVols (VMDKs) are created.

Virtual Datastores are typically set up and configured by the vSphere administrator.

There is a one-to-one mapping of vVols datastore to a storage container on the array. If another vVols datastore is needed, a new storage container must be created.
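The one-to-one rule can be illustrated with a toy registry. This is a sketch only: `DatastoreRegistry` and its methods are hypothetical names used for illustration and do not correspond to any vSphere API.

```python
class DatastoreRegistry:
    """Toy model: each vVols datastore maps to exactly one storage container."""

    def __init__(self):
        self._container_by_datastore = {}

    def create_datastore(self, datastore_name, container_id):
        """Register a datastore, refusing anything that breaks the 1:1 mapping."""
        if datastore_name in self._container_by_datastore:
            raise ValueError(f"datastore name already in use: {datastore_name}")
        if container_id in self._container_by_datastore.values():
            # A second datastore needs its own, newly created storage container.
            raise ValueError(f"container already backs a datastore: {container_id}")
        self._container_by_datastore[datastore_name] = container_id

    def container_for(self, datastore_name):
        return self._container_by_datastore[datastore_name]


if __name__ == "__main__":
    registry = DatastoreRegistry()
    registry.create_datastore("vvol-ds-01", "container-A")
    try:
        registry.create_datastore("vvol-ds-02", "container-A")
    except ValueError as err:
        print("rejected:", err)
```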

Note: For in-depth information about vVols and its components, please refer to the official vVols product page: http://www.vmware.com/products/virtual-volumes.

Protocol Endpoints (PE)

Although storage systems manage all aspects of vVols, vSphere hosts have no direct access to vVols on the storage side. Instead, vSphere hosts use a logical I/O proxy, called the Protocol Endpoint (PE), to communicate with vVols and the virtual disk files that vVols encapsulate.

vSphere hosts use PEs to establish a data path, on-demand, from virtual machines to their respective vVols.

Protocol Endpoints are compatible with all SAN/NAS industry-standard protocols:

- iSCSI

- NFS v3

- Fibre Channel (FC)

- Fibre Channel over Ethernet (FCoE)

Protocol Endpoints are set up and configured by Storage and vSphere administrators.

vSphere Virtual Volumes Requirements

Software

The use of vVols requires the following software components:

- vCenter Server 6.0 Appliance (VCSA) or vCenter Server 6.0 for Windows

- ESXi 6.0

- vSphere Web Client

Hardware

The use of vVols requires the following hardware components:

- Any server that is certified for vSphere 6.0 listed on the VMware compatibility guide.

- A third-party storage array system that supports vVols and is able to integrate with vSphere through the VMware APIs for Storage Awareness (VASA).

- Depending on the specific vendor implementation, storage array system may or may not require a firmware upgrade in order to support vVols. Check with your storage vendor for detailed information and configuration procedures.

License

The use of vVols requires one of the following vSphere license editions:

- Standard

- Enterprise Plus

Configuring vSphere Virtual Volumes

Get detailed information on the procedures to configure the vVols.

Overview

The configuration of vVols requires that both the storage system and the vSphere environment are prepared correctly. From a storage perspective, the vVols required components such as the Protocol Endpoints, storage containers, and storage profiles must be configured.

The procedure for configuring the vVols components on the storage system varies based on the storage vendor implementation and can be potentially different based on the array brand and model.

For detailed information on the procedures to configure the vVols required components, refer to the storage system’s documentation or contact your storage vendor.

Configuration Guidelines

The following requirements must be satisfied before enabling vVols:

Storage

- The storage system must be vVols compatible and able to integrate with vSphere 6.0 through the VMware APIs for Storage Awareness (VASA) 2.0.

- A storage Vendor Provider (VP) must be available. If the VP is not available as part of the storage system, a VP appliance must be deployed.

- The Protocol Endpoints, Storage Containers, and storage profiles must be configured on the storage system.

vSphere

- Follow the appropriate vendor and VMware guidelines to set up the storage solution that will be used, for example Fibre Channel, FCoE, iSCSI, or NFS. This may require the installation and configuration of physical or software storage adapters on vSphere hosts.

- Synchronize the time of all storage components with vCenter Server and all vSphere hosts. It is recommended to utilize Network Time Protocol (NTP) for synchronization.

Configuration Procedures

To configure vVols in vSphere, the procedures listed below must be performed. The procedures focus on vSphere-specific tasks and the workflow of the components:

- vSphere Storage Time synchronization

- Storage Provider (VASA) registration

- Creating a Virtual Datastore for vVols

vSphere Storage Time Synchronization

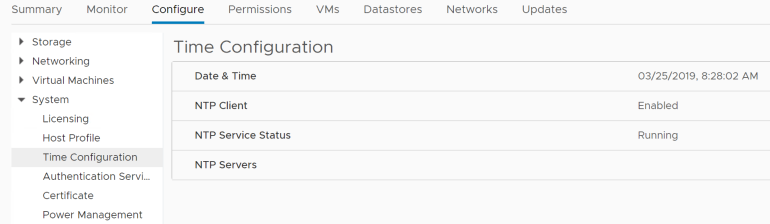

Before enabling vVols, it is recommended that all vSphere hosts and vCenter Server instances have their time synchronized. VMware recommends the use of a Network Time Server for all the systems in order to maintain accurate timekeeping.

vCenter Server Time Synchronization

Perform the required procedure to configure the vCenter Server instances to use a time synchronization service suitable for the version of vCenter Server being used (Windows or Linux).

ESXi host Time Synchronization

Perform the following steps on the vSphere hosts that will be used for vVols. This procedure may be automated with PowerCLI and other command line utilities.

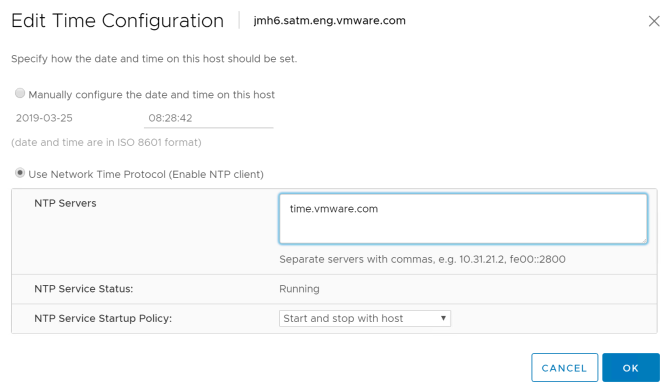

vSphere Host Time Synchronization Configuration Procedure

1. Select the host in the vSphere inventory.

2. Click the Configure tab.

3. In the System section, select Time Configuration.

4. Click Edit and set up the NTP server.

a. Select Use Network Time Protocol (Enable NTP client).

b. Set the NTP Service Startup Policy to Start and Stop with host.

c. Enter the IP or URL addresses of the NTP server(s) to synchronize.

5. Click OK. The host will now synchronize with the NTP server(s).
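Once NTP is configured, it can be useful to confirm that the clocks actually agree. The sketch below assumes that the current time of each system has already been collected as epoch seconds by some other means; the `hosts_out_of_sync` function, the host names, and the one-second tolerance are all hypothetical, since this guide does not specify an acceptable skew.

```python
def hosts_out_of_sync(reference_time, host_times, tolerance_seconds=1.0):
    """Return the sorted names of hosts whose clock deviates from the reference.

    reference_time    -- epoch seconds on the reference system (e.g. vCenter Server)
    host_times        -- mapping of host name -> epoch seconds reported by that host
    tolerance_seconds -- hypothetical threshold; choose one that suits the environment
    """
    return sorted(
        name
        for name, reported in host_times.items()
        if abs(reported - reference_time) > tolerance_seconds
    )


if __name__ == "__main__":
    # Hypothetical readings collected at the same moment.
    readings = {"esx01": 1000.2, "esx02": 1004.5, "esx03": 999.7}
    print(hosts_out_of_sync(1000.0, readings))
```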

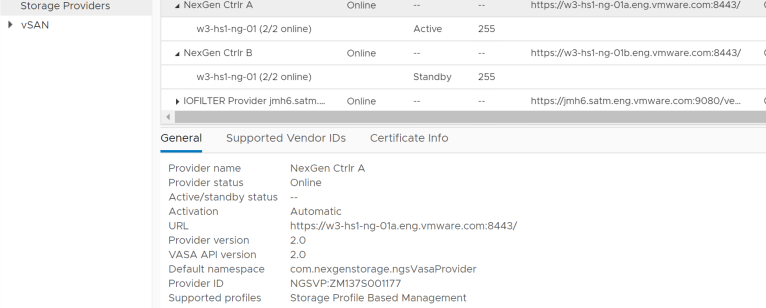

Storage Provider Registration

In order to create a Virtual Datastore for vVols, a storage container must exist in vSphere, and a communication link must be established between the vCenter Server instance and the storage system. The VASA provider exports the storage system’s capabilities and presents them to the vCenter Server instances as well as the vSphere hosts via the VASA APIs.

Storage Provider Registration Procedure

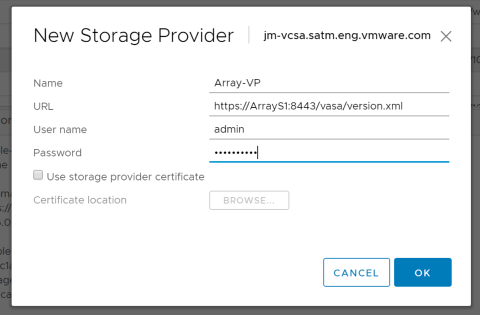

In the event the storage provider is not implemented as a hardware component of the storage system, verify that a VASA provider appliance has been deployed and obtain its credentials from the storage administrator.

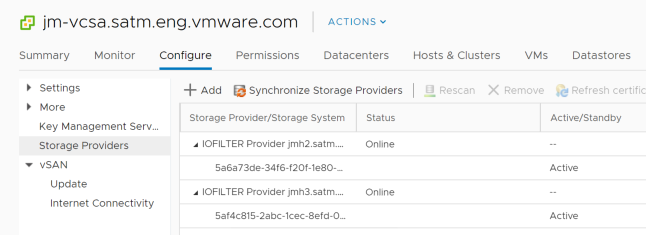

1. Browse to vCenter Server in the vSphere Client.

2. Click the Configure tab and click Storage Providers.

3. Click +Add to add a new storage provider.

4. Type the connection information for the storage provider, including the name, URL, and credentials.

5. (Optional) To direct vCenter Server to the storage provider certificate, select the Use storage provider certificate option and specify the certificate's location. If you do not select this option, a thumbprint of the certificate is displayed. You can check the thumbprint and approve it.

6. Click OK to complete the registration.

At this point, the vCenter Server instance has been registered with the VASA provider and established a secure SSL connection.

Note: Storage Providers can also be automatically configured through the storage system’s vSphere Client plug-in or from the storage system’s UI when registering a vCenter.

Virtual Datastore Creation Procedure

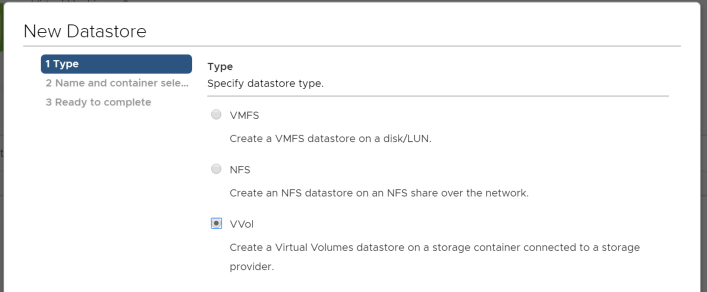

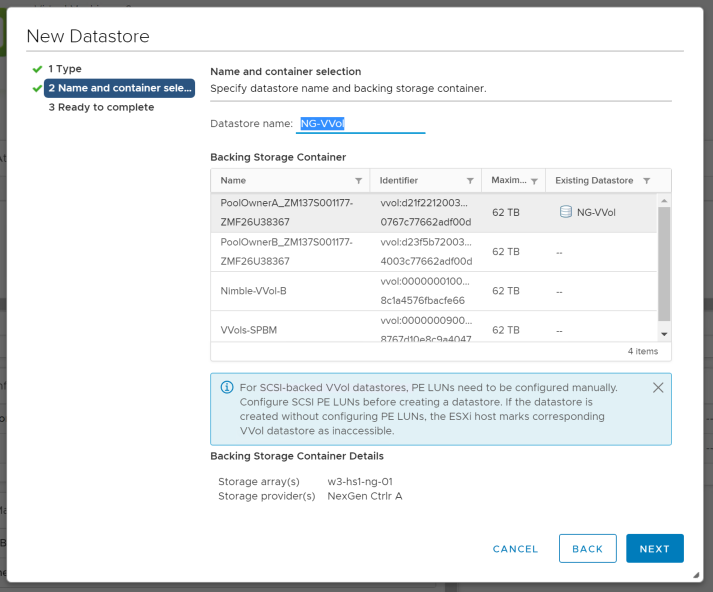

To create a Virtual Datastore for vVols, use the New Datastore wizard from the vSphere Client.

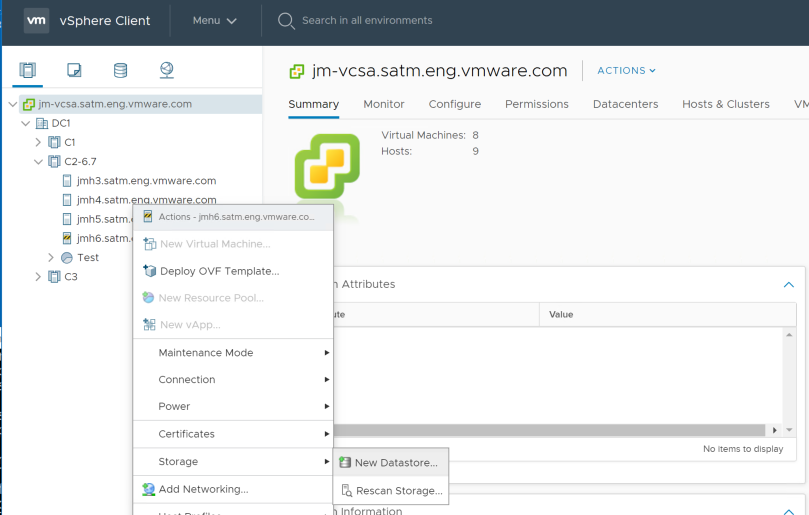

1. Select a host in the vSphere inventory.

2. Right-click and browse to the Storage menu.

3. Click the New Datastore option.

4. Type a datastore name.

a. Ensure the name is not a duplicate of another datastore name in the vCenter Server inventory.

b. If the same datastore will be mounted on multiple hosts, the name of the datastore must be the same across all the hosts.

5. Select vVol as the datastore type.

6. From the list of storage containers, select a backing storage container.

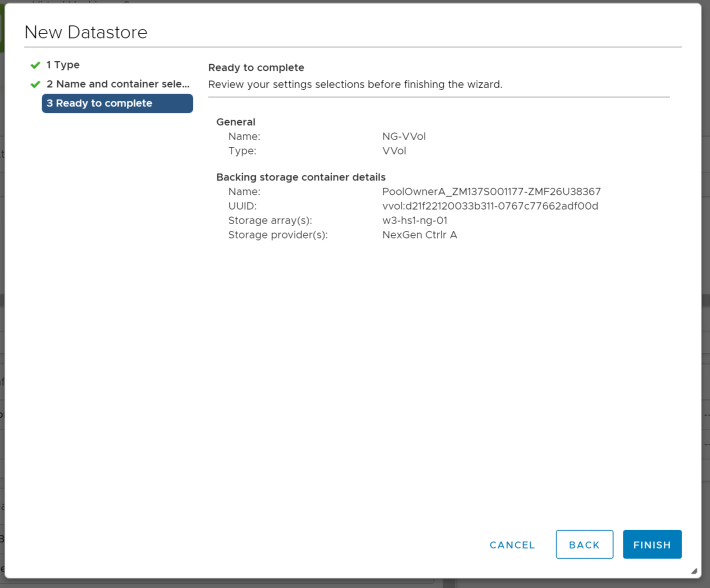

7. Click Next to review the configuration options and click Finish.

After the Virtual Datastore is created, other datastore operations such as renaming, browsing, mounting, and unmounting the datastore may be performed. To mount the Virtual Datastores to other hosts use the Mount Datastore to Additional Hosts wizard in the vSphere Client.

Mounting the Virtual Datastore onto Multiple Hosts

Once a Virtual Datastore has been created and mounted on a single host, the Virtual Datastore creation procedure cannot be used to mount it on other hosts, because the Virtual Datastore is already mapped to a storage container. To mount the Virtual Datastore on additional hosts, use the Mounting the Virtual Datastore onto Multiple Hosts procedure.

This procedure is performed from the Storage view of the vSphere Client.

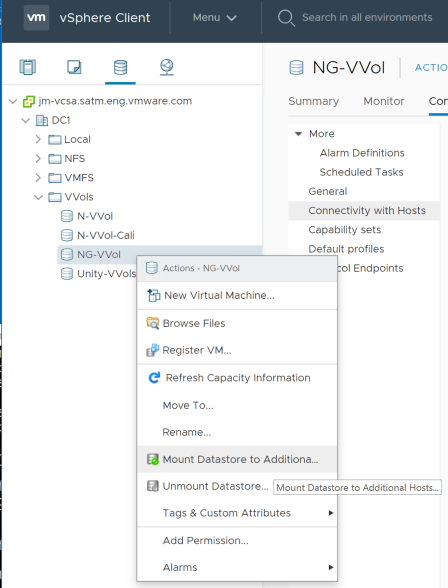

1. From the vSphere Client, navigate to the Storage view tab.

2. Right-click the desired Virtual Datastore and select Mount Datastore to Additional Hosts.

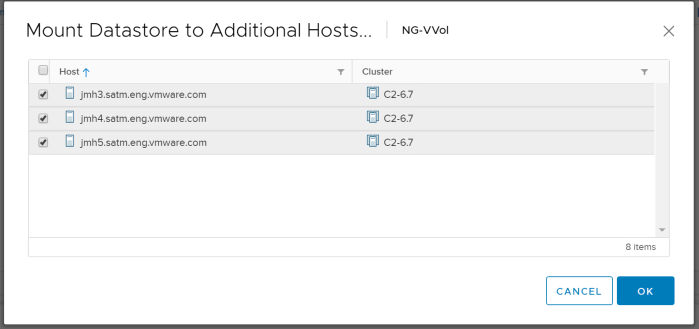

3. Select the available hosts to mount the Virtual Datastore and click OK.

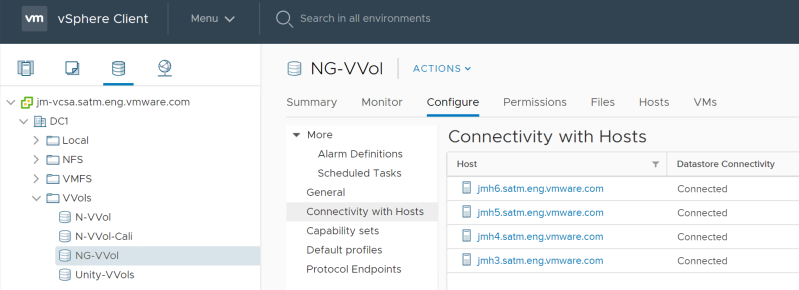

The Virtual Datastore should be automatically mounted to all the selected hosts. To validate that the action was successful, check the Connectivity with Hosts view, located in the Virtual Datastore settings under the Configure tab of the Storage view in the vSphere Client.

Mapping Storage Capabilities to VM Storage Policies

The Storage capabilities are configured and managed on the storage systems by the storage admin. Storage capabilities are presented to vSphere via the VASA APIs in the form of data services and unique storage system features.

A vSphere admin maps the storage capabilities presented to vSphere and organizes them into a set of rules designed to capture the quality of service requirements of virtual machines and their applications. These rules are saved to vSphere in the form of a VM Storage Policy.

vVols utilize VM Storage Policy to manage related operations such as placement decision, admission control, quality of service compliance monitoring, and dynamic storage resource allocation.

Mapping Storage Capabilities to VM Storage Policies Procedure

Once all the vVols related components have been configured in the infrastructure, a vSphere admin needs to define storage requirements and storage service for a virtual machine and/or virtual disks.

In order to satisfy the virtual machine's service requirements, a VM Storage Policy needs to be created in vSphere.

Before defining and creating a VM Storage Policy, verify that the vendor provider is available and online.

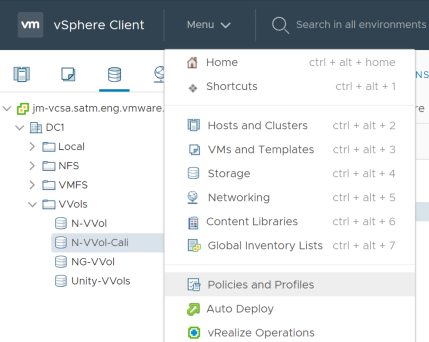

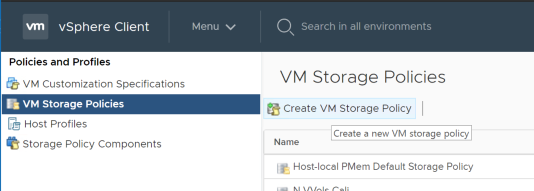

1. From the vSphere Client Home screen, click Menu, then Policies and Profiles.

2. Click the Create VM Storage Policy icon.

3. Select the vCenter Server instance if more than one vCenter is available.

4. Enter a name and description for the storage policy.

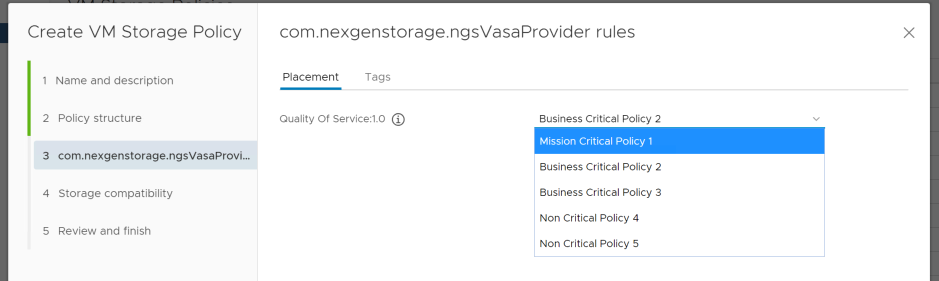

5. On the Rule-Set 1 window, select the vendor provider for the storage system that is registered with vSphere from the Rule based and data services drop-down list.

a. The page expands to show the capabilities reported by the storage system.

b. Add the necessary capabilities and specify a value if needed.

c. Make sure the value provided is within the range of values advertised by the capability profile of the storage system.

d. (Optional) Add tag-based capabilities.

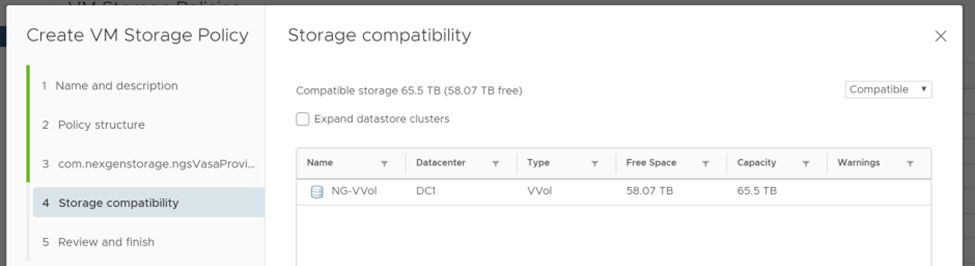

6. Review the list of datastores that match the VM Storage Policy and click Next.

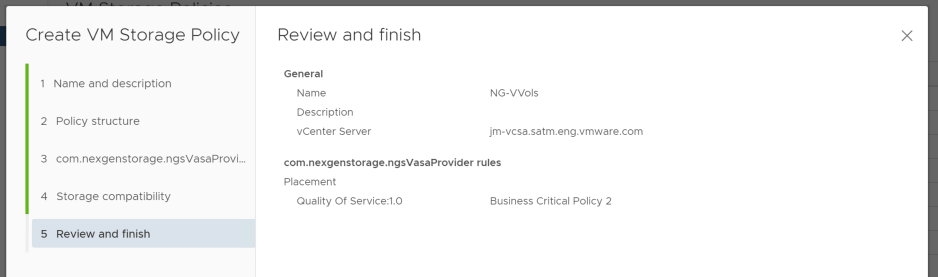

7. Verify the VM Storage Policy configuration settings and click Finish.

To be eligible, a Virtual Datastore needs to satisfy all rule sets defined within the VM Storage Policy.

Make sure that the storage system's storage containers meet the requirements set in the VM Storage Policy and appear in the list of compatible datastores. The VM Storage Policy should now appear in the list of policies and can be applied to virtual machines and their virtual disks.
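The compatibility check performed in the wizard can be approximated in a few lines. This is a simplified sketch of a single rule set, not the SPBM implementation: the capability names (`raid_level`, `iops_limit`), the datastore names, and the representation of advertised values as either a `(min, max)` tuple or a set of allowed values are all assumptions made for illustration.

```python
def satisfies(requested, advertised):
    """True if a requested value is within what the capability profile advertises."""
    if isinstance(advertised, tuple):
        # Numeric capability advertised as an inclusive (min, max) range.
        low, high = advertised
        return low <= requested <= high
    # Discrete capability advertised as a set of allowed values.
    return requested in advertised


def compatible_datastores(rule_set, datastores):
    """Return the names of datastores that satisfy every rule in the rule set."""
    return [
        name
        for name, capabilities in datastores.items()
        if all(
            capability in capabilities and satisfies(value, capabilities[capability])
            for capability, value in rule_set.items()
        )
    ]


if __name__ == "__main__":
    # Hypothetical capability profiles reported for two Virtual Datastores.
    datastores = {
        "gold": {"raid_level": {"RAID-10"}, "iops_limit": (100, 5000)},
        "bronze": {"raid_level": {"RAID-5"}, "iops_limit": (100, 1000)},
    }
    policy_rules = {"raid_level": "RAID-10", "iops_limit": 2000}
    print(compatible_datastores(policy_rules, datastores))
```

A datastore that lacks a requested capability, or advertises a range that excludes the requested value, is left out of the result, which mirrors how it would be absent from the compatible list in the wizard.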

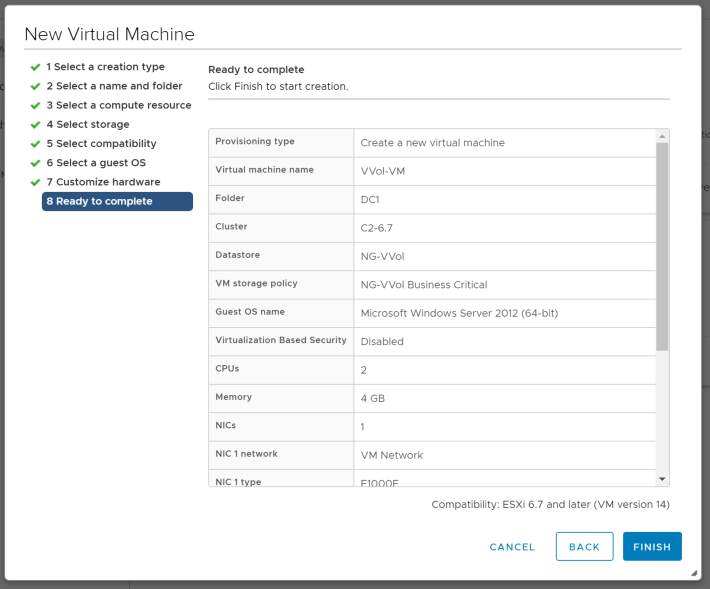

Virtual Machine Creation

Once the vSphere infrastructure and the storage systems are ready and their respective policies and capabilities have been configured and defined, a vSphere admin can start deploying virtual machines onto Virtual Datastores for vVols.

To create a new virtual machine and deploy it onto a Virtual Datastore for vVols follow the procedure below.

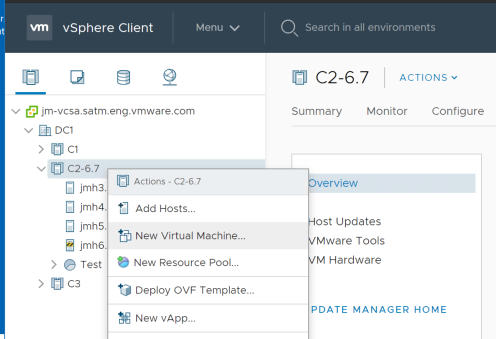

1. Select any virtual machine parent object in the vSphere inventory.

- Datacenter

- Cluster

- Host

- Resource pool

- Folder

2. Right-click any of the objects listed above and choose New Virtual Machine.

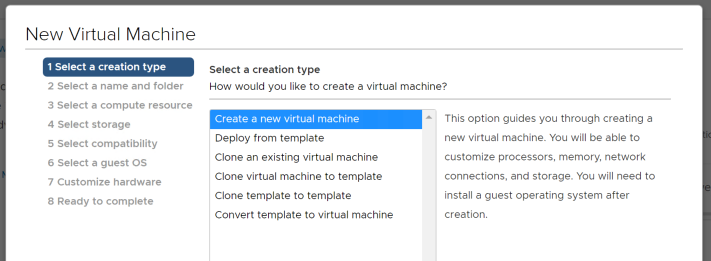

3. Once the New Virtual Machine wizard opens, select the Create a new Virtual Machine option and click Next.

4. Enter a name for the virtual machine, select a location for it, and click Next.

a. Datacenter

b. VM folder

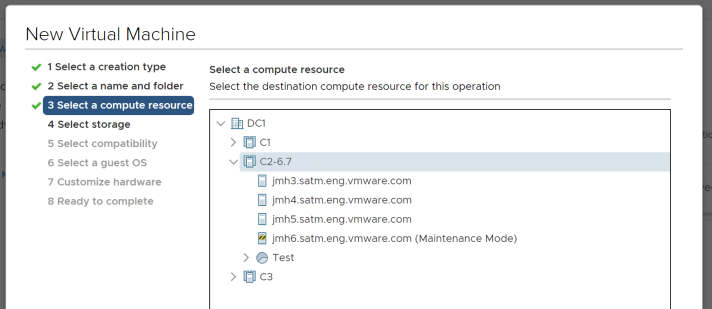

5. Select the compute resource for the New Virtual Machine deployment operation and click Next.

a. Cluster

b. Host

c. vApp

d. Resource Pool

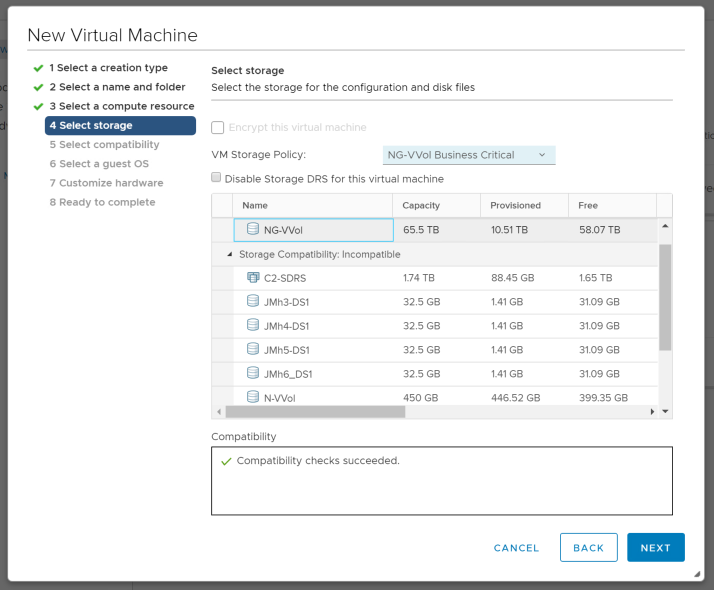

6. Choose a VM Storage Policy to configure the VM storage requirements for a Virtual Datastore. Then select the compatible datastore that meets the storage requirements of the chosen policy and click Next.

Note: The Select VM Storage Policy option is available in all virtual machine provisioning operations, such as deploy from template, clone an existing virtual machine, clone a virtual machine to template, and clone template to template.

7. Select the host compatibility level for the virtual machine and click Next.

8. Choose the Guest OS Family and Guest OS version that will be installed on the virtual machine and click Next.

9. Customize the virtual machine hardware as needed, then click Next.

10. Review the virtual machine configuration, including the VM Storage Policy selected, for accuracy, then click Finish.

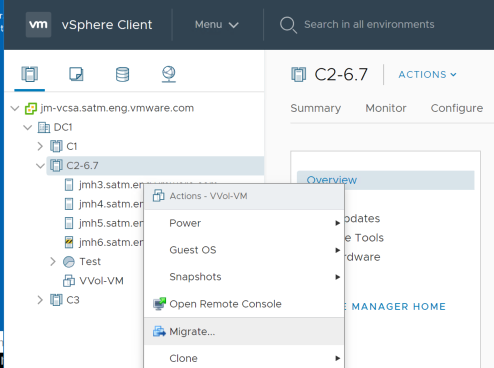

Virtual Machine Migration with Storage vMotion

With Storage vMotion, virtual machines and their disk files may be migrated from a VMFS or NFS type Virtual Datastore to a vVols type of Virtual Datastore. The migration operation can be performed between Virtual Datastores located on the same storage system or on different storage systems.

The migration operations may be performed while the virtual machines are powered on or powered off.

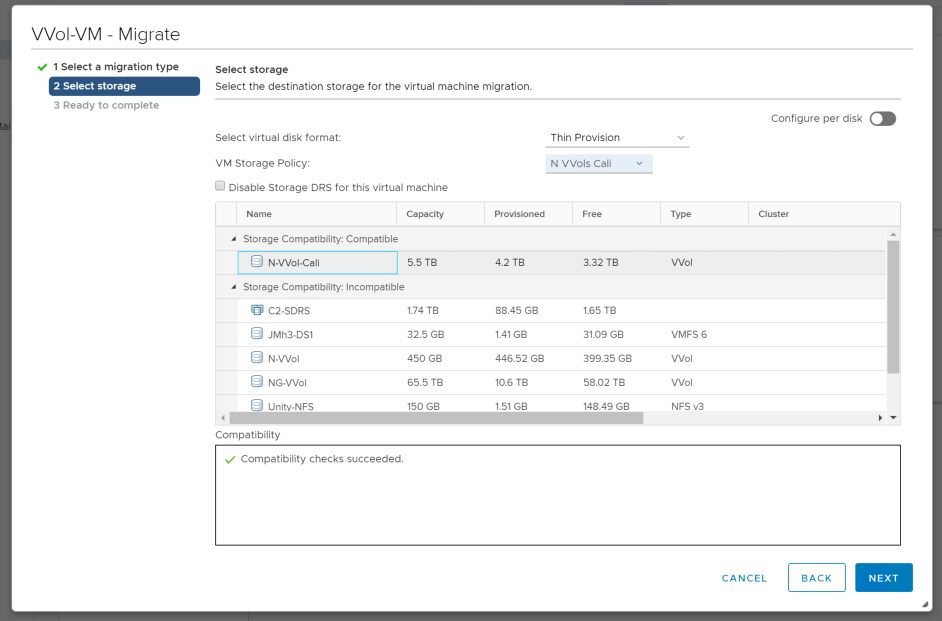

As part of the migration operation, you select the virtual disk format, VM Storage Policy and choose to place the virtual machine and all its disks in a single location or select separate locations for the virtual machine configuration file and each virtual disk. The virtual machine does not change execution host during a migration with Storage vMotion.

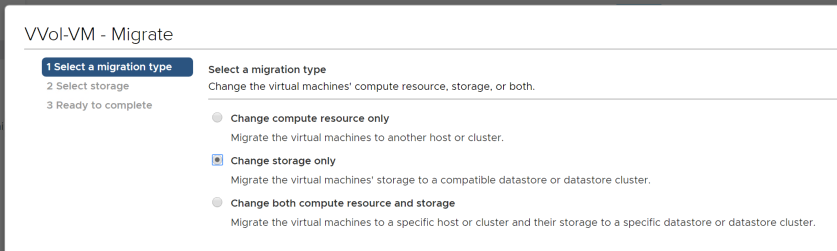

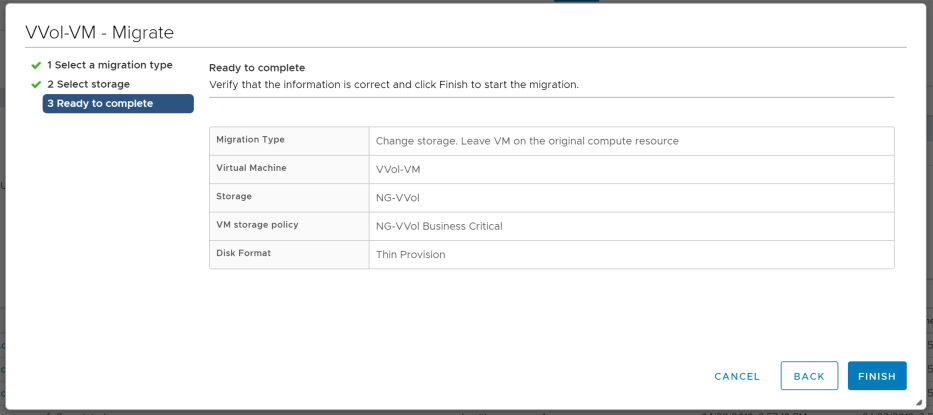

To migrate a virtual machine onto a vVols datastore, follow the procedure below.

1. Select any virtual machine in the vSphere inventory and select Migrate.

2. Select one of the migration type options, in this case Change storage only, and click Next.

a. Change compute resource only

b. Change storage only

c. Change both compute and storage

3. Select the destination storage, virtual disk format, VM Storage Policy, and suitable Virtual Datastore and click Next.

4. Review the migration details including the Virtual Datastore target, VM Storage Policy, and disk format settings selected for accuracy then click Finish.

vSphere Virtual Volumes Interoperability

vSphere Enterprise Features

The following table highlights the vSphere Enterprise features available in vSphere 6.x that are supported with vVols.

| vSphere 6.x Supported Features |
| --- |
| Storage Policy Based Management (SPBM) |
| Thin Provisioning |
| Linked Clones |
| Native Snapshots |
| NFSv3.x |
| View Storage Accelerator (CBRC) |
| vMotion |
| Storage vMotion |
| vSphere SDK (VC API) |
| vSphere Web Client |
| Host Profiles / Stateless |
| vSphere HA |
| XvMotion |
| vSphere Auto Deploy |

VMware Products and Solutions

The following table highlights the available products and solutions that provide support for and interoperability with vVols.

| VMware Supported Products and Solutions |
| --- |
| VMware vSphere 6.x |
| VMware vRealize Automation 6.2 |
| VMware Horizon 6.1 |
| VMware vSphere Replication 6.0 |
| VMware vSAN 6.0 |

vSphere Virtual Volumes CLI Commands

The esxcli command line framework has been updated to include a vVols module. All of the new vVols esxcli commands are grouped under the storage vvol namespace.

vVols ESXCLI Namespaces

The storage vvol namespace contains the following sub-namespaces:

- storagecontainer – Operations to create, manage, and remove vVol Storage Containers.

- daemon – Operations pertaining to the vVol daemon.

- protocolendpoint – Operations on vVol Protocol Endpoints.

- vasacontext – Operations on the vVol VASA context.

- vasaprovider – Manage vVol VASA Provider operations.

esxcli storage vvol command line syntax samples:

esxcli storage vvol -h

Usage: esxcli storage vvol {cmd} [cmd options]

Available Namespaces:

storagecontainer Operations to create, manage, remove and restore VVol StorageContainers.

daemon Operations pertaining to VVol daemon.

protocolendpoint Operations on VVol Protocol EndPoints.

vasacontext Operations on the VVol VASA context.

vasaprovider Manage VVol VASA Provider Operations.

vVols ESXCLI Namespace Commands

The vVol storagecontainer namespace commands provide the ability to list the storage containers that are mapped to an ESXi host, as well as the ability to scan for abandoned vVols within storage containers.

esxcli storage vvol storagecontainer command line syntax samples:

esxcli storage vvol storagecontainer -h

Usage: esxcli storage vvol storagecontainer {cmd} [cmd options]

Available Namespaces:

abandonedvvol Operations on Abandoned Virtual Volumes.

Available Commands:

list List the VVol StorageContainers currently known to the ESX host.

abandonedvvol – a state in which vVols are placed whenever a failure-to-delete event happens, for example a failure to delete a swap vVol through a particular path during a VM power-off operation. This behavior typically happens when there are communication issues with the Vendor/VASA Provider.

In this scenario, instead of failing the VM power-off operation, the system records that vVol on a per-VM-namespace basis in an abandoned VVols tracking file so that it can be deleted later when the Vendor/VASA Provider is back online. A periodic thread tries to delete such abandoned VVols.

scan option – this option allows the initiation of a background scan of a respective vVol datastore, searching for abandoned vVols. The operation goes over all the Config-VVols, looking for the abandoned VVols tracking files and tries to delete them.

Successful initiation of the scan does not indicate whether the operation itself succeeded or failed. The scan is a long-running operation: the config-VVols are not all scanned at once, to avoid loading the Vendor/VASA Provider for a low-priority operation such as garbage collecting old vVols.
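The abandoned-vVol behavior described above follows a common "record the failure, retry later" pattern. The following sketch illustrates that pattern only; it is not the ESXi implementation. `AbandonedVvolTracker`, the in-memory set standing in for the tracking file, and the use of `ConnectionError` to represent an unreachable Vendor/VASA Provider are all assumptions.

```python
class AbandonedVvolTracker:
    """Remembers vVols whose deletion failed so that deletion can be retried later."""

    def __init__(self, delete_fn):
        # delete_fn(vvol_id) deletes a vVol and raises ConnectionError
        # when the provider cannot be reached (an assumption of this sketch).
        self._delete = delete_fn
        self.abandoned = set()  # stands in for the abandoned vVols tracking file

    def delete(self, vvol_id):
        """Try to delete now; on failure, record the vVol instead of failing."""
        try:
            self._delete(vvol_id)
            return True
        except ConnectionError:
            self.abandoned.add(vvol_id)
            return False

    def retry_abandoned(self):
        """One pass of the periodic cleanup: retry every recorded vVol."""
        for vvol_id in sorted(self.abandoned):
            try:
                self._delete(vvol_id)
            except ConnectionError:
                continue  # provider still unreachable; keep it for the next pass
            self.abandoned.discard(vvol_id)


if __name__ == "__main__":
    state = {"online": False}

    def fake_delete(vvol_id):
        if not state["online"]:
            raise ConnectionError("provider unreachable")

    tracker = AbandonedVvolTracker(fake_delete)
    tracker.delete("swap-vvol-1")      # fails, so the vVol is recorded
    state["online"] = True
    tracker.retry_abandoned()          # succeeds on the later pass
    print(tracker.abandoned)
```

The caller's operation (here, the power-off that triggers the swap vVol deletion) is never failed because of the provider outage; the cleanup simply happens later.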

esxcli storage vvol storagecontainer abandonedvvol syntax samples:

esxcli storage vvol storagecontainer abandonedvvol scan -p eqlDatastore

true

list – displays the virtual datastores for vVols that are known to a particular ESX host, along with their details.

esxcli storage vvol storagecontainer list syntax sample:

esxcli storage vvol storagecontainer list

eqlDatastore

StorageContainer Name: eqlDatastore

UUID: vvol:6090a0681067ae78-2e48c5020000a0f6

Array: com.dell.storageprofile.equallogic.std:eqlgrp1

Size(MB): 1048590

Free(MB): 972540

Accessible: true

Default Policy:

engDatastore

StorageContainer Name: engDatastore

UUID: vvol:6090a06810770d5b-cd4ad5d7a1042074

Array: com.dell.storageprofile.equallogic.std:eqlgrp1

Size(MB): 4194315

Free(MB): 4173930

Accessible: true

Default Policy:

dbDatastore

StorageContainer Name: dbDatastore

UUID: vvol:6090a0681077bdce-8b4b1515a2049013

Array: com.dell.storageprofile.equallogic.std:eqlgrp1

Size(MB): 1024005

Free(MB): 1009635

Accessible: true

Default Policy:
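Output of this kind is straightforward to post-process when capacity needs to be tracked in a script. The sketch below parses text shaped like the sample above; it assumes one field per line, that a line without a colon starts a new container record, and that the text was captured from the command by some other means. `parse_containers` and `used_percent` are hypothetical helper names.

```python
SAMPLE = """\
eqlDatastore
   StorageContainer Name: eqlDatastore
   UUID: vvol:6090a0681067ae78-2e48c5020000a0f6
   Array: com.dell.storageprofile.equallogic.std:eqlgrp1
   Size(MB): 1048590
   Free(MB): 972540
   Accessible: true
   Default Policy:
engDatastore
   StorageContainer Name: engDatastore
   UUID: vvol:6090a06810770d5b-cd4ad5d7a1042074
   Array: com.dell.storageprofile.equallogic.std:eqlgrp1
   Size(MB): 4194315
   Free(MB): 4173930
   Accessible: true
   Default Policy:
"""


def parse_containers(text):
    """Turn 'storagecontainer list' style text into a list of dicts."""
    containers = []
    for raw in text.splitlines():
        line = raw.strip()
        if not line:
            continue
        if ":" not in line:
            # Assumption: a bare name (no colon) starts a new container record.
            containers.append({"name": line})
            continue
        # Split on the first colon only, because values may contain colons.
        key, _, value = line.partition(":")
        containers[-1][key.strip()] = value.strip()
    return containers


def used_percent(container):
    """Percentage of the container's capacity that is in use."""
    size = int(container["Size(MB)"])
    free = int(container["Free(MB)"])
    return 100.0 * (size - free) / size


if __name__ == "__main__":
    for c in parse_containers(SAMPLE):
        print(f'{c["name"]}: {used_percent(c):.1f}% used')
```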

daemon – used to perform unbind operations on vVols across all Vendor/VASA Providers known to a particular ESX host.

unbindall – this option is utilized to unbind all vVols from all the Vendor/VASA Providers known to a particular ESXi host. This operation is performed for testing purposes or to force the cleanup of all vVol data paths.

esxcli storage vvol daemon unbindall syntax sample:

Usage: esxcli storage vvol daemon unbindall [cmd options]

Description:

unbindall Unbind all Virtual Volumes from all VPs known to the ESX host.

Cmd options:

esxcli storage vvol daemon unbindall

The protocolendpoint namespace commands provide the ability to list all the information about the Protocol Endpoints configured on an ESXi host.

list – displays the protocol endpoints known to a particular ESX host, along with their configuration details.

esxcli storage vvol protocolendpoint

Usage: esxcli storage vvol protocolendpoint {cmd} [cmd options]

Available Commands:

list List the VVol Protocol EndPoints currently known to the ESX host.

esxcli storage vvol protocolendpoint list

naa.6090a0681077ad11863e05020000a061

Host Id: naa.6090a0681077ad11863e05020000a061

Array Id: com.dell.storageprofile.equallogic.std:eqlgrp1

Type: SCSI

Accessible: true

Configured: true

Lun Id: naa.6090a0681077ad11863e05020000a061

Remote Host:

Remote Share:

Storage Containers: 6090a068-1067-ae78-2e48-c5020000a0f6
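In the sample outputs in this guide, the Storage Containers value reported for the Protocol Endpoint contains the same hexadecimal digits as the UUID of the eqlDatastore container, only with a different prefix and hyphenation. The sketch below uses that observation to match endpoints to containers. Treat it as an assumption drawn from these samples rather than a documented format; `normalize_container_id` and `containers_for_endpoint` are hypothetical helpers.

```python
def normalize_container_id(identifier):
    """Reduce a container identifier to bare lowercase hex digits."""
    if identifier.startswith("vvol:"):
        identifier = identifier[len("vvol:"):]
    return identifier.replace("-", "").lower()


def containers_for_endpoint(pe_container_ids, container_uuids):
    """Return the names of containers whose UUID matches one listed on the PE.

    pe_container_ids -- values from the PE's 'Storage Containers' field
    container_uuids  -- mapping of container name -> UUID from the container list
    """
    wanted = {normalize_container_id(i) for i in pe_container_ids}
    return [
        name
        for name, uuid in container_uuids.items()
        if normalize_container_id(uuid) in wanted
    ]


if __name__ == "__main__":
    containers = {
        "eqlDatastore": "vvol:6090a0681067ae78-2e48c5020000a0f6",
        "engDatastore": "vvol:6090a06810770d5b-cd4ad5d7a1042074",
    }
    pe_ids = ["6090a068-1067-ae78-2e48-c5020000a0f6"]
    print(containers_for_endpoint(pe_ids, containers))
```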

The vasacontext namespace command provides the ability to get the vCenter Server UUID for which the Vendor/VASA Provider is currently registered.

get – this option is utilized to get the VVol VASA context (the vCenter Server UUID).

esxcli storage vvol vasacontext -h

Usage: esxcli storage vvol vasacontext {cmd} [cmd options]

Available Commands:

get Get the VVol VASA Context (VC UUID).

esxcli storage vvol vasacontext get

5742ead8-0695-48bd-9ae4-7416164423ef

The vasaprovider namespace command provides the ability to list the Vendor/VASA Providers that are currently registered on a particular ESXi host.

list – this option is utilized to list all the Vendor/VASA Providers registered to a particular ESX host, along with their details.

esxcli storage vvol vasaprovider -h

Usage: esxcli storage vvol vasaprovider {cmd} [cmd options]

Available Commands:

list List the VASA Providers registered on the host.

esxcli storage vvol vasaprovider list

Dell Equallogic VASA Provider

VP Name: Dell Equallogic VASA Provider

URL: https://10.144.106.39:8443/vasa-version.xml

Status: online

Arrays:

Array Id: com.dell.storageprofile.equallogic.std:eqlgrp1

Is Active: true

Priority: 0

Reference

vSphere Virtual Volumes Resources

vVols Product Page https://www.vmware.com/products/vsphere/features/virtual-volumes.html

Product Documentation

VMware Docs

Working with vVols

KB Articles

VMware Blogs

vVols Lightboard Videos: https://www.youtube.com/watch?v=e2gzw_6TcNQ&list=PL8_k3uUCO39vjhv2pAaUTiB28ejr1mN9s

vVols now supports WSFC https://blogs.vmware.com/virtualblocks/2018/05/31/scsi-3-vvols/

What’s new with vSphere 6.7 Core Storage https://blogs.vmware.com/virtualblocks/2018/04/17/whats-new-vsphere-6-7-core-storage/

vVols http://blogs.vmware.com/vsphere/2015/02/vsphere-virtual-volumes.html

vVols Interoperability: VAAI APIs vs VVols https://blogs.vmware.com/vsphere/2015/02/vsphere-virtual-volumes-interoperability-vaai-apis-vs-vvols.html

VMworld Presentations

VMworld 2019

- vVols: Technical Deep Dive [HBI2853BU]

- The Industry on vVols: Tech Panel Q & A [HCI3008PE]

- Why should I use Virtual Volumes? A technical review. [HBI3416BUS]

VMworld 2018

- A Deep Dive into Storage Policy-Based Management for Managing SDS (VIN3396BU)

- vVols Deep Dive with Pure Storage (VIN3776BUS)

- vVols Deep Dive (HCI2810BU)

VMworld 2017

- vVols Technical Deep Dive (STO2446BE)

- Replicating VMware vVols: A technical deep dive into vVol array based replication in vSphere 6.5 (STO3305BES)

- vVols made easy with Pure Storage (PBO3367BES)

- vVols in the Wild: Partner Panel (STO1782PU)

VMworld 2016

- 5 Tips for Getting The Most From vVols and vSphere Storage Policy-Based Management (STO9977-QT)

- Achieving Agility, Flexibility, Scalability, and Performance with VMware Software Defined Storage (SDS) and vVols for Business critical databases (STO7549)

- Containers & vVols - a technical deep dive on new technologies that revolutionize storage for vSphere (STO9617-SPO)

- Deploy Scalable Private Cloud with vVols (STO8840)

- High-Speed Heroics: Array-based Replication and Recovery for vVols (STO8694)

- vVols Technical Deep Dive (STO7645)

- vVols: Why? (STO8422)

- VMware vSphere Virtual Volumes in a NetApp Environment (STO8144)

- vVol and Storage Policy-Based Management? Is It Everything They Said It Would Be? (STO9054)

Virtual Volumes FAQ

Getting Started

- Q1. What are the software requirements for vVols?

-

A. You will need vSphere 6.0 in addition to equivalent array vendor vVols software. More details on supported array models are available at the hardware compatibility guide page: http://www.vmware.com/resources/compatibility/

- Q2. Where can I get the storage array vendor vVols software?

A. Storage vendors provide vVols integration in different ways. Contact your storage vendor or visit the vendor's website for details on its vVols integration.

Key Elements of vVols

- Q3. What is a Protocol Endpoint (PE)?

A. Protocol endpoints are the access points from the hosts to the storage systems. They are created by storage administrators. All paths and policies are administered through protocol endpoints. Protocol endpoints are compatible with both iSCSI and NFS. They are intended to replace the concept of LUNs and mount points.

- Q4. What is a storage container and how does it relate to a Virtual Datastore?

A. A storage container is a logical abstraction onto which vVols are mapped and stored. Storage containers are set up at the array level and associated with array capabilities. vSphere maps storage containers to Virtual Datastores and provides the applicable datastore-level functionality. The Virtual Datastore is a key element: it allows the vSphere Admin to provision VMs without depending on the Storage Admin. The Virtual Datastore also provides a logical abstraction for managing a very large number of vVols. This abstraction can be used to better manage multi-tenancy, separate departments within a single organization, and so on.

- Q5. How does a PE function?

A. A PE represents the I/O access point for a vVol. When a vVol is created, it is not immediately accessible for I/O. To access a vVol, vSphere issues a “Bind” operation to the VASA Provider (VP), which creates an I/O access point for the vVol on a PE chosen by the VP. A single PE can be the I/O access point for multiple vVols. An “Unbind” operation removes this I/O access point for a given vVol.
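The bind and unbind behavior described above can be sketched as a small model. This is an illustration only and not the VASA API: the class name, method names, and the "least-loaded PE" placement rule are assumptions made for the example, since the answer only states that the VP chooses the PE.

```python
from dataclasses import dataclass, field


@dataclass
class VasaProviderSketch:
    """Toy stand-in for a VP; hypothetical, not the real VASA interface."""

    protocol_endpoints: list[str]
    # Maps a vVol ID to the PE that currently serves as its I/O access point.
    bindings: dict[str, str] = field(default_factory=dict)

    def bind(self, vvol_id: str) -> str:
        """Create an I/O access point for the vVol on a PE chosen by the VP."""
        if vvol_id in self.bindings:
            return self.bindings[vvol_id]
        # Assumed placement rule: pick the PE with the fewest bound vVols.
        load = {pe: 0 for pe in self.protocol_endpoints}
        for pe in self.bindings.values():
            load[pe] += 1
        chosen = min(self.protocol_endpoints, key=lambda pe: load[pe])
        self.bindings[vvol_id] = chosen
        return chosen

    def unbind(self, vvol_id: str) -> None:
        """Remove the I/O access point for the vVol."""
        self.bindings.pop(vvol_id, None)

    def is_accessible(self, vvol_id: str) -> bool:
        """A vVol is reachable for I/O only while it is bound to a PE."""
        return vvol_id in self.bindings
```

In this sketch a newly created vVol is not accessible until `bind` is called, several vVols can share one PE, and `unbind` makes the vVol inaccessible again.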

- Q6. What is the association of the PE to storage array?

A. PEs are associated with arrays. One PE is associated with one array only. An array can be associated with multiple PEs. On block arrays, a PE is a special LUN. ESX can identify these special LUNs and makes sure that the list of visible PEs is reported to the VP. On NFS arrays, PEs are regular mount points.

- Q7. What is the association of a PE to storage containers?

A. PEs are managed per array. vSphere assumes that all PEs reported for an array are associated with all containers on that array. For example, if an array has 2 containers and 3 PEs, ESX assumes that vVols on both containers can be bound on all 3 PEs. Internally, however, VPs and storage arrays can apply their own logic to map vVols and storage containers to PEs.
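The "2 containers and 3 PEs" example amounts to a cross product. A minimal sketch, assuming hypothetical container and PE names, of the pairs that vSphere would treat as bindable:

```python
from itertools import product


def assumed_bindable_pairs(containers, protocol_endpoints):
    """Return every (container, PE) pair on one array.

    Mirrors the assumption that any vVol in any container on the array
    can be bound on any PE reported for that array.
    """
    return set(product(containers, protocol_endpoints))


pairs = assumed_bindable_pairs(["sc-1", "sc-2"], ["pe-1", "pe-2", "pe-3"])
print(len(pairs))  # 2 containers x 3 PEs = 6 pairs
```

The array or VP may narrow this set internally. The sketch covers only the host-side assumption.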

- Q8. What is the association of a PE to hosts?

A. PEs are like LUNs or mount points. They can be mounted or discovered by multiple hosts.

Architecture and Technical Details

- Q9. Can I have one PE connect to multiple hosts across clusters?

A. Yes. VPs can return the same vVols binding information to each host if the PE is visible to multiple hosts.

- Q10. I use multi-pathing policies today. How do I continue to use them with vVols?

A. Existing multi-pathing policies are applied to the PE LUN. This means that a path failover applies to all vVols bound on that PE. Multi-pathing plugins have been modified so that they do not treat internal vVols error conditions as path failures. vSphere ensures that older MPPs do not claim PE LUNs.

- Q11. How many Storage Containers can I have per storage array?

A. It depends on how the array is configured, but the number of containers is normally small (a few tens). There is a limit of 256 storage containers per host.

- Q12. Can a single Virtual Datastore span different physical arrays?

A. No. vSphere does not currently support this.

- Q13. We have the multi-writer VMDK feature today. How is it represented in vVols?

A. A vVol can be bound by multiple hosts. vSphere provides multi-writer support for vVols.

- Q14. Can I use VAAI enabled storage arrays along with vVols enabled arrays?

A. Yes. vSphere will use VAAI support whenever possible. In fact, VMware mandates ATS support for configuring vVols on SCSI.

- Q15. Can I use legacy datastores along with vVols?

A. Yes.

- Q16. Can I replace RDMs with vVols?

A. Yes, in most cases. As of vSphere 6.7, vVols support SCSI-3 reservations, which means clusters such as Microsoft WSFC are now supported. However, if an application requires direct access to the physical device, vVols are not a replacement for pass-thru RDM (ptRDM). vVols are superior to non-pass-thru RDM (nptRDM) in the majority of virtual disk use cases.

- Q17. Can I use SDRS/SIOC to provision vVols enabled arrays?

A. SDRS is not supported. SIOC will be supported, because vSphere supports I/O scheduling policies for individual vVols.

- Q18. Is VASA 2.0 a requirement for vVols support?

A. Yes. vVols requires VASA 2.0. Version 2.0 of the VASA protocol introduces a new set of APIs specifically for vVols that are used to manage storage containers and vVols. It also provides communication between vCenter, hosts, and the storage arrays.

- Q19. How do vVols affect backup software vendors?

A. vVols are modeled in vSphere exactly like today's virtual disks. The VADP APIs used by backup vendors are fully supported on vVols, just as they are on VMDK files on a LUN. Backup software that uses VADP should be unaffected.

- Q20. Is vSAN using some of the vVols features under the covers?

A. No. Although vSAN offers some of the same capabilities (representing virtual disks as objects in storage, for instance) and introduces the ability to manage storage SLAs per object with SPBM, it does not use the same mechanisms as vVols. vVols use VASA 2.0 to communicate with an array's VASA Provider to manage vVols on that array, whereas vSAN uses its own APIs to manage virtual disks. Both use SPBM. Because SPBM can present and interpret storage-specific capabilities, it spans both vSAN capabilities and vVols array capabilities and provides a single, uniform way to manage storage profiles and virtual disk requirements.

- Q21. Can you shrink the Storage Container as well as increase on the fly?

A. Storage Containers are a logical entity only and are entirely managed by the storage array. In theory, there's nothing to prevent them from growing and shrinking on the fly. That capability is up to the array vendor to implement.

- Q22. Where are the Protocol Endpoints (PEs) set up? In vCenter with the vSphere Web Client?

A. PEs are configured on the array side, and vCenter is informed about them automatically through the VASA Provider. Hosts discover SCSI PEs the same way they discover today's LUNs; NFS mount points are configured automatically.

- Q23. Where are the array policies (snap, clone, replicate) applied?

A. Each array supports a certain set of capabilities (snapshot, clone, encryption, etc.), and these are defined at the storage container level. In vSphere, a policy is a combination of multiple capabilities. When a VM is provisioned, the datastores that match its policy are presented as recommended.

- Q24. From the vSphere Admin's perspective, is a Virtual Datastore accessed like a LUN (storage browser, VM logs, .vmx config file, etc.)?

A. You can browse a vVols Datastore as you would browse any other datastore (you will see all config vVols, one per VM). These config vVols hold the information that was previously kept in the VM's directory, such as the vmx file, VM logs, etc.

- Q25. Is there a maximum number of vVols or maximum capacity for an SC/PE?

A. Those limits are entirely determined by the array vendor's implementation. The vVols implementation does not impose any particular limits.

- Q26. Can you shrink the PE as well as increase on the fly?

A. PEs don't really have a size; they're just a conduit for data traffic. There's no file system created on the LUN, for instance. A PE is simply the destination for data I/O on the vVols that are bound to it.

- Q27. Does the virtual disk vVol contain both the .vmdk file and the -flat.vmdk file as a single object?

A. No. The .vmdk file (the virtual disk descriptor file) is stored in the config vVol with the other VM description information (vmx file, log files, etc.). The vVol object takes the place of the -flat.vmdk file, and the .vmdk descriptor file holds the ID of that vVol.

- Q28. How many vVols are created for a VM? Is there one vVol for the -flat.vmdk file and a different vVol for the .vmx file, etc.?

A. For every VM, a single vVol is created to replace the VM directory in today's system. That's 1 so-called "config" vVol. Then there's 1 vVol for every virtual disk, 1 vVol for swap if needed, 1 vVol per disk snapshot, and 1 per memory snapshot. The minimum is typically 3 vVols per VM (1 config, 1 data, 1 swap). Each VM snapshot adds 1 vVol per virtual disk and 1 memory snapshot vVol (if requested), so a VM with 3 virtual disks would have 1+3+1=5 vVols. Snapshotting that VM would add 3+1=4 vVols.
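The arithmetic in this answer can be written as two small helpers. The function and parameter names are chosen for this illustration and are not part of any VMware tool:

```python
def base_vvol_count(virtual_disks: int, needs_swap: bool = True) -> int:
    """vVols for a VM before any snapshots: 1 config + 1 per disk + optional swap."""
    config = 1
    swap = 1 if needs_swap else 0
    return config + virtual_disks + swap


def snapshot_vvol_count(virtual_disks: int, include_memory: bool = True) -> int:
    """Extra vVols added by one VM snapshot: 1 per disk + optional memory snapshot."""
    return virtual_disks + (1 if include_memory else 0)


print(base_vvol_count(1))      # typical minimum: 1 config + 1 data + 1 swap = 3
print(base_vvol_count(3))      # 1 + 3 + 1 = 5
print(snapshot_vvol_count(3))  # 3 + 1 = 4
```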

- Q29. From a vSphere perspective, are snapshots allowed to be created at the individual VMDK (vVol) level?

A. The APIs provide for snapshots of individual vVols, but note that the UI only provides snapshots on a per-VM basis, which internally translates to requests for (simultaneous) snapshots of all of a VM's virtual disks. Snapshots are not taken per LUN, though.

- Q30. Will PowerCLI provide support for native vVols cmdlets?

A. Yes.

- Q31. Is there documentation that shows exactly which files belong to each type of vVols object?

A. There are no "files". The vVols are directly stored by the storage array and are referenced by the VM as it powers on. There is metadata information linking a vVol to a particular VM so a management UI could be created for the vVols of a VM.

- Q32. Are there any NFS or SCSI conversions going on at the PE or on the array side?

A. No. Storage Containers are configured, and the PEs are set up to use NFS or SCSI for data, in advance. The NFS traffic is unaltered NFS using a mount point and a path. SCSI I/O is directed to a particular vVol using the secondary LUN ID field of the SCSI command.

- Q33. Will it be possible to use storage policy definitions to automatically place VMs on different SCs or array types, such as flash?

A. Yes. SPBM will separate all datastores (VMFS, vSAN, vVols, NFS) into "Compatible" and "Incompatible" sets based on the VM's requirement policy.
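The compatible/incompatible split can be pictured as a subset check. The sketch below is a simplified assumption, not how SPBM is implemented: it treats a policy as a set of required capability names and each datastore as a set of advertised capabilities. The datastore names and capability strings are invented for the example.

```python
def partition_datastores(datastores, required):
    """Split datastores into (compatible, incompatible) name lists.

    datastores: dict mapping datastore name -> set of advertised capabilities.
    required:   set of capabilities the VM's policy asks for.
    A datastore is compatible when it advertises every required capability.
    """
    compatible, incompatible = [], []
    for name, capabilities in sorted(datastores.items()):
        if required <= capabilities:
            compatible.append(name)
        else:
            incompatible.append(name)
    return compatible, incompatible


datastores = {
    "vvol-gold": {"snapshot", "replication", "flash"},
    "vvol-silver": {"snapshot"},
    "vmfs-01": set(),
}
compatible, incompatible = partition_datastores(datastores, {"snapshot", "flash"})
print(compatible)    # ['vvol-gold']
print(incompatible)  # ['vmfs-01', 'vvol-silver']
```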

About the Author

Rawlinson Rivera was a Senior Architect at VMware focused on Software-Defined Storage technologies, primarily responsible for Virtual SAN and vSphere Virtual Volumes (vVols).

Twitter: @PunchingClouds

Updates by:

Jason Massae is the Sr. Technical Marketing Architect for vVols and Core Storage, focusing on external storage technologies and working closely with VMware storage partners.

Twitter: @jbmassae