How vSAN Cluster Topologies Change Network Traffic

The flexibility of vSAN, and of VCF powered by vSAN, gives our customers a multitude of design options to meet virtually any use case, in ways that are easy to operate and adjust for future needs. With that flexibility come questions about both design and operation. VMware provides guidance on both topics through the vSAN Design Guide and the vSAN Operations Guide to help you make the best choices for your deployment of vSAN.

The topology and features used with vSAN can change where and how vSAN storage traffic traverses a network. vSAN is a distributed storage system, and while it provides unprecedented levels of flexibility, these choices can affect your network in ways you may not have considered.

Let's look at some examples.

Stretched clusters

Stretched cluster topologies provide a way to ensure that a VM will stay running and available should one site of the stretched cluster become unavailable. To provide this resilience, all write operations for a VM must traverse the inter-site link (ISL) so that the data is protected at both sites. vSAN minimizes use of the ISL by serving reads from the site where the VM resides, and it also delivers write requests efficiently, as shown in Figure 4 of the post "Performance with Stretched Clusters." Nevertheless, the ISL is a critical component of a vSAN stretched cluster topology.

Figure 1. Data path considerations in a stretched cluster topology (witness site omitted for clarity).
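To make the ISL's role more concrete, here is a minimal Python sketch that estimates the ISL bandwidth consumed by guest writes. It is a simplified model, not a vSAN sizing formula: it assumes reads are served from the local site, treats the number of times each write crosses the ISL as a parameter (`copies_across_isl`, defaulting to 1), and ignores resynchronization and witness traffic. The function names, the 70% utilization ceiling, and the workload numbers are all hypothetical.

```python
def isl_write_gbps(write_iops: float, io_size_kib: float,
                   copies_across_isl: int = 1, overhead: float = 0.0) -> float:
    """Rough ISL bandwidth (Gbit/s) consumed by guest writes.

    Simplified, hypothetical model:
    - reads are served from the local site, so only writes cross the ISL
    - each write crosses the ISL `copies_across_isl` times
    - `overhead` is a fractional allowance for protocol and metadata traffic
    """
    bytes_per_sec = write_iops * io_size_kib * 1024 * copies_across_isl
    return bytes_per_sec * 8 * (1 + overhead) / 1e9


def isl_has_headroom(demand_gbps: float, isl_gbps: float,
                     max_utilization: float = 0.7) -> bool:
    """True if demand stays under a chosen utilization ceiling (hypothetical 70%)."""
    return demand_gbps <= isl_gbps * max_utilization


# Hypothetical workload: 20,000 write IOPS at 32 KiB per write.
demand = isl_write_gbps(20_000, 32)
print(f"{demand:.2f} Gbit/s")        # 5.24 Gbit/s
print(isl_has_headroom(demand, 10))  # True  (5.24 <= 7.0)
print(isl_has_headroom(demand, 5))   # False (5.24 > 3.5)
```

Even in this simplified form, ISL demand scales directly with the write rate of every VM in the stretched cluster, which is why ISL bandwidth and latency deserve careful attention.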

The dependence on the ISL (for bandwidth and latency) is fairly obvious to most, but the ISL is not the only networking component to consider in the topology. The path from the ISL to the hosts at each site must not be burdened with firewalls, intrusion detection systems (IDS), load balancers, proxies, or similar intercepting devices. In the data path, these devices offer very little other than slowing it down. My colleague John Nicholson wrote a humorous and informative post on the matter, called "Peanut Butter is not Supported with vSphere Storage Networking for vSAN and VCF."

Often overlooked, the capabilities and performance of the network switchgear at each site can be just as important as in a standard vSAN cluster, especially if storage policies assign secondary levels of resilience. Secondary levels of resilience can change where and how writes are distributed across the vSAN hosts within each site. See the post "Performance with vSAN Stretched Clusters" for more information.

Recommendation: Keep the data path between sites as simple and efficient as possible. This delivers the highest levels of performance and prevents critical storage traffic from being manipulated or delayed by unnecessary solutions.

Large clusters

As noted in the blog post "Performance Capabilities in Relation to vSAN Cluster Size," given the same number of hosts connecting to a pair of switches, there is very little correlation between cluster size and the demands placed on the switchgear. However, cluster size can change where and how vSAN traffic traverses the network.

For example, consider an environment with four full-height racks using a leaf-spine network, as shown in Figure 2. These 42U racks can typically hold a maximum of twenty 2U hosts, leaving enough room for the top-of-rack/leaf switches. The 20-host cluster shown on the left transmits vSAN traffic only through the top-of-rack switches in its rack, while a 60-host vSAN cluster transmits vSAN traffic across the spine. There is nothing inherently wrong with traffic crossing the spine (the examples that follow do so as well), but this illustrates how cluster size can change the network resources that vSAN uses.

Figure 2. Comparing vSAN traffic in a smaller cluster to a larger cluster.
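The rack arithmetic above, and its effect on spine traffic, can be sketched in a few lines of Python. This is a hypothetical model: it assumes 2U of each rack is reserved for the leaf switches (the text only says "enough room"), that hosts fill racks in order, and, simplistically, that every host exchanges equal traffic with every other host. The constants and function names are illustrative only.

```python
RACK_UNITS = 42     # full-height rack, as in the example above
HOST_UNITS = 2      # 2U hosts
SWITCH_UNITS = 2    # assumption: space reserved for the top-of-rack/leaf switches
HOSTS_PER_RACK = (RACK_UNITS - SWITCH_UNITS) // HOST_UNITS  # 20


def rack_layout(hosts: int, per_rack: int = HOSTS_PER_RACK) -> list[int]:
    """Fill racks in order and return the number of hosts in each rack."""
    full, remainder = divmod(hosts, per_rack)
    return [per_rack] * full + ([remainder] if remainder else [])


def cross_rack_fraction(layout: list[int]) -> float:
    """Share of host-to-host flows that leave their rack and cross the spine,
    assuming every host exchanges equal traffic with every other host."""
    total = sum(layout)
    if total < 2:
        return 0.0
    same_rack_pairs = sum(n * (n - 1) for n in layout)
    return 1 - same_rack_pairs / (total * (total - 1))


for cluster_size in (20, 60):
    layout = rack_layout(cluster_size)
    print(cluster_size, "hosts:", len(layout), "rack(s),",
          f"{cross_rack_fraction(layout):.0%} of flows cross the spine")
# 20 hosts: 1 rack(s), 0% of flows cross the spine
# 60 hosts: 3 rack(s), 68% of flows cross the spine
```

Real vSAN traffic flows between the host running a VM and the hosts holding that VM's object components rather than uniformly between all hosts, but the trend is the same: once a cluster spans racks, a large share of its traffic uses the spine.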

Recommendation: If smaller clusters are deployed with no rack-level resilience, ensure all of the hosts in a vSAN cluster are in the same rack and use the same top-of-rack switches. If using larger clusters, ensure sufficient networking capabilities exist at the spine.

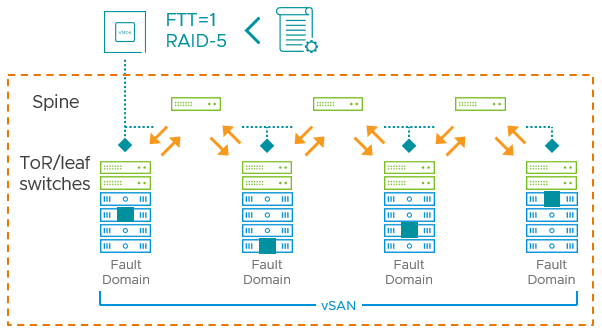

Using the vSAN Fault Domains Feature

The fault domains feature in vSAN gives administrators a way to provide rack-level or room-level resilience. The blog post "Design and Operation Considerations when Using vSAN Fault Domains" addresses many of the common questions about fault domains. But just as with larger clusters, using the fault domains feature forces vSAN traffic to traverse the spine of a leaf-spine architecture. Again, this is not necessarily a bad thing, but knowing it can help you understand how resource usage may be affected.

Figure 3. The traversal of vSAN data when using the vSAN Fault Domains feature.
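Why fault domains push traffic across the spine can be shown with a small Python sketch. It assumes one fault domain per rack, RAID-1 mirroring with two data replicas placed in distinct fault domains, and writes sent to every replica; the witness component is ignored. Under those assumptions, at most one replica can share a rack with the VM's host, so at least one copy of every write must cross the spine. The rack names and helper functions are hypothetical.

```python
from itertools import permutations


def spine_crossings(vm_rack: str, replica_racks: tuple[str, ...]) -> int:
    """Number of replica writes that must leave the rack hosting the VM."""
    return sum(1 for rack in replica_racks if rack != vm_rack)


def fewest_crossings(fault_domains: list[str], replicas: int = 2) -> int:
    """Best case over every VM location and every placement of `replicas`
    mirrored copies in distinct fault domains (one fault domain per rack)."""
    if replicas > len(fault_domains):
        raise ValueError("need at least one fault domain per replica")
    return min(
        spine_crossings(vm_rack, placement)
        for vm_rack in fault_domains
        for placement in permutations(fault_domains, replicas)
    )


# Hypothetical: three racks configured as three vSAN fault domains.
print(fewest_crossings(["rack-a", "rack-b", "rack-c"]))  # 1

# Without fault domains, both replicas may land on different hosts in one rack.
print(spine_crossings("rack-a", ("rack-a", "rack-a")))   # 0
```

The second case is only a possibility, not a guarantee: without fault domains, vSAN separates replicas by host, and in a cluster that spans racks those hosts may still sit in different racks.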

Recommendation: Follow the recommended practices described in "Design and Operation Considerations when Using vSAN Fault Domains" and in the vSAN Design Guide for optimal vSAN Fault Domains configurations.

HCI Mesh

HCI Mesh similarly affects how and where storage traffic traverses the network. Unlike the previous examples, HCI Mesh server clusters transmit and receive vSAN storage traffic to and from other clusters in addition to their own. These external client clusters could be other vSAN clusters borrowing storage from another cluster, vSphere clusters augmenting existing external storage, or vSphere clusters using an HCI Mesh server cluster exclusively for their storage needs.

In these cases, vSAN storage traffic may traverse the spine to other racks or areas of the data center. This can run contrary to assumptions made by network teams, who need to be aware of these considerations so that traffic shaping or physical limits across the spine do not constrain performance.

Figure 4. The potential reshaping of network traffic when using HCI Mesh.
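One way a network team might check whether the spine can absorb HCI Mesh traffic is to look at leaf oversubscription and uplink headroom. The Python sketch below is a rough, hypothetical model: it treats a leaf switch's spine-facing uplinks as the bottleneck and ignores ECMP hashing imbalance, microbursts, and reduced capacity during a link failure. All port counts, speeds, and traffic figures are illustrative assumptions.

```python
def leaf_oversubscription(hosts: int, host_gbps: float,
                          uplinks: int, uplink_gbps: float) -> float:
    """Host-facing capacity divided by spine-facing capacity on one leaf switch."""
    return (hosts * host_gbps) / (uplinks * uplink_gbps)


def spine_headroom_gbps(uplinks: int, uplink_gbps: float,
                        existing_gbps: float, remote_storage_gbps: float) -> float:
    """Uplink capacity left after existing traffic and new remote vSAN traffic.
    A negative result means the uplinks would be saturated."""
    return uplinks * uplink_gbps - existing_gbps - remote_storage_gbps


# Hypothetical leaf: 20 hosts at 25Gb each, 4 x 100Gb uplinks to the spine.
print(leaf_oversubscription(20, 25, 4, 100))  # 1.25
# Hypothetical load: 150Gb of existing traffic plus 120Gb of HCI Mesh storage traffic.
print(spine_headroom_gbps(4, 100, 150, 120))  # 130
```

A leaf designed on the assumption that storage traffic stays in the rack may have been sized with far fewer uplinks; rerunning this kind of estimate with HCI Mesh traffic included is a quick way to spot that gap.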

Recommendation: Use 25/100Gb networking as a minimum for clusters consuming or providing storage to one another. As documented in "Running Tier-1 Apps using HCI Mesh," sufficient network bandwidth with minimal latency can deliver exemplary performance for remote vSAN storage.

Summary

The flexibility of vSAN means that you can create clusters of all shapes and sizes. Understanding how topology, features, and the size of a vSAN cluster can affect the data path is an important step in any comprehensive design exercise.