Fujitsu PRIMEFLEX for VMware vSAN 20,000 User Mailbox Exchange 2016 Mailbox Resiliency Storage Solution

Overview

This section covers the overview and disclaimer of this solution.

This document provides information on Fujitsu PRIMEFLEX for VMware vSAN solution for Microsoft Exchange Server, based on the Microsoft Exchange Solution Reviewed Program (ESRP) – Storage program*. For any questions or comments regarding the contents of this document, see Contact for Additional Information.

*The ESRP – Storage program was developed by Microsoft Corporation to provide a common storage testing framework for storage and server OEMs to provide information on their storage solutions for Microsoft Exchange Server software. For more details on the Microsoft ESRP – Storage program, see http://technet.microsoft.com/en-us/exchange/ff182054.aspx.

Disclaimer

This document has been produced independently of Microsoft Corporation. Microsoft Corporation expressly disclaims responsibility for, and makes no warranty, express or implied, with respect to, the accuracy of the contents of this document.

The information contained in this document represents the current view of Fujitsu and VMware on the issues discussed as of the date of publication. Due to changing market conditions, it should not be interpreted to be a commitment on the part of Fujitsu and VMware, and Fujitsu and VMware cannot guarantee the accuracy of any information presented after the date of publication.

Components

This section provides an overview of the components used in this solution.

Hardware Resource

Fujitsu Integrated System PRIMEFLEX for VMware vSAN is a validated server configuration for VMware® vSAN ReadyNode™ in a tested and certified hardware form factor for vSAN deployment, jointly recommended by Fujitsu and VMware. For more details about VMware vSAN ReadyNode, visit VMware Compatibility Guide.

Hardware List

| Item | Description |
| --- | --- |
| Platform | Fujitsu PRIMEFLEX for VMware vSAN |
| Hardware Model | Fujitsu PRIMERGY RX2540 M4 |
| CPU | Intel(R) Xeon(R) Gold 6154 CPU @ 3.00GHz |
| Socket/core | 2/18 |
| Memory | 192GB |
| Network | 2 x 1Gbps Intel Corporation I350 Gigabit Network Connection; 4 x Intel(R) Ethernet Connection X722 for 10GBASE-T |
| Hypervisor | VMware ESXi, 6.7.0, 9214924 |
| Storage | VMware vSAN 6.7.0; 1 x 400GB Cache SSD; 4 x 1,920GB Capacity SSDs |

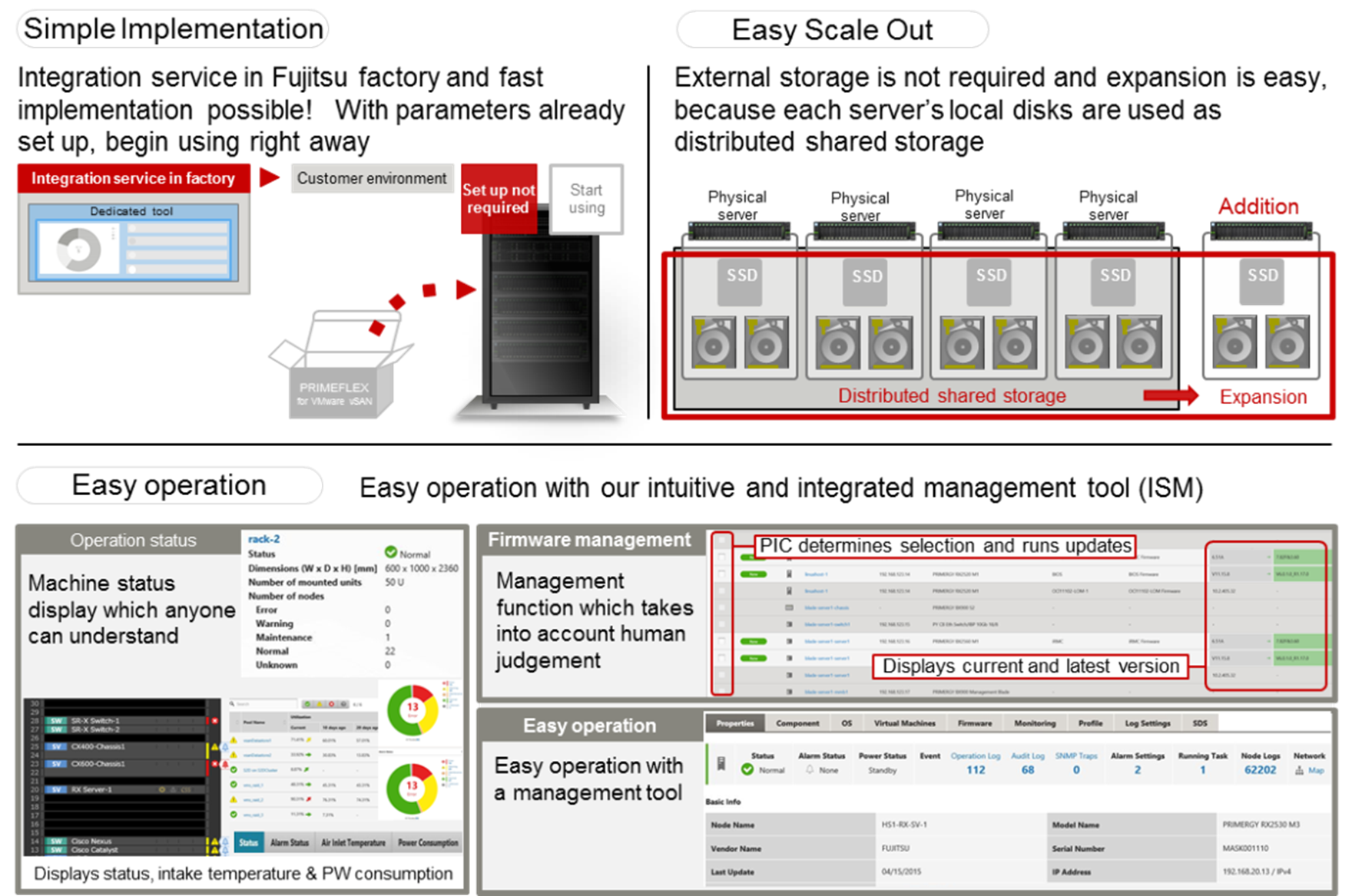

On top of the general VMware vSAN ReadyNode, PRIMEFLEX for VMware vSAN adds several unique features delivered through Fujitsu factory integration service and Fujitsu Software Infrastructure Manager.

Figure 1. Fujitsu Factory Integration Service and Fujitsu Software Infrastructure Manager

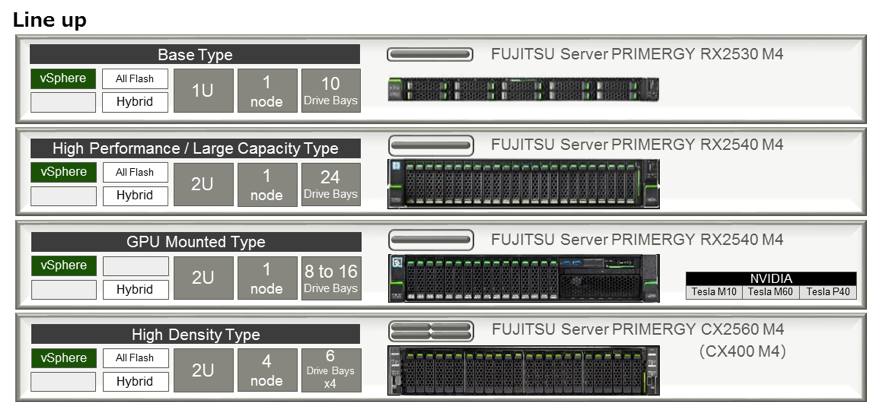

Fujitsu offers several types of PRIMEFLEX systems to fit various workloads.

Figure 2. PRIMEFLEX System Types

For more details about PRIMEFLEX for VMware vSAN, visit FUJITSU Integrated System.

VMware vSphere 6.7

VMware vSphere 6.7 is the next-generation infrastructure for next-generation applications. It provides a powerful, flexible, and secure foundation for business agility that accelerates the digital transformation to cloud computing and promotes success in the digital economy. vSphere 6.7 supports both existing and next-generation applications through its:

- Simplified customer experience for automation and management at scale

- Comprehensive built-in security for protecting data, infrastructure, and access

- Universal application platform for running any application anywhere

With vSphere 6.7, customers can run, manage, connect, and secure their applications in a common operating environment, across clouds and devices.

VMware vSAN 6.7

VMware vSAN, the market-leading hyperconverged infrastructure (HCI) solution, enables low-cost and high-performance next-generation HCI solutions. vSAN converges traditional IT infrastructure silos onto industry-standard servers and virtualizes physical infrastructure to help customers easily evolve their infrastructure without risk, improve TCO over traditional resource silos, and scale to tomorrow with support for new hardware, applications, and cloud strategies. The natively integrated VMware infrastructure combines radically simple VMware vSAN storage, the market-leading VMware vSphere Hypervisor, and the VMware vCenter Server® unified management solution, all on the broadest and deepest set of HCI deployment options.

vSAN 6.7 introduces further performance and space efficiencies. Adaptive Resync ensures that a fair share of resources is available for VM I/O and resync I/O during dynamic changes in system load, which provides optimal use of resources. Optimization of the destaging mechanism drains data more quickly from the write buffer to the capacity tier. The swap object for each VM is now thin provisioned by default and also matches the storage policy attributes assigned to the VM, which introduces the potential for significant space savings.

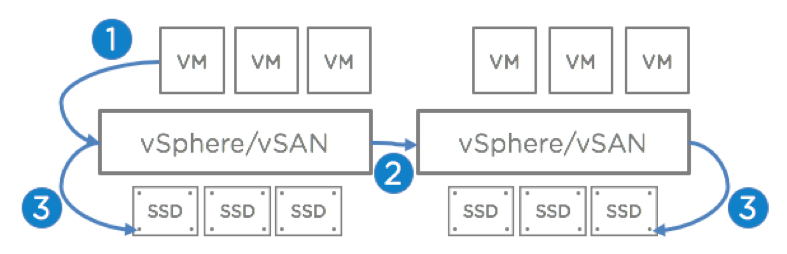

Native to vSphere Hypervisor

vSAN does not require the deployment of storage virtual appliances or the installation of a vSphere Installation Bundle (VIB) on every host in the cluster. vSAN is native in the vSphere hypervisor and typically consumes less than 10% of the computing resources on each host. vSAN does not compete with other virtual machines for resources and the I/O path is shorter.

Figure 3. vSAN is Native in the vSphere Hypervisor

As shown in Figure 3, a shorter I/O path and the absence of resource-intensive storage virtual appliances enable vSAN to provide excellent performance with minimal overhead. Higher virtual machine consolidation ratios translate into a lower total cost of ownership.

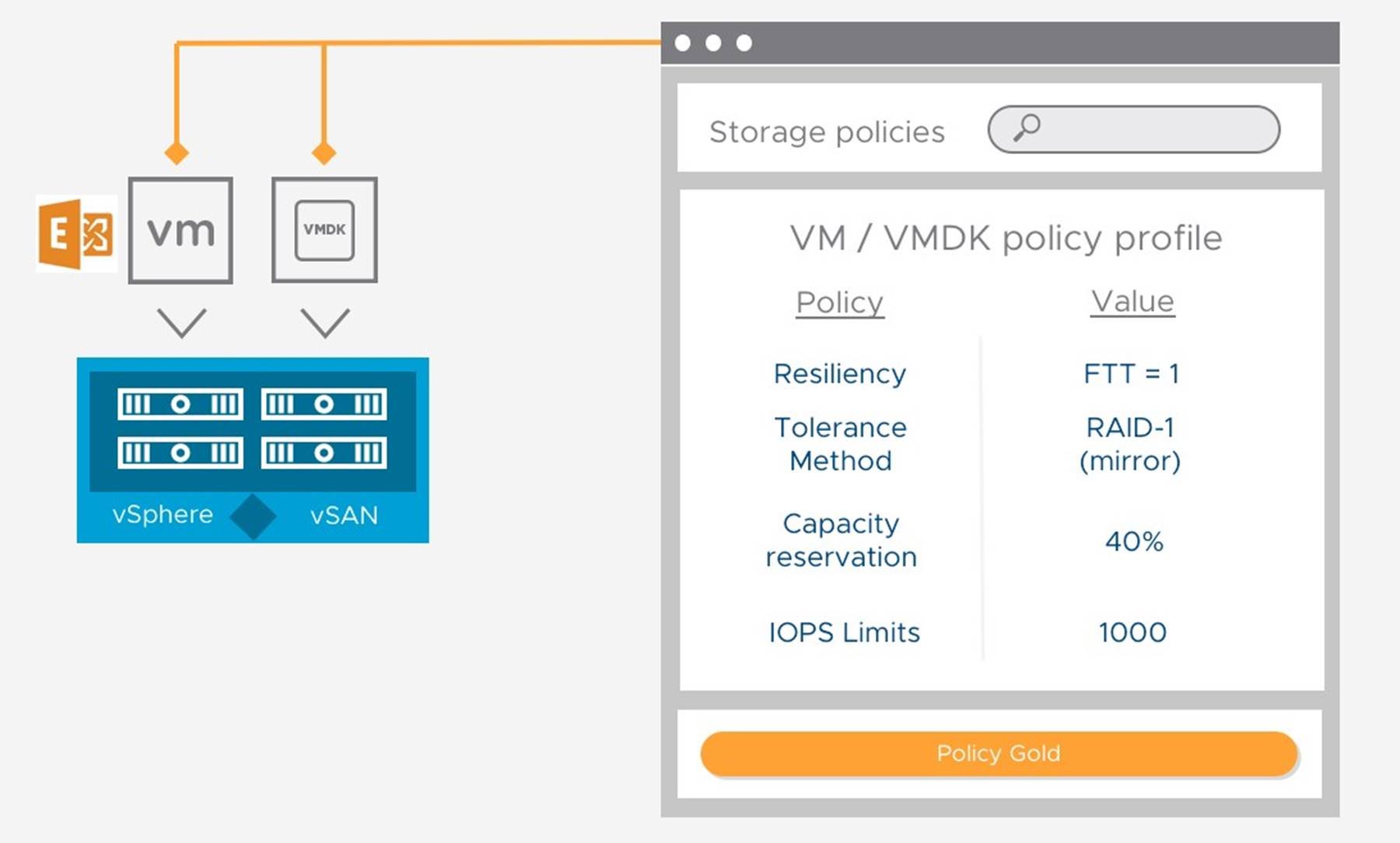

Storage Policy Based Management

As shown in Figure 4, Storage Policy-Based Management (SPBM) from VMware enables the precise control of storage services. Like other storage solutions, VMware vSAN provides services such as resiliency, tolerance method, capacity reservation, and IOPS limits. A storage policy contains one or more rules that define service levels.

Figure 4. vSAN Storage Policy Based Management

Storage policies are created and managed using the vSphere Web Client. Policies can be assigned to virtual machines and individual objects such as a virtual disk. Storage policies are easily changed or reassigned if application requirements change. These modifications are performed with no downtime and without the need to migrate virtual machines from one datastore to another. SPBM makes it possible to assign and modify service levels with precision on a per-virtual machine basis.

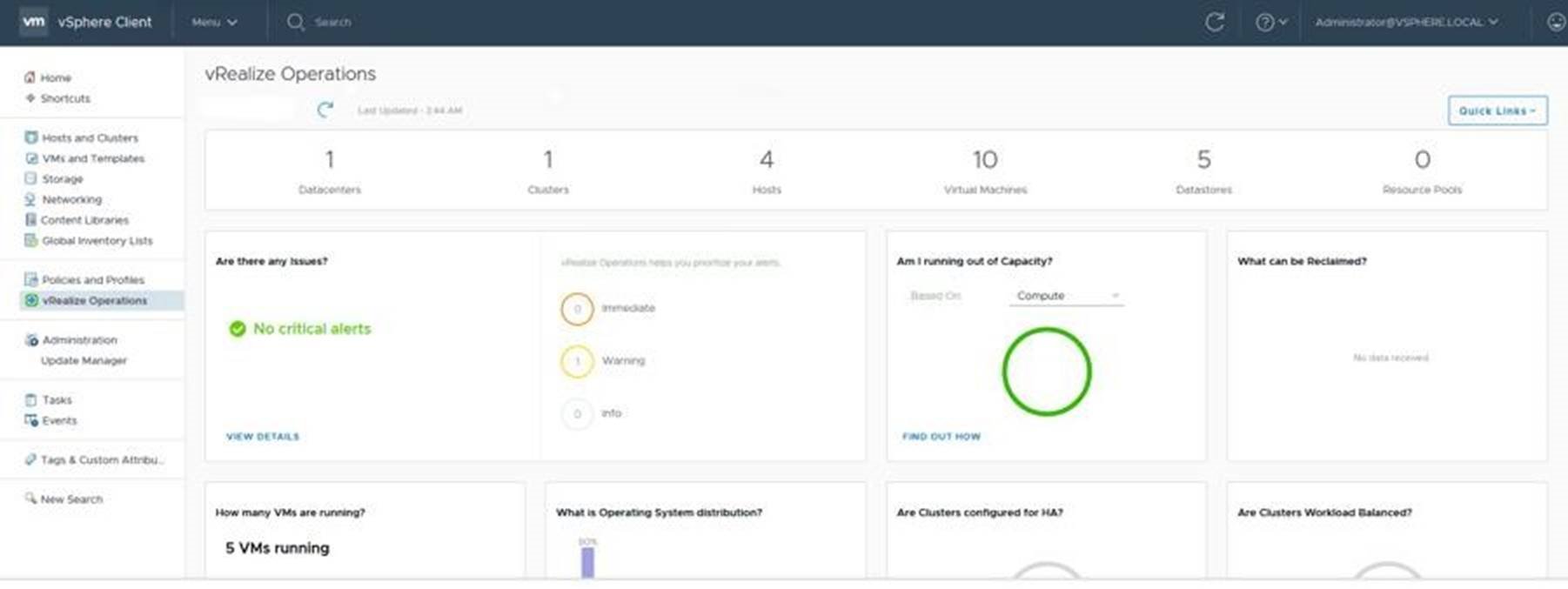

Monitoring with vRealize Operations

vSphere and vSAN 6.7 include VMware vRealize® Operations™ within vCenter. This new feature allows vSphere customers to see a subset of the intelligence offered by vRealize Operations through a single vCenter user interface. Lightweight, purpose-built dashboards are included for both vSphere and vSAN. The feature is easy to deploy, provides multi-cluster visibility, and does not require any additional licensing.

Figure 5. vRealize Operation Management Portal

Solution Description

Solution Overview

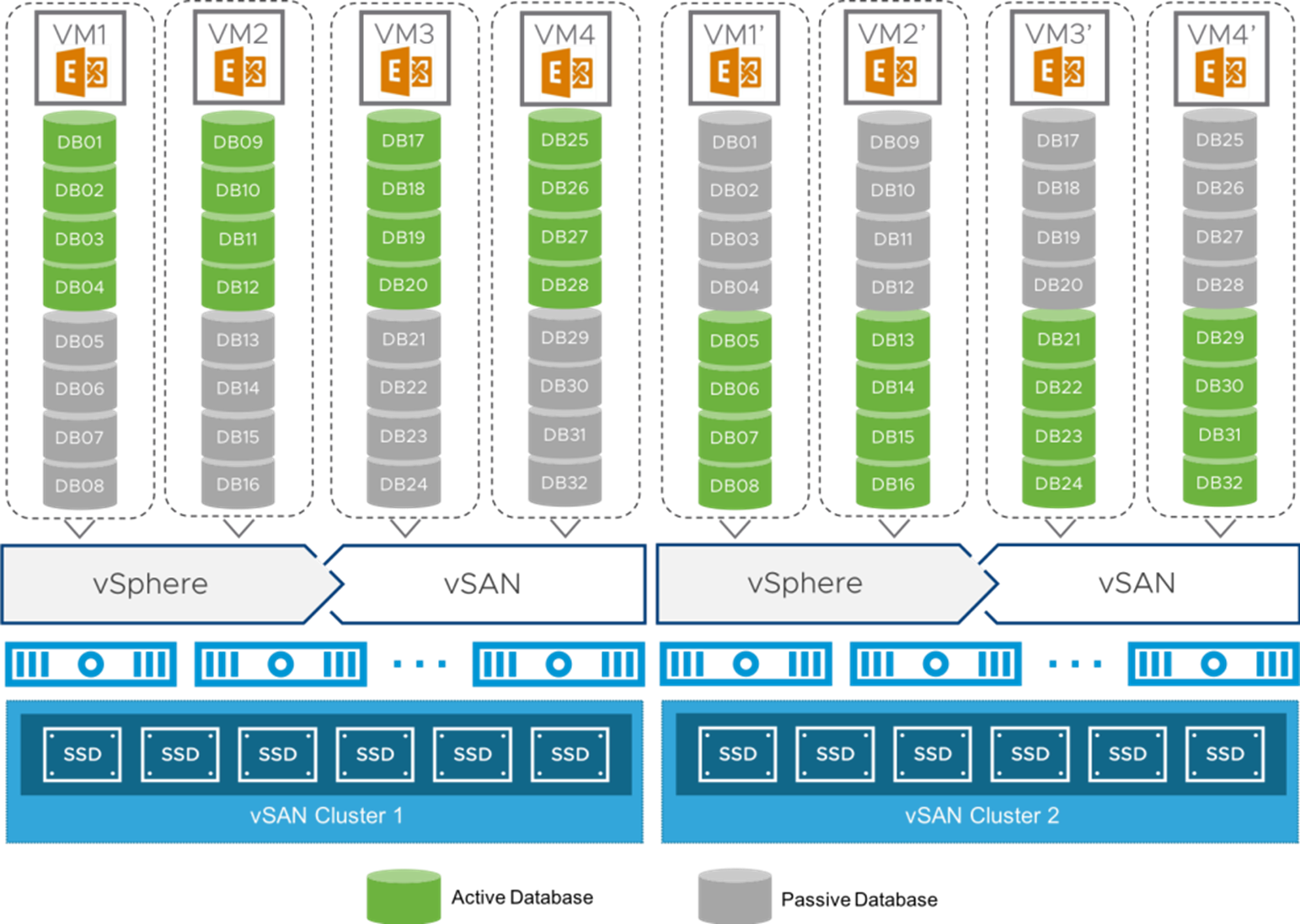

As shown in Figure 6, we designed a Microsoft Exchange 2016 mailbox resiliency solution targeted at medium to large enterprises. The Exchange Database Availability Group (DAG) feature is enabled to support mailbox resiliency across the VMware vSAN clusters. Each VMware vSAN cluster consists of four Fujitsu PRIMERGY RX2540 M4 ESXi servers, each with 1 x 400GB SSD as the cache tier and 4 x 1,920GB SSDs as the capacity tier.

Each mailbox virtual machine is configured with 8 vCPU and 64 GB memory, running Microsoft Exchange 2016 on Windows Server 2016 Datacenter platform. A single mailbox VM contains eight databases including 4 active copies and 4 passive copies.

Figure 6. 20,000 Mailbox Resilience Solution Architecture on VMware vSAN

Network configuration

We created a vSphere Distributed Switch™ to act as a single virtual switch across all four Fujitsu PRIMERGY RX2540 M4 servers in the vSAN cluster.

The vSphere Distributed Switch used two 10GbE adapters for teaming and failover. A port group defines properties regarding security, traffic shaping, and NIC teaming. To isolate vSAN traffic from VM (node) and vMotion traffic, we used the default port group settings except for the uplink failover order. For each port group, we assigned one dedicated NIC as the active link and the other NIC as the standby link. The uplink order for the vSAN port group is the reverse of the order for the VM and vMotion port group. See Table 1 for the network configuration.

Table 1. Distributed Switch Port Group Configuration

| Distributed port group | Active uplink | Standby uplink |
| --- | --- | --- |
| VMware vSAN | Uplink 1 | Uplink 2 |
| VM and vSphere vMotion | Uplink 2 | Uplink 1 |
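The failover order in Table 1 can be written down as data and checked for consistency. The following Python sketch is illustrative only: the `PortGroupTeaming` class and `check_teaming` function are hypothetical names introduced here, not part of any VMware tool or API, and the check simply confirms that each port group has distinct active and standby uplinks and that the two port groups do not share an active uplink.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class PortGroupTeaming:
    """Hypothetical record of one distributed port group's failover order."""
    name: str
    active: str
    standby: str


# Values copied from Table 1.
PORT_GROUPS = [
    PortGroupTeaming("VMware vSAN", active="Uplink 1", standby="Uplink 2"),
    PortGroupTeaming("VM and vSphere vMotion", active="Uplink 2", standby="Uplink 1"),
]


def check_teaming(groups: List[PortGroupTeaming]) -> List[str]:
    """Return a list of problems; an empty list means the layout is consistent."""
    problems = []
    for group in groups:
        if group.active == group.standby:
            problems.append(f"{group.name}: active and standby uplinks are the same")
    actives = [group.active for group in groups]
    if len(set(actives)) != len(actives):
        problems.append("port groups share an active uplink, so traffic is not isolated")
    return problems


print(check_teaming(PORT_GROUPS))  # prints []
```

Under normal conditions each traffic type has a 10GbE uplink to itself, and either uplink can carry both traffic types if the other fails.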

Exchange Virtual Machine Configuration

We configured each Exchange 2016 virtual machine as described in Table 2; all virtual machines have an identical configuration. The virtual disks are thin provisioned by default. We set the virtual SCSI controller type for the Exchange database disks, for both data and transaction logs, to VMware Paravirtual and distributed the disks evenly across the controllers.

Table 2. Exchange Virtual Machine Configuration

| Exchange VM | vCPU | Memory (GB) | Virtual Disks | SCSI ID (Controller, ID) | SCSI Type |
| --- | --- | --- | --- | --- | --- |
| EX01, EX02, EX03, EX04 | 8 | 64 | OS disk: 40GB | SCSI(0, 0) | LSI Logic |
| | | | Data disk 1: 320GB | SCSI(1, 0) | VMware Paravirtual |
| | | | Data disk 2: 320GB | SCSI(2, 0) | VMware Paravirtual |
| | | | Data disk 3: 320GB | SCSI(3, 0) | VMware Paravirtual |
| | | | Data disk 4: 320GB | SCSI(1, 1) | VMware Paravirtual |
| | | | Data disk 5: 320GB | SCSI(2, 1) | VMware Paravirtual |
| | | | Data disk 6: 320GB | SCSI(3, 1) | VMware Paravirtual |
| | | | Data disk 7: 320GB | SCSI(1, 2) | VMware Paravirtual |
| | | | Data disk 8: 320GB | SCSI(2, 2) | VMware Paravirtual |
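The even distribution of data disks in Table 2 follows a round-robin pattern across the three VMware Paravirtual controllers. A minimal Python sketch of that pattern is shown below; the function name `distribute_disks` is an illustrative assumption, and the sketch only reproduces the (controller, ID) pairs listed in the table rather than configuring anything.

```python
from typing import List, Tuple


def distribute_disks(num_disks: int, controllers: List[int]) -> List[Tuple[int, int]]:
    """Assign disks round-robin and return one (controller, unit ID) pair per disk."""
    count = len(controllers)
    return [(controllers[i % count], i // count) for i in range(num_disks)]


# Eight data disks spread over SCSI controllers 1, 2, and 3 (controller 0 holds the OS disk).
layout = distribute_disks(8, [1, 2, 3])
for number, (controller, unit) in enumerate(layout, start=1):
    print(f"Data disk {number}: SCSI({controller}, {unit})")
```

With eight disks and three controllers, controllers 1 and 2 each carry three disks and controller 3 carries two, which matches Table 2.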

vSAN Storage Policy Configuration

In this solution, we use the default vSAN storage policy for Exchange 2016 databases. The detailed configuration is defined in Table 3.

Table 3. vSAN Storage Policy Configuration

| Settings | Value | Description |
| --- | --- | --- |
| Failures to Tolerate | 1 | Defines the number of disk, host, or fault domain failures a storage object can tolerate. |
| Erasure Coding | RAID 1 (Mirroring) | Defines the method used to tolerate failures. By default, vSAN keeps two copies of each Exchange database as storage-level protection. |
| Number of disk stripes per object | 1 | The number of capacity disks across which each replica of a storage object is striped. |
| Checksum | Enabled | A checksum is calculated by default to protect against Exchange data corruption. |
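The capacity effect of the policy in Table 3 can be estimated with simple arithmetic: with RAID 1 mirroring, tolerating one failure means keeping two full replicas of each object. The Python sketch below is a rough estimate under stated assumptions: it ignores witness components and other vSAN metadata, and because the virtual disks are thin provisioned, it gives an upper bound on consumption rather than actual usage. The function name is hypothetical.

```python
def mirrored_footprint_gb(provisioned_gb: float, failures_to_tolerate: int = 1) -> float:
    """Upper-bound raw capacity for a RAID 1 object: one replica per tolerated failure, plus one."""
    return provisioned_gb * (failures_to_tolerate + 1)


DATA_DISKS_PER_VM = 8   # from Table 2
DATA_DISK_GB = 320      # from Table 2

per_disk = mirrored_footprint_gb(DATA_DISK_GB)
per_vm = mirrored_footprint_gb(DATA_DISKS_PER_VM * DATA_DISK_GB)
print(f"Per data disk: up to {per_disk:.0f} GB raw")
print(f"Per Exchange VM (data disks only): up to {per_vm:.0f} GB raw")
```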

Scalability for Exchange 2016 on vSAN

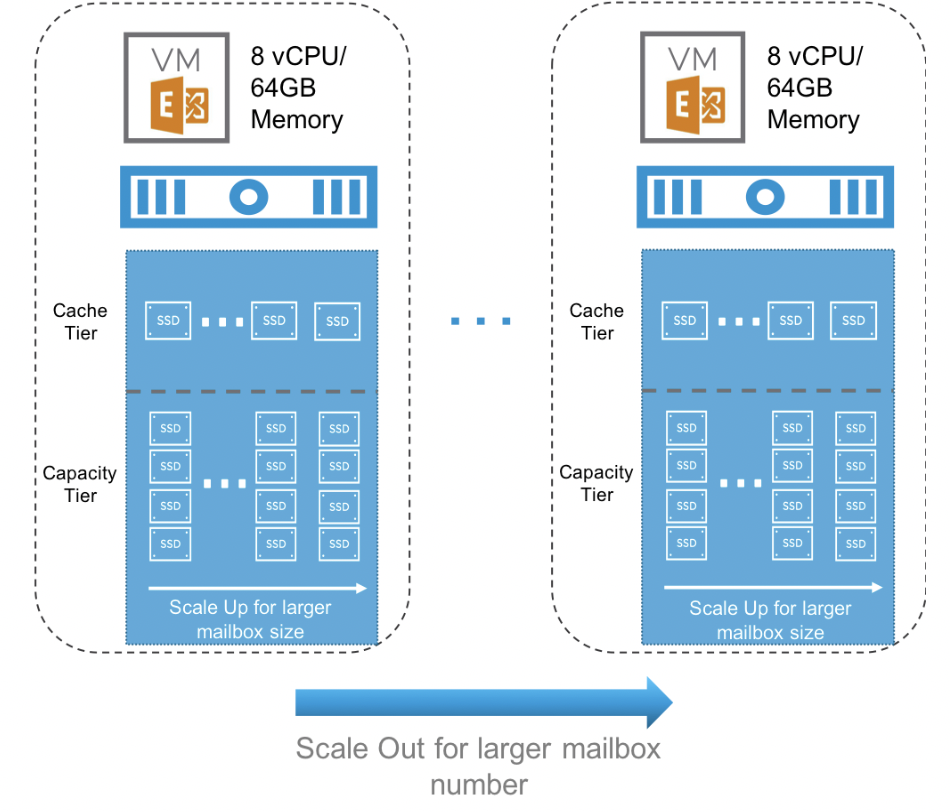

VMware vSAN is designed for easy scalability for business-critical applications. In this solution, we configured a four-node Fujitsu PRIMEFLEX for VMware vSAN cluster with one disk group per node for the Exchange 2016 mailbox resiliency solution. vSAN supports both scale-up and scale-out to meet capacity and performance requirements for Exchange.

Figure 7. Building Block Methodology for vSAN Scale-up and Scale-out Sizing for Exchange 2016

As shown in Figure 7, to size for larger mailboxes, we define each building block as a single disk group: 1 x 400GB cache tier SSD plus 4 x 1,920GB capacity tier SSDs in the Fujitsu PRIMERGY RX2540 M4 server. A single disk group can support up to 2,500 user mailboxes per node at 1GB per mailbox and up to 0.36 IOPS per user (including 20 percent headroom). To scale up to a 2GB mailbox size with the same profile, add another disk group to the system. Because a vSAN cluster contains multiple hosts, we recommend as a best practice that you scale up all servers in the cluster with an identical configuration.

To size for a larger number of mailboxes, we define each building block as a single vSAN node, that is, one Fujitsu PRIMERGY RX2540 M4 server. You can scale out to support an additional 2,500 user mailboxes with the same profile by adding another Fujitsu node to the vSAN cluster.
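The building-block approach can be sketched as a small sizing helper. The Python example below is illustrative only: the names `Sizing` and `size_cluster` are assumptions introduced here, and the sketch extrapolates linearly from the tested building block (2,500 mailboxes per node, one disk group per 1GB of mailbox size), which the tests in this document do not validate beyond the tested configuration. It also ignores platform limits on nodes and disk groups.

```python
import math
from dataclasses import dataclass

MAILBOXES_PER_NODE = 2500        # scale-out building block from this solution
MAILBOX_GB_PER_DISK_GROUP = 1.0  # scale-up building block from this solution


@dataclass
class Sizing:
    nodes: int
    disk_groups_per_node: int


def size_cluster(mailboxes: int, mailbox_gb: float) -> Sizing:
    """Estimate node and disk group counts by rounding up to whole building blocks."""
    nodes = math.ceil(mailboxes / MAILBOXES_PER_NODE)
    disk_groups = math.ceil(mailbox_gb / MAILBOX_GB_PER_DISK_GROUP)
    return Sizing(nodes=nodes, disk_groups_per_node=disk_groups)


print(size_cluster(10_000, 1))  # the tested four-node, single-disk-group configuration
print(size_cluster(12_500, 2))  # one more node and a second disk group per node
```

Treat the result as a starting point for the VMware vSAN Design and Sizing Guide, not as a substitute for it.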

For more details about vSAN sizing and scalability guide, visit VMware® vSAN™ Design and Sizing Guide.

Targeted Customer Profile

The targeted customer profile for the tested Microsoft Exchange 2016 Mailbox profile in this solution is defined as follows:

- 20,000 user mailboxes

- 1GB mailbox size

- 8 Exchange Servers with DAG configured

- Mailbox Resiliency with 2 database copies

- 0.36 IOPS per user mailbox (450 messages per day, including 20 percent headroom)

- 24x7 Background Database Maintenance job enabled
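The profile above implies a target IOPS figure for the whole deployment and for each server. The short Python sketch below works through that arithmetic. It assumes that the 20 percent headroom is applied multiplicatively to a base per-mailbox rate, which this document does not state explicitly, and the function name is illustrative.

```python
TARGET_IOPS_PER_MAILBOX = 0.36  # includes 20 percent headroom
HEADROOM = 0.20


def required_iops(mailboxes: int) -> float:
    """Target transactional IOPS for a given number of mailboxes."""
    return mailboxes * TARGET_IOPS_PER_MAILBOX


# Assumption: headroom is multiplicative, so base * (1 + headroom) = target.
base_iops_per_mailbox = TARGET_IOPS_PER_MAILBOX / (1 + HEADROOM)

print(f"Base IOPS per mailbox (assumed): {base_iops_per_mailbox:.2f}")
print(f"Target IOPS for 20,000 mailboxes: {required_iops(20_000):.0f}")
print(f"Target IOPS per server (2,500 mailboxes): {required_iops(2_500):.0f}")
```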

Tested Deployment

The following tables summarize the testing environment:

Simulated Exchange Configuration

| Item | Value |
| --- | --- |
| Number of Exchange mailboxes simulated | 20,000 |
| Mailbox Size | 1GB |
| Number of Database Availability Groups (DAGs) | 1 |
| Number of servers/DAG | 8 (4 tested) |
| Number of active mailboxes/server | 2,500 |
| Number of databases/host | 8 |
| Number of copies/database | 2 |
| Number of mailboxes/database | Up to 320 |
| Simulated profile: I/Os per second per mailbox (IOPS, including 20% headroom) | 0.36 |
| Database/Log LUN size | 320GB |
| Total database size for performance testing | 10TB |
| % storage capacity used by Exchange database | 71.4% (including vSAN storage mirroring copy) |

Storage Hardware

| Item | Value |
| --- | --- |
| Storage Connectivity | Pass-Through |
| Storage model and OS/firmware revision | VMware vSAN 6.7 build number 9214924 |
| Storage cache | 1 x 400GB SSD as Cache Tier per node |
| Number of storage controllers | 4 |
| Number of storage ports | 2 x 10Gb Ethernet ports per node |
| Maximum bandwidth of storage connectivity to host | 2 x 10Gbps per node |
| HBA model and firmware | Fusion-MPT 12GSAS SAS3008 PCI-Express Fw Rev. 13.00.00.00 |
| Number of HBAs/host | 1 |
| Host server type | 4 x Fujitsu PRIMERGY RX2540 M4, Intel(R) Xeon(R) Gold 6154 CPU @ 3.00GHz, 192GB memory |
| Total number of disks tested in solution | 1 Cache Tier SSD and 4 Capacity Tier SSDs per host |
| Maximum number of spindles that can be hosted in the storage | 24 per host |

Storage Software

| Item | Value |
| --- | --- |
| Storage Software | VMware vSAN 6.7 |
| HBA driver | lsi-msgpt3 version 16.00.01.00 |
| HBA QueueTarget Setting | N/A |
| HBA QueueDepth Setting | N/A |
| Multi-Pathing | NMP (Direct-Access) |
| Host OS | FUJITSU Custom Image for ESXi 6.7 |
| ESE.dll file version | 15.01.1531.003 |
| Replication solution name/version | N/A |

Storage Disk Configuration (Mailbox Store and Transactional Log Disks)

| Item | Value |
| --- | --- |
| Disk type, speed and firmware revision | Cache Tier: TOSHIBA PX05SMB040 SSD; Capacity Tier: HGST SDLL1CLR020T5CF1 SSD |
| Raw capacity per disk (GB) | Cache Tier: 400GB per disk; Capacity Tier: 1,920GB per disk |
| Number of physical disks in test | One disk group per host; Cache Tier: 1 SSD; Capacity Tier: 4 SSDs |
| Total raw storage capacity (GB) | 27.95TB |
| Disk slice size (GB) | N/A |
| Number of slices per LUN or number of disks per LUN | N/A |
| Raid level | RAID 1 (Mirroring) |
| Total formatted capacity | 19.22TB |
| Storage capacity utilization | 68.7% |
| Database capacity utilization | 35.7% |

Best Practices

This section highlights the best practices to be followed for the solution.

Exchange Server is a disk-intensive application. Based on the testing run using the ESRP framework, we recommend the following practices to improve storage performance. The best practices for Microsoft Exchange 2013 and Exchange 2016 apply to both versions.

- For Exchange virtualization best practices for VMware vSphere, visit Microsoft Exchange 2013 on VMware Best Practices Guide.

- For Exchange on VMware vSAN best practices, visit Microsoft Exchange 2013 on VMware vSAN.

- For Exchange 2007 best practices on storage design, visit Planning Storage Configurations.

Backup Strategy

VMware vSAN snapshot and clone technologies are primarily used for providing support to VM level backup and restore for Exchange operations.

vSphere Data Protection enables simple and robust backup and recovery solution integrated with vCenter and Microsoft Exchange. Site Recovery Manager provides a disaster recovery plan built in and automated within vCenter that can be tested before an outage, planned maintenance, or periodically in preparation for a disaster situation. Using VMware’s Site Recovery Manager and vSphere Data Protection provides a resilient and highly available Microsoft Exchange environment.

Third-party data protection products, such as Veeam, provide application-level backup and restore because they integrate with the Microsoft Volume Shadow Copy Service (VSS) writer to quiesce the application, which enables point-in-time backup and restore and simplifies database maintenance for Exchange administrators in a VMware virtualized environment. Best practices and implementation recommendations vary by vendor, so consult your data protection product vendor for the optimal solution.

Contact for Additional Information

See Storagehub for more vSAN details.

Test Result Summary

This section provides a high-level summary of the test data from ESRP and the link to the detailed html reports that are generated by the ESRP testing framework.

Reliability

Several tests in the framework check reliability by running for 24 hours. The goal is to verify that the storage can handle a high I/O load for a long period of time. Both log and database files are analyzed for integrity after the stress test to ensure that there is no database or log corruption.

The reliability test results are summarized as follows:

- Minimal performance drop compared with 2-hour performance test.

- No error reported in the saved eventlog file.

- No error reported during the database and log checksum process.

See Appendix A—Stress Test Result Report for more details.

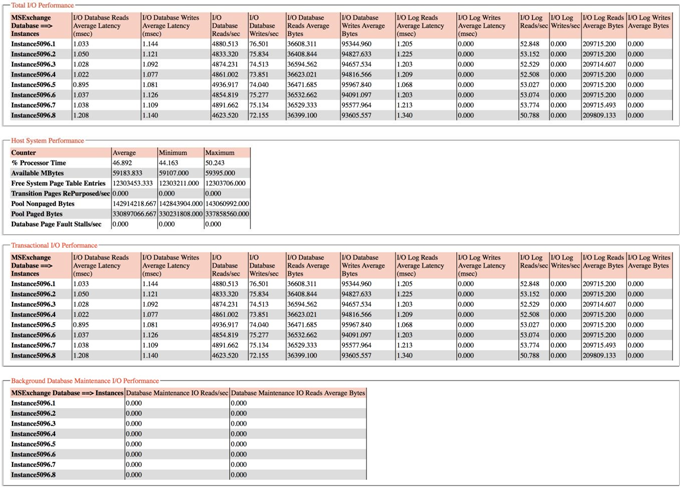

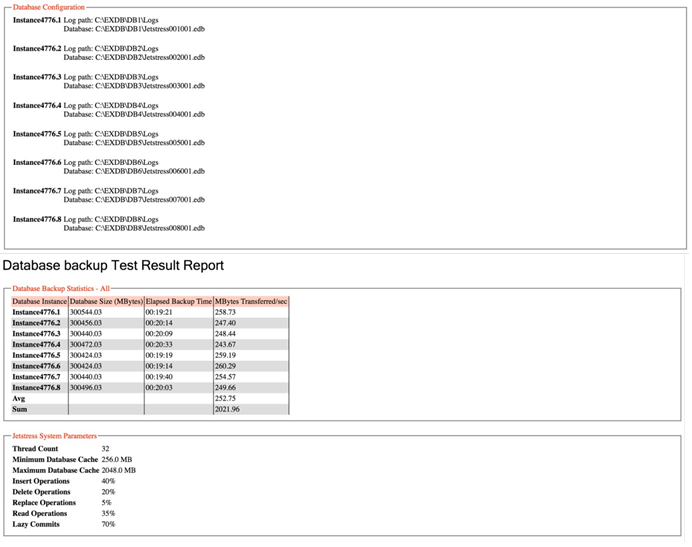

Storage Performance Results

The primary storage performance test is designed to exercise the storage with the maximum sustainable Exchange type of I/O for 2 hours. The test shows how long it takes for the storage to respond to an I/O under load. The data below is the sum of all logical disk I/Os and the average of all logical disk I/O latencies in the 2-hour test. Each server is listed separately, and the aggregated number across all servers is listed as well.

Individual Server Metrics

The following table shows the sum of I/Os across storage groups and the average latency across all storage groups on a per-server basis.

| Database I/O | EX01 | EX02 | EX03 | EX04 |
| --- | --- | --- | --- | --- |
| Database Disks Transfers/sec | 11,508.80 | 11,551.85 | 11,684.21 | 11,834.36 |
| Database Disks Reads/sec | 7,060.64 | 7,049.51 | 7,095.86 | 7,193.28 |
| Database Disks Writes/sec | 4,448.16 | 4,502.34 | 4,588.35 | 4,641.09 |
| Average Database Disk Read Latency (ms) | 1.51 | 1.51 | 1.47 | 1.44 |
| Average Database Disk Write Latency (ms) | 5.13 | 5.05 | 4.99 | 5.23 |
| Transaction Log I/O | | | | |
| Log Disks Writes/sec | 1,003.47 | 1,015.28 | 1,018.85 | 1,015.86 |
| Average Log Disk Write Latency (ms) | 2.58 | 2.51 | 2.47 | 2.51 |

Aggregate Performance across All Servers Metrics

The following table shows the sum of I/Os across all servers in the solution and the average latency across all servers in the solution.

| Database I/O | |
| --- | --- |
| Database Disks Transfers/sec | 46,579.22 |
| Database Disks Reads/sec | 28,399.29 |
| Database Disks Writes/sec | 18,179.93 |
| Average Database Disk Read Latency (ms) | 1.48 |
| Average Database Disk Write Latency (ms) | 5.10 |
| Transaction Log I/O | |
| Log Disks Writes/sec | 4,053.44 |
| Average Log Disk Write Latency (ms) | 2.52 |
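The aggregate figures can be recomputed from the per-server metrics as a consistency check. The Python sketch below sums the throughput columns and takes a simple unweighted mean of the latencies; the unweighted mean is an assumption about how the published averages were derived. Because the inputs are already rounded to two decimals, the recomputed values may differ from the published ones in the last digit.

```python
from statistics import mean

# Per-server values copied from the Individual Server Metrics table:
# (db reads/s, db writes/s, db read ms, db write ms, log writes/s, log write ms)
PER_SERVER = {
    "EX01": (7060.64, 4448.16, 1.51, 5.13, 1003.47, 2.58),
    "EX02": (7049.51, 4502.34, 1.51, 5.05, 1015.28, 2.51),
    "EX03": (7095.86, 4588.35, 1.47, 4.99, 1018.85, 2.47),
    "EX04": (7193.28, 4641.09, 1.44, 5.23, 1015.86, 2.51),
}


def aggregate(per_server):
    """Sum throughput across servers and average the latencies (unweighted)."""
    rows = list(per_server.values())
    reads = sum(row[0] for row in rows)
    writes = sum(row[1] for row in rows)
    return {
        "db_transfers_per_sec": reads + writes,
        "db_reads_per_sec": reads,
        "db_writes_per_sec": writes,
        "avg_db_read_ms": mean(row[2] for row in rows),
        "avg_db_write_ms": mean(row[3] for row in rows),
        "log_writes_per_sec": sum(row[4] for row in rows),
        "avg_log_write_ms": mean(row[5] for row in rows),
    }


totals = aggregate(PER_SERVER)
for name, value in totals.items():
    print(f"{name}: {value:.2f}")
```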

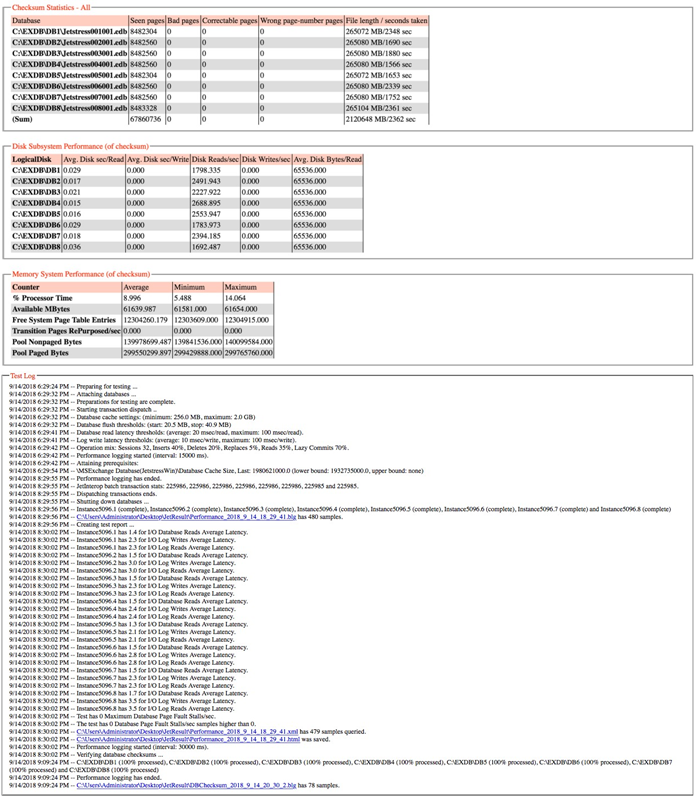

Database Backup/Recovery Performance

There are two test reports in this section. The first measures the sequential read rate of the database files, and the second measures the recovery/replay performance (playing transaction logs into the database).

Database Read-only Performance

This test measures the maximum rate at which databases can be backed up through the Volume Shadow Copy Service (VSS). The following table shows the average rate for a single database file and the aggregated bandwidth.

| Metric | Value |
| --- | --- |
| MB read/sec per database | 213.86 |
| MB read/sec total per server (8 databases) | 1,710.84 |
| MB read/sec total across all servers (4 servers) | 6,843.32 |
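The measured read rate gives a rough feel for backup windows. The Python sketch below estimates how long a sequential read of one database would take at the per-database rate. It is a back-of-the-envelope estimate under stated assumptions: the database is assumed to fill the entire 320GB disk, 1GB is taken as 1,024MB, and the rate is assumed to hold for the whole read. None of these assumptions comes from the test report, and the function name is illustrative.

```python
def backup_seconds(database_gb: float, mb_per_sec: float, mb_per_gb: int = 1024) -> float:
    """Estimated time to read a database sequentially at a constant rate."""
    return database_gb * mb_per_gb / mb_per_sec


PER_DATABASE_MB_PER_SEC = 213.86  # from the table above
DATABASE_GB = 320                 # assumption: database fills the whole data disk

seconds = backup_seconds(DATABASE_GB, PER_DATABASE_MB_PER_SEC)
print(f"Estimated read time per database: {seconds / 60:.1f} minutes")
```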

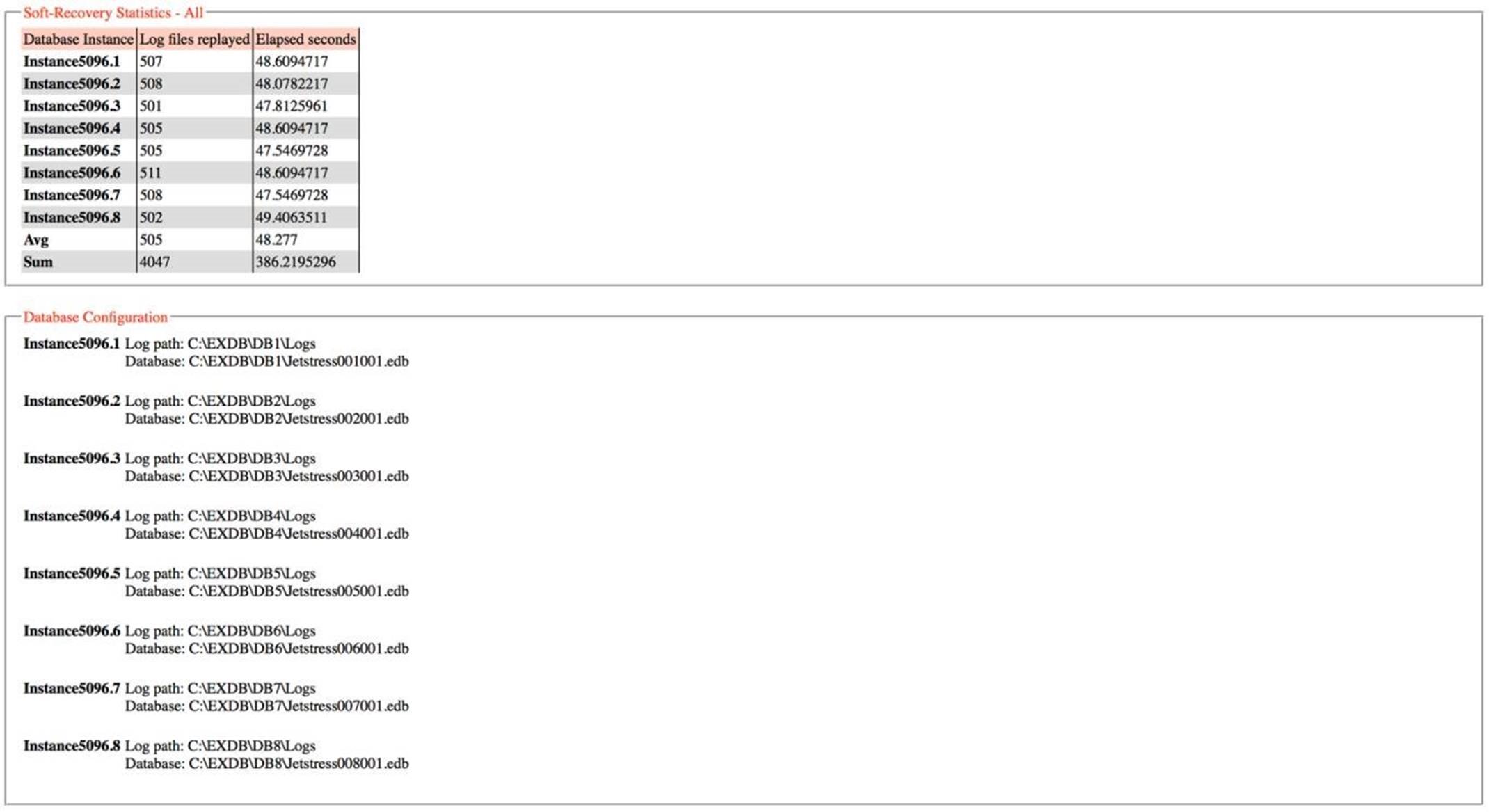

Transaction Log Recovery/Replay Performance

The test is to measure the maximum rate at which the log files can be played against the databases. The following table shows the average rate for more than 500 log files played in a single storage group. Each log file is 1 MB in size.

| Metric | Value |
| --- | --- |
| Average time to play one Log file (sec) | 0.095 |

Conclusion

This section provides the solution summary.

This document was developed by storage solution providers and reviewed by the Microsoft Exchange Product team. The test results presented are based on the tests introduced in the ESRP test framework. Customers should not quote the data directly for their pre-deployment verification. It is still necessary to validate the storage design for each specific customer environment.

The ESRP program is not designed to be a benchmarking program; the tests are not designed to get the maximum throughput for a given solution. Rather, the program focuses on producing recommendations from Fujitsu and VMware for the Exchange application. Therefore, the data presented in this document should not be used for direct comparisons among solutions.

In conclusion, the four-node FUJITSU PRIMEFLEX for VMware vSAN cluster, in an all-flash configuration with a single disk group per node (one cache SSD plus four capacity SSDs), achieved over 46,000 aggregated Exchange 2016 transactional IOPS with only 1.48 ms read latency and 5.10 ms write latency. By simple calculation, this is more than 4.6 IOPS per user mailbox, which equals 12.8 times the targeted performance profile (0.36 IOPS per user mailbox including 20 percent headroom). In addition, the performance test generated more than 4,000 log writes per second while keeping the average log write latency at 2.52 milliseconds.

The achieved backup performance for Exchange 2016 databases is over 210 MBps per database and over 1,700 MBps aggregated per mailbox server. A single log file replay in the soft recovery test completes in less than 0.1 second.
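The per-mailbox calculation in the conclusion can be reproduced as follows. This Python sketch assumes that the aggregate IOPS figure is divided by the 10,000 mailboxes hosted on the four tested servers (4 x 2,500), which is inferred from the tested deployment tables rather than stated in the conclusion. With unrounded inputs the ratio comes out at roughly 12.9; using the rounded-down figure of 4.6 IOPS per mailbox gives the 12.8 quoted in the text.

```python
AGGREGATE_DB_IOPS = 46579.22    # aggregate database disk transfers/sec
TESTED_SERVERS = 4
MAILBOXES_PER_SERVER = 2500
TARGET_IOPS_PER_MAILBOX = 0.36  # includes 20 percent headroom

tested_mailboxes = TESTED_SERVERS * MAILBOXES_PER_SERVER
achieved_per_mailbox = AGGREGATE_DB_IOPS / tested_mailboxes
ratio = achieved_per_mailbox / TARGET_IOPS_PER_MAILBOX
ratio_from_rounded = 4.6 / TARGET_IOPS_PER_MAILBOX

print(f"Achieved IOPS per mailbox: {achieved_per_mailbox:.2f}")
print(f"Multiple of target (unrounded inputs): {ratio:.1f}")
print(f"Multiple of target (using 4.6): {ratio_from_rounded:.1f}")
```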

Appendix

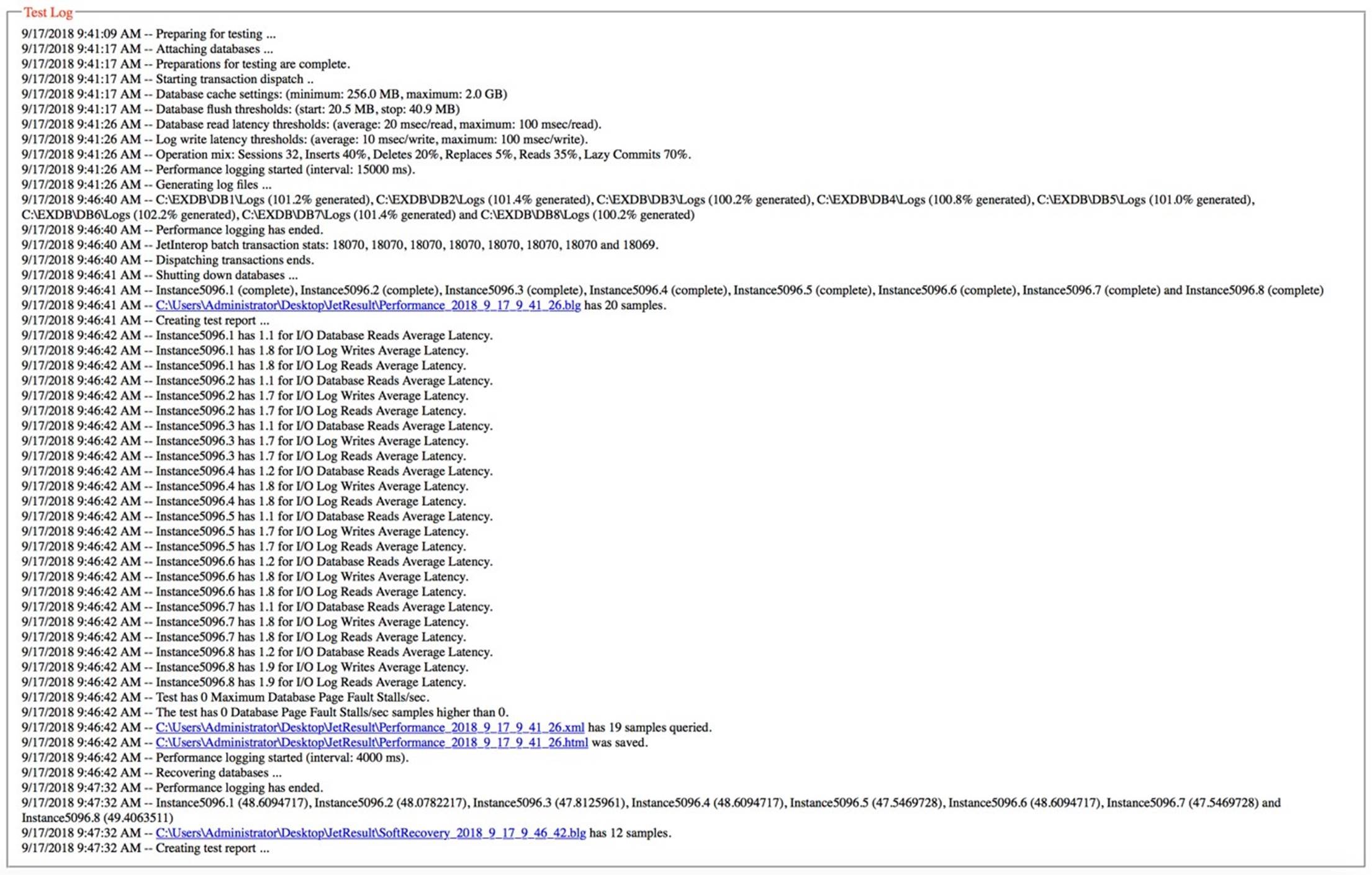

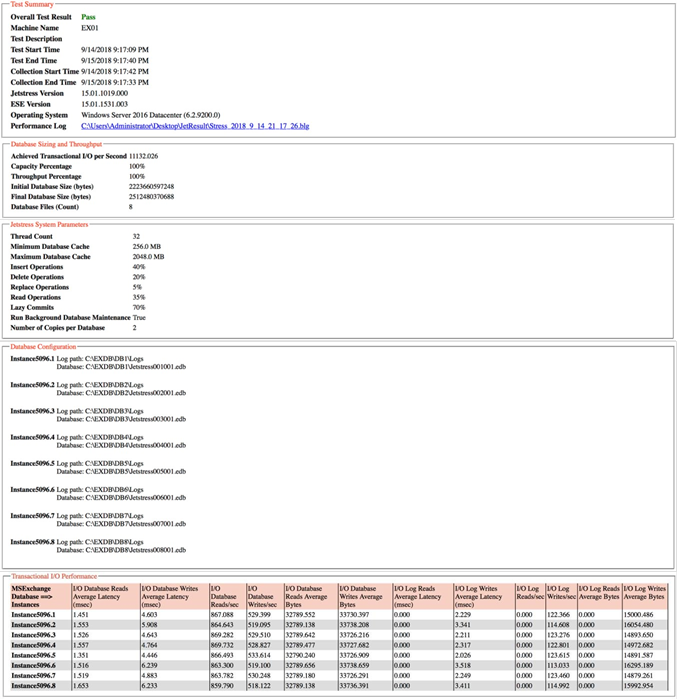

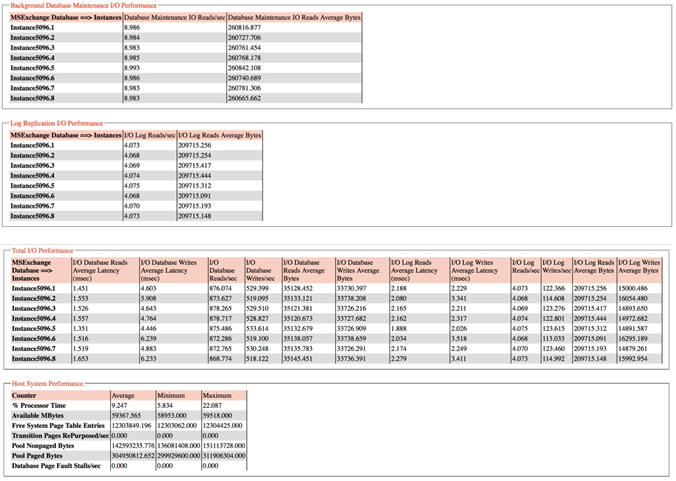

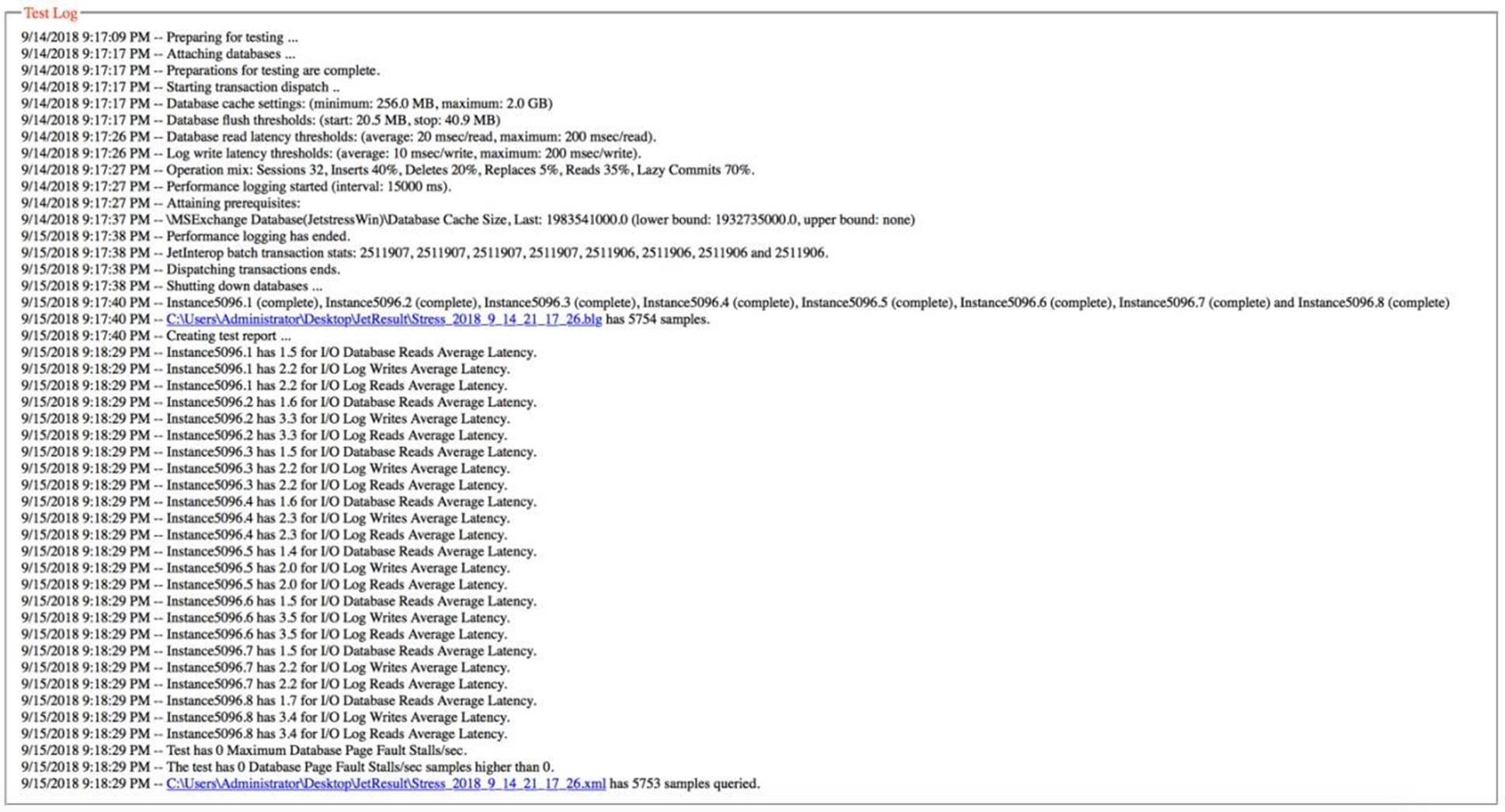

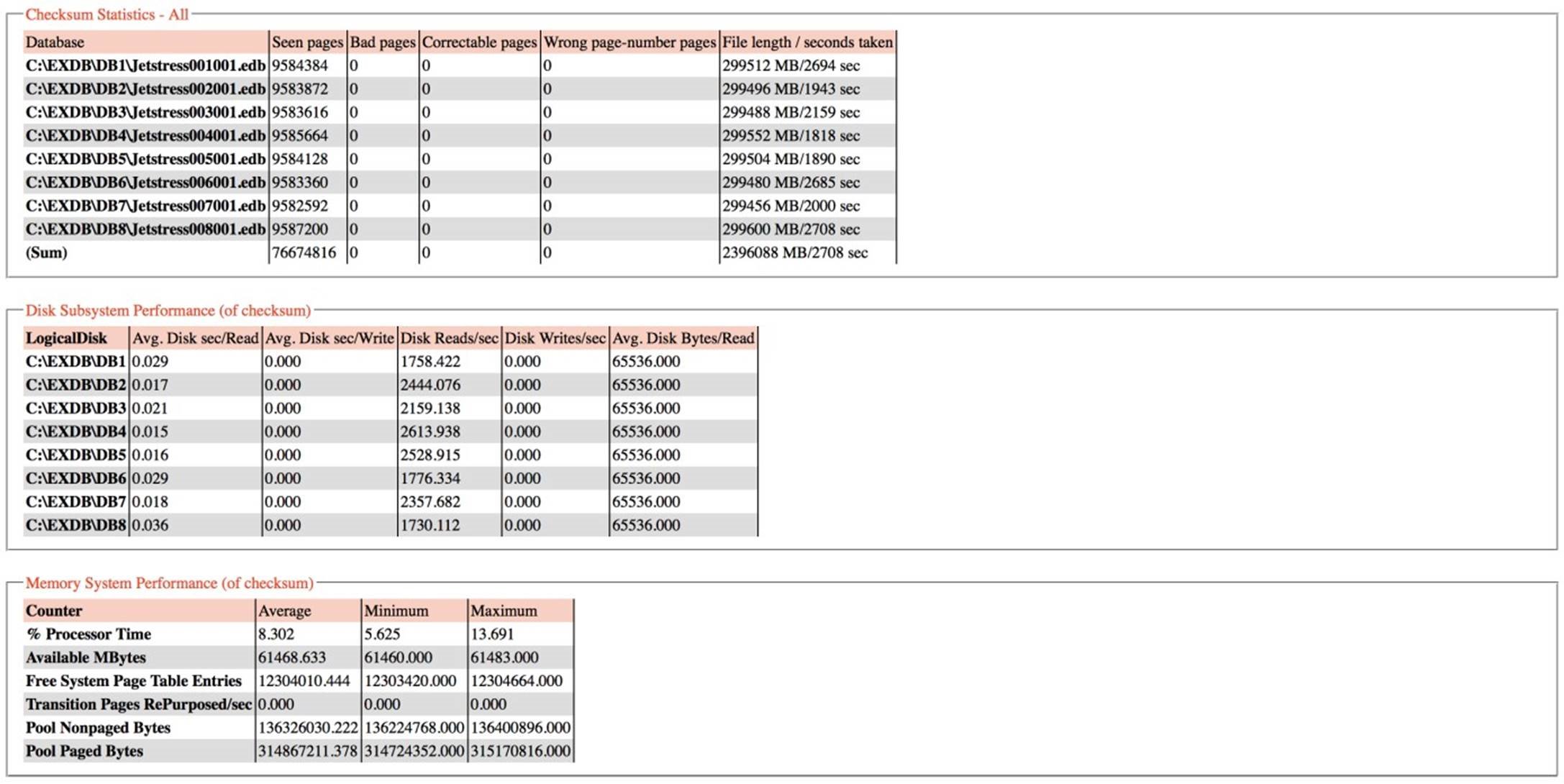

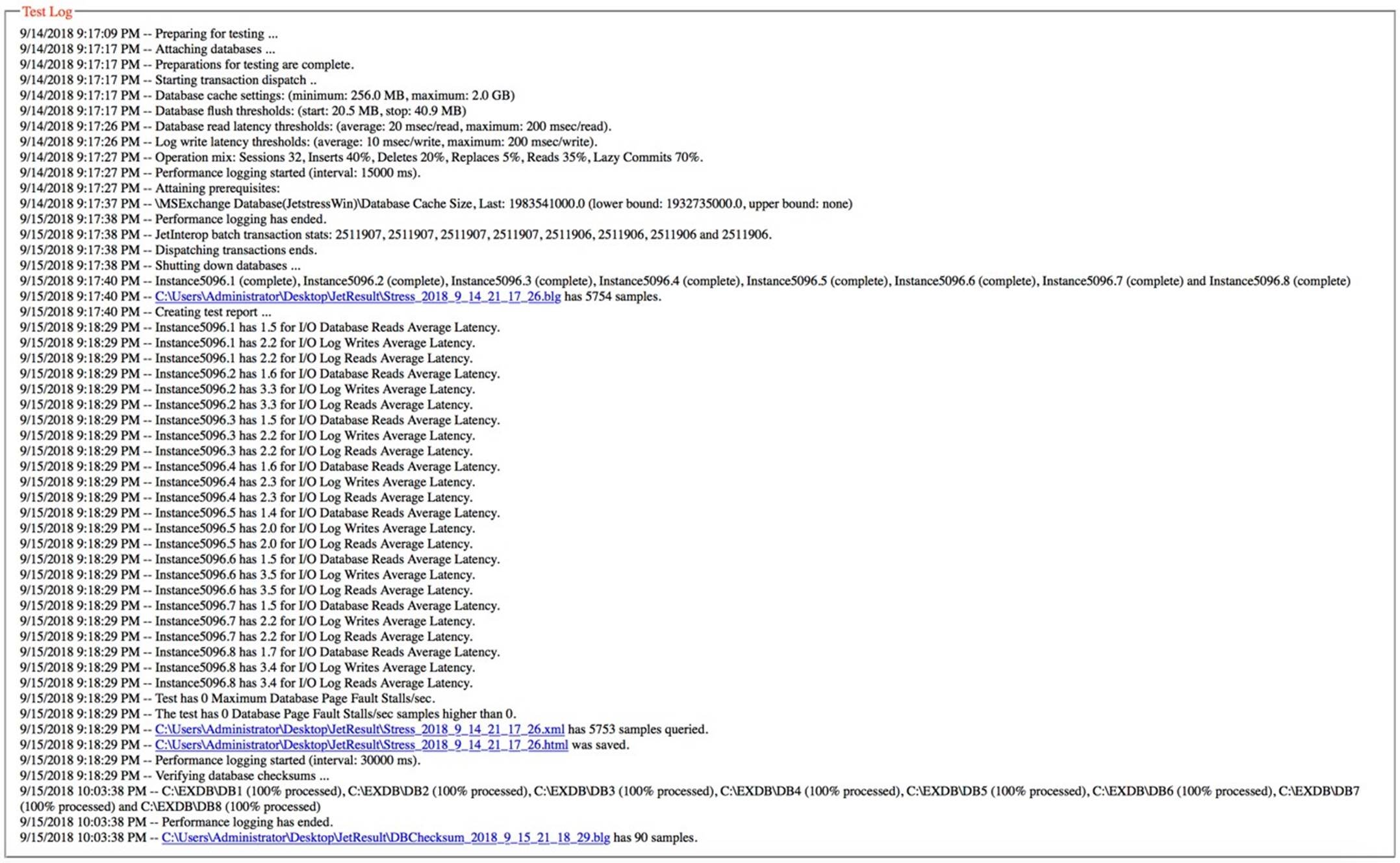

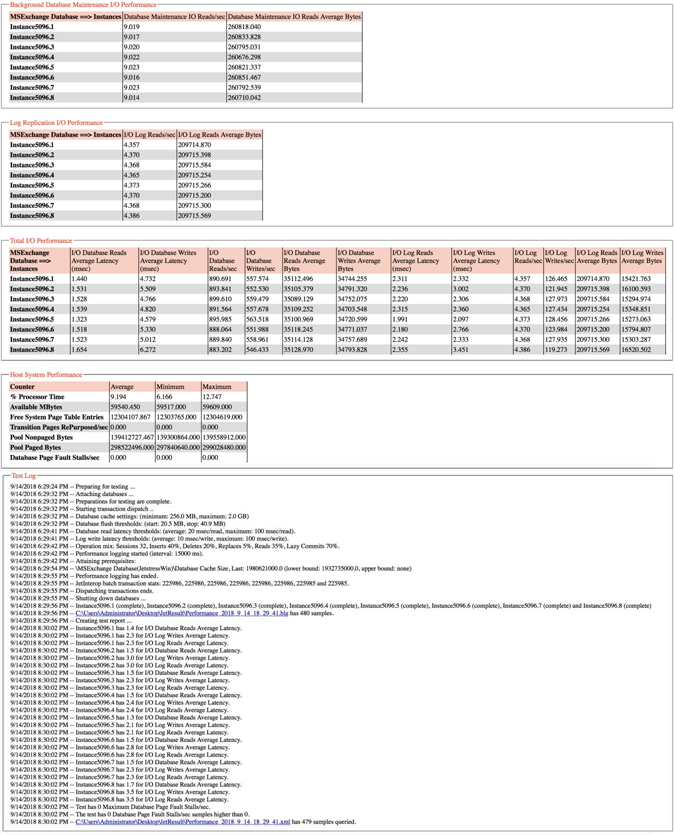

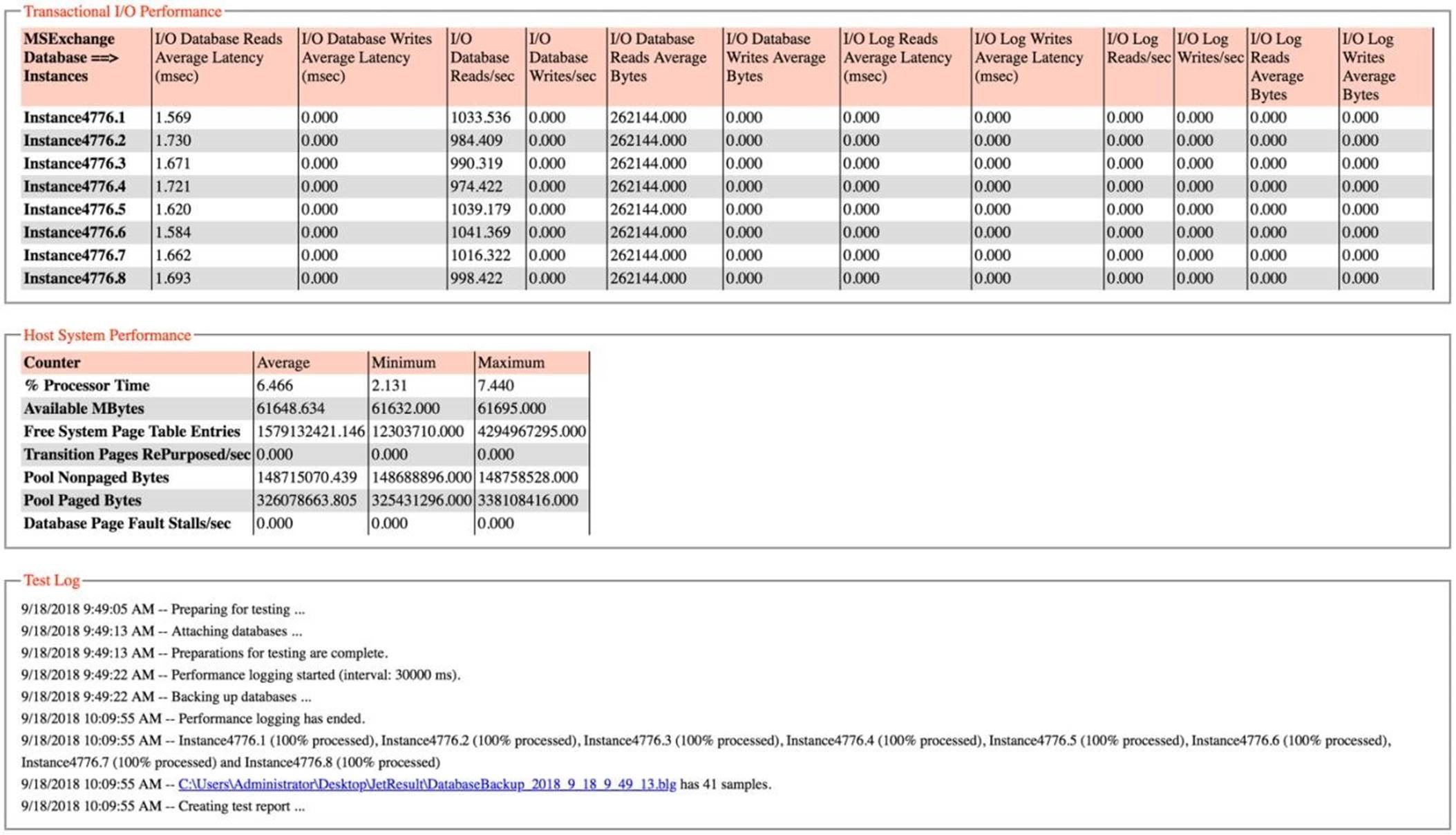

Appendix A—Stress Test Result Report

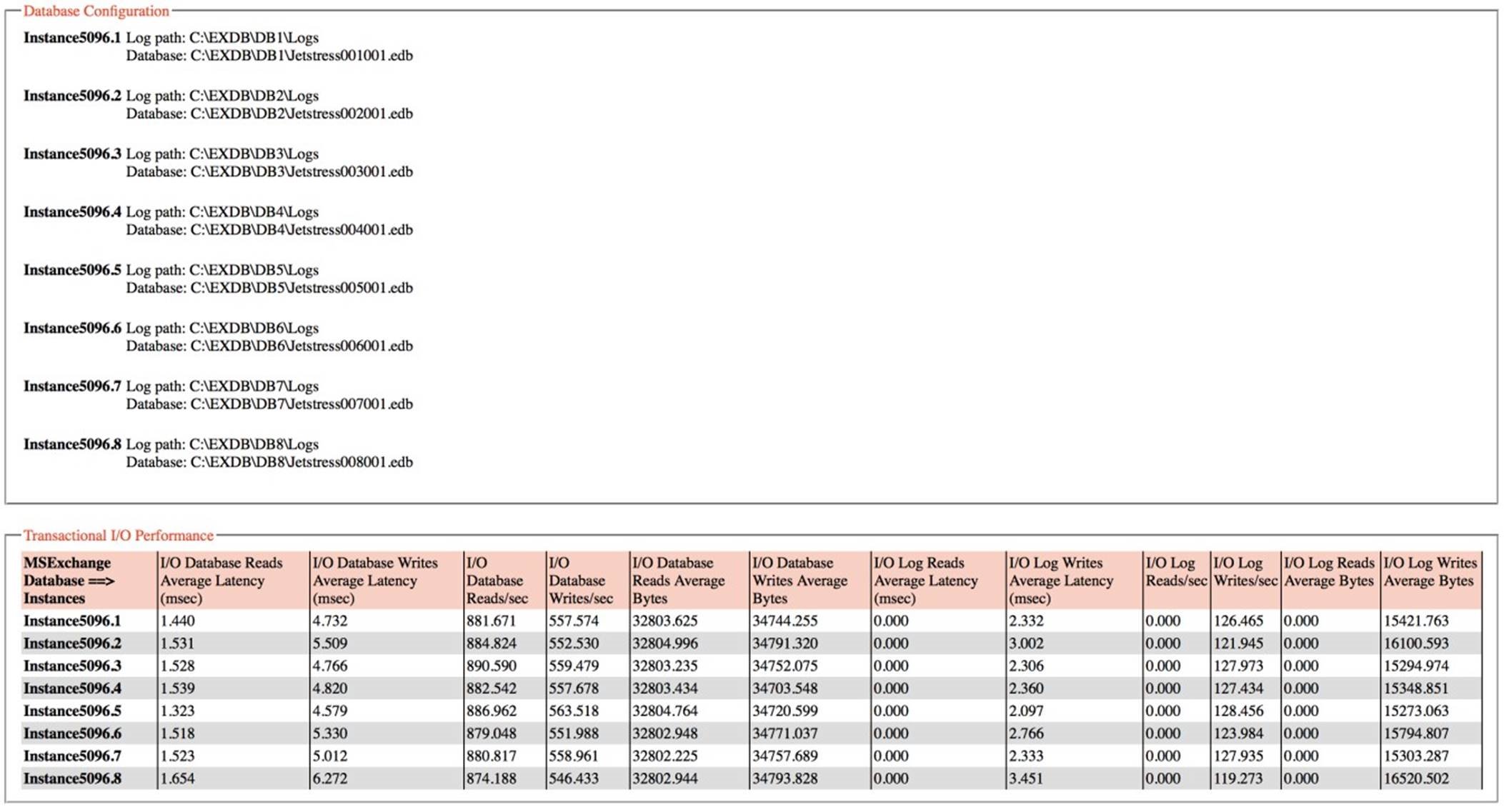

This section provides the 24-hour stress test results on one of the test virtual machines. All the other test results are comparable to one another.

Test Result Report

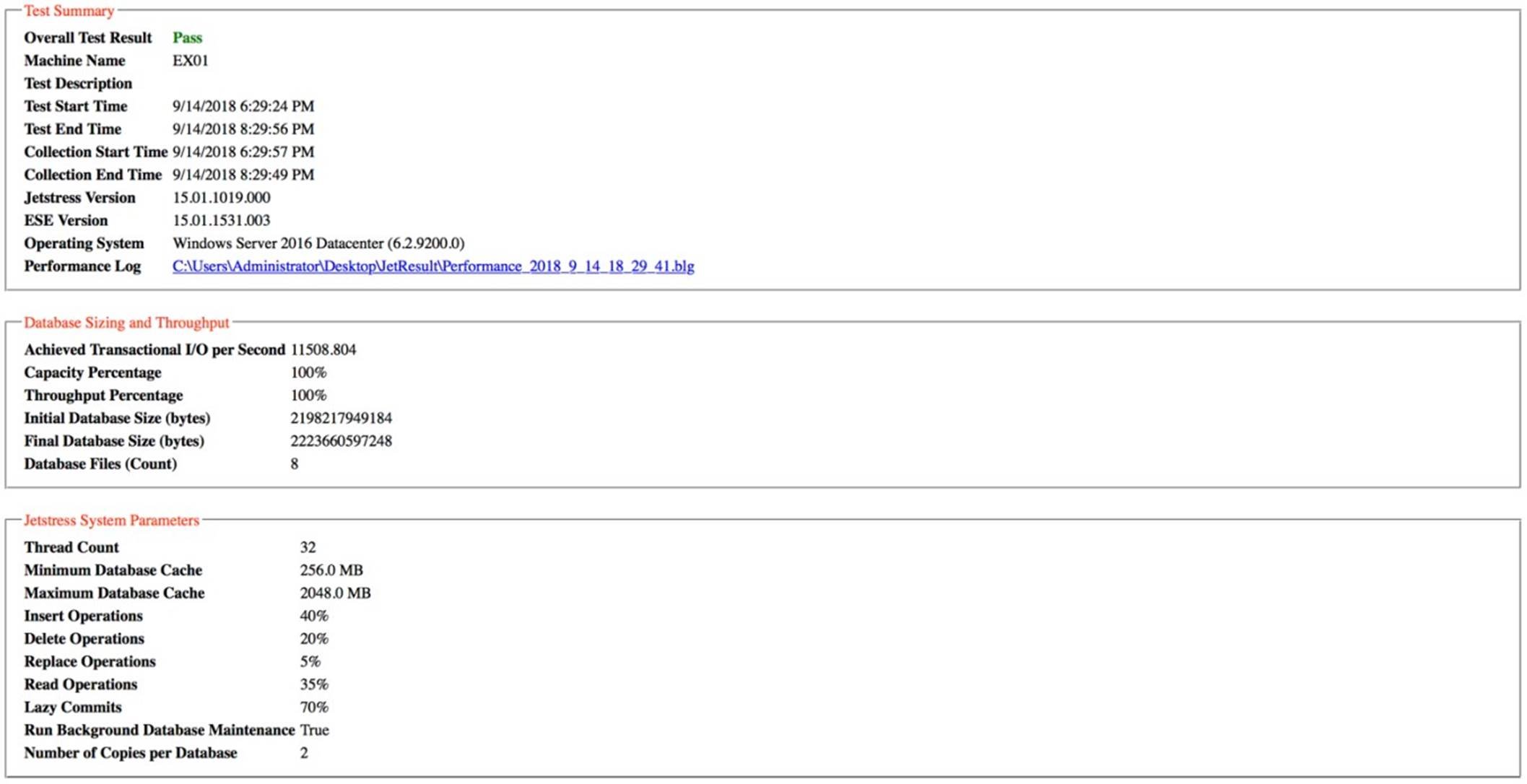

Appendix B—Performance Test Result Report

This section provides the 2-hour performance test results on one of the test virtual machines. All the other test results are comparable to one another.

Appendix C—Database Backup Test Result Report

This section provides the database backup test results on one of the test virtual machines. All the other test results are comparable to one another.

Appendix D—Soft Recovery Test Result Report