Multitenancy in vSAN

Introduction

Service providers or any organization that provides resources to more than one customer or tenant have a unique subset of needs that extend beyond the typical concerns of data center design and operations. Is a tenant's data isolated to meet their security and prioritization criteria? And what does "isolated" even mean in the world of shared resources? Can resources be managed and prioritized in a manner that reflects the different needs of these customers? These are non-trivial matters that often do not have a simple yes or no answer, but rather, a degree of acceptability that depends on requirements.

It is not uncommon to hear questions asking how vSAN handles multitenancy in comparison to environments built in a three-tier architecture using traditional storage arrays. Both approaches can serve as viable options for multi-tenant environments. The information below aims to help you better understand the similarities and differences between the two approaches.

The Trade-offs with Resource Consolidation

The sharing of resources is how most environments achieve efficiency, where the cost savings can be passed on to the business. Service providers and multi-tenant environments use this same method of resource consolidation, and through economies of scale can offer tremendous value to their customers. Virtualization is used to share computational resources across the data center, while shared storage is used to share storage resources - typically through storage arrays connecting to hypervisor clusters, or through hyperconverged clusters that provide their own storage resources through the hosts that make up the cluster.

The value gained by sharing resources may conflict with three topics that are important to service providers and their tenants:

- Management of resources. This relates to the allocation or partitioning of resources and capabilities to each tenant, whether it be compute, memory, or the focus of this post: Storage.

- Security of resources. This relates to the safeguards in place to meet the security objectives of the tenant and their data. Security goals and thresholds can be different based on the customer.

- Prioritization of resources. In a shared infrastructure, multiple tenants will inevitably need the same resources, and perhaps at the same time. Prioritization can have an impact on performance if contention is high enough.

Typically, the more one attempts to ensure security, management, and prioritization for their tenants, the less sharing of resources can occur. For example, if a tenant has data security requirements, will it be sufficient to have their data isolated on its own storage target? And will a logical boundary on shared storage serving up multiple tenants suffice, or will the data need to be physically separate? Let's suppose that this data must reside in a physically separate location. How will the data be transmitted to and from the hosts? Will it be using the shared network fabric? And if so, will this meet the security and priority requirements of the tenant?

As a result, multi-tenancy is often a balance of determining the appropriate level of acceptability to take advantage of the benefits that a service provider can give. It involves various shades of gray, with the correct way simply being what is acceptable to both the provider and the tenant.

Let's look at how vSAN compares to traditional storage on this topic - sorting through the similarities and differences. Note that storage arrays can serve up data in several ways. For this topic, we'll focus on block-based storage using VMFS. For brevity, NFS-based storage and vVols are not discussed here.

Multitenancy with Storage Arrays

Let's look at how shared storage using storage arrays achieves multi-tenancy.

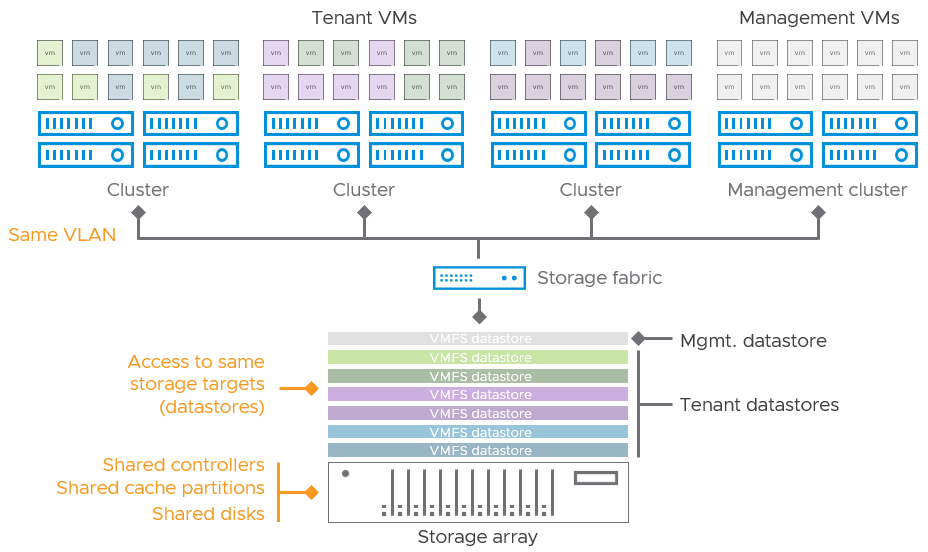

Figure 1. Simplified illustration of multiple tenants using shared storage.

Management of resources

Storage arrays typically provide a degree of multi-tenancy by dedicating specific datastores for a given tenant. The datastore is the logical entity in which the clustered file system (VMFS) provides the capacity to house VMs, and is connected to all hosts in one or more clusters. While not originally intended for this purpose, service providers tend to use a datastore as a mechanism of separation for capacity allocation and management, where a datastore is only used by a given tenant. It serves as an easy, manageable upper boundary of capacity that they feel very comfortable with. In reality, the datastore living on the array is thin provisioned, offering the provider an opportunity to overcommit resources.
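The overcommitment that thin provisioning allows can be illustrated with a little arithmetic. The following is a minimal sketch only: the tenant names, capacities, and the `Datastore` class are hypothetical values chosen for illustration, not figures from any real array.

```python
from dataclasses import dataclass


@dataclass
class Datastore:
    tenant: str
    provisioned_tib: float  # capacity presented to the tenant (hypothetical)
    used_tib: float         # capacity actually written (hypothetical)


def overcommit_ratio(datastores, physical_tib):
    """Total provisioned capacity divided by the physical capacity behind it."""
    return sum(d.provisioned_tib for d in datastores) / physical_tib


def physical_utilization(datastores, physical_tib):
    """Fraction of physical capacity consumed by data actually written."""
    return sum(d.used_tib for d in datastores) / physical_tib


# Three tenant datastores carved from one array with 50 TiB of physical capacity.
datastores = [
    Datastore("tenant-a", provisioned_tib=40.0, used_tib=12.0),
    Datastore("tenant-b", provisioned_tib=40.0, used_tib=20.0),
    Datastore("tenant-c", provisioned_tib=20.0, used_tib=8.0),
]
PHYSICAL_TIB = 50.0

ratio = overcommit_ratio(datastores, PHYSICAL_TIB)
utilization = physical_utilization(datastores, PHYSICAL_TIB)
print(f"overcommit ratio: {ratio:.1f}x, physical utilization: {utilization:.0%}")
```

In this hypothetical case each tenant sees a fixed upper boundary, while the provider has promised twice the capacity that physically exists and must watch actual utilization rather than the provisioned totals.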

While capacity may be allocated using datastores, the backend data services are per array. For example, since storage arrays provide data services such as encryption as part of the array, an environment that has a mix of array vendors may not be able to provide the same capabilities across the various storage targets. This can introduce design and operational challenges for the service provider.

Security of resources

While a datastore can provide a boundary for capacity, it doesn't necessarily provide absolute levels of security. It is common for today's arrays to sprinkle data across various devices in their enclosure next to other data in other datastores. The boundary is only logical. The three-tier architecture also does not treat the datastore as an exclusive resource of the cluster, meaning that it can be connected to other hosts outside of a cluster. While VMFS helps prevent simultaneous access, it doesn't protect against nefarious access. Hosts must use masking or ACLs to limit access, and this typically only restricts access outside of a cluster. The storage fabric used likely serves many storage targets, which means storage traffic serving multiple tenants is traversing the same fabric. Some storage arrays may use Data-at-Rest Encryption, but the I/O traversing the storage fabric is often unencrypted.

Beyond shared VMFS volumes, storage arrays present another challenge in data isolation. Since a storage array depends heavily on data consolidation for good TCO, inevitably, more and more customer data from multiple tenants, and possibly even management data, is stored in the same array enclosure. As a result, discrete customer data will be sharing cache devices, target ports, and network VLANs, which may not meet the isolation requirements of the tenant. For the service provider, failure domains increase, as do vulnerability domains in the event of a compromise in the storage array management plane.

Prioritization of resources

For three-tier architectures, prioritization of resources can be a challenge because it includes factors that live outside of the management domain of the hypervisor, namely the network fabric and the storage array. For the hypervisor, VMware Storage I/O Control (SIOC) is a useful method of providing relative priority of storage resources to give I/O from more important VMs higher priority than I/O from less important VMs. But the storage fabric itself has little understanding of priority and order. The storage array serving VMs living in multiple datastores will in many ways treat I/Os coming in from multiple HBAs as arbitrary. The array will process the I/O as quickly as possible, but it does not understand the priority of I/O requests sitting in the queues of its controllers, or the data sets associated with individual VMs.

Many may find logical separation sufficient, but it does have its limits with the management, security, and prioritization noted above. One way to mitigate some of the challenges above is to use dedicated compute clusters and a dedicated array for a cluster. While this can be a workable solution for larger tenants, it is likely cost-prohibitive for smaller tenants as the cost of dedicated storage arrays and switch fabrics would need to be accounted for. The 1-to-1 model doesn't scale well for the provider in its financial and operational costs.

Multitenancy with vSAN

vSAN shares many similarities to a three-tier architecture when used for multi-tenancy. But vSAN provides storage resources in a unique way, which results in some noteworthy differences.

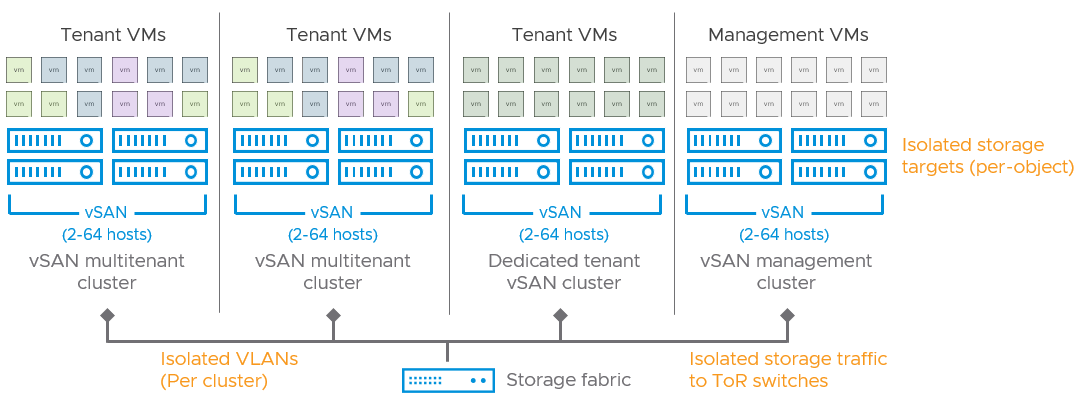

Figure 2. Simplified illustration of multiple tenants using vSAN.

Management of resources

Unlike traditional storage, the storage resources provided by vSAN are rendered as a single datastore per cluster. Thus, using a datastore as a capacity-management or isolation construct isn't available for vSAN. What is unique to vSAN is that vSAN clusters provide storage as a resource of the cluster, similar to memory and compute resources. Because a cluster can be sized anywhere from 2 data hosts to 64 hosts, a tenant could have a cluster tailored to suit their specific capacity needs. If you are serving multiple tenants in one cluster, you can monitor VM consumption by using vCenter Server or its APIs.
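When several tenants share one cluster, per-VM consumption figures can be rolled up by tenant for reporting. The sketch below assumes the per-VM records have already been exported from vCenter Server by whatever means you use; the record layout, the `tenant` tag, and the VM names are hypothetical and do not represent a vCenter API response.

```python
from collections import defaultdict


def usage_by_tenant(vm_records):
    """Sum used capacity (GiB) per tenant from a list of per-VM records."""
    totals = defaultdict(float)
    for record in vm_records:
        totals[record["tenant"]] += record["used_gib"]
    return dict(totals)


# Hypothetical export: one record per VM, tagged with the owning tenant.
vm_records = [
    {"vm": "web-01", "tenant": "tenant-a", "used_gib": 120.0},
    {"vm": "db-01", "tenant": "tenant-a", "used_gib": 800.0},
    {"vm": "app-01", "tenant": "tenant-b", "used_gib": 250.0},
]

totals = usage_by_tenant(vm_records)
for tenant, used in sorted(totals.items()):
    print(f"{tenant}: {used:.0f} GiB")
```

The same roll-up could feed a show-back or charge-back report, provided the tagging of VMs to tenants is kept accurate.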

Since vSAN provides its data services as a part of the software stack, capabilities such as Data-at-Rest encryption, Data-in-Transit encryption, and file services can be provided by any cluster that is running vSAN. This can provide a level of ubiquity across vSAN-powered workloads, or be tailored to the needs of the service provider and its tenants.

Security of resources

Much like most storage arrays do within their enclosures, vSAN will sprinkle data around the collection of storage devices that make up a vSAN datastore. But unlike VMFS, vSAN remains the arbiter of access to this data. VMs consist of objects, and the owner of each object in vSAN's distributed object manager (DOM) knows which hosts have permission to access that data at any given time. Instead of the boundary of data access being a datastore, the boundary of access is the VM object(s), which is similar to the construct of vVols. For more information, see the post vSAN Objects and Components Revisited.

Since vSAN provides storage as a cluster resource, service providers can easily “scale down” the isolation of resources to suit the needs of the tenant. With vSAN, small, isolated clusters can be built to meet a tenant's security requirements, which avoids the challenges of a traditional array.

Prioritization of resources

vSAN's architecture shines as requirements of physical separation or prioritization become more strict. Whereas a storage array is an indivisible monolith serving storage to potentially many clusters, a standard vSAN cluster provides storage to only the hosts that comprise the cluster. For example, a traditional 8-host vSphere cluster providing resources to two tenants would use a traditional storage array. But the backing storage array may serve many more clusters, and cannot distinguish or prioritize the traffic. Using vSAN, one could have two 4-host clusters for these two distinct tenants, and all storage resource demands would remain exclusive to the given cluster. The clusters may or may not share the same Top-of-Rack (ToR) switches, and could use VLANs to logically separate the traffic. For more information on cluster design in vSAN, see vSAN Cluster Design - Large Clusters Versus Small Clusters.

User customizable prioritization of I/O is somewhat limited within a cluster, as vSAN strives to serve requests at high levels of performance. However, vSAN does have tools to help with meeting performance SLAs.

- IOPS limits. vSAN can place limits of I/O commands per second on specified VMs using a storage policy, which will help prevent noisy neighbor effects. See Using Workload Limiting Policies (IOPS Limits) on vSAN-Powered Workloads for more information.

- Fairness Scheduler for vSAN traffic. vSAN was designed to ensure the appropriate balance of resource usage under times of contention. vSAN uses its own I/O scheduler to identify and control the various types of vSAN traffic. The vSAN Original Storage Architecture (OSA) uses a technique called "Adaptive Resync" that helps ensure that vSAN VM traffic has no less than 80% of the host. The vSAN 8 Express Storage Architecture (ESA) adds to this capability by adaptively shaping vSAN network traffic to ensure the appropriate balance of network resource usage under times of contention.

- Capacity Prioritization. The prioritization of capacity can be achieved through an Object Space Reservations (OSR) storage policy rule. Specific VMs will be guaranteed capacity resources over other VMs that are not prioritized.
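To see why a per-VM IOPS limit dampens noisy neighbor effects, consider a generic token-bucket rate limiter. This is a conceptual sketch only and is not how vSAN implements IOPS limits internally; the `IopsLimiter` class, the limit of 100, and the burst of 250 requests are all assumptions made for illustration.

```python
class IopsLimiter:
    """Generic token bucket: admits at most `limit_per_sec` I/Os per second.

    Illustrative only; not vSAN's internal mechanism.
    """

    def __init__(self, limit_per_sec):
        self.limit = float(limit_per_sec)
        self.tokens = float(limit_per_sec)  # start with a full bucket
        self.last = 0.0                     # time of the previous request

    def allow(self, now):
        """Return True if an I/O arriving at time `now` (seconds) is admitted."""
        elapsed = now - self.last
        self.last = now
        # Refill in proportion to elapsed time, capped at the bucket size.
        self.tokens = min(self.limit, self.tokens + elapsed * self.limit)
        if self.tokens >= 1.0:
            self.tokens -= 1.0
            return True
        return False


# A hypothetical noisy VM issues 250 I/Os in the same instant, twice.
limiter = IopsLimiter(limit_per_sec=100)
admitted_first = sum(limiter.allow(0.0) for _ in range(250))
admitted_second = sum(limiter.allow(1.0) for _ in range(250))
print(admitted_first, admitted_second)
```

However large the burst, the limited VM cannot consume more than its configured rate, which leaves the remaining capacity of the cluster available to other tenants' VMs.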

The flexibility of vSAN allows the architecture to provide services to other clusters as well. HCI Mesh provides the ability to borrow storage resources and the respective data services it provides from one vSAN cluster to another. It can even be used to provide storage resources to traditional vSphere clusters. In either case, HCI Mesh can be a flexible way to run highly demanding Tier-1 applications using existing cluster resources.

Recommendation: Service providers are often interested in reporting as a way to accurately charge their customers for resource usage. vSAN capacity reporting of discrete VMs can be done in vCenter Server, or via API. Since vSAN reports capacity usage a bit differently than storage arrays do, the following blog posts are recommended to help you better understand these differences: Demystifying Capacity Reporting in vSAN and The Importance of Space Reclamation for Data Usage Reporting in vSAN.

Additional Opportunities when using vSAN in Multitenancy Environments

Some techniques in vSAN can be used by service providers to help with resource management that can be financially beneficial to the providers, the tenants, or both. For example:

- Provide a 'premium performance' offering. The Express Storage Architecture (ESA) in vSAN 8 can offer new levels of performance and efficiency that can run demanding workloads with lower latency and improved performance consistency while driving down TCO to lower than vSAN clusters using the original storage architecture (OSA). For more information, see the post: An Introduction to the vSAN Express Storage Architecture.

- Reduce costs of service with deterministic space efficiency. With the vSAN ESA in vSAN 8, service providers can use RAID-5 or RAID-6 erasure coding without any compromise in performance. This can allow service providers to store tenant data resiliently but in a more space-efficient way. These savings could drive down costs that can help the provider, and their customers. For more information, see the post: RAID-5/6 with the Performance of RAID-1 using the vSAN Express Storage Architecture. vSAN clusters as small as three hosts can now take advantage of space-efficient erasure coding, as described in the post: Adaptive RAID-5 Erasure coding with the Express Storage Architecture in vSAN 8.

- Reduce costs of service with opportunistic space efficiency. With the vSAN ESA in vSAN 8, service providers can use data compression on a per VM basis. While this opportunistic space efficiency feature is not guaranteed, pricing models could factor this in, or it can be used by the provider to reclaim costs. For more information, see the post: vSAN 8 Compression - Express Storage Architecture.

- Reduce costs of service with storage reclamation. Most storage systems (including vSAN) use thin provisioning to avoid the hard allocation of capacity until it is necessary. TRIM/UNMAP can be used in vSAN (ESA or OSA) to actively reclaim capacity no longer used by tenant VMs. This can also provide the benefit of more accurate reporting of capacity usage for customer show-back/charge-back. For more information, see the post: The Importance of Space Reclamation for Data Usage Reporting in vSAN.

- Offer guaranteed capacity with reservations. With vSAN (ESA and OSA), Object Space Reservations (OSR) can be applied using a storage policy as a capacity management technique to ensure that the VM(s) using OSR are guaranteed their capacity. For more information on Object Space Reservations, see the post: Demystifying Capacity Reporting in vSAN.

- Offer Data-at-Rest and Data-in-Transit encryption. This is a cluster-level service in vSAN (ESA and OSA) that can help address data security requirements. If a service provider does not want to run vSAN encryption services on all their clusters, they can easily enable Data-at-Rest encryption on one cluster and have a tenant's VM use this encrypted remote vSAN datastore through HCI Mesh. For more information on the new efficiencies brought to vSAN encryption in vSAN 8 using the ESA, see the post: Cluster Level Encryption with the vSAN Express Storage Architecture.
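The space savings from erasure coding mentioned in the list above come down to simple arithmetic: raw capacity consumed is usable capacity multiplied by (data + parity) / data. The sketch below is a rough illustration; the stripe layouts shown (a two-copy mirror, 2+1 and 4+1 for RAID-5, 4+2 for RAID-6) and the 100 TiB figure are assumptions for the example, and the calculation ignores metadata, slack space, and any compression.

```python
def raw_capacity_needed(usable_tib, data, parity):
    """Raw capacity consumed to store `usable_tib` with a data+parity layout."""
    return usable_tib * (data + parity) / data


# Illustrative layouts; a mirror is modeled as 1 data copy plus 1 extra copy.
layouts = {
    "RAID-1 (mirror, 2 copies)": (1, 1),
    "RAID-5 (2+1)": (2, 1),
    "RAID-5 (4+1)": (4, 1),
    "RAID-6 (4+2)": (4, 2),
}

TENANT_DATA_TIB = 100.0  # hypothetical amount of tenant data
results = {
    name: raw_capacity_needed(TENANT_DATA_TIB, data, parity)
    for name, (data, parity) in layouts.items()
}
for name, raw in results.items():
    print(f"{name}: {raw:.0f} TiB raw for {TENANT_DATA_TIB:.0f} TiB of data")
```

Under these assumptions, moving the same tenant data from a mirror to a 4+1 layout reduces raw consumption from 200 TiB to 125 TiB, which is the kind of saving a provider could reflect in its pricing.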

The vSAN Express Storage Architecture in vSAN 8 also introduces a new scalable, high-performance native snapshot engine that provides snapshot capabilities that are quite similar to what is offered in array-based snapshotting by reputable vendors. For more information, see the post: Scalable, High-Performance Native Snapshots in the vSAN Express Storage Architecture.

Summary

Both VMware vSAN and vSphere using a traditional three-tier architecture with storage arrays can serve as great solutions for multi-tenancy. For service providers, vSAN’s flexibility in its architecture can mean that tenant requirements can be met more easily. vSAN’s flexible architecture can also provide capabilities that would otherwise be cost-prohibitive in a three-tier architecture.

About the Author

Pete Koehler is a Staff Technical Marketing Architect at VMware. With a primary focus on vSAN, Pete covers topics such as design and sizing, operations, performance, troubleshooting, and integration with other products and platforms.