vSAN 6.7 U3 Proof of Concept Guide

vSAN 6.7u3Introduction

Decision-making choices for vSAN architecture.

Before You Start

Plan on testing a reasonable hardware configuration that resembles what you plan to use in production. Refer to the VMware vSAN Design and Sizing Guide for information on supported hardware configurations and considerations when deploying vSAN. Be sure the hardware you plan to use is listed on the VMware Compatibility Guide (VCG). If you're using a ReadyNode or VxRail, the hardware is guaranteed to be compatible with vSAN -however, BIOS updates, and firmware and device driver versions should be checked to make sure these aspects are updated according to the VCG. For vSAN software layer specifically, pay particular attention to the following areas of the VCG:

BIOS: Choose "Systems / Servers" from "What are you looking for" : http://www.vmware.com/resources/compatibility/search.php

Network cards: Choose "IO Devices" from "What are you looking for" : http://www.vmware.com/resources/compatibility/search.php?deviceCategory=io and select "Network" from "I/O Device Type" field.

vSAN Storage I/O controllers: Choose "vSAN" from "What are you looking for" : http://www.vmware.com/resources/compatibility/search.php?deviceCategory=vsan. Scroll past the inputs in "Step 2" and look for the link to "Build Your Own based on Certified Components" in "Step 3". Click that link. (The link executes javascript and cannot be linked to directly.) From the Build Your Own page, make sure "IO Controller" is selected in the "Search For:" field.

vSAN SSDs: Choose "vSAN" from "What are you looking for" : http://www.vmware.com/resources/compatibility/search.php?deviceCategory=vsan. Scroll past the inputs in "Step 2" and look for the link to "Build Your Own based on Certified Components". Click that link. From the Build Your Own page, select "SSD" from the "Search For:" field.

vSAN HDDs (Hybrid only) : Choose "vSAN" from "What are you looking for" : http://www.vmware.com/resources/compatibility/search.php?deviceCategory=vsan. Scroll past the inputs in "Step 2" and look for the link to "Build Your Own based on Certified Components". Click that link. From the Build Your Own page, select "HDD" from the "Search For:" field.

The following commands may come in handy to help identify firmware and drivers in ESXi and compare against the VCG:

- To list VID DID SVID SSID of a storage controller or network adapter:

vmkchdev -l | egrep 'vmnic|vmhba'

- To show which NIC driver is loaded:

esxcli network nic list

- To show which storage controller driver is loaded:

esxcli storage core adapter list

- To display a driver version information:

vmkload_mod -s <driver-name> | grep -i version

All-Flash or Hybrid

There are a number of additional considerations if you plan to deploy an all-flash vSAN solution:

- All-flash is available in vSAN since version 6.0.

- It requires a 10Gb Ethernet network for the vSAN traffic; it is not supported with 1Gb NICs.

- The maximum number of all-flash hosts in a single cluster is 64.

- Flash devices are used for both cache and capacity.

- Flash read cache reservation is not used with all-flash configurations. Reads come directly from the capacity tier SSDs.

- Endurance and performance classes now become important considerations for both cache and capacity layers.

- Deduplication and compression available on all-flash only.

- Erasure Coding (Raid5/6) is available on all-flash only.

vSAN POC Setup Assumptions and Pre-Requisites

Prior to starting the proof of concept project, the following pre-requisites must be met. The following assumptions are being made with regards to the deployment:

- N+1 servers are available and compliant with the vSAN HCL.

- All servers have had ESXi 6.7 (build number 8169922) or newer deployed.

- vCenter Server 6.7 (build number 8217866) or newer has been deployed to manage these ESXi hosts. These steps will not be covered in this POC guide. vCenter server must be at the same version or higher than ESXi hosts.

- If possible, configure internet connectivity for vCenter such that the HCL database can be updated automatically. Internet connectivity is also a requirement to enable Customer Experience Improvement Program (CEIP), which is enabled by default to benefit the customers for faster problem resolution and advice on how best to deploy their environments.

- Services such as DHCP, DNS, and NTP are available in the environment where the POC is taking place.

- All but one of the ESXi hosts should be placed in a new cluster container in vCenter.

- The cluster must not have any features enabled, such as DRS, HA or vSAN. These will be done throughout the course of the POC.

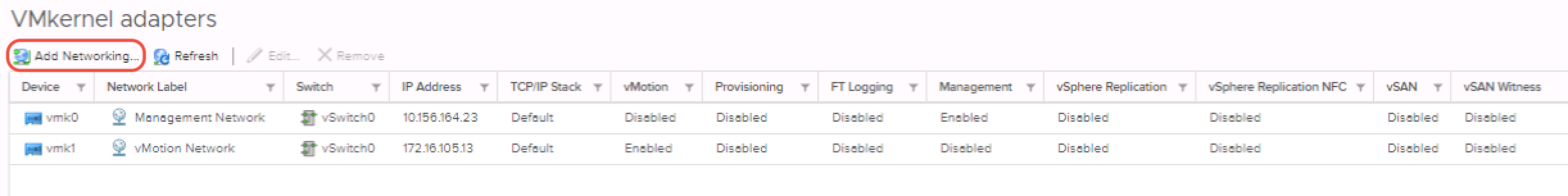

- Each host must have a management network vmkernel and a vMotion network vmkernel already configured. There is no vSAN network configured. This will be done as part of the POC.

- For the purposes of testing Storage vMotion operations, an additional datastore type, such as NFS or VMFS, should be presented to all hosts. This is an optional POC exercise.

- A set of IP addresses, one per ESXi host will be needed for the vSAN network VMkernel ports. The recommendation is that these are all on the same VLAN and network segment.

vSAN POC Overview

Overview of a vSAN POC process

Hardware Selection

The Power of Choice

Choosing the appropriate hardware for a PoC is one of the most important factors in the successful validation of vSAN. Below is a list of the more common options for vSAN PoCs:

Bring your own: Organizations considering vSAN for existing workloads can choose their existing hardware. One of the benefits of this option is 100% validation that vSAN achieves the success criteria and there are no surprises.

Virtual PoCs: Organizations solely interested in seeing vSAN functionality may be interested in the Virtual PoC. This is a virtual environment and is not a true test of performance or hardware compatibility but can help stakeholders feel more comfortable using vSAN. Please contact your VMware HCI specialist to take advantage of our “Test Drive” environment.

Loaner PoC: The vSAN PoC team maintains a collection of loaner gear to validate vSAN when there is no hardware available for testing. Please contact your VMware HCI specialist to take advantage of this option.

Hosted PoCs: Many resellers, partners, distributors, and OEMs recognize the power of vSAN and have procured hardware to make it available to their current, and future customers in order to be able to conduct vSAN proof of concepts.

Try and Buy: Whether a VxRail or a vSAN ReadyNode, many partners will provide hardware for a vSAN POC as a “try and buy” option.

There is no right or wrong choice, however choosing the appropriate hardware for a POC is vitally important. There are many variables with hardware (drivers, controller firmware versions) so be sure to choose hardware that is on the VMware Compatibility List.

Once the appropriate hardware is selected it is time to define the PoC use case, goals, expected results and success metrics.

POC Validation Overview

Testing Overview

The most important aspects to validate in a Proof of Concept are:

- Successful vSAN configuration and deployment

- VMs successfully deployed to vSAN Datastore

- Reliability: VMs and data remain available in the event of failure (host, disk, network, power)

- Serviceability: Maintenance of hosts, disk groups, disks, clusters

- Performance: vSAN and selected hardware can meet the application, as well as business needs

- Validation: vSAN data services working as expected (dedupe/compression, RAID-5/6, checksum, encryption)

- Day 2 Operations: Monitoring, management, troubleshooting, upgrades

These can be grouped into 3 common vSAN PoCS: resiliency testing, performance testing, and operational testing.

Operational Testing

Operational testing is a critical part of a vSAN PoC. Understanding how the solution behaves on day-2 operations is important to consider as part of the evaluation. Fortunately, because vSAN is embedded in the ESXi kernel, a lot of the vSAN operations are also vSphere operations. Adding hosts, migrating VMs between nodes, and cluster creation are some of the many operations that are consistent between vSphere and vSAN, resulting in a smaller learning curve, and eliminating the need to have storage specialists.

Some of the Operational Tests include:

- Adding hosts to a vSAN Cluster

- Adding disks to a vSAN node

- Create/Delete a Disk Group

- Clone/vMotion VMs

- Create/edit/delete storage policies

- Assign storage policies to individual objects (VMDK, VM Home)

- Monitoring vSAN

- Embedded vROps (vSAN 6.7 and above)

- Performance Dashboard on H5 client

- Monitor Resync components

- Monitor via vRealize Log Insight

- Put vSAN nodes in Maintenance Mode

- Evacuate Disks

- Evacuate Disk Groups

For more information about operational tests please visit the following sections on the vSAN PoC Guide:

- Basic vSphere Functionality on vSAN

- Scale-Out vSAN

- Monitoring vSAN

- vSAN Storage Policies

- vSAN Management Tasks

Performance Testing

This particular test seems to get a lot of attention during a vSAN POCs, but it's important to understand the requirements of the environment and pay close attention to details such as workload profiles. Prior to conducting a performance test, it is important to have a clear direction on whether it is a benchmark test or a real application test.

Ideally, having a clone of an environment for testing will yield the most accurate results during the test. Understanding the applications, and use case are important as this will determine the policies for objects and/or VMs. For example, if testing the performance of a SQL server solution, you would want to follow the SQL best practices that include using RAID-1 for performance, and also Object Space Reservation of 100% for objects such as the log drive.

On the other hand, a benchmark test can also be helpful as the test tends to be faster because there is no need to clone production VMs or do additional configuration. However, such tests require that you understand the workload profile to be tested. Some characteristics of a workload profile include block size, read/write percentage and sequential/random percentage among others. Conducting such a test will require knowledge of testing tools like Oracle's vdbench, how to deploy and interpret it. To make this test easier for the public, there is a fling tool available called HCIBench, which automates the deployment of Linux VMs with vdbench as a way to generate load on the cluster. vdbench also provides a Web UI interface for to configure and view results. HCIBench will create the number of VMs you specify, with the number of VMDKs you want in just minutes. HCIBench will trigger vSAN Observer on the back end, and make such output available as part of the results. HCIBench is truly a great tool that allows for faster testing utilizing well-known industry benchmarking tools.

One of the tools you can use to obtain your workload profile includes LiveOptics, previously known as DPACK. This tool is free to use.

For more information about HCIBench, please refer to the following blog posts:

- https://blogs.vmware.com/virtualblocks/2016/09/06/use-hcibench-like-pro-part-1/

- https://blogs.vmware.com/virtualblocks/2016/11/03/use-hcibench-like-pro-part-2/

- https://blogs.vmware.com/virtualblocks/2017/01/11/introducing-hcibench-1-6/

- https://labs.vmware.com/flings/hcibench

A high-level view of Performance Testing:

- Characterize the target workload(s)

- LiveOptics

- Simulate target workloads

- HCIBench

- Change Storage Policies and/or vSAN Services as needed

- Compare result reports & vSAN Observer output

.png)

Resiliency Testing

Conducting resiliency testing on a vSAN cluster is an eye-opening experience. By default, vSAN protects your information with 2 replicas of data, based on the vSAN default storage policy (FTT=1 mirroring). As the number of nodes increases, you are presented with the option to further protect your data from multiple failures by increasing your data replicas. With a minimum of 7 nodes, you are able to have up to 4 data replicas, which protects you from 3 failures at once, while still having VMs available. To simplify this guide, we will keep the vSAN default storage policy in mind for any examples.

Just like with any other storage solution, failures can occur on different components at any time due to age, temperature, firmware, etc. Such failures can occur at the storage controller level, disks, nodes, and network devices among others. A failure on any of these components may manifest itself as a failure in vSAN, but there are cases where this may not be true: for instance, a NIC failure might not result in a node disconnecting from the vSAN network, depending on whether redundancy was configured at the network interface/switch level.

When a failure occurs, the objects can go into an absent state or a degraded state. Depending on the state the components are after the failure, they will either rebuild immediately or wait for the time out. By default, the repair delay value is set to 60 minutes because vSAN is not certain if the failure is transient or permanent. One of the common tests conducted is physically removing a drive from a live vSAN node. In this scenario, vSAN sees the drive is missing, and claims failure. vSAN doesn't know if the missing drive will return, so the objects on the drive are put in an absent state. vSAN notes the "absent" state and initiates a 60-minute repair timer countdown begins. If the drive does not come back within the time specified, vSAN will rebuild the objects to go back into policy compliance. If the drive was pulled by mistake, and put back in within the 60 minutes, there is no rebuild, and after quick sync of metadata, the objects will be healthy again.

In cases of a drive failure (PDL), the disk is marked as degraded. vSAN will receive error codes, mark the drive as degraded, and begin the repair immediately.

Now that we understand the different object states based on failure type, it is important to test each type during the POC. Whether you have access to the physical nodes or not, running a test for a failed drive can be hard, unless the drive happens to die during the POC. Fortunately, there are python scripts available within ESXi that allows you to insert various error codes to generate both absent and degraded states. This python script is called vsanDiskFaultInjection.pyc. You can see the usage of this script below.

[root@cs-ie-h01:/usr/lib/vmware/vsan/bin] python ./vsanDiskFaultInjection.pyc -h

Usage:

injectError.py -t -r error_durationSecs -d deviceName

injectError.py -p -d deviceName

injectError.py -z -d deviceName

injectError.py -c -d deviceName

Options:

-h, --help show this help message and exit

-u Inject hot unplug

-t Inject transient error

-p Inject permanent error

-z Inject health error

-c Clear injected error

-r ERRORDURATION Transient error duration in seconds

-d DEVICENAME, --deviceName=DEVICENAME

Apart from disk failure testing, we also recommend to include the following tests to better understand the resiliency of vSAN:

- Simulate node failure with HA enabled

- Introduce network outage

- with & without redundancy

- Physical cable pull

- Network Switch Failure

- vCenter failure

- not considered a vSAN failure as vSAN keeps running

VMware Proof of Concept Resources

The following is a list of PoC evaluation criteria and VMware resources to assist with your evaluation:

|

Evaluation Criteria |

VMware Resources |

|

Full-stack integration, as well as a path to hybrid cloud |

|

|

Enterprise readiness in terms of feature set completeness, resiliency, availability, etc. |

|

|

Performance and sizing |

|

|

Partner ecosystem choices and strength |

(ReadyNode by all major OEMs, appliances, or BYO with certified hardware) |

|

PoC deployment flexibility and options |

Online or hosted:

On-prem:

|

|

Management, Day 2 operations, and OPEX savings |

vSAN Operations and Management Guidance |

|

Architecture fitness for production workloads, particularly Business/Mission Critical Applications (BCA/MCAs) |

vSAN Reference Architectures (Oracle, SQL Server, MySQL, Exchange, DataStax Cassandra, MongoDB, SAP HANA, Delphix, InterSystems Caché and IRIS, Cloudera, etc.) |

vSAN Network Setup

Note: If version 6.7 U1 or higher is used, optionally skip to 'Using Quickstart' in the next chapter to quickly configure a new cluster and enable vSAN

Before vSAN can be enabled, all but one of the hosts must be added to the cluster and assigned management IP addresses. (One host is reserved for later testing of adding a host to the cluster). All ESXi hosts in a vSAN cluster communicate over a vSAN network. For network design and configuration best practices please refer to the VMware vSAN Network Design Guide.

The following example demonstrates how to configure a vSAN network on an ESXi host.

Creating a VMkernel Port for vSAN

In many deployments, vSAN may be sharing the same uplinks as the management and vMotion traffic, especially when 10GbE NICs are utilized. Later on, we will look at an optional workflow that migrates the standard vSwitches to a distributed virtual switch for the purpose of providing Quality Of Service (QoS) to the vSAN traffic through a feature called Network I/O Control (NIOC). This is only available on distributed virtual switches. The good news is that the license for distributed virtual switches is included with all versions of vSAN.

However, the assumption for this POC is that there is already a standard vSwitch created which contains the uplinks that will be used for vSAN traffic. In this example, a separate vSwitch (vSwitch1) with two dedicated 10GbE NICs has been created for vSAN traffic, while the management and vMotion network use different uplinks on a separate standard vSwitch.

To create a vSAN VMkernel port, follow these steps:

Select an ESXi host in the inventory, then navigate to Configure > Networking > VMkernel Adapters. Click on the icon for Add Networking, as highlighted below:

Ensure that VMkernel Network Adapter is chosen as the connection type.

.png)

The next step gives you the opportunity to build a new standard vSwitch for the vSAN network traffic. In this example, an already existing vSwitch1 contains the uplinks for the vSAN traffic. If you do not have this already configured in your environment, you can use an already existing vSwitch or select the option to create a new standard vSwitch. When you are limited to 2 x 10GbE uplinks, it makes sense to use the same vSwitch. When you have many uplinks, some dedicated to different traffic types (as in this example), management can be a little easier if different VSS with their own uplinks are used for the different traffic types.

As there is an existing vSwitch in our environment that contains the network uplinks for the vSAN traffic, the “BROWSE” button is used to select it as shown below.

.png)

Select an existing standard vSwitch via the "BROWSE" button:

.png)

Choose a vSwitch.

.png)

vSwitch1 is displayed once selected.

The next step is to set up the VMkernel port properties, and choose the services, such as vSAN traffic. This is what the initial port properties window looks like:

.png)

Here is what it looks like when populated with vSAN specific information:

.png)

In the above example, the network label has been designated “vSAN Network”, and the vSAN traffic does not run over a VLAN. If there is a VLAN used for the vSAN traffic in your POC, change this from “None (0)” to an appropriate VLAN ID.

The next step is to provide an IP address and subnet mask for the vSAN VMkernel interface. As per the assumptions and prerequisites section earlier, you should have these available before you start. At this point, you simply add them, one per host by clicking on Use static IPv4 settings as shown below. Alternatively, if you plan on using DHCP IP addresses, leave the default setting which is Obtain IPv4 settings automatically.

.png)

The final window is a preview window. Here you can check that everything is as per the options selected throughout the wizard. If anything is incorrect, you can navigate back through the wizard. If everything looks like it is correct, you can click on the FINISH button.

.png)

If the creation of the VMkernel port is successful, it will appear in the list of VMkernel adapters, as shown below.

.png)

That completes the vSAN networking setup for that host. You must now repeat this for all other ESXi hosts, including the host that is not currently in the cluster you will use for vSAN.

If you wish to use a DVS (distributed vSwitch), the steps to migrate from standard vSwitch (VSS) to DVS are documented in the vSphere documentation.

2-Node Direct Connect

From vSAN 6.5, a separately tagged VMkernel interface can be used instead of extending the vSAN data network to the witness host (note that this capability can only be enabled from the command line). This feature allows for a more flexible network configuration by allowing for separate networks for node-to-node vs. node-to-witness communication.

This Witness Traffic Separation provides the ability to directly connect vSAN data nodes in a 2-node configuration; traffic destined for the Witness host can be tagged on an alternative interface from the directly connected vSAN tagged interface. Direct Connect eliminates the need for a 10Gb switch at remote offices/branch offices where the additional cost of the switch could be cost-prohibitive to the solution.

.png)

Enabling Witness Traffic Separation is not available from the vSphere Web Client. For the example illustrated above, to enable Witness Traffic on vmk1, execute the following on Host1 and Host2:

esxcli vsan network ip add -i vmk1 -T=witnessAny VMkernel port not used for vSAN traffic can be used for Witness traffic. In a more simplistic configuration, the Management VMkernel interface, vmk0, could be tagged for Witness traffic. The VMkernel port tagged for Witness traffic will be required to have IP connectivity to the vSAN traffic tagged interface on the vSAN Witness Appliance.

Enabling vSAN

Steps to enable vSAN

Using Quickstart

Enabling vSAN

Enabling vSAN on the Cluster

Once all the pre-requisites have been met, vSAN can be configured. To enable vSAN complete the following steps:

- Open the vSphere HTML5 Client at https://<vcenter-ip>/ui.

- Click Menu > Hosts and Clusters.

- Select the cluster on which you wish to enable vSAN.

- Click the Configure tab.

- Under vSAN, Select Services and click the CONFIGURE button to start the configuration wizard.

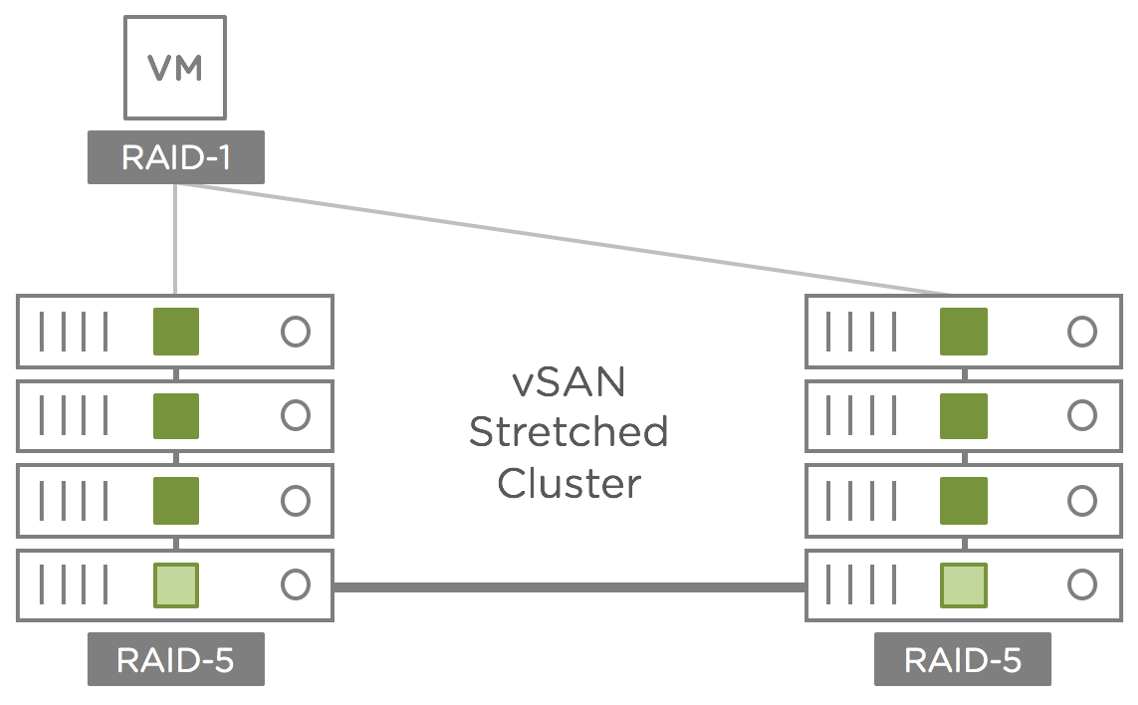

- If desired, Stretched Cluster or 2-Node cluster options can be created as part of the workflow. As part of the basic configuration keep the default selection of Single site cluster and click NEXT.

- When using an All-Flash configuration, you have the option to enable Deduplication and Compression. Deduplication and Compression are covered in a later section of this guide.

- If Encryption of data at rest is a requirement, here is where Encryption can be enabled from the start. We will address encryption later in this POC guide.

- Note: The process of later enabling Deduplication and Compression or Encryption of data at rest can take quite some time, depending on the amount of data that needs to be migrated during the rolling reformat. In a production environment, if Deduplication and Compression is a known requirement, it is advisable to enable this while enabling vSAN to avoid multiple occurrences of rolling reformat.

- Click NEXT

- In the next screen, you can claim all the disks of the same type at once for either vSAN caching tier or capacity tier. For each listed disk make sure it is listed correctly as a flash, HDD, caching device or capacity drive. Click NEXT

- If desired, created fault domains

- Verify the configuration and click FINISH

Once the configuration process is complete, return to the Configure > vSAN > Services view. It may take a few minutes for the cluster to complete all its updates, and you may see some alerts in vCenter until vSAN has settled. After that, you should see the Health and Performance services are enabled by default.

Check Your Network Thoroughly

Basic vSphere Functionality on vSAN

Basic functionality

Deploy Your First VM

This initial test will highlight the fact that general virtual machine operations are unchanged in vSAN environments.

In this section, a VM is deployed to the vSAN datastore using the default storage policy. This default policy is preconfigured and does not require any intervention unless you wish to change the default settings, which we do not recommend.

To examine the default policy settings, navigate to Menu > Shortcuts > VM Storage Policies .

.png)

From there, select vSAN Default Storage Policy. Look under the Rules tab to see the settings on the policy:

.png)

We will return to VM Storage Policies in more detail later, but suffice to say that when a VM is deployed with the default policy, it should have a mirror copy of the VM data created. This second copy of the VM data is placed on storage on a different host to enable the VM to tolerate any single failure. Also note that object space reservation is set to 'Thin provisioning', meaning that the object should be deployed as “thin”. After we have deployed the VM, we will verify that vSAN adheres to both of these capabilities.

One final item to check before we deploy the VM is the current free capacity on the vSAN datastore. This can be viewed from the [vSAN cluster] > Monitor > vSAN > Capacity view. In this example, it is 4.37 TB.

.png)

Make a note of the free capacity in your POC environment before continuing with the deploy VM exercise.

To deploy the VM, simply follow the steps provided in the wizard.

Select New Virtual Machine from the Actions Menu.

Select Create a new virtual machine .

At this point, a name for the VM must be provided, and then the vSAN Cluster must be selected as a compute resource.

Enter a Name for the VM and select a folder:

.png)

Select a compute resource:

Up to this point, the virtual machine deployment process is identical to all other virtual machine deployments that you have done on other storage types. It is the next section that might be new to you. This is where a policy for the virtual machine is chosen.

From the next menu, "4. Select storage", select the vSAN datastore, and the Datastore Default policy will actually point to the vSAN Default Storage Policy.

Once the policy has been chosen, datastores are split into those that are either compliant or non-compliant with the selected policy. As seen below, only the vSAN datastore can utilize the policy settings in the vSAN Default Storage Policy, so it is the only one that shows up as Compatible in the list of datastores.

.png)

The rest of the VM deployment steps in the wizard are quite straightforward, and simply entail selecting ESXi version compatibility (leave at default), a guest OS (leave at default) and customize hardware (no changes). Essentially you can click through the remaining wizard screens without making any changes.

Verifying Disk Layout of a VM stored in vSAN

Once the VM is created, select the new VM in the inventory, navigate to the Configure tab, and then select Policies. There should be two objects shown, "VM home" and "Hard disk 1". Both of these should show a compliance status of Compliant meaning that vSAN was able to deploy these objects in accordance with the policy settings.

.png)

To verify this, navigate to the Cluster's Monitor tab, and then select Virtual Objects. Once again, both the “VM home” and “Hard disk 1” should be displayed. Select “Hard disk 1” followed by View Placement Details. This should display a physical placement of RAID 1 configuration with two components, each component representing a mirrored copy of the virtual disk. It should also be noted that different components are located on different hosts. This implies that the policy setting to tolerate 1 failure is being adhered to.

.png)

.png)

The witness item shown above is used to maintain a quorum on a per-object basis. For more information on the purpose of witnesses, and objects and components in general, refer to the VMware vSAN Design and Sizing Guide on https://storagehub.vmware.com.

The “object space reservation” policy setting defines how much space is initially reserved on the vSAN datastore for a VM's objects. By default, it is set to "Thin provisioning", implying that the VM’s storage objects are entirely “thin” and consume no unnecessary space. Note the free capacity in the vSAN datastore after deploying the VM, we see that the free capacity is very close to what it was before the VM was deployed, as displayed :

.png)

Of course we have not installed anything in the VM (such as a guest OS) - it shows that only a tiny portion of the vSAN datastore has so far been used, verifying that the object space reservation setting of "Thin provisioning" is working correctly (observe that the "Virtual disks" and "VM home objects" consume less than 1GB in total, as highlighted in the "Used Capacity Breakdown" section).

Do not delete this VM as we will use it for other POC tests going forward.

Creating A Snapshot

Using the virtual machine created previously, take a snapshot of it. The snapshot can be taken when the VM is powered on or powered off. The objectives are to see that:

- no setup is needed to make vSAN handle snapshots

- the process for creating a VM snapshot is unchanged with vSAN

- a successful snapshot delta object is created

- the policy settings of the delta object are inherited directly from the base disk object

From the VM object in vCenter, click Actions > Snapshots > Take Snapshot...

.png)

Take a Snapshot of the virtual machine created in the earlier step.

Provide a name for the snapshot and optional description.

Once the snapshot has been requested, monitor tasks and events to ensure that it has been successfully captured. Once the snapshot creation has completed, additional actions will become available in the snapshot drop-down window. For example, there is a new action to Revert to Latest Snapshot and another action to Manage Snapshots.

.png)

Choose the Manage Snapshots option. The following is displayed. It includes details regarding all snapshots in the chain, the ability to delete one or all of them, as well as the ability to revert to a particular snapshot.

.png)

To see snapshot delta object information from the UI, navigate to [vSAN Cluster] > Monitor > vSAN > Virtual Objects .

.png)

There are now three objects that are associated with that virtual machine. First is the "VM Home" namespace. "Hard disk 1" is the base virtual disk, and "Hard disk 1 - poc-test-vm1.vmdk" is the snapshot delta. Notice the snapshot delta inherits its policy settings from the base disk that needs to adhere to the vSAN Default Storage Policy.

The snapshot can now be deleted from the VM. Monitor the VM’s tasks and ensure that it deletes successfully. When complete, snapshot management should look similar to this.

.png)

This completes the snapshot section of this POC. Snapshots in a vSAN datastore are very intuitive because they utilize vSphere native snapshot capabilities. Starting with vSAN 6.0, they are stored efficiently using “vsansparse” technology that improves the performance of snapshots compared to vSAN 5.5. In vSAN 6.1, snapshot chains can be up to 16 snapshots deep.

Clone A Virtual Machine

The next POC test is cloning a VM. We will continue to use the same VM as before. This time make sure the VM is powered on first. There are a number of different cloning operations available in vSphere 6.7. These are shown here.

.png)

The one that we will be running as part of this POC is the “Clone to Virtual Machine”. The cloning operation is a fairly straightforward click-through operation. This next screen is the only one that requires human interaction. One simply provides the name for the newly cloned VM, and a folder if desired.

.png)

We are going to clone the VM in the vSAN cluster, so this must be selected as the compute resource.

.png)

The storage will be the same as the source VM, namely the vsanDatastore. This will all be pre-selected for you if the VM being cloned also resides on the vsanDatastore.

Select Storage

.png)

Select from the available options (leave unchecked - default)

This will take you to the “Ready to complete” screen. If everything is as expected, click FINISH to commence the clone operation. Monitor the VM tasks for the status of the clone operation.

.png)

Do not delete the newly cloned VM. We will be using it in subsequent POC tests.

This completes the cloning section of this POC.

vMotion A Virtual Machine Between Hosts

The first step is to power-on the newly cloned virtual machine. We shall migrate this VM from one vSAN host to another vSAN host using vMotion.

Note: Take a moment to revisit the network configuration and ensure that the vMotion network is distinct from the vSAN network. If these features share the same network, performance will not be optimal.

First, determine which ESXi host the VM currently resides on. Selecting the Summary tab of the VM shows this. In this POC task, the VM that we wish to migrate is on host poc2.vsanpe.vmware.com.

.png)

Right-click on the VM and select Migrate.

.png)

"Migrate" allows you to migrate to a different compute resource (host), a different datastore or both at the same time. In this initial test, we are simply migrating the VM to another host in the cluster, so this initial screen should be left at the default of “Change compute resource only”. The rest of the screens in the migration wizard are pretty self-explanatory.

.png)

Select Change compute resource only

.png)

Select a destination host.

.png)

Select a destination network.

.png)

The vMotion priority can be left as high(default)

At the “Ready to complete” window, click on FINISH to initiate the migration. If the migration is successful, the summary tab of the virtual machine should show that the VM now resides on a different host.

.png)

Verify that the VM has been migrated to a new host.

Do not delete the migrated VM. We will be using it in subsequent POC tests.

This completes the “VM migration using vMotion” section of this POC. As you can see, vMotion works just great with vSAN.

Storage vMotion A VM Between Datastores

This test will only be possible if you have another datastore type available to your hosts, such as NFS/VMFS. If so, then the objective of this test is to successfully migrate a VM from another datastore type into vSAN and vice versa. The VMFS datastore can even be a local VMFS disk on the host.

Mount an NFS Datastore to the Hosts

The steps to mount an NFS datastore to multiple ESXi hosts are described in the vSphere 6.7 Administrators Guide. See the Create NFS Datastore in the vSphere Client topic for detailed steps.

Storage vMotion a VM from vSAN to another Datastore Type

Currently, the VM resides on the vSAN datastore. Launch the migrate wizard, similar to the previous exercise. However, on this occasion move the VM from the vSAN datastore to another datastore type by selecting Change storage only.

.png)

In this POC environment, we have an NFS datastore presented to each of the ESXi hosts in the vSAN cluster. This is the intended destination datastore for the virtual machine.

.png)

Select destination storage.

One other item of interest in this step is that the VM Storage Policy should also be changed to Datastore Default as the NFS datastore will not understand the vSAN policy settings.

At the “Ready to complete” screen, click FINISH to initiate the migration:

.png)

Once the migration completes, the VM Summary tab can be used to examine the datastore on which the VM resides.

.png)

Verify that the VM has been moved to the new storage.

Scale Out vSAN

Scaling out vSAN

Scale Out vSAN By Adding A Host To The Cluster

One of the really nice features is the simplistic scale-out nature of vSAN. If you need more compute or storage resources in the cluster, simply add another host to the cluster.

Before initiating the task, revisit the current state of the cluster. There are currently three hosts in the cluster, and there is a fourth host not in the cluster. We also created two VMs in the previous exercises.

.png)

Let us also remind ourselves of how big the vSAN datastore is.

.png)

In the current state, the size of the vSAN datastore is 3.52TB with a free capacity of 3.47TB free.

Add the Fourth Host to vSAN Cluster

We will now proceed with adding a fourth host to the vSAN Cluster.

Note: Back in section 2 of this POC guide, you should have already set up a vSAN network for this host. If you have not done that, revisit section 2, and set up the vSAN network on this fourth host.

Having verified that the networking is configured correctly on the fourth host, select the new host in the inventory, right-click on it and select the option Move To… as shown below.

.png)

You will then be prompted to select the location to which the host will be moved. In this POC environment, there is only one vSAN cluster. Select that cluster.

Select a cluster as the destination for the host to move into.

The next screen is related to resource pools. You can leave this at the default, which is to use the cluster’s root resource pool, then click OK .

.png)

This moves the host into the cluster. Next, navigate to the Hosts and Clusters view and verify that the cluster now contains the new node.

.png)

As you can see, there are now 4 hosts in the cluster. However, you will also notice from the Capacity view that the vSAN datastore has not changed with regards to total and free capacity. This is because vSAN does not claim any of the new disks automatically. You will need to create a disk group for the new host and claim disks manually. At this point, it would be good practice to re-run the health check tests. If there are any issues with the fourth host joining the cluster, use the vSAN Health Check to see where the issue lies. Verify that the host appears in the same network partition group as the other hosts in the cluster.

Note: Beginning in vSAN 6.7 U1, we have added a faster way to create, and scale-out vSAN clusters. Refer to the Quickstart chapter to learn more.

Create A Disk Group On A New Host

Navigate to [vSAN Cluster] > Configure > vSAN > Disk Management, select the new host and then click on the highlighted icon to claim unused disks for a new disk group:

.png)

As before, we select a flash device as cache disk and three flash devices as capacity disks. This is so that all hosts in the cluster maintain a uniform configuration.

.png)

Select flash and capacity devices.

Verify vSAN Disk Group Configuration on New Host

Once the disk group has been created, the disk management view should be revisited to ensure that it is healthy.

.png)

Verify New vSAN Datastore Capacity

The final step is to ensure that the vSAN datastore has now grown in accordance to the capacity devices in the disk group that was just added on the fourth host. Return to the Capacity view and examine the total and free capacity fields.

.png)

As we can clearly see, the vSAN datastore has now grown in size to 4.69TB. Free space is shown as 4.62TB as the amount of space used is minimal. The original datastore capacity with 3 hosts (in the example POC environment) was 3.52TB.

This completes the “Scale-Out” section of this POC. As seen, scale-out on vSAN is simple but very powerful.

Monitoring vSAN

When it comes to monitoring vSAN, there are a number of areas that need particular attention.

These are the key considerations when it comes to monitoring vSAN:

- Overall vSAN Health

- Resynchronization & rebalance operations in the vSAN cluster

- Performance Monitoring through vCenter UI and command-line utility(vsantop)

- Advanced monitoring through integrated vROPS dashboards

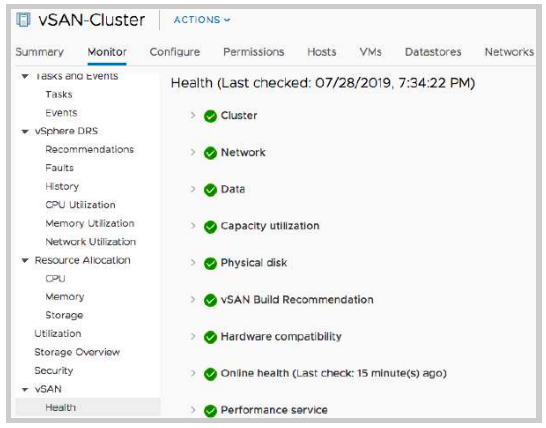

Overall vSAN Health

The first item to monitor is the overall health of the cluster. vSAN Health provides a consolidated list of health checks that correlate to the resiliency and performance of a vSAN cluster. From the vCenter, Navigate to the cluster object, then go to [vSAN Cluster] > Monitor > vSAN > Health. This provides a holistic view of the health states pertaining to hardware and software components that constitute a vSAN cluster. There is an exhaustive validation of components states, configuration, and compatibility.

More information about this is available here - Working with vSAN Health checks.

Resync Operations

Another very useful view is [vSAN Cluster] > Monitor > vSAN > Resyncing Objects view. This will display any resyncing or rebalancing operation that might be taking place on the cluster. For example, if there was a device failure, resyncing or rebuilding activity could be observed here. Resync can also happen if a device was removed or a host failed, and the CLOMd (Cluster Logical Object Manager daemon) timer expired. Resyncing objects dashboard provides details of the resync status, amount of data in transit and estimated time to completion.

With regards to rebalancing, vSAN attempts to keep all physical disks at less than 80% capacity. If any physical disks’ capacity passes this threshold, vSAN will move components from this disk to other disks in the cluster in order to rebalance the physical storage.

In an ideal state, no resync activity should be observed, as shown below.

.png)

Resyncing activity usually indicates:

- a failure of a device or host in the cluster

- a device has been removed from the cluster

- a physical disk has greater than 80% of its capacity consumed

- a policy change has been implemented which necessitates a rebuilding of a VM’s object layout. In this case, a new object layout is created, synchronized with the source object, and then discards the source object

Performance Monitoring through vCenter UI

The Performance monitoring service has existed since vSAN 6.2. The performance service can be used for verification of performance as well as quick troubleshooting of performance-related issues. Performance charts are available for many different levels.

- Cluster

- Hosts

- Virtual Machines and Virtual Disks

- Disk groups

- Physical disks

A detailed list of performance graphs and descriptions can be found here.

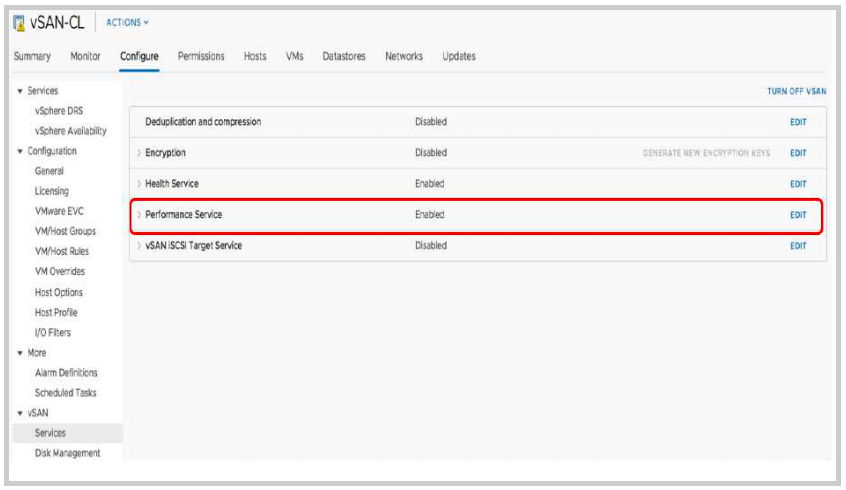

The performance monitoring service is enabled by default starting in vSAN 6.7. If in case it was disabled, it can be re-enabled through the following steps:

Navigate to the vSAN Cluster.

- Click the Configure tab.

- Select Services from the vSAN Section

- Navigate to Performance Service, Click EDIT to edit the performance settings.

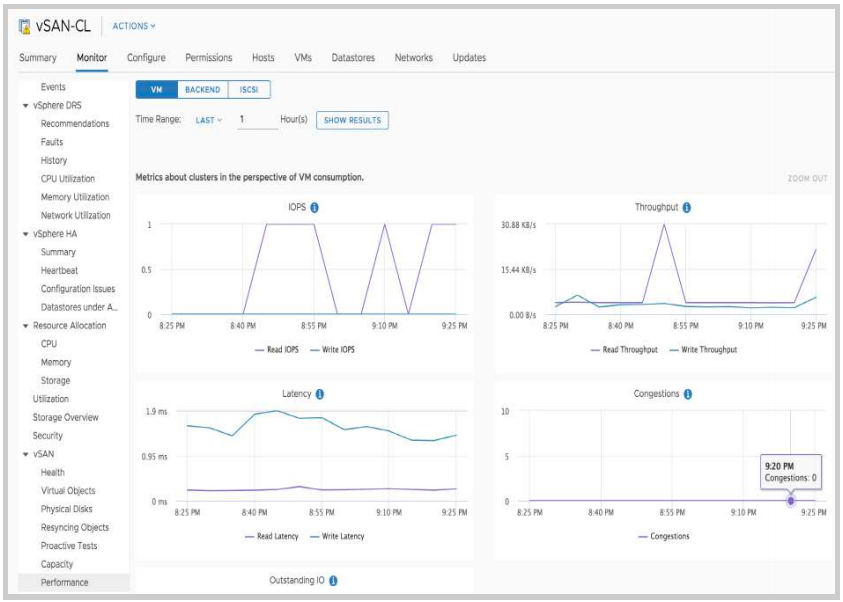

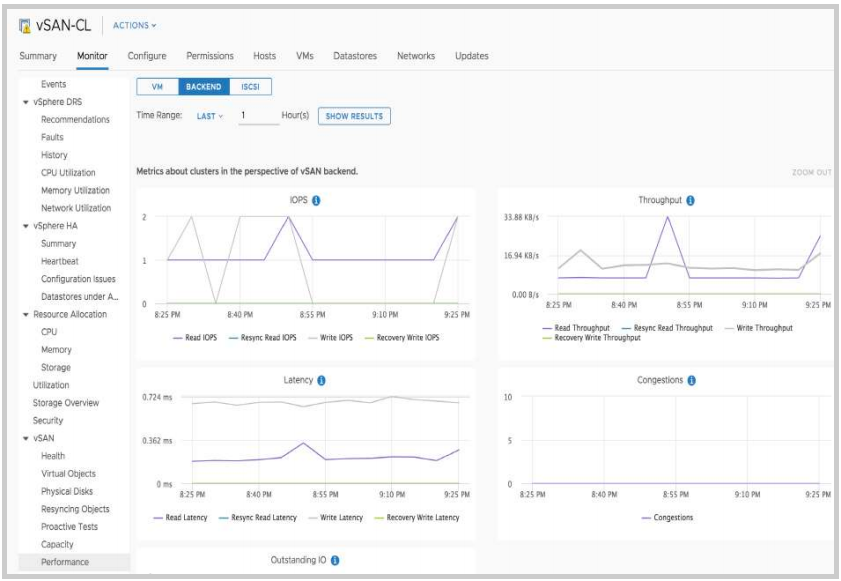

Once the service has been enabled performance statistics can be viewed from the performance menus in vCenter. The following example is meant to provide an overview of using the performance service. For purposes of this exercise, we will examine IOPS, throughput and latency from the Virtual Machine level and the vSAN Backend level.

The cluster level shows performance shows metrics from a cluster level. This includes all virtual machines. Let’s take a look at IOPS from a cluster level. To access cluster-level performance graphs:

- From the Cluster level in vCenter, Click on the Monitor tab.

- Navigate to the vSAN section and click on Performance

For this portion of the example, we will step down a level and the performance statistics for the vSAN Backend. To access the vSAN - Backend performance metrics select the BACKEND tab from the menu on the left.

The performance service allows administrators to view not only real-time data but historical data as well. By default, the performance service looks at the last hour of data. This time window can be increased or changed by specifying a custom range.

Performance monitoring through vsantop utility

With vSphere 6.7 Update 3 a new command-line utility: vsantop has been introduced. This utility is focused on monitoring vSAN performance metrics at an individual host level. Traditionally with ESXi, an embedded utility called esxtop was used to view real-time performance metrics. This utility helped in ascertaining resource utilization and performance of the system. However, vSAN required a custom utility aware of its distributed architecture. vsantop is built with an awareness of vSAN architecture to retrieve focused metrics at a granular interval. vsantop is embedded in the hypervisor. It collects and persists statistical data in a RAM disk. Based on the configured interval rate, the metrics are displayed on the secure shell console. This interval is configurable and can be reduced or increased depending on the amount of detail required. The workflow is illustrated below for a better understanding.

.png)

To initiate vsantop, Log in to the ESXi host through a secure shell(ssh) with root user privileges and run the command vsantop on the ssh console.

vsantop provides detailed insights into vSAN component level metrics at a low interval rate. This helps in understanding resource consumption and utilization pattern. vsantop is primarily intended for advanced vSAN users and VMware support personnel.

Monitoring vSAN through integrated vRealize Operations Manager in vCenter

Monitoring vSAN has become simpler and accessible from the vCenter UI. This is made possible through the integration of vRealize Operations Manager plugin in vCenter.

The feature is enabled through the HTML5 based vSphere client and allows an administrator to either install a new instance or integrate with an existing vRealize Operations Manager.

You can initiate the workflow by navigating to Menu > vRealize Operations as shown below:

.png)

Once the integration is complete, you can access the predefined dashboards as shown below:

.png)

The following out-of-the-box dashboards are available for monitoring purposes,

- vCenter - Overview

- vCenter - Cluster View

- vCenter - Alerts

- vSAN- Overview

- vSAN - Cluster View

- vSAN - Alerts

From a vSAN standpoint, the Overview, Cluster View and Alerts dashboards allow an administrator to have a snapshot of the vSAN cluster. Specific performance metrics such as IOPs, Throughput, Latency, and Capacity related information are available as depicted below,

.png)

VM Storage Policies and Storage

Storage Policies

VM Storage Policies form the basis of VMware’s Software-Defined Storage vision. Rather than deploying VMs directly to a datastore, a VM Storage Policy is chosen during initial deployment. The policy contains the characteristics and capabilities of the storage required by the virtual machine. Based on the policy contents, the correct underlying storage is chosen for the VM.

If the underlying storage meets the VM storage Policy requirements, the VM is said to be in a compatible state.

If the underlying storage fails to meet the VM storage Policy requirements, the VM is said to be in an incompatible state.

In this section of the POC Guide, we shall look at various aspects of VM Storage Policies. The virtual machines that have been deployed thus far have used the vSAN Default Storage Policy, which has the following settings:

| Storage Type | vSAN |

| Site disaster tolerance | None(standard cluster) |

| Failures to tolerate | 1 failure - RAID-1 (Mirroring) |

| Number of disk stripes per object | 1 |

| IOPS limit for object | 0 |

| Object space reservation | Thin provisioning |

| Flash read cache reservation | 0% |

| Disable object checksum | No |

| Force provisioning | No |

In this section of the POC, we will walk through the process of creating additional storage policies.

Create A New VM Storage Policy

In this part of the POC, we will build a policy that creates a stripe width of two for each storage object deployed with this policy. The VM Storage Policies can be accessed from the 'Shortcuts' page on the vSphere client (HTML 5) as shown below.

There will be some existing policies already in place, such as the vSAN Default Storage policy (which we’ve already used to deploy VMs in section 4 of this POC guide). In addition, there is another policy called “VVol No Requirements Policy”, which is used for Virtual Volumes and is not applicable to vSAN.

To create a new policy, click on Create VM Storage Policy .

The next step is to provide a name and an optional description for the new VM Storage Policy. Since this policy will contain a stripe width of 2, we have given it a name to reflect this. You may also give it a name to reflect that it is a vSAN policy.

The next section sets the policy structure. We select Enable rules for "vSAN" Storage to set a vSAN specific policy

Now we get to the point where we create a set of rules. The first step is to select the Availability of the objects associated with this rule, i.e. the failures to tolerate.

We then set the Advanced Policy Rules. Once this is selected, the six customizable capabilities associated with vSAN are exposed. Since this VM Storage Policy is going to have a requirement where the stripe width of an object is set to two, this is what we select from the list of rules. It is officially called “ Number of disk stripes per object ”.

Clicking NEXT moves on to the Storage Compatibility screen. Note that this displays which storage “understands” the policy settings. In this case, the vsanDatastore is the only datastore that is compatible with the policy settings.

Note: This does not mean that the vSAN datastore can successfully deploy a VM with this policy; it simply means that the vSAN datastore understands the rules or requirements in the policy.

.png)

At this point, you can click on NEXT to review the settings. On clicking FINISH, the policy is created.

Let’s now go ahead and deploy a VM with this new policy, and let’s see what effect it has on the layout of the underlying storage objects.

Deploy A New VM With The New Storage Policy

The workflow to deploy a New VM remains the same until we get to the point where the VM Storage Policy is chosen. This time, instead of selecting the default policy, select the newly created StripeWidth=2 policy as shown below.

.png)

As before, the vsanDatastore should show up as the compatible datastore, and thus the one to which this VM should be provisioned.

.png)

Now let's examine the layout of this virtual machine, and see if the policy requirements are met; i.e. do the storage objects of this VM have a stripewidth of 2? First, ensure that the VM is compliant with the policy by navigating to [VM] > Configure > Policies, as shown here.

.png)

The next step is to select the [vSAN Cluster] > Monitor > vSAN > Virtual Objects and check the layout of the VM’s storage objects. The first object to check is the "VM Home" namespace. Select it, and then click on the View Placement Details icon.

.png)

This continues to show that there is only one mirrored component, but no stripe width (which is displayed as a RAID 0 configuration). Why? The reason for this is that the "VM home" namespace object does not benefit from striping so it ignores this policy setting. Therefore this behavior is normal and to be expected.

.png)

Now let’s examine “Hard disk 1” and see if that layout is adhering to the policy. Here we can clearly see a difference. Each replica or mirror copy of the data now contains two components in a RAID 0 configuration. This implies that the hard disk storage objects are indeed adhering to the stripe width requirement that was placed in the VM Storage Policy.

.png)

Note that each striped component must be placed on its own physical disk. There are enough physical disks to meet this requirement in this POC. However, a request for a larger stripe width would not be possible in this configuration. Keep this in mind if you plan a POC with a large stripe width value in the policy.

It should also be noted that snapshots taken of this base disk continue to inherit the policy of the base disk, implying that the snapshot delta objects will also be striped.

Edit VM Storage Policy Of An Existing VM

You can choose to modify the VM Storage Policy of an existing VM deployed on the vSAN datastore. The configuration of the objects associated with the VM will be modified to comply with the newer policy. For example if NumberOfFailuresToTolerate is increased, newer components would be created, synchronized with the existing object, and subsequently, the original object is discarded. VM Storage policies can also be applied to individual objects.

In this case, we will add the new StripeWidth=2 policy to one of the VMs which still only has the default policy (NumberOfFailuresToTolerate =1, NumberOfDiskStripesPerObject =1, ObjectSpaceReservation =0) associated with it.

To begin, select the VM that is going to have its policy changed from the vCenter inventory, then select the Configure > Policies view. This VM should currently be compliant with the vSAN Default Storage Policy. Now click on the EDIT VM STORAGE POLICIES button as highlighted below.

.png)

This takes you to the edit screen, where the policy can be changed.

.png)

Select the new VM Storage Policy from the drop-down list. The policy that we wish to add to this VM is the StripeWidth=2 policy.

Once the policy is selected, click OK button as shown above to ensure the policy gets applied to all storage objects. The VM Storage Policy should now appear updated for all objects.

Now when you revisit the Configure > Policies view, you should see the changes in the process of taking effect (Reconfiguring) or completed, as shown below.

.png)

This is useful when you only need to modify the policy of one or two VMs, but what if you need to change the VM Storage Policy of a significant number of VMs.

That can be achieved by simply changing the policy used by those VMs. All VMs using those VMs can then be “brought to compliance” by reconfiguring their storage object layout to make them compliant with the policy. We shall look at this next.

Note that modifying or applying a new VM Storage Policy causes additional backend IO while the objects are being synchronized.

Modify A VM Storage Policy

In this task, we shall modify an existing VM Storage policy to include an ObjectSpaceReservation =25%. This means that each storage object will now reserve 25% of the VMDK size on the vSAN datastore. Since all VMs were deployed with 40GB VMDKs with Failures to tolerate="1 failure - RAID-1 (Mirroring)", the reservation value will be 20 GB.

As the first step, note the amount of free space in the vSAN datastore. This would help ascertain the impact of the change in the policy.

Select StripeWidth=2 policy from the list of available policies, and then the Edit Settings option. Navigate to vSAN > Advanced Policy Rules and modify the Object space reservation setting to 25%, as shown below

.png)

Proceed to complete the wizard with default values and click FINISH. A pop-up message requiring user input appears with details of the number of VMs using the policy being modified. This is to ascertain the impact of the policy change. Typically such changes are recommended to be performed during a maintenance window. You can choose to enforce a policy change immediately or defer it to be changed manually at a later point. Leave it at the default, which is “Manually later”, by clicking Yes as shown below:

.png)

Next, Select the Storage policy - StripeWidth=2 and click on the VM Compliance tab in the bottom pane. It will display the two VMs along with with their storage objects, and the fact that they are no longer compliant with the policy. They are in an “Out of Date” compliance state as the policy has now been changed.

You can now enforce a policy change by navigating to [VM Storage Policies] and clicking on Reapply VM Storage Policy

When this button is clicked, the following popup appears.

.png)

When the reconfigure activity completes against the storage objects, and the compliance state is once again checked, everything should show as "Compliant".

.png)

Since we have now included an ObjectSpaceReservation value in the policy, you may notice corresponding capacity reduce from the vSAN datastore.

For example, the two VMs with the new policy change have 40GB storage objects. Therefore, there is a 25% ObjectSpaceReservation implying 10GB is reserved per VMDK. So that's 10GB per VMDK, 1 VMDK per VM, 2 VMs equals 20 GB reserved space, right? However, since the VMDK is also mirrored, so there is a total of 40GB reserved on the vSAN datastore.

Adding IOPS Limits and Checksum

vSAN Performance Testing

Performance Testing Overview

Performance testing is an important part of evaluating any storage solution. However, setting up a suitable and consistent test environment can be challenging - there are many tools that simulate workloads, and many ways to collect the generated data and logs. This adds complexity in troubleshooting performance issues and lengthens the evaluation process.

vSAN Performance will primarily depend on: the storage devices that are in the hosts (SSD, magnetic disks); the storage policies configured on the VMs (how widely the data is spread across the devices); the size of the working set and the type of workload, and so on. Moreover, a major factor for VM performance is how the virtual hardware is configured: how many virtual SCSI controllers, VMDKs, outstanding I/O and how many vCPUs that are pushing I/O.

vSAN’s distributed architecture dictates that reasonable performance is achieved when the pooled compute and storage resources in the cluster are well utilized: this usually means VM workloads should be distributed throughout the cluster to achieve consistent aggregated performance. In this context, detailed performance monitoring and analysis becomes ever important.

From version 6.7, the vSAN performance service is automatically enabled when a vSAN cluster is created from the vCenter HTML5 UI. Performance graphs, which provide a valuable first insight into resource consumption and bottlenecks, can be conveniently viewed in vCenter without any additional setup requirements. Moreover, performance benchmarks run from HCIbench are captured and available for viewing within the same UI. More information on HCIbench can be found in the section 'Performance Testing Using HCIbench'.

To view the performance graphs navigate to [vSAN cluster] > Monitor > Performance . Here, one can visualize VM, Backend and ISCSI performance stats over a 24 hour period.

With vSphere 6.7 Update 3 a new command-line utility: vsantop has been introduced. This utility is focused on monitoring vSAN performance metrics at an individual host level. vsantop can be accessed through a secure shell to the ESXi hosts in a vSAN cluster. It is embedded in the hypervisor, collects and persists statistical data in a RAM disk.

More details of the performance service and vsantop can be found in chapter 7, under 'vSAN Monitoring'.

Performance Considerations

Single vs. Multiple Workers

vSAN is designed to support good performance when many VMs are distributed and running simultaneously across the hosts in the cluster. Running a single storage test in a single VM won’t reflect on the aggregate performance of a vSAN-enabled cluster. Regardless of what tool you are using – IOmeter, VDbench or something else – plan on using multiple “workers” or I/O processors to multiple virtual disks to get representative results.

Working Set

For the best performance, a virtual machine’s working set should be mostly in the cache. Care will have to be taken when sizing your vSAN flash to account for all of your virtual machines’ working sets residing in the cache. A general rule of thumb for Hybrid clusters is to size cache as 10% of your consumed virtual machine storage (not including replica objects). While this is adequate for most workloads, understanding your workload’s working set before sizing is a useful exercise. Consider using VMware Infrastructure Planner (VIP) tool to help with this task – http://vip.vmware.com.

Sequential Workloads versus Random Workloads

Sustained sequential write workloads (such as VM cloning operations) that run on vSAN could fill the cache, and future writes will need to wait for the cache to be destaged to the spinning magnetic disk layer before more I/Os can be written to cache, so performance will be a reflection of the spinning disk(s) and not of flash. The same is true for sustained sequential read workflows. If the block is not in the cache, it will have to be fetched from the spinning disk. Mixed workloads will benefit more from vSAN’s caching design.

Outstanding IOs

Most testing tools have a setting for Outstanding IOs, or OIO for short. It shouldn’t be set to 1, nor should it be set to match a device queue depth. Consider a setting between 2 and 8, depending on the number of virtual machines and VMDKs that you plan to run. For a small number of VMs and VMDKs, use 8. For a large number of VMs and VMDKs, consider setting it lower.

Block Size

The block size that you choose is really dependent on the application/workload that you plan to run in your VM. While the block size for a Windows Guest OS varies between 512 bytes and 1MB, the most common block size is 4KB. But if you plan to run SQL Server, or MS Exchange workloads, you may want to pick block sizes appropriate to those applications (they may vary from application version to application version). Since it is unlikely that all of your workloads will use the same block size, consider a number of performance tests with differing, but commonly used, block sizes.

Cache Warming Considerations

Flash as cache helps performance in two important ways. First, frequently read blocks end up in the cache, dramatically improving performance. Second, all writes are committed to the cache first, before being efficiently destaged to disks – again, dramatically improving performance.

However, data still has to move back and forth between disks and cache. Most real-world application workloads take a while for cache to “warm-up” before achieving steady-state performance.

Number of Magnetic Disk Drives in Hybrid Configurations

In the getting started section, we discuss how disk groups with multiple disks perform better than disk groups with fewer, as there are more disk spindles to destage to as well as more spindles to handle read cache misses. Let’s look at a more detailed example of this.

Consider a vSAN environment where you wish to clone a number of VMs to the vSAN datastore. This is a very sequential I/O intensive operation. We may be able to write into the SSD write buffer at approximately 200-300 MB per second. A single magnetic disk can maybe do 100MB per second. So assuming no read operations are taking place at the same time, we would need 2-3 magnetic disks to match the SSD speed for destaging purposes.

Now consider that there might also be some operations going on in parallel. Let’s say that we have another vSAN requirement to achieve 2000 read IOPS. vSAN is designed to achieve a 90% read cache hit rate (approximately). That means 10% of all reads are going to be read cache misses; for example, that is 200 IOPS based on our requirement. A single magnetic disk can perhaps achieve somewhere in the region of 100 IOPS. Therefore, an additional 2 magnetic disks will be required to meet this requirement.

If we combine the destaging requirements and the read cache misses described above, your vSAN design may need 4 or 5 magnetic disks per disk group to satisfy your workload.

Striping Considerations

One of the VM Storage Policy settings is NumberOfDiskStripesPerObject. That allows you to set a stripe width on a VM’s VMDK object. While setting disk striping values can sometimes increase performance, that isn’t always the case.

As an example, if a given test is cache-friendly (e.g. most of the data is in cache), striping won’t impact performance significantly. As another example, if a given VMDK is striped across disks that are busy doing other things, not much performance is gained, and may actually be worse.

Guest File Systems Considerations

Many customers have reported significant differences in performance between different guest file systems and their settings; for example, Windows NTFS and Linux. If you are not getting the performance you expect, consider investigating whether it could be a guest OS file system issue.

Performance during Failure and Rebuild

When vSAN is rebuilding one or more components, application performance can be impacted. For this reason, always check to make sure that vSAN is fully rebuilt and that there are no underlying issues prior to evaluating performance. Verify there are no rebuilds occurring before testing with the Resyncing objects dashboard. The dashboard can be accessed by navigating to [vSAN Cluster] > Monitor > vSAN > Resyncing objects as depicted below:

.png)

Performance Testing Using HCIBench

HCIbench aims to simplify and accelerate customer Proof of Concept (POC) performance testing in a consistent and controlled way. The tool fully automates the end-to-end process of deploying test VMs, coordinating workload runs, aggregating test results, and collecting necessary data for troubleshooting purposes. Evaluators choose the profiles they are interested in and HCIbench does the rest quickly and easily.

This section provides an overview and recommendations for successfully using HCIbench. For complete documentation, refer to the HCIbench User Guide.

Where to Get HCIbench

HCIbench and complete documentation can be downloaded from https://labs.vmware.com/flings/hcibench

This tool is provided free of charge and with no restrictions. Support will be provided solely on a best-effort basis as time and resources allow, by the VMware vSAN Community Forum.

Deploying HCIbench

Step 1 – Deploy the OVA

Firstly, download and deploy the HCIbench appliance. The process for deploying the HCIbench appliance is no different from deploying any other appliance in vSphere platform.

Step 2 – HCIbench Configuration

After deployment, navigate to http://<Controller VM IP>:8080/ to configure the appliance

There are three main sections to consider:

- vSAN cluster and host information

- Guest VM Specification

- Workload Definitions

For detailed steps on configuring and using HCIbench refer to the HCIbench User Guide.

Considerations for Defining Test Workloads

Either FIO or vdbench can be chosen as the testing engine. Here, we recommend using FIO, due to the exhaustive list of parameters that can be set. Pre-defined parameter files can be uploaded to HCIbench to be executed (a wider variety of options are available, such as different read/write block sizes outside of what can be defined within the configuration page, for a full list of FIO options, consult the FIO documentation, e.g. https://www.mankier.com/1/fio)

Although 'Easy Run' can be selected, we recommend explicitly defining a workload pattern to ensure that tests are tailored to the performance requirements of the PoC. Below, we walk through some of the important considerations.

Working set

Defining the appropriate working set is one of the most important factors for correctly running performance tests and obtaining accurate results. For the best performance, a virtual machine’s working set should be mostly in the cache. Care should be taken when sizing your vSAN caching tier to account for all of the virtual machines’ working sets residing in the cache. A general rule of thumb, in hybrid environments, is to size cache as 10% of your consumed virtual machine storage (not including replica objects). While this is adequate for most workloads, understanding your workload’s working set before sizing is a useful exercise. For all-flash environments, consult the table below

.png)

The following process is an example of sizing an appropriate working set for performance testing with HCIbench:

Consider a four-node cluster with one 400GB SSD per node. This gives the cluster a total cache size of 1.6TB. For a Hybrid cluster, the total cache available in vSAN is split 70% for read cache and 30% for write cache. This gives the cluster in our example 1120GB of available read cache and 480GB of available write cache. In order to correctly fit the HCIbench within the available cache, the total capacity of all VMDKs used for I/O testing should not exceed 1,120GB. For All-Flash, 100% of the cache is allocated for writes (thus the total capacity of all VMDKs is 1.6TB). We create a test scenario with four VMs per host, each VM having 5 X 10GB VMDKs, resulting in a total size of 800GB -- this will allow the test working set to fit within the cache.

The number and size of the data disk, along with the number of threads should be adjusted so that the product of the test set is less than the total size of the cache tier.

Thus: # of VMs x # of Data Disk x Size of Data Disk x # of Threads < Size of Cache Disk x Disk Groups per Host x Number of Hosts x [70% read cache (hybrid)]

For example:

4 VMs x 5 Data Disks x 10GB x 1 Thread = 800GB,

400GB SSDs x 70% x 1 Disk Group per Host x 4 Hosts = 1,120GB

Therefore, 800GB working set size is less than the 1,120GB read cache in cluster, i.e there is more read cache available than our defined working set size. Therefore this is an acceptable working set size.

Note: the maximum working set size per cache disk is 600GB. If your cache disk size is greater than this, use this value in the above calculations.

Sequential workloads versus random workloads

Before doing performance tests, it is important to understand the performance characteristics of the production workload to be tested: different applications have different performance characteristics. Understanding these characteristics is crucial to successful performance testing. When it is not possible to test with the actual application or application-specific testing tool it is important to design a test that matches the production workload as closely as possible. Different workload types will perform differently on vSAN.

- Sustained sequential write workloads (such as VM cloning operations) run on vSAN will simply fill the cache and future writes will need to wait for the cache to be destaged to the capacity tier before more I/Os can be written to the cache. Thus, in a hybrid environment, performance will be a reflection of the spinning disk(s) and not of flash. The same is true for sustained sequential reads. If the block is not in the cache, it will have to be fetched from the spinning disk. Mixed workloads will benefit more from vSAN’s caching design.

- HCIbench allows you to change the percentage read and the percentage random parameters; a good starting point here is to set the percentage read parameter to 70% and the percentage random parameter to 30%.

Prepare Virtual Disk Before Testing

To achieve a 'clean' performance run, the disks should be wiped before use. To achieve this, select a value for the 'Prepare Virtual Disk Before Testing'. This option will either zero or randomize the data (depending on the selection) on the disks for each VM being used in the test, helping to alleviate a first write penalty during the performance testing phase. We recommend that the disks are randomized if using the Deduplication & Compression feature.

Warm up period

As a best practice, performance tests should include at least a 15-minute warm-up period. Also, keep in mind that the longer testing runs the more accurate the results will be. In addition to the cache warming period,

Testing Runtime

HCIbench tests should be configured for at least an hour to observe the effects of destaging from the cache to the capacity tier.

Blocksize

It is important to match the block size of the test to that of the workload being simulated, as this will directly affect the throughput and latency of the cluster. Therefore, it is paramount that this information be gathered before the start of the tests (for instance, from a Live Optics assessment).

Results

After testing is completed, you can view the results at http://<Controller VM IP>:8080/results in a web browser. A summary file of the tests will be present inside the subdirectory corresponding to the test run. To export the results to a ZIP file, click on the 'save result' option on the HCIbench configuration page (and wait for the ZIP file to be fully populated).

As HCIbench is integrated with the vSAN performance service, the performance data can also be reviewed within the vCenter HTML5 UI, under [vSAN cluster] > Monitor > vSAN > Performance.

Testing Hardware Failures

Understanding Expected Behaviours

When conducting any kind failure testing, it is important to consider the expected outcome before the test is conducted. With each test described in this section, you should first read the preceding description to first understand how the test will affect the system.

Important: Test one Thing at a Time

By default, VMs deployed on vSAN inherit the default storage policy, with the ability to tolerate one failure. If you do not wait for the first failure to be resolved, and then try to test another failure, you will have introduced two failures to the cluster. The VMs will not be able to tolerate the second failure and will become inaccessible.

VM Behavior when Multiple Failures Encountered

Previously we discussed VM operational states and availability. To recap, a VM remains accessible when the full mirror copy of the objects are available, as well as greater than 50% of the components that make up the VM; the witness components are there to assist with the latter requirement.

Let’s talk a little about VM behavior when there are more failures in the cluster than the NumberOfFailuresToTolerate setting in the policy associated with the VM.

VM Powered on and VM Home Namespace Object Goes Inaccessible

If a running VM has its VM Home Namespace object go inaccessible due to failures in the cluster, a number of different things may happen. Once the VM is powered off, it will be marked "inaccessible" in the vSphere web client UI. There can also be other side effects, such as the VM getting renamed in the UI to its “.vmx” path rather than VM name, or the VM being marked "orphaned".

VM Powered on and Disk Object is inaccessible

If a running VM has one of its disk objects become inaccessible, the VM will keep running, but its VMDK’s I/O is stalled. Typically, the Guest OS will eventually time out I/O. Some operating systems may crash when this occurs. Other operating systems, for example, some Linux distributions, may downgrade the filesystems on the impacted VMDK to read-only. The Guest OS behavior and even the VM behavior is not vSAN specific. It can also be seen on VMs running on traditional storage when the ESXi host suffers an APD(All Paths Down).

Once the VM becomes accessible again, the status should resolve, and things go back to normal. Of course, data remains intact during these scenarios.

What happens when a server fails or is rebooted?

A host failure can occur in a number of ways. It could be a crash, or it could be a network issue (which is discussed in more detail in the next section). However, it could also be something as simple as a reboot, and that the host will be back online when the reboot process completes. Once again, vSAN needs to be able to handle all of these events.

If there are active components of an object residing on the host that is detected to be failed (due to any of the stated reasons) then those components are marked as ABSENT. I/O flow to the object is restored within 5-7 seconds by removing the ABSENT component from the active set of components in the object.

The ABSENT state is chosen rather than the DEGRADED state because in many cases a host failure is a temporary condition. A host might be configured to auto-reboot after a crash, or the host’s power cable was inadvertently removed, but plugged back in immediately. vSAN is designed to allow enough time for a host to reboot before starting to rebuild objects on other hosts so as not to waste resources. Because vSAN cannot tell if this is a host failure, a network disconnect or a host reboot, the 60-minute timer is once again started. If the timer expires, and the host has not rejoined the cluster, a rebuild of components on the remaining hosts in the cluster commences.

If a host fails or is rebooted, this event will trigger a "Host connection and power state" alarm, and if vSphere HA is enabled on the cluster. It will also cause a" vSphere HA host status" alarm and a “Host cannot communicate with all other nodes in the vSAN Enabled Cluster” message.