vSAN 2-node Cluster Guide

Introduction

Concepts in vSAN 2-node Clusters

The Witness Host

vSAN Witness Host Purpose

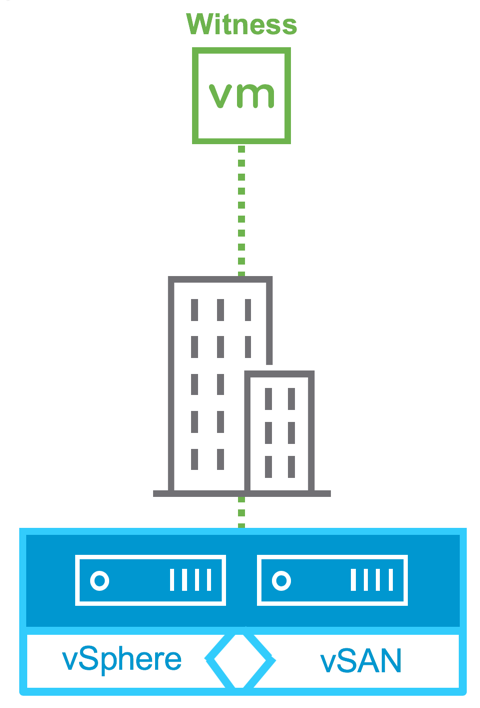

The vSAN Witness Host is a virtual appliance running ESXi. It contains vSAN metadata to ensure data integrity and establish a quorum in case of a physical host failure so that vSAN data remains accessible. The vSAN Witness Host must have connectivity to both vSAN physical nodes in the cluster.

Updating the vSAN Witness Appliance

The vSAN Witness Appliance can easily be maintained/patched using vSphere Lifecycle Manager like physical vSphere hosts. Deploying a new vSAN Witness Appliance is not required when updating or patching vSAN hosts. Normal upgrade mechanisms are supported on the vSAN Witness Appliance. The vSAN witness host should be upgraded first to maintain backward compatibility.

Important: Do not upgrade the on-disk format of the vSAN witness host until all physical hosts have been upgraded.

Read Locality in vSAN 2 Node Clusters

In traditional vSAN clusters, a virtual machine’s read operations are distributed across all replica copies of the data in the cluster. With a policy setting of NumberOfFailuresToTolerate=1, which results in two copies of the data, 50% of the reads come from replica1 and 50% come from replica2. With a policy setting of NumberOfFailuresToTolerate=2 in non-stretched vSAN clusters, which results in three copies of the data, 33% of the reads come from each of replica1, replica2, and replica3.

In vSAN 2-node clusters, 100% of reads occur in the site (host) the VM resides on. This aligns with the behavior of vSAN Stretched Clusters. Read locality overrides the NumberOfFailuresToTolerate=1 policy’s behavior to distribute reads across the components.

This is not significant to consider in All-Flash configurations but should be considered in Hybrid vSAN configurations. To understand why, it is important to know how read and write operations behave in 2-node vSAN configurations.

Writes Are Synchronous

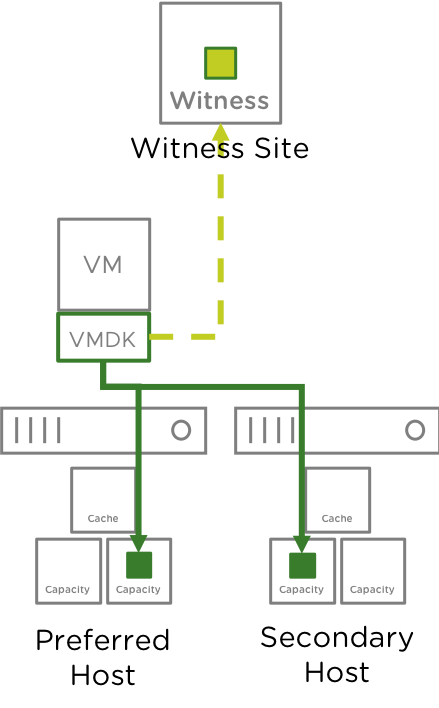

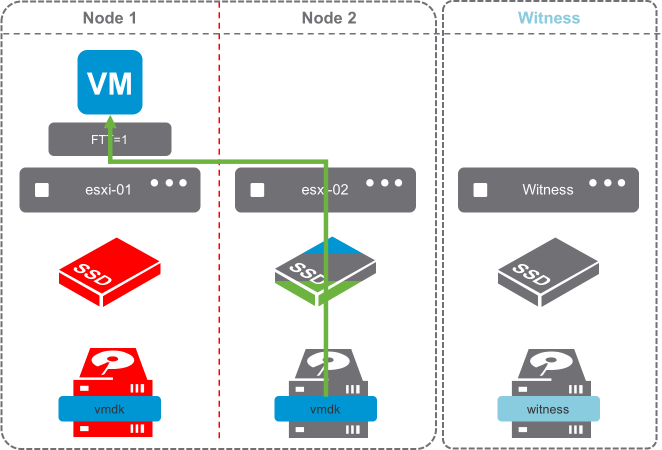

In vSAN, write operations are always synchronous. The image of a Hybrid vSAN cluster shows that writes are being written to Node 1 and Node 2, with Metadata updates being written to the vSAN Witness Host. This is due to a Number of Failures to Tolerate policy of 1.

Notice the blue triangle in the cache devices? That’s 30% of the cache device being allocated as the write buffer. The other 70% of the cache device is green, demonstrating the read cache. It is important to note that All-Flash vSAN clusters do not use the cache devices for read caching.
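As a rough illustration of the 70/30 split described above, the following Python sketch divides a hybrid cache device into read cache and write buffer. The function name and the 400 GB device size are hypothetical and chosen only for the example.

```python
def hybrid_cache_split(cache_device_gb):
    """Split a hybrid cache device into read cache (70%) and write buffer (30%)."""
    write_buffer_gb = cache_device_gb * 30 / 100
    read_cache_gb = cache_device_gb * 70 / 100
    return read_cache_gb, write_buffer_gb


# Hypothetical 400 GB cache device in a hybrid disk group
read_cache, write_buffer = hybrid_cache_split(400)
print(f"read cache: {read_cache} GB, write buffer: {write_buffer} GB")
```

This split applies only to Hybrid configurations, since All-Flash clusters do not use the cache device for read caching.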

Writes go to the write buffer on both Nodes 1 and 2. This is always the case because writes occur on both nodes simultaneously.

Default Stretched Cluster / 2-node Read Behavior

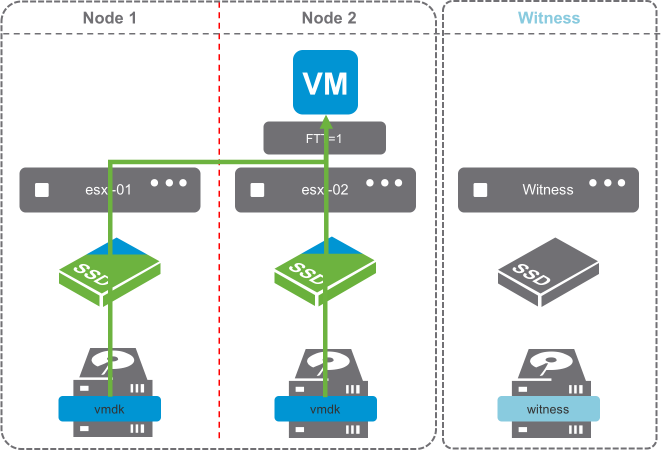

By default, reads are only serviced by the host that the VM is running on.

The image shows a typical read operation. The virtual machine is running on Node 1, and all reads are serviced by the cache device of Node 1’s disk group.

By default, reads do not traverse to the other node. This behavior is the default in 2-node configurations, as they are mechanically similar to Stretched Cluster configurations. This behavior is preferred when the latency between sites is at the upper end of the supported boundary of 5ms round-trip-time (RTT).

This is advantageous in situations where the two sides of a Stretched Cluster are connected by an inter-site link, because it removes additional overhead of reads traversing the inter-site link.

2-node Reads after vMotion

Read operations after a vMotion are going to behave differently.

Because the cache has not been warmed on the host the virtual machine is now running on, reads will have to occur on the capacity drives as the cache is warmed.

The image shows only part of the cache device as green, indicating that as reads occur, they are cached in the read cache of the disk group.

A vMotion can be invoked by various DRS events, such as putting a host in maintenance mode or balancing workloads. The default Stretched Cluster recommendation is to keep virtual machines on one site or the other unless there is a failure event.

2 Node Reads after a Disk Failure

Read operations after a disk failure are going to behave similarly to those after a vMotion. A single disk in the disk group has failed in the image on the right. Reads are going to come from Node 2, and the cache device on Node 2 is going to start caching content from the virtual machine’s disk.

Since only a capacity device failed, and there are others still contributing to the capacity, reads will also traverse the network, as data is rewritten to one of the surviving capacity devices on Node 1 if there is sufficient capacity.

Once data has been reprotected on Node 1, the cache will have to rewarm on Node 1 again.

2 Node Reads after a Cache Device/Disk Group Failure

Read operations after a disk group failure are also going to behave like those after a disk failure.

In configurations where the host with a failed disk group has an additional disk group, rebuilds will occur on the surviving disk group provided there is capacity.

In hosts with a single disk group, rebuilds will not occur, as the disk group is unavailable.

What can be done to prevent the need for rewarming the read cache?

There is an advanced setting which will force reads to always be serviced by both hosts.

The vSAN “DOMOwnerForceWarmCache” setting can be configured to force reads on both Node 1 and Node 2 in a 2-Node configuration.

Forcing the cache to be read across Stretched Cluster sites is not recommended because additional read latency can be introduced.

vSAN 2-node configurations are typically in a single location, directly connected or connected to the same switch, just as a traditional vSAN deployment.

When the DOMOwnerForceWarmCache setting is True (1), it forces reads across all mirrors to most effectively use cache space. This means reads would occur across both nodes in a 2-node config.

When it is False (0), site locality is in effect, and reads are only occurring on the site the VM resides on.
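The two behaviors can be pictured with a toy model. The sketch below is not vSAN code. It mimics which hosts service reads for each value of the setting, and the function name and host labels are hypothetical.

```python
def read_sources(vm_host, replica_hosts, force_warm_cache):
    """Return the hosts that service reads for a VM in this toy model.

    force_warm_cache mirrors the 0/1 values of DOMOwnerForceWarmCache:
    0 keeps read locality, 1 spreads reads across all mirrors.
    """
    if force_warm_cache == 1:
        return sorted(replica_hosts)
    # Read locality: only the replica on the VM's own host is read.
    return [host for host in replica_hosts if host == vm_host]


replicas = ["node1", "node2"]
print(read_sources("node1", replicas, 0))  # locality in effect
print(read_sources("node1", replicas, 1))  # reads forced across both mirrors
```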

In short, DOM Owner Force Warm Cache:

- Doesn’t apply to traditional vSAN clusters

- Stretched Cluster configs with acceptable latency & site locality enabled – Default 0 (False)

- 2-node (typically low, or very low latency) – Modify 1 (True)

Not only does this help in the event of a virtual machine moving across hosts, which would require the cache to be rewarmed, but it also allows reads to occur across both mirrors, distributing the load more evenly across both hosts.

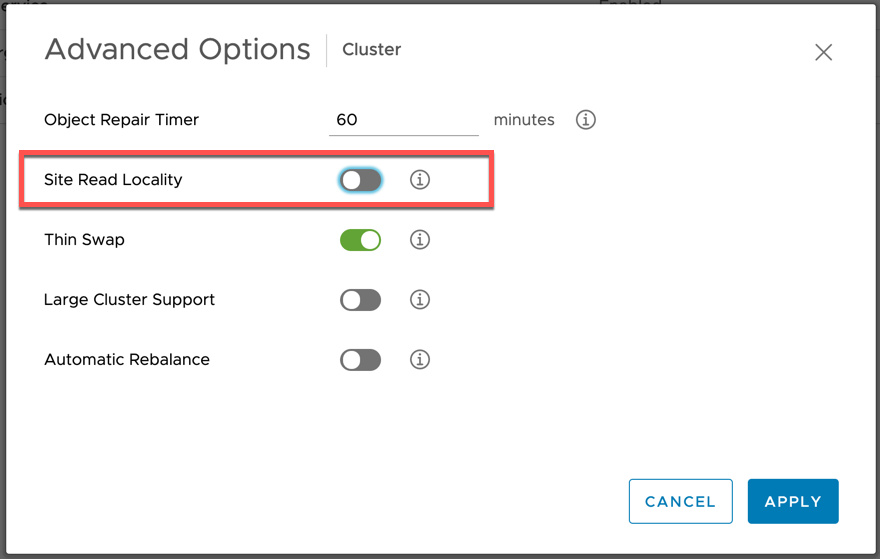

The vSAN 6.7 Advanced Options UI presents an option to deactivate or reactivate Read Locality, but only for 2-node or Stretched Clusters:

This setting can also be retrieved or modified from the ESXi command line on each host:

- To check the status of Read Locality, run the following command on each ESXi host:

esxcfg-advcfg -g /VSAN/DOMOwnerForceWarmCache

If the value is 0, then Read Locality is set to the default (enabled).

- To deactivate Read Locality in a 2-node cluster, run the following command on each ESXi host:

esxcfg-advcfg -s 1 /VSAN/DOMOwnerForceWarmCache

PowerCLI can also be used to deactivate or reactivate Read Locality. Here is a one-liner PowerCLI script to deactivate or reactivate Read Locality for both hosts in the 2-node cluster.

Use a value of 1 to deactivate Read Locality, or a value of 0 to reactivate it:

Get-Cluster -Name <ClusterName> | Get-VMHost | Get-AdvancedSetting -Name "VSAN.DOMOwnerForceWarmCache" | Set-AdvancedSetting -Value "0" -Confirm:$False

Forcing reads to be read across both nodes in cases where the Number of Failures to Tolerate policy is 1 can prevent having to rewarm the disk group cache in cases of vMotions, host maintenance, or device failures.

Client Cache

VMware vSAN 6.2 introduced Client Cache, a mechanism that allocates 0.4% of host memory, up to 1GB, as an additional read cache tier. Virtual machines leverage the Client Cache of the host they are running on. Client Cache is not associated with Stretched Cluster read locality, and runs independently.
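The sizing rule above can be expressed in a few lines. This is a minimal sketch of the stated rule (0.4% of host memory, capped at 1 GB). The function name and the sample memory sizes are hypothetical.

```python
def client_cache_gb(host_memory_gb):
    """Client Cache size: 0.4% of host memory, capped at 1 GB."""
    return min(host_memory_gb * 0.004, 1.0)


# Hypothetical host memory sizes in GB
for memory_gb in (128, 256, 512):
    print(f"{memory_gb} GB host -> {client_cache_gb(memory_gb):.3f} GB Client Cache")
```

Under this rule, hosts with 250 GB of memory or more reach the 1 GB cap.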

Witness Traffic Separation (WTS)

By default, when using vSAN 2 Node configurations, the Witness VMkernel interface tagged for vSAN traffic must have connectivity with each vSAN data node's VMkernel interface tagged with vSAN traffic.

In vSAN 6.5, an alternate VMkernel interface can be designated to carry traffic destined for the Witness rather than the vSAN tagged VMkernel interface. This feature allows for more flexible network configurations by allowing for separate networks for node-to-node and node-to-witness traffic.

2 Node Direct Connect

This Witness Traffic Separation provides the ability to directly connect vSAN data nodes in a 2 Node configuration. Traffic destined for the Witness host can be tagged on an alternative interface from the directly connected vSAN tagged interface.

In the illustration above, the configuration is as follows:

- Host 1

  - vmk0 - Tagged for Management Traffic

  - vmk1 - Tagged for Witness Traffic - This must* be done using esxcli vsan network ip add -i vmk1 -T=witness

  - vmk2 - Tagged for vSAN Traffic

  - vmk3 - Tagged for vMotion Traffic

- Host 2

  - vmk0 - Tagged for Management Traffic

  - vmk1 - Tagged for Witness Traffic - This must* be done using esxcli vsan network ip add -i vmk1 -T=witness

  - vmk2 - Tagged for vSAN Traffic

  - vmk3 - Tagged for vMotion Traffic

- vSAN Witness Appliance

  - vmk0 - Tagged for Management Traffic***

  - vmk1 - Tagged for vSAN Traffic****

*Enabling Witness Traffic is not available from the vSphere Web Client.

**Any VMkernel port not used for vSAN Traffic can be used for Witness Traffic. In a simpler configuration, the Management VMkernel interface (vmk0) could be tagged for Witness Traffic. The VMkernel port used must have connectivity to the vSAN Traffic tagged interface on the vSAN Witness Appliance.

***The vmk0 VMkernel Interface, which is used for Management traffic may also be used for vSAN Traffic. In this situation, vSAN Traffic must be unchecked from vmk1.

****The vmk1 VMkernel interface must not have an address that is on the same subnet as vmk0. Because vSAN uses the default TCP/IP stack, in cases where vmk0 and vmk1 are on the same subnet, traffic will use vmk0 rather than vmk1. This is detailed in KB 2010877. vmk1 should be configured with an address on a different subnet than vmk0.
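A quick way to sanity-check planned addressing before configuring the appliance is to compare the networks the two interfaces fall into. The sketch below uses Python's standard `ipaddress` module. The addresses and /24 prefixes are illustrative assumptions, not values mandated by this guide.

```python
import ipaddress


def same_subnet(vmk_a, vmk_b):
    """Return True if two interface addresses in CIDR notation share a subnet."""
    net_a = ipaddress.ip_interface(vmk_a).network
    net_b = ipaddress.ip_interface(vmk_b).network
    return net_a == net_b


# Hypothetical witness appliance addressing: vmk0 and vmk1 on different subnets
print(same_subnet("192.168.109.23/24", "192.168.110.23/24"))
# A layout to avoid: vmk0 and vmk1 on the same subnet
print(same_subnet("192.168.109.23/24", "192.168.109.24/24"))
```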

The ability to connect 2 Nodes directly removes the requirement for a high speed switch. This design can be significantly more cost effective when deploying tens or hundreds of 2 Node clusters.

vSAN 2 Node Direct Connect was announced with vSAN 6.5 and is available with vSAN 6.5 or higher, as well as with vSAN 6.2*.

*6.2 using vSphere 6.0 Patch 3 or higher without an RPQ

vSAN File services support for 2-node cluster

File services can be used in vSAN stretched clusters as well as vSAN 2-Node topologies, which can make them ideal for edge locations that also need a file server. File services in vSAN 7 Update 2 support Data-in-Transit encryption, as well as the space reclamation technique known as UNMAP. File services for vSAN 7 Update 2 have a snapshotting mechanism for point-in-time recovery of files. This mechanism, available through API, allows our backup partners to build applications to protect file shares in new and interesting ways. Finally, vSAN 7 Update 2 optimizes some of the metadata handling and data path for more efficient transactions, especially with small files. For more details, refer to the Stretched Cluster Guide.

vSAN 8 using Express Storage architecture does not support vSAN File services for a 2-node cluster.

Nested fault domains for 2 Node cluster

This new feature is built on the concept of fault domains, where each host or group of hosts can redundantly store VM object replicas. In a 2 Node cluster configuration, fault domains can be created on a per disk-group level for vSAN OSA and per disk using vSAN ESA, enabling disk-group or disk based data replication. This means each of the two data nodes can host multiple object replicas. Thanks to that secondary level of resilience, the 2 Node cluster can ensure data availability in the event of more than one device failure. For instance, one host failure and an additional device or disk group failure will not impact the data availability of the VMs that have a nested fault domain policy applied. The vSAN demo below shows the object replication process across disk groups and across hosts.

Please refer to the "Nested fault domains for 2 Node cluster" sub-section, under the Failure scenarios section in this document, for more details.

In vSAN 8 using Express Storage Architecture (ESA), changes to support Nested fault domains for 2-node clusters do not affect existing functionality. However, there are changes needed in the backend to extend support to use Storage Pool disks as fault domains for vSAN ESA.

To summarize:

- In vSAN OSA, object components are placed on available host disk groups to satisfy the policy

- In vSAN ESA, object components are placed on individual disks of a Storage Pool of a host to satisfy the policy

Prerequisites

VMware vCenter Server

A vSAN 2 Node Cluster configuration can be created and managed by a single instance of VMware vCenter.

A Witness Host

In a vSAN 2 Node Cluster, the Witness components are only ever placed on the vSAN Witness Host. Either a physical ESXi host or the vSAN Witness Appliance provided by VMware can be used as a vSAN Witness.

If a vSAN Witness Appliance is used for the Witness, it will not consume any of the customer’s vSphere or vSAN licenses. A physical ESXi host that is used as a vSAN Witness Host will need to be licensed accordingly, as this can still be used to provision virtual machines should a customer choose to do so.

The vSAN Witness appliance, when added as a host to vCenter will have a unique identifier in the vSphere UI to assist with identification. There will be two views of the host when using the witness appliance, one as a virtual machine and one as a vSphere host.

In vSphere 7 it is shown as a “blue” host, as highlighted below.

In vSphere 8 the witness host has a small eye on the host icon.

It is important that the vSAN Witness Host is NOT added to the vSAN cluster. The vSAN Witness Host is selected during the creation of a vSAN 2 Node Cluster. A vSAN Witness Host, regardless of whether it is a Physical host or a vSAN OSA or ESA Witness Appliance, cannot be added to a vSphere Cluster.

Note: This is only visible when the vSAN Witness Appliance is deployed. If a physical host is used as a vSAN Witness Host, then it does not change its appearance in the web client. A dedicated vSAN Witness Host is required for each 2 Node Cluster.

Networking and Latency Requirements

When vSAN is deployed in a 2 Node Cluster, there are certain networking requirements that must be adhered to.

Layer 2 and Layer 3 Support

Both Layer 2 (same subnet) and Layer 3 (routed) configurations are used in a recommended vSAN 2 Node Cluster deployment.

- VMware recommends that vSAN communication between the vSAN nodes be over L2.

- VMware recommends that vSAN communication between the vSAN nodes and the Witness Host be:

  - Layer 2 for configurations with the Witness Host in the same site

  - Layer 3 for configurations with the Witness Host in an alternate site.

vSAN traffic between nodes is multicast for versions 6.5 and previous, while unicast is used for version 6.6 or later. Witness traffic between each node in a 2 Node cluster and the Witness Host is unicast.

vSAN Node to Witness Network Latency

This refers to the communication between vSAN hosts and the Witness Host/Site.

In typical 2 Node configurations, such as Remote Office/Branch Office deployments, this latency or RTT is supported up to 500msec (250msec one-way).

The latency to the Witness Host is dependent on the number of objects in the cluster.

vSAN Node to Witness Network Bandwidth

Bandwidth between vSAN Nodes hosting VM objects and the Witness Host is dependent on the number of objects residing on vSAN. It is important to size data site to witness bandwidth appropriately for both availability and growth. A standard rule is 2Mbps for every 1000 components on vSAN. Because vSAN nodes have a maximum number of 9000 components per host, the maximum bandwidth requirement from a 2 Node cluster to the Witness Host supporting it, is 18Mbps.
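The rule of thumb above is easy to apply programmatically. The following is a minimal sketch. The function name is hypothetical and the constants come directly from the paragraph above.

```python
MAX_COMPONENTS_PER_HOST = 9000  # per-host component maximum stated above


def witness_bandwidth_rule_mbps(num_components):
    """Rule of thumb: 2 Mbps for every 1,000 components on vSAN."""
    return num_components / 1000 * 2


print(witness_bandwidth_rule_mbps(MAX_COMPONENTS_PER_HOST))  # upper bound for a 2 Node cluster
print(witness_bandwidth_rule_mbps(250))                      # a small branch-office cluster
```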

Please refer to the Design Considerations section of this guide for further details on how to determine bandwidth requirements.

Inter-Site MTU Consistency

Knowledge Base Article 2141733 details a situation where data nodes have an MTU of 9000 (Jumbo Frames) and the vSAN Witness Host has an MTU of 1500. The vSAN Health Check looks for a uniform MTU size across all VMkernel interfaces that are tagged for traffic related to vSAN, and reports any inconsistencies. It is important to maintain a consistent MTU size across all vSAN VMkernel interfaces on data nodes and the vSAN Witness Host in a vSAN 2 Node cluster to prevent traffic fragmentation.

As KB 2141733 indicates, the corrective actions are either to reduce the MTU from 9000 on the data node VMkernel interfaces, or to increase the MTU value on the vSAN Witness Host's VMkernel interface that is tagged for vSAN Traffic. Either is an acceptable corrective action.

The placement of the vSAN Witness Host will likely be the deciding factor in which configuration will be used. Network capability, control, and cost to/from the vSAN Witness Host as well as overall performance characteristics on data nodes are items to consider when making this design decision.

In situations where the vSAN Witness Host VMkernel interface tagged for vSAN traffic is not configured to use Jumbo Frames (or cannot due to underlying infrastructure), VMware recommends that all vSAN VMkernel interfaces use the default MTU of 1500.

As a reminder, there is no requirement to use Jumbo Frames with VMkernel interfaces used for vSAN.
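The consistency check described in this section amounts to confirming that every vSAN-tagged VMkernel interface reports the same MTU. The sketch below illustrates that idea only. It is not the vSAN Health Check implementation, and the interface labels are hypothetical.

```python
def mtu_report(vmk_mtus):
    """Return (is_consistent, distinct_mtus) for vSAN-tagged interfaces.

    vmk_mtus maps a hypothetical label such as "node1:vmk2" to its MTU.
    """
    distinct = sorted(set(vmk_mtus.values()))
    return len(distinct) <= 1, distinct


# Data nodes using Jumbo Frames while the witness uses the default MTU
mtus = {"node1:vmk2": 9000, "node2:vmk2": 9000, "witness:vmk1": 1500}
consistent, values = mtu_report(mtus)
print(consistent, values)
```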

Multiple vSAN Witness Hosts sharing the same VLAN

For customers who have implemented multiple 2 Node vSAN deployments, a common question is whether the Witness traffic from each of the remote sites requires its own VLAN.

The answer is no.

Multiple 2 Node vSAN deployments can send their witness traffic on the same shared VLAN.

Configuration Minimums and Maximums

Virtual Machines Per Host

Witness Host

There is a maximum of 1 vSAN Witness Host per vSAN 2 Node Cluster.

The vSAN Witness Host requirements are discussed in the Design Considerations section of this guide.

VMware provides a fully supported vSAN Witness Appliance, in the Open Virtual Appliance (OVA) format. This is for customers who do not wish to dedicate a physical ESXi host as the witness. This OVA is essentially a pre-licensed ESXi host running in a virtual machine, and can be deployed on a physical ESXi host at the 3rd site or host.

vSAN Storage Policies

Number of Failures To Tolerate (FTT) - Pre-vSAN 6.6

Primary Number of Failures To Tolerate (PFTT) - vSAN 6.6 and forward

The FTT/PFTT policy setting has a maximum of 1 for objects.

In Pre-vSAN 6.6 2 Node Clusters FTT may not be greater than 1. In vSAN 6.6 or higher 2 Node Clusters, PFTT may not be greater than 1. This is because 2 Node Clusters are comprised of 3 Fault Domains.

Failure Tolerance Method (FTM)

Failure Tolerance Method rules provide object protection with RAID-1 (Mirroring). Mirroring is the only FTM rule that can be satisfied, due to the 3 fault domains present. This means that data is Mirrored across both sites.
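The limit of 1 follows from the number of fault domains. As background that is not stated in this section, RAID-1 mirroring that tolerates n failures needs 2n + 1 fault domains. The sketch below applies that rule, and the function name is hypothetical.

```python
def max_mirroring_ftt(fault_domains):
    """Largest FTT that RAID-1 mirroring can satisfy, given 2n + 1 fault domains per n failures."""
    return max((fault_domains - 1) // 2, 0)


# A 2 Node Cluster has 3 fault domains: two data nodes plus the witness
print(max_mirroring_ftt(3))
```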

Affinity

Affinity rules are used when the PFTT rule value is 0. This rule has 2 values, Preferred or Secondary. This determines which site an Affinity based vmdk would reside on.

Other Policy Rules

Other policy settings are not impacted by deploying vSAN in a 2 Node Cluster configuration and can be used as per a non-stretched vSAN cluster.

Fault Domains

Design Considerations

Cluster Compute Resource Utilization

For full availability, VMware recommends that customers should be running at 50% of resource consumption across the vSAN 2 Node Cluster. In the event of a node failure, all of the virtual machines could be run on the surviving node.

VMware understands that some customers will want to run levels of resource utilization higher than 50%. While it is possible to run at higher utilization in each site, customers should understand that in the event of failure, not all virtual machines will be restarted on the surviving node.
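The 50% guideline can be checked with simple arithmetic: the combined consumption of both nodes must fit on a single node. The sketch below assumes two identically sized nodes and one resource dimension. The function name and figures are hypothetical.

```python
def survives_node_failure(node_capacity, node1_used, node2_used):
    """Return True if all VMs from both nodes fit on one surviving node."""
    return node1_used + node2_used <= node_capacity


# Hypothetical memory figures in GB for two 512 GB nodes
print(survives_node_failure(512, 250, 250))  # under 50% each: everything restarts
print(survives_node_failure(512, 350, 300))  # over 50%: some VMs will not restart
```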

| vSAN Version | Protection | FTT/PFTT | FTM | SFTT | Capacity Required in Preferred Site | Capacity Required in Secondary Site | Capacity Requirement |
|---|---|---|---|---|---|---|---|
| 6.1 and higher | Across Sites Only | 1 | Mirroring | NA | 100% | 100% | 200% |

Network Design Considerations

The Role of vSAN Heartbeats

Bandwidth Calculation

As stated in the requirements section, the bandwidth requirement between the two main sites is dependent on workload and in particular the number of write operations per ESXi host. Other factors such as read locality not in operation (where the virtual machine resides on one site but reads data from the other site) and rebuild traffic, may also need to be factored in.

Requirements Between 2 Node vSAN and the Witness Site

Because hosts designated as a vSAN Witness Host do not maintain any VM data, only component metadata, the requirements are much smaller than those of the backend vSAN data network.

Virtual Machines on vSAN are comprised of multiple objects, which can potentially be split into multiple components, depending on factors like policy and size. The number of components on vSAN has a direct impact on the bandwidth requirement between the data sites and the witness.

The required bandwidth between the vSAN Witness Host and each node is equal to ~1138 B x Number of Components /5s

1138 B x NumComp / 5 seconds

The 1138 B value comes from operations that occur when the Preferred Site goes offline, and the Secondary Site takes ownership of all of the components.

When the Preferred Node goes offline, the Secondary Node becomes the Primary Node. The vSAN Witness Host sends updates to the new Primary node followed by the new Primary node replying to the vSAN Witness Host as ownership is updated.

The 1138 B requirement for each component comes from a combination of a payload from the vSAN Witness Host to the backup agent, followed by metadata indicating that the Preferred Site has failed.

In the event of a Preferred Node failure, the link must be large enough to allow for the cluster ownership to change, as well as ownership of all of the components, within 5 seconds.

Witness to Site Examples

Workload 1

With a VM being comprised of

- 3 objects {VM namespace, vmdk (under 255GB), and vmSwap}

- Failure to Tolerate of 1 (FTT=1)

- Stripe Width of 1

Approximately 25 VMs with the above configuration would require the vSAN Witness Host to contain 75 components.

To successfully satisfy the Witness bandwidth requirements for a total of 75 components on vSAN, the following calculation can be used:

Converting Bytes (B) to Bits (b), multiply by 8:

B = 1138 B * 8 * 75 / 5s = 136,560 Bits per second = 136.56 Kbps

VMware recommends adding a 10% safety margin and rounding up.

B + 10% = 136.56 Kbps + 13.656 Kbps = 150.216 Kbps, or approximately 0.150 Mbps

With the 10% buffer included, a standard rule can be stated that for every 1,000 components, 2 Mbps is appropriate.
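The calculation generalizes to any component count. The following is a minimal sketch of the formula from this section (1138 B per component, converted to bits, spread over 5 seconds, plus a 10% margin). The function and constant names are hypothetical.

```python
BYTES_PER_COMPONENT = 1138  # per-component payload, from the formula above
WINDOW_SECONDS = 5          # ownership must change within 5 seconds


def witness_bandwidth_kbps(num_components, margin=0.10):
    """Required witness bandwidth in Kbps, including a safety margin."""
    bits_per_second = BYTES_PER_COMPONENT * 8 * num_components / WINDOW_SECONDS
    return bits_per_second * (1 + margin) / 1000


for components in (75, 1000):
    print(components, round(witness_bandwidth_kbps(components), 3))
```

For 1,000 components the result is just over 2,000 Kbps, which is where the 2 Mbps per 1,000 components rule comes from.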

Workload 2

With a VM being comprised of

- 3 objects {VM namespace, vmdk (under 255GB), and vmSwap}

- Failure to Tolerate of 1 (FTT=1)

- Stripe Width of 2

- 2 Snapshots per VM

Approximately 25 VMs with the above configuration would require up to 250 components to be stored on the vSAN Witness Host.

To successfully satisfy the Witness bandwidth requirements for 250 components on vSAN, the resulting calculation is:

B = 1138 B * 8 * 250 / 5s = 455,200 Bits per second = 455.2 Kbps

B + 10% = 455.2 Kbps + 45.52 Kbps = 500.7 Kbps

Using the general equation of 2Mbps for every 1,000 components, (NumComp/1000) X 2Mbps, it can be seen that 250 components do in fact require 0.5 Mbps.

Using the vSAN Witness Appliance as a vSAN Witness Host

Using a Physical Host as a vSAN Witness Host

Physical ESXi host used as a vSAN Witness Host:

When using a physical ESXi host as a vSAN Witness Host, the VMkernel interface that will be tagged for "vsan" traffic must have connectivity to the vSAN Data Node VMkernel interface that is tagged for "witness" traffic. This could be over Layer 3, or even over Layer 2 if desired.

If using a physical host as the vSAN Witness Host there are some requirements to consider.

| Requirements | |
|---|---|
| Licensing | If using a physical host as a vSAN Witness Host, it must be licensed with a valid vSphere license. This does not require the same licensed edition as the vSAN Cluster it is supporting. |
| vSphere Build | If using a physical host as a vSAN Witness Host, it must be running the same build of vSphere as the 2 Node Cluster that it is participating with. |
| Compute & Memory | The minimum specifications required for ESXi meet the minimum requirements for use as a vSAN Witness Host. Minimum requirements for vSphere are dependent on the build of vSphere and can be found in the documentation section for each edition in VMware Documentation: https://www.vmware.com/support/pubs/ |
| Storage | Storage requirements do not change for a physical host being used as a vSAN Witness Host in comparison to the vSAN Witness Appliance. An ESXi boot device, a cache device, and one or more capacity devices are still required. |
| Other Workloads | If using a physical host as a vSAN Witness Host, it may run other workloads. Because the physical vSAN Witness Host is external to the vSAN Cluster it is contributing to, those workloads will not be part of the vSAN Cluster. The vSAN Disk Group, and the disks it includes, may not be used for those workloads. *Important consideration: Multiple vSAN Witness Appliances can run on a single physical host. Using vSAN Witness Appliances is typically more cost-effective than dedicating physical hosts for the purpose of meeting the vSAN Witness Host requirement. |

Using the vSAN witness appliance or a physical host as a shared witness

Overview

VMware vSAN 2-node architecture has always been a perfect solution for organizations that have many small branch offices or retail sites. It is also very beneficial for small businesses and startups who want to avoid the significant up-front costs associated with storage hardware. The shared witness host appliance additionally reduces the amount of physical resources needed at the central site, resulting in a greater level of savings for a large number of 2-node branch office deployments. For example, a topology with 30 remote sites would have previously needed 240 GB of RAM at the central site for the witness host appliance, while using the shared witness model would require 16 GB. A single shared witness can be shared across a maximum of 64 2-node clusters, supporting up to 64,000 components, and requires at least 6 CPUs and a 32 GB memory allocation for the witness. Additionally, a maximum of 1,000 components per 2-node cluster is supported. Built-in checks monitor whether the maximum number of components for a witness in a 2-node cluster has been reached; if it has, they do not allow the witness to be converted into a shared witness.
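The limits quoted above can be checked against a planned deployment before any clusters are assigned. The sketch below is an illustration only and is not the built-in check. The function name and the per-cluster component counts are hypothetical.

```python
MAX_CLUSTERS = 64                  # 2-node clusters per shared witness
MAX_COMPONENTS_PER_CLUSTER = 1000  # components per 2-node cluster


def shared_witness_violations(components_per_cluster):
    """Check a list of per-cluster component counts against the stated limits."""
    problems = []
    if len(components_per_cluster) > MAX_CLUSTERS:
        problems.append(
            f"{len(components_per_cluster)} clusters exceeds the limit of {MAX_CLUSTERS}"
        )
    for index, count in enumerate(components_per_cluster):
        if count > MAX_COMPONENTS_PER_CLUSTER:
            problems.append(f"cluster {index} has {count} components")
    return problems


# Hypothetical plan: 30 remote sites with 750 components each
print(shared_witness_violations([750] * 30))
```

An empty list means the plan stays within both limits.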

Shared witnesses can be deployed in the same topology scenarios where a standard witness is deployed (e.g. running in a VCPP location). The shared witness can be either a virtual witness host appliance or a physical host.

New UI additions are created to support the shared witness enablement and there is a new health check to cover the non-ROBO cluster with a shared witness.

A couple of conditions should be met before you can enable the shared host appliance architecture:

• As of vSAN 7 U1, the witness node should be upgraded first (to maintain backward compatibility). Upgrading the witness can be accomplished through VUM but is not supported by vLCM at this time. As an alternative to upgrading, the witness can be replaced or redeployed. Please refer to the vSAN operations guide for the process of replacing a vSAN witness host.

A witness host can be upgraded to vSAN 7 U1 to become a shared witness, or a new host can be deployed and used as a shared witness. If you decide to upgrade your witness host, both the software and the disk format of the witness node should be upgraded before all the other nodes. Once the witness node is upgraded, it will be able to communicate with the rest of the nodes in the connected clusters, which might be at a lower version. The witness VM is backward compatible with previous v6 and v7 vSAN clusters, and you may upgrade the disk format version only after upgrading the vSAN witness VM first and then the vSAN cluster. When using a shared witness for vSAN 2-node clusters, the process for upgrading introduces a few additional considerations. See Upgrading 2-node vSAN Clusters from 6.7U3 to 7U1 with a Shared Witness Host for more information. Other design and operation changes for 2-node topologies can be found in the post: New Design and Operation Considerations for vSAN 2-Node Topologies.

• All vSAN clusters participating in a shared witness must use the new on-disk format (ODF) version associated with vSAN 7 U1 (v13).

For example, if you want to move all 30 of your existing 2-node clusters to a single shared witness, these are the basic steps to follow:

1. Upgrade the existing witness to version 7 Update 1 or deploy a new one of the same version.

2. Upgrade the existing clusters to version 7 Update 1.

3. Assign all clusters to the shared witness host or appliance. For OVF sizes and recommendations, you should consult the vSAN Witness Appliance Sizing section in this document.

Limitations

• A new witness OVA has been built to meet the increased CPU and memory configuration. A maximum of 1000 components per cluster has been introduced. Please refer to the vSAN Witness Appliance Sizing section in this guide for more details.

- Keep in mind, there is a special consideration when it comes to 2-node clusters with a shared witness host. The on-disk format upgrades in a vSAN environment with multiple 2-node clusters and a shared witness should only be performed once all the hosts sharing the same witness are running the same vSphere/vSAN version. IMPORTANT: Do NOT upgrade the on-disk format of your disks until all hosts sharing the same witness are upgraded to the same vSphere version. You can find more details in the following blog post - "Upgrading vSAN 2-node Clusters with a Shared Witness from 7U1 to 7U2".

• A witness node cannot convert to shared witness if the initial cluster is over the per-cluster component limit.

• Every new cluster using the shared witness option should be below the per-cluster component limit.

• Stretched cluster and data-in-transit encryption are not supported in vSAN 7 Update

vSAN Witness Host Networking Examples

In both options, either a physical ESXi host or a vSAN Witness Appliance may be used as the vSAN Witness Host or vSAN shared witness host.

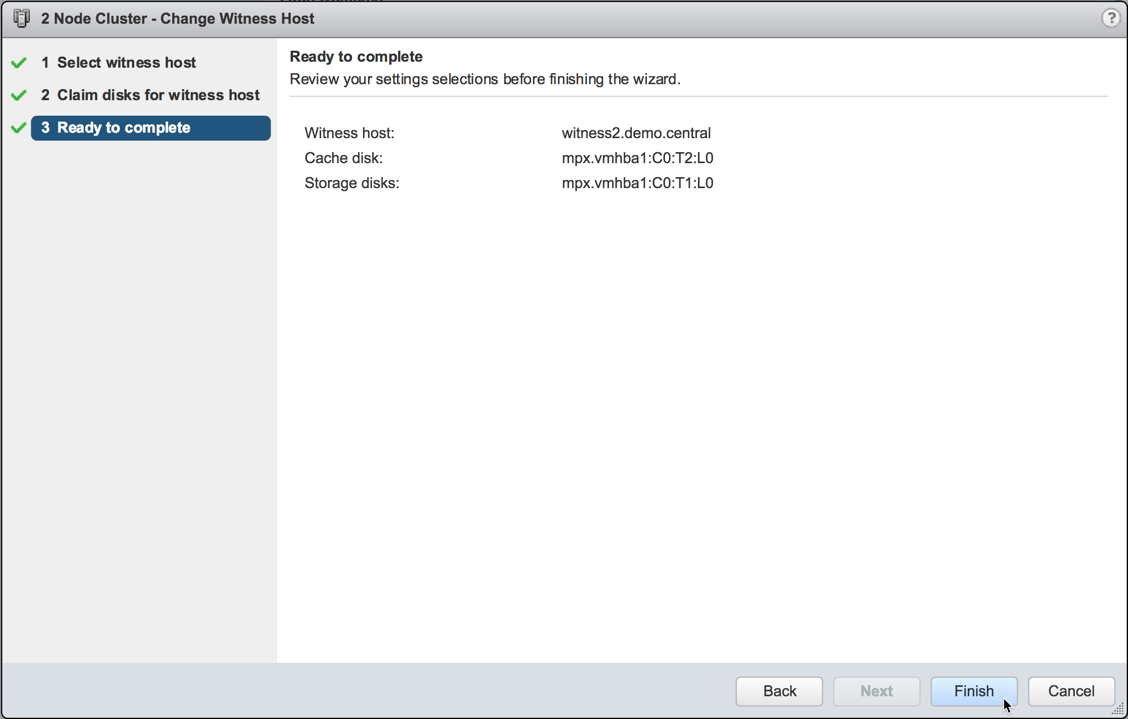

Option 1: 2 Node Configuration for Remote Office/Branch Office Deployment using Witness Traffic Separation with the vSAN Witness in a central datacenter

In the use case of Remote Office/Branch Office (ROBO) deployments, it is common to have 2 Node configurations at one or more remote offices. This deployment model can be very cost competitive when running a limited number of virtual machines that no longer require 3 nodes for vSAN.

Fig.1 Dedicated witness host.


Fig.1 Shared witness host.

Management traffic for the data nodes is typically automatically routed to the vCenter server at the central data center. Because they reside in the same physical location, vSAN traffic networking between data nodes is consistent with that of a traditional vSAN cluster.

These vSAN Nodes are still required to communicate with the vSAN Witness Host residing in the central datacenter. Witness Traffic Separation allows for a separate VMkernel interface for "witness" traffic. This VMkernel interface must be able to communicate with the vSAN Witness Host.

In cases where the vSAN Witness Appliance uses the WitnessPG (vmk1) to communicate with vSAN Data Nodes over Layer 3, static routing will be required to ensure proper connectivity.

Adding static routes is achieved using the esxcfg-route -a command on the ESXi hosts and witness VM.

The below illustration shows a vSAN Witness Appliance with the Management (vmk0) and WitnessPg (vmk1) VMkernel interfaces on different networks. This is a typical configuration.

In the illustration above, the vSAN Witness Appliance in the central data center has a Management (vmk0) IP address of 192.168.109.23. This VMkernel interface will use the default gateway to communicate with vCenter Server. The WitnessPG VMkernel interface (vmk1) has an IP address of 192.168.110.23.

The vSAN 2 Node configuration has the Management (vmk0) IP addresses of 192.168.1.21 and 192.168.1.22 for Host 1 and Host 2 respectively. As these are the Management interfaces for these hosts, they will also use the default gateway to communicate with the vCenter Server. Traffic is tagged as "witness" for vmk1 in both Host 1 and Host 2 and is configured with IP addresses of 192.168.15.21 and 192.168.15.22 respectively.

Because vSAN based traffic (tagged as "vsan" or "witness") uses the default gateway, static routes must be used in this configuration. A static route is required on Host 1 and Host 2 so that their vmk1 VMkernel interfaces can properly connect to the WitnessPg VMkernel interface on the vSAN Witness Appliance. The routing command on Host 1 and Host 2 would look like this:

esxcfg-route -a 192.168.110.0/24 192.168.15.1

Additionally, a static route would need to be configured on the vSAN Witness Appliance as well:

esxcfg-route -a 192.168.15.0/24 192.168.110.1

*Note the address of x.x.x.1 is the gateway in each of these networks for this example. This may differ from your environment.
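The two routing commands above follow one pattern: each side adds a route to the other side's witness network through its own local gateway. The following is a minimal Python sketch of that pattern using the example addresses from this section. The function name `static_route_command` is hypothetical, and the sketch assumes /24 networks whose gateway is the x.x.x.1 address, as in this example; neither assumption necessarily holds in your environment.

```python
import ipaddress


def static_route_command(local_ip: str, remote_ip: str, prefix_len: int = 24) -> str:
    """Build the esxcfg-route command a host at local_ip needs in order to
    reach the network that contains remote_ip.

    Assumption (taken from the example above): the gateway is the x.x.x.1
    address of the local network. Adjust this for your environment.
    """
    local_net = ipaddress.ip_interface(f"{local_ip}/{prefix_len}").network
    remote_net = ipaddress.ip_interface(f"{remote_ip}/{prefix_len}").network
    gateway = local_net.network_address + 1  # e.g. 192.168.15.0 + 1 -> 192.168.15.1
    return f"esxcfg-route -a {remote_net} {gateway}"


# Data node vmk1 ("witness" traffic) towards the witness appliance vmk1 (WitnessPg)
host_cmd = static_route_command("192.168.15.21", "192.168.110.23")
# Witness appliance vmk1 (WitnessPg) towards the data node vmk1 network
witness_cmd = static_route_command("192.168.110.23", "192.168.15.21")
print(host_cmd)
print(witness_cmd)
```

The sketch only builds the command strings; it does not run them. Each command would still be entered on the corresponding ESXi host or witness VM.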

The below illustration shows a vSAN Witness Appliance with the Management (vmk0) and WitnessPg (vmk1) VMkernel interfaces on the same network. This is not a typical configuration but is supported if configured properly.

Notice that the WitnessPg (vmk1) VMkernel interface is NOT tagged with "vsan" traffic, but rather the Management (vmk0) VMkernel interface has both "Management" and "vsan" traffic tagged. In cases where vmk0 and vmk1 reside on the same network, a multi-homing issue will occur if the WitnessPg (vmk1) continues to have "vsan" traffic tagged.

The correct configuration in this situation is to untag "vsan" traffic from the WitnessPg (vmk1) VMkernel interface and tag the Management (vmk0) interface instead. This is also a supported configuration.

*Some concerns have been raised in the past about exposing the vSAN Data Network to the WAN in 2 Node vSAN Clusters. Witness Traffic Separation mitigates these concerns because only vSAN Object metadata traverses the WAN to the vSAN Witness Host.

Option 2: 2 Node Configuration for Remote Office/Branch Office Deployment using Witness Traffic Separation with the vSAN Witness in the same location

Some VMware customers that have deployed 2 Node vSAN in remote office/branch office locations have decided to include a 3rd node for use as a vSAN Witness Host, or as a location to run the vSAN Witness Appliance.

Other customers have asked, "why?" A 3rd host running locally could be a less capable host that has enough resources to run a minimal workload that includes the vSAN Witness Host role, possibly local backups, as well as other resources such as networking based virtual machines.

In the below illustration, the 2 Node vSAN Cluster Hosts, Host 1 and Host 2, are both connected to the same Management network as the physical host that is running the vSAN Witness Appliance.

In this configuration, each vSAN Data Node has a dedicated VMkernel interface tagged for "witness" traffic on the 192.168.15.x network. The vSAN Witness Appliance has the WitnessPg (vmk1) VMkernel interface tagged for "vsan" traffic, also on the 192.168.15.x network. In this configuration, static routing is not required because Layer 2 networking is used.

This is a supported configuration.

In the below illustration, the 2 Node vSAN Cluster Hosts, Host 1 and Host 2, are both connected to the same Management network as the physical host that is running the vSAN Witness Appliance.

In this configuration, each vSAN Data Node's Management (vmk0) VMkernel interface is tagged for "witness" traffic on the 192.168.1.x network. The vSAN Witness Appliance has the Management (vmk0) VMkernel interface tagged for "vsan" traffic, also on the 192.168.1.x network. In this configuration, static routing is not required because Layer 2 networking is in use.

This is a supported configuration.

vSAN Witness Appliance Sizing

vSAN Witness Appliance Size

The vSAN witness appliance OVFs are also used for shared witness. When using a vSAN Witness Appliance, the size is dependent on the configurations and this is decided during the deployment process. vSAN Witness Appliance deployment options are hardcoded upon deployment and there is typically no need to modify these.

Compute Requirements

The vSAN Witness Appliance uses a different number of vCPUs depending on the configuration.

Memory Requirements

Memory requirements are dependent on the number of components.

Storage Requirements

Cache Device Size: Each vSAN Witness Appliance deployment option has a cache device size of 10GB. This is sufficient for each option, up to the maximum of 64,000 components. In a typical vSAN deployment, the cache device must be a Flash/SSD device. Because the vSAN Witness Appliance has virtual disks, the 10GB cache device is configured as a virtual SSD. There is no requirement for this device to reside on a physical flash/SSD device. Traditional spinning drives are sufficient.

Capacity Device Sizing: First consider that a capacity device can support up to 21,000 components. Also, consider that a vSAN 2 Node Cluster can support a maximum of 27,000 components. Each Witness Component is 16MB. As a result, the largest capacity device that can be used for storing Witness Components approaches 350GB.
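The arithmetic behind the 350GB figure can be checked with a short Python sketch. The component size and per-device limit come from the paragraph above; the function name `capacity_needed_gb` and the choice of 1GB = 1024MB are assumptions made for illustration.

```python
WITNESS_COMPONENT_MB = 16                 # size of one witness component (from the text)
COMPONENTS_PER_CAPACITY_DEVICE = 21_000   # per-device component limit (from the text)


def capacity_needed_gb(components: int, component_mb: int = WITNESS_COMPONENT_MB) -> float:
    """Return the space consumed by witness components in GB.

    Assumption: 1 GB is treated as 1024 MB. With 1000 MB per GB the result
    for a full device would be 336 GB instead.
    """
    return components * component_mb / 1024


# 21,000 components x 16MB = 336,000MB on a fully populated capacity device
full_device_gb = capacity_needed_gb(COMPONENTS_PER_CAPACITY_DEVICE)
print(f"{full_device_gb:.1f} GB")
```

Under either definition of a gigabyte the result stays below 350GB, which is consistent with the 350GB capacity devices listed in the summary that follows.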

vSAN Witness Appliance Deployment Sizes & Requirements Summary

Dedicated witness

• Tiny - Supports up to 10 VMs/750 Witness Components - Typical for 2 Node vSAN deployments

o Compute - 2 vCPUs

o Memory - 8GB vRAM

o ESXi Boot Disk - 12GB Virtual HDD

o Cache Device - 10GB Virtual SSD

o Capacity Device - 15GB Virtual HDD

• Medium - Supports up to 500 VMs/21,000 Witness Components - Alternative for 2 Node vSAN deployments with a significant number of components

o Compute - 2 vCPUs

o Memory - 16GB vRAM

o ESXi Boot Disk - 12GB Virtual HDD

o Cache Device - 10GB Virtual SSD

o Capacity Device - 350GB Virtual HDD

• Large - Supports over 500 VMs/45,000 Witness Components - Unnecessary for 2 Node vSAN deployments.

o Compute: 2 vCPUs

o Memory - 32 GB vRAM

o ESXi Boot Disk - 12GB Virtual HDD

o Cache Device - 10GB Virtual SSD

o Capacity Devices - 3x350GB Virtual HDD

8GB ESXi Boot Disk*, one 10GB SSD, three 350GB HDDs

Supports a maximum of 45,000 witness components

Shared witness OVA file for vSAN 7 Update 1

An extra-large option is introduced to accommodate the shared witness appliance's increased number of components.

1000 components per 2-node cluster.

• Tiny - Supports up to 10 VMs/750 Witness Components

o Compute - 1 vCPU

o Memory - 8GB vRAM

• Medium - Supports up to 500 VMs/21,000 Components

o Up to 21 2-node clusters

o Compute - 2 vCPUs

o Memory - 16GB vRAM

• Large - Supports over 500 VMs/ 24,000 Components

o Up to 24 2-node clusters

o Compute: 2 vCPUs

o Memory - 32 GB vRAM

• Extra-large - Supports over 500 VMs/ 64,000 Components

o Up to 64 2-node clusters

o Compute: 6 vCPUs

o Memory - 32 GB vRAM
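The relationship between the number of 2-node clusters and the shared witness size can be sketched in Python. The component limits and the 1000-components-per-cluster figure come from the list above. The function name `pick_shared_witness_size` and the decision to budget the full 1000 components for every cluster are assumptions; a real deployment may consume far fewer components per cluster.

```python
from typing import Optional

COMPONENTS_PER_CLUSTER = 1000  # per 2-node cluster limit for a shared witness

# (size name, maximum witness components), smallest first, from the list above
SHARED_WITNESS_SIZES = [
    ("Tiny", 750),
    ("Medium", 21_000),
    ("Large", 24_000),
    ("Extra-large", 64_000),
]


def pick_shared_witness_size(cluster_count: int) -> Optional[str]:
    """Return the smallest listed size that covers cluster_count 2-node
    clusters when each cluster is budgeted the full 1000 components.
    Returns None when no listed size is large enough."""
    if cluster_count < 1:
        raise ValueError("cluster_count must be at least 1")
    required = cluster_count * COMPONENTS_PER_CLUSTER
    for name, max_components in SHARED_WITNESS_SIZES:
        if required <= max_components:
            return name
    return None


for count in (21, 24, 64, 65):
    print(count, pick_shared_witness_size(count))
```

With this worst-case budgeting the Tiny size is never selected, because a single cluster at the 1000-component limit already exceeds 750 components.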

vSAN Witness Host Versioning & Updating

vSAN Witness Appliance Version

A vSAN Witness Appliance is provided with each release of vSAN. Upon initial deployment of the vSAN Witness Appliance, it is required to be the same as the version of vSAN. The vSphere host that the vSAN Witness Appliance runs on is not required to be the same version.

Example 1: A new vSAN 6.6.1 deployment

- Requires vSphere 6.5 Update 1 hosts

- Requires vSAN 6.6.1 based vSAN Witness Appliance

- Underlying host can be vSphere 5.5 or higher

Example 2: A new vSAN 6.7 deployment

- Requires vSphere 6.7 hosts

- Requires vSAN 6.7 based vSAN Witness Appliance

- Underlying host can be vSphere 5.5 or higher, but the CPU must be supported by vSphere 6.7

This is because the vSAN Cluster is vSphere 6.7 based and the vSAN Witness Appliance is running vSphere 6.7.

When upgrading the vSAN Cluster, upgrade the vSAN Witness Appliance in the same fashion as upgrading vSphere. This keeps the versions aligned. Be certain that the underlying hosts and the vCenter Server version support the version of vSAN being upgraded to.

Successful Example: Upgrade to vSAN 6.6 from vSAN 6.2

- Upgrade vCenter to 6.5 Update 1 using the VCSA embedded update mechanism

- Upgrade vSAN hosts to vSphere 6.5 Update 1 using VMware Update Manager

- Upgrade vSAN Witness Host using VMware Update Manager.

Unsuccessful Example 1: Upgrade to vSAN 6.7 from vSAN 6.6

- Do not upgrade vCenter to 6.7

- Upgrade vSAN hosts to vSphere 6.7 using VMware Update Manager

- Do not upgrade vSAN Witness Host

Unsuccessful Example 2: Upgrade to vSAN 6.7 from vSAN 6.6

- Upgrade vCenter to 6.7 using the VCSA embedded update mechanism

- Upgrade vSAN hosts to vSphere 6.7 using VMware Update Manager

- Do not upgrade vSAN Witness Host

Unsuccessful Example 3: Upgrade to vSAN 6.7 from vSAN 6.6

- Upgrade vCenter to 6.7 using the VCSA embedded update mechanism

- Upgrade vSAN hosts to vSphere 6.7 using VMware Update Manager

- Attempt to upgrade a vSAN Witness Host that is running a vSphere 5.5-6.5 host with CPUs that are not supported by vSphere 6.7
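The successful and unsuccessful examples above can be read as three checks: vCenter is at least as new as the vSAN hosts, the vSAN Witness Host matches the vSAN hosts, and the CPU under the witness is supported by the target release. The Python sketch below encodes only those three checks. The `UpgradePlan` type and `plan_problems` function are hypothetical, and the sketch is a simplification rather than a replacement for the product interoperability information.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class UpgradePlan:
    vcenter: Tuple[int, ...]             # vCenter version after the upgrade, e.g. (6, 7)
    data_hosts: Tuple[int, ...]          # vSphere version on the vSAN data nodes
    witness: Tuple[int, ...]             # vSphere version on the vSAN Witness Host
    witness_cpu_supported: bool = True   # CPU under the witness supports the target release


def plan_problems(plan: UpgradePlan) -> List[str]:
    """Return the reasons a plan fails these checks; an empty list means it passes."""
    problems = []
    if plan.vcenter < plan.data_hosts:
        problems.append("vCenter is older than the vSAN hosts")
    if plan.witness != plan.data_hosts:
        problems.append("vSAN Witness Host version does not match the vSAN hosts")
    if not plan.witness_cpu_supported:
        problems.append("witness CPU is not supported by the target release")
    return problems


successful = UpgradePlan(vcenter=(6, 5, 1), data_hosts=(6, 5, 1), witness=(6, 5, 1))
unsuccessful_1 = UpgradePlan(vcenter=(6, 5), data_hosts=(6, 7), witness=(6, 5))
unsuccessful_2 = UpgradePlan(vcenter=(6, 7), data_hosts=(6, 7), witness=(6, 5))
unsuccessful_3 = UpgradePlan(vcenter=(6, 7), data_hosts=(6, 7), witness=(6, 5),
                             witness_cpu_supported=False)

for plan in (successful, unsuccessful_1, unsuccessful_2, unsuccessful_3):
    print(plan_problems(plan))
```

Versions are compared as tuples, so (6, 5) sorts before (6, 7) without any string parsing.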

Cluster Settings

Cluster Settings – vSphere HA

Certain vSphere HA behaviors have been modified especially for vSAN. It checks the state of the virtual machines on a per virtual machine basis. vSphere HA can make a decision on whether a virtual machine should be failed over based on the number of components belonging to a virtual machine that can be accessed from a particular partition.

When vSphere HA is configured on a vSAN 2 Node Cluster, VMware recommends the following:

| Setting | Recommendation |
| --- | --- |
| vSphere HA | Turn on |
| Host Monitoring | Enabled |
| Host Hardware Monitoring – VM Component Protection: “Protect against Storage Connectivity Loss” | Deactivated (default) |
| Virtual Machine Monitoring | Customer Preference – Deactivated by default |
| Admission Control | Set to 50% |
| Host Isolation Response | Deactivated |
| Datastore Heartbeats | “Use datastores only from the specified list”, but do not select any datastores from the list. This deactivates Datastore Heartbeats |

Note that a 2-node Direct Connect configuration is a special case. In this situation, it is impossible to configure a valid external isolation address within the vSAN network. VMware recommends disabling the isolation response for a 2-node Direct Connect configuration. If however, a host becomes isolated, vSAN has the ability to halt VMs which are "ghosted" (no access to any of the components). This will allow for vSphere HA to safely restart the impacted VMs, without the need to use the Isolation Response.

Using vSphere HA and performing Maintenance

When attempting to perform maintenance on a 2-node vSAN Cluster and vSphere HA is enabled, hosts will not go into maintenance mode automatically. This is because putting one of the 2 hosts into maintenance mode will violate the vSphere HA requirement of having a full host available in the event of a failure.

The correct method to perform maintenance in this situation is to either 1) put the host to be worked on in maintenance mode manually or 2) deactivate vSphere HA during the maintenance operation. This is addressed in KB Article 53682. This is not a vSAN centric issue but affects all 2 host vSphere cluster configurations.

Turn on vSphere HA

Admission Control

Admission control ensures that HA has sufficient resources available to restart virtual machines after a failure. As a full site failure is one scenario that needs to be taken into account in a resilient architecture, VMware recommends enabling vSphere HA Admission Control. Availability of workloads is the primary driver for most stretched cluster environments. Sufficient capacity must therefore be available for a host failure. Since virtual machines could be equally divided across both hosts in a vSAN 2 Node Cluster, and to ensure that all workloads can be restarted by vSphere HA, VMware recommends configuring the admission control policy to 50 percent for both memory and CPU.
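The 50 percent figure follows from reserving one host's worth of capacity in a cluster of two. A minimal Python sketch of that reasoning follows. The function names are hypothetical, and the sketch assumes both hosts are identically sized and that demand can be expressed as a single number per resource (for example GB of memory).

```python
def reserved_percent(host_count: int, failures_to_cover: int = 1) -> float:
    """Share of total cluster capacity that must stay free to absorb the
    given number of host failures, assuming identically sized hosts."""
    if not 0 < failures_to_cover < host_count:
        raise ValueError("failures_to_cover must be between 1 and host_count - 1")
    return 100.0 * failures_to_cover / host_count


def survives_host_failure(host_capacity: float, demand_host1: float, demand_host2: float) -> bool:
    """True if a single host can carry the combined demand of both hosts."""
    return demand_host1 + demand_host2 <= host_capacity


print(reserved_percent(2))                      # two hosts, one failure to cover
print(survives_host_failure(256, 100, 120))     # combined demand fits on one host
print(survives_host_failure(256, 150, 150))     # combined demand does not fit
```

The same check applies separately to CPU and to memory, which is why the recommendation sets both to 50 percent.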

VMware recommends using the percentage-based policy as it offers the most flexibility and reduces operational overhead. For more details about admission control policies and the associated algorithms, refer to the vSphere 7.0 Availability Guide.

The following screenshot shows a vSphere HA cluster configured with admission control enabled using the percentage-based admission control policy set to 50%.

When you reserve capacity for your vSphere HA cluster with an admission control policy, this setting must be coordinated with the corresponding Primary level of failures to tolerate policy setting in the vSAN rule set. It must not be lower than the capacity reserved by the vSphere HA admission control setting. For example, if the vSAN ruleset allows for only two failures, the vSphere HA admission control policy must reserve capacity that is equivalent to only one or two host failures.

Host Hardware Monitoring – VM Component Protection

vSphere 6.0 introduced a new enhancement to vSphere HA called VM Component Protection (VMCP) to allow for an automated fail-over of virtual machines residing on a datastore that has either an “All Paths Down” (APD) or a “Permanent Device Loss” (PDL) condition.

A PDL, permanent device loss condition, is a condition that is communicated by the storage controller to the ESXi host via a SCSI sense code. This condition indicates that a disk device has become unavailable and is likely permanently unavailable. When it is not possible for the storage controller to communicate back the status to the ESXi host, then the condition is treated as an “All Paths Down” (APD) condition.

In traditional datastores, APD/PDL on a datastore affects all the virtual machines using that datastore. However, for vSAN this may not be the case. An APD/PDL may only affect one or a few VMs, but not all VMs on the vSAN datastore. Also, in the event of an APD/PDL occurring on a subset of hosts, there is no guarantee that the remaining hosts will have access to all the virtual machine objects and be able to restart the virtual machine. Therefore, a partition may result in such a way that the virtual machine is not accessible on any partition.

Note that the VM Component Protection (VMCP) way of handling a failover is to terminate the running virtual machine and restart it elsewhere in the cluster. VMCP/HA cannot determine the cluster-wide accessibility of a virtual machine on vSAN, and thus cannot guarantee that the virtual machine will be able to restart elsewhere after termination. For example, there may be resources available to restart the virtual machine, but accessibility to the virtual machine by the remaining hosts in the cluster is not known to HA. For traditional datastores, this is not a problem, since we know host-datastore accessibility for the entire cluster, and by using that, we can determine if a virtual machine can be restarted on a host or not.

At the moment, it is not possible for vSphere HA to understand the complete inaccessibility vs. partial inaccessibility on a per virtual machine basis on vSAN; hence the lack of VMCP support by HA for vSAN.

VMware recommends leaving VM Component Protection (VMCP) deactivated.

Datastore for Heartbeating

Virtual Machine Response for Host Isolation

This setting determines what happens to the virtual machines on an isolated host, i.e. a host that can no longer communicate to other nodes in the cluster, nor is able to reach the isolation response IP address.

If there is no direct connection between the data nodes in the 2-node cluster, VMware recommends that the Response for Host Isolation be set to Power off and restart VMs.

The reason is that a clean shutdown is not possible on an isolated host, because access to the vSAN Datastore, and with it the ability to write to disk, is lost.

Note: A 2-node direct connect configuration is a special case. In this situation, it is impossible to configure a valid external isolation address within the vSAN network. VMware recommends disabling the isolation response for a 2-node direct connect configuration. If however, a host becomes isolated, vSAN has the ability to halt VMs which are "ghosted" (no access to any of the components). This will allow for vSphere HA to safely restart the impacted VMs, without the need to use the Isolation Response.

Advanced Options

When vSphere HA is enabled on a vSAN Cluster, it uses heartbeat mechanisms to validate the state of an ESXi host. Network heartbeating is the primary mechanism for HA to validate availability of the hosts.

If a host is not receiving any heartbeats, it uses a fail-safe mechanism to detect if it is merely isolated from its HA primary node or completely isolated from the network. It does this by pinging the default gateway.

In vSAN environments, vSphere HA uses the vSAN traffic network for communication. This is different from traditional vSphere environments where the management network is used for vSphere HA communication. However, even in vSAN environments, vSphere HA continues to use the default gateway on the management network for isolation detection responses. This should be changed so that the isolation response IP address is on the vSAN network, as this allows HA to react to a vSAN network failure.

In addition to selecting an isolation response address on the vSAN network, additional isolation addresses can be specified manually to enhance the reliability of isolation validation.

Note: When using vSAN 2 Node clusters in the same location, there is no need to have a separate das.isolationaddress for each of the hosts.
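The isolation address settings described above are expressed as vSphere HA advanced options. The Python sketch below only assembles the option names and values as a dictionary so the intent is easy to review; it does not apply them to a cluster. The function name `isolation_options` and the example address are hypothetical. The numbered `das.isolationaddress0`, `das.isolationaddress1`, ... form and the `das.usedefaultisolationaddress` option are not named in this section and should be confirmed against the vSphere Availability documentation for your release.

```python
from typing import Dict, List


def isolation_options(addresses: List[str]) -> Dict[str, str]:
    """Build vSphere HA advanced options that replace the default isolation
    address (the management default gateway) with addresses on the vSAN network."""
    if not addresses:
        raise ValueError("at least one isolation address is required")
    # Stop HA from pinging the management network default gateway
    options = {"das.usedefaultisolationaddress": "false"}
    # One numbered option per additional isolation address
    for index, address in enumerate(addresses):
        options[f"das.isolationaddress{index}"] = address
    return options


# Hypothetical pingable address on the vSAN network
options = isolation_options(["172.16.10.1"])
for name, value in options.items():
    print(f"{name} = {value}")
```

Because both hosts in a same-location 2 Node cluster share one vSAN network, a single address in the list is enough for this sketch.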

Cluster Settings - DRS

Using a vSAN Witness Appliance

VMware vSAN 2 Node Clusters support the use of a vSAN Witness Appliance as the vSAN Witness host. This is available as an OVA (Open Virtual Appliance) from VMware. However, this vSAN Witness Appliance needs to reside on a physical ESXi host, which requires some special networking configuration.

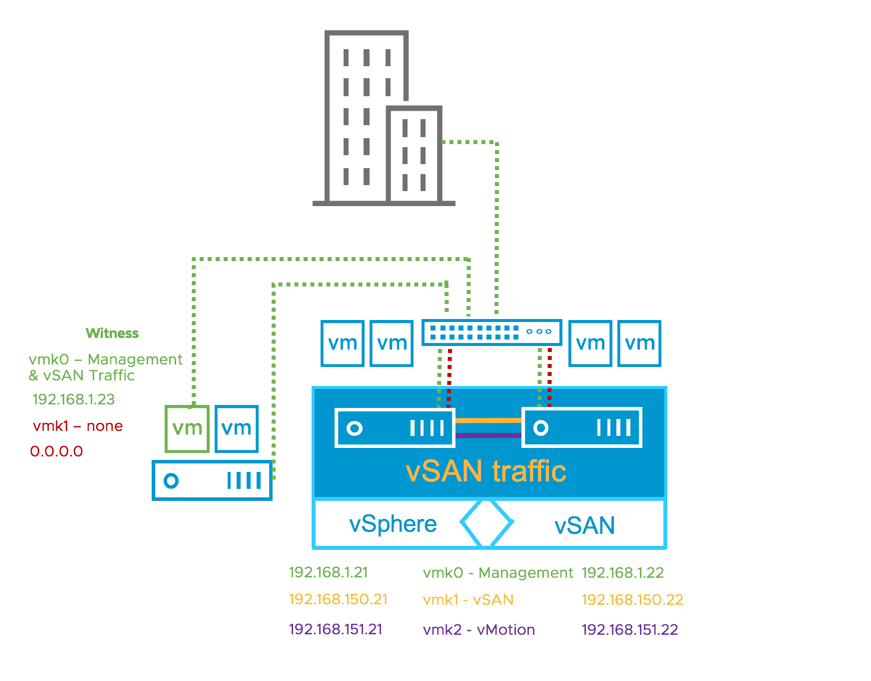

Setup Step 1: Deploy the vSAN Witness Appliance

The vSAN Witness Appliance must be deployed on different infrastructure than the 2 Node vSAN Cluster itself. This step will cover deploying the vSAN Witness Appliance to a different cluster.

Note: Deploying it on a dedicated server located in the same chassis, as is the case in an HPE Synergy frame or a Dell XR4000, is considered supported.

The first step is to download and deploy the vSAN Witness Appliance, or deploy it directly via a URL, as shown below. In this example it has been downloaded:

Select a Datacenter for the vSAN Witness Appliance to be deployed to and provide a name (Witness1 or something similar).

Select a cluster for the vSAN Witness Appliance to reside on.

Review the details of the deployment and press next to proceed.

The license must be accepted to proceed.

At this point a decision needs to be made regarding the expected size of the 2 Node vSAN Cluster configuration. There are three options offered. If you expect the number of VMs deployed on the vSAN 2 Node Cluster to be 10 or fewer, select the Tiny configuration. If you expect to deploy more than 10 VMs, but fewer than 500 VMs, then the Normal (default option) should be chosen. On selecting a particular configuration, the resources consumed by the appliance are displayed in the wizard (CPU, Memory and Disk):

Select a datastore for the vSAN Witness Appliance. This will be one of the datastores available to the underlying physical host. You should consider whether the vSAN Witness Appliance is deployed as thick or thin, as thin VMs may grow over time, so ensure there is enough capacity on the selected datastore.

Select a network for the Management Network and for the Witness (or Secondary) Network.

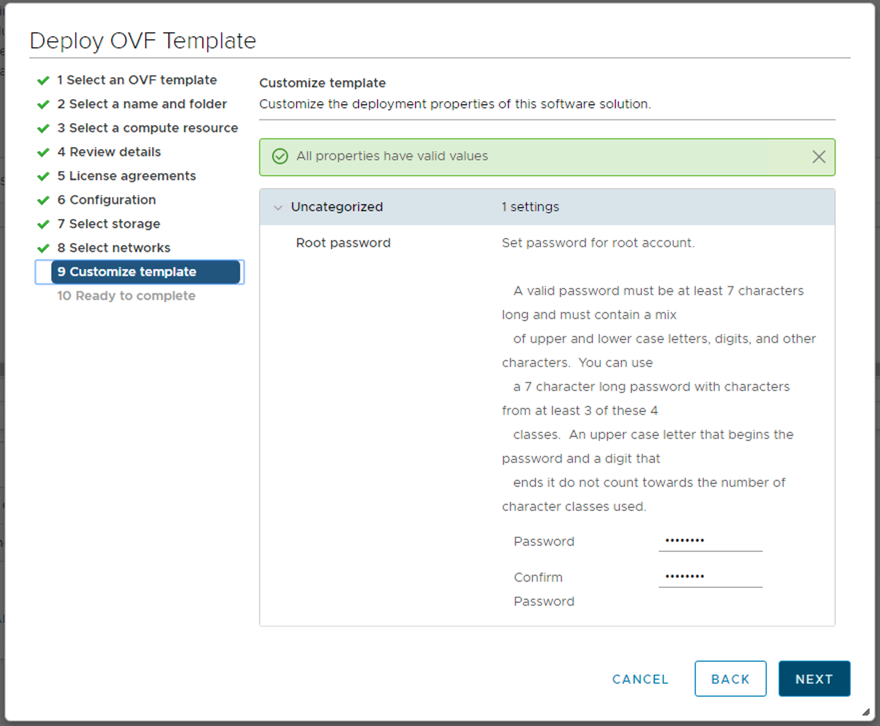

Give a root password for the vSAN Witness Appliance:

At this point, the vSAN Witness Appliance is ready to be deployed. It will need to be powered on manually via the vSphere web client UI later:

Once the vSAN Witness Appliance is deployed and powered on, select it in the vSphere web client UI and begin the next steps in the configuration process.

Setup Step 2: vSAN Witness Appliance Management

Once the vSAN Witness Appliance has been deployed, select it in the vSphere web client UI and open the console.

The console of the vSAN Witness Appliance should be accessed to add the correct networking information, such as IP address and DNS, for the management network.

On launching the console, unless you have a DHCP server on the management network, it is very likely that the landing page of the DCUI will look something similar to the following:

Use the <F2> key to customize the system. The root login and password will need to be provided at this point. This is the root password that was added during the OVA deployment earlier.

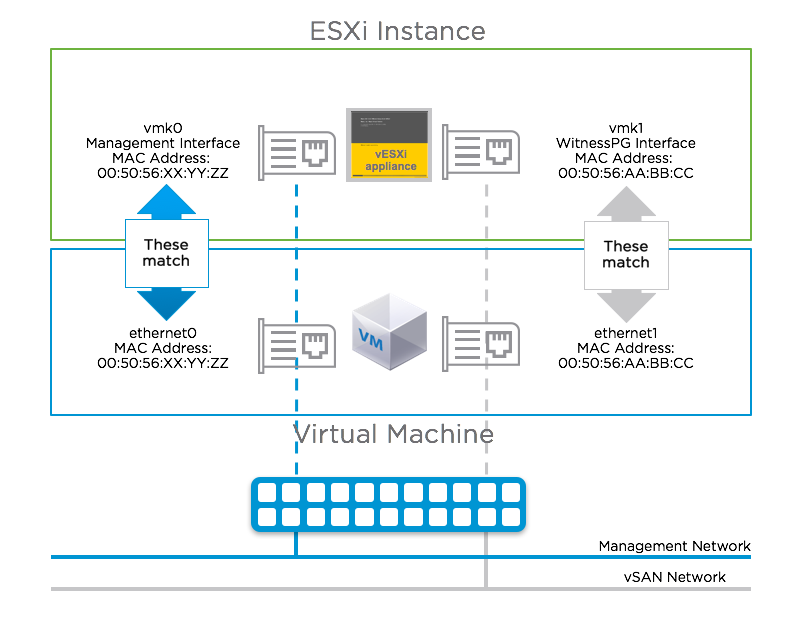

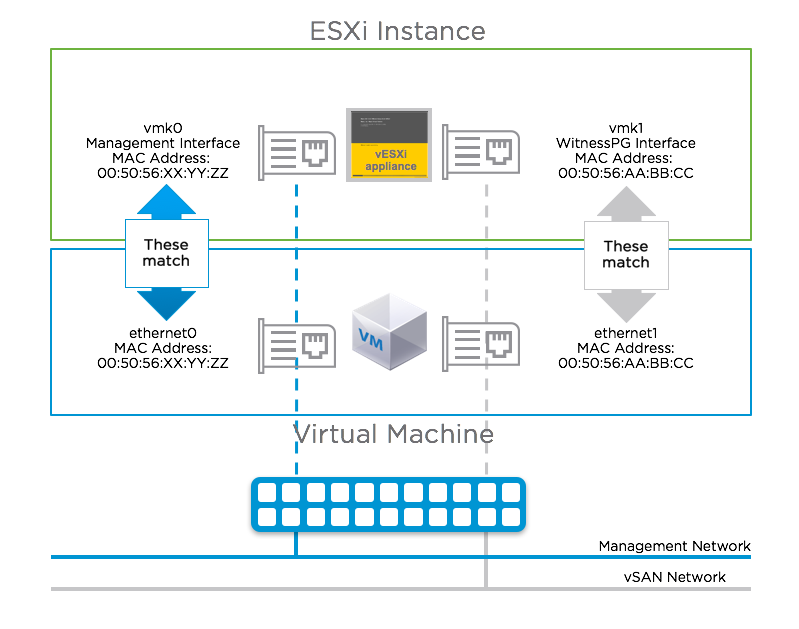

Select the Network Adapters view. There will be two network adapters, each corresponding to the network adapters on the virtual machine. You should note that the MAC address of the network adapters from the DCUI view match the MAC address of the network adapters from the virtual machine view. Because these match, there is no need to use promiscuous mode on the network, as discussed earlier.

Select vmnic0, and if you wish to view further information, select the key <D> to see more details.

Navigate to the IPv4 Configuration section. This will be using DHCP by default. Select the static option as shown below and add the appropriate IP address, subnet mask and default gateway for this vSAN Witness Appliance Management Network.

The next step is to configure DNS. A primary DNS server should be added and an optional alternate DNS server can also be added. The FQDN, fully qualified domain name, of the host should also be added at this point.

One final recommendation is to do a test of the management network. One can also try adding the IP address of the vCenter server at this point just to make sure that it is also reachable.

When all the tests have passed, and the FQDN is resolvable, administrators can move on to the next step of the configuration, which is adding the vSAN Witness Appliance ESXi instance to the vCenter server.

Setup Step 3: Add Witness to vCenter Server

Adding the vSAN Witness Appliance ESXi instance to vCenter server is no different from adding physical ESXi hosts. However, there are some interesting items to highlight during the process. The first step is to provide the name of the Witness. In this example, vCenter server is managing multiple data centers, so we are adding the host to the witness data center.

Provide the appropriate credentials. In this example, the root user and password.

Acknowledge the certificate warning:

There should be no virtual machines on the vSAN Witness Appliance. Note: It can never run VMs in a vSAN 2 Node Cluster configuration. Note also the model: VMware Virtual Platform. Note also that the build number may differ from the one shown here.

The vSAN Witness Appliance also comes with its own license. You do not need to consume vSphere licenses for the witness appliance:

Lockdown mode is deactivated by default. Depending on the policies in use at a customer’s site, the administrator may choose a different mode to the default:

Click Finish when ready to complete the addition of the Witness to the vCenter server:

One final item of note is the appearance of the vSAN Witness Appliance ESXi instance in the vCenter inventory. It has a light blue shading, to differentiate it from standard ESXi hosts. It might be a little difficult to see in the screen shot below, but should be clearly visible in your infrastructure. (Note: In vSAN 6.1 and 6.2 deployments, the “No datastores have been configured” message is because the nested ESXi host has no VMFS datastore. This can be ignored.)

One final recommendation is to verify that the settings of the vSAN Witness Appliance match the Tiny, Normal or Large configuration selected during deployment. For example, the Normal deployment should have a 12GB HDD for boot in vSAN 6.5 (8GB for vSAN 6.1/6.2), a 10GB Flash that will be configured later on as a cache device and another 350GB HDD that will also be configured later on as a capacity device.

Once confirmed, you can proceed to the next step of configuring the vSAN network for the vSAN Witness Appliance.

Setup Step 4: Config vSAN Witness Host Networking

The next step is to configure the vSAN network correctly on the vSAN Witness Appliance. When the Witness is selected, navigate to Configure > Networking > Virtual switches as shown below.

The Witness has a portgroup pre-defined called witnessPg. Here the VMkernel port to be used for vSAN traffic is visible. If there is no DHCP server on the vSAN network (which is likely), then the VMkernel adapter will not have a valid IP address. Select VMkernel adapters > vmk1 to view the properties of the witnessPg. Validate that "vSAN" is an enabled service as depicted below.

* Engineering note: A few things to consider when configuring vSAN traffic on the vSAN Witness Appliance.

- The default configuration has vmk0 configured for Management Traffic and vmk1 configured for vSAN Traffic.

- The vmk1 interface cannot be configured with an IP address on the same range as that of vmk0. This is because Management traffic and vSAN traffic use the default TCP/IP stack. If both vmk0 and vmk1 are configured on the same range, a multihoming condition will occur and vSAN traffic will flow from vmk0, rather than vmk1. Health Check reporting will fail because vmk0 does not have vSAN enabled. The multihoming issue is detailed in KB 2010877 ( https://kb.vmware.com/kb/2010877 ).

- In the case of 2 Node vSAN, if it is desired to have vSAN traffic on the same subnet as vmk0 (or simply to use a single interface for simplicity), it is recommended to deactivate vSAN services on vmk1 (WitnessPg) and enable vSAN services on vmk0 (Management). This is a perfectly valid and supported configuration.
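The rule in the notes above can be summarized as: if vmk0 and vmk1 would land in the same IP range, carry vSAN traffic on vmk0; otherwise keep it on vmk1. A minimal Python sketch of that decision follows. The function names are hypothetical, the addresses reuse examples from this guide, and the /24 prefix lengths are assumptions.

```python
import ipaddress


def same_range(vmk0: str, vmk1: str) -> bool:
    """True when the two interfaces (given as 'address/prefix') are on
    overlapping IP networks, which is the multihoming condition."""
    net0 = ipaddress.ip_interface(vmk0).network
    net1 = ipaddress.ip_interface(vmk1).network
    return net0.overlaps(net1)


def vsan_tag_target(vmk0: str, vmk1: str) -> str:
    """Return the witness VMkernel interface that should carry vSAN traffic."""
    # Same range: tag vmk0 (Management) and untag vmk1 (WitnessPg)
    return "vmk0" if same_range(vmk0, vmk1) else "vmk1"


# Default layout: Management and WitnessPg on different networks
print(vsan_tag_target("192.168.109.23/24", "192.168.110.23/24"))
# Both interfaces on the same network: multihoming would occur
print(vsan_tag_target("192.168.1.23/24", "192.168.1.24/24"))
```

Using an overlap test rather than an equality test also catches the case where the two interfaces are configured with different prefix lengths inside the same address range.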

Configure the network address

Select the witnessPg and edit the properties by selecting the pencil icon.

If vSAN is not an enabled service, select the witnessPg portgroup, and then select the option to edit it. Tag the VMkernel port for vSAN traffic, as shown below:

Next, ensure the MTU is set to the same value as the vSAN Data Node hosts’ vSAN VMkernel interface. *

In the IPV4 settings, a default IP address has been allocated. Modify it for the vSAN traffic network.

Once the witnessPg VMkernel interface address has been configured, click OK.

Static routes are still required by the witnessPg VMkernel interface (vmk1) as in vSAN 6.1 or 6.2. The "Override default gateway for this adapter" setting is not supported for the witness VMkernel interface (vmk1). Static routing is covered in detail in the Validate Networking section.

* Note - Mixed MTU for witness traffic separation was introduced in vSAN 6.7 Update 1. vSAN now supports different MTU settings for the witness traffic VMkernel interface and the vSAN data network VMkernel interface. This capability provides increased network flexibility for stretched clusters and 2-node clusters that utilize witness traffic separation.

Networking & Promiscuous mode

The vSAN Witness Appliance contains two network adapters that are connected to separate vSphere Standard Switches (VSS).

The vSAN Witness Appliance Management VMkernel is attached to one VSS, and the WitnessPG is attached to the other VSS. The Management VMkernel (vmk0) is used to communicate with the vCenter Server for appliance management. The WitnessPG VMkernel interface (vmk1) is used to communicate with the vSAN Network. This is the recommended configuration. These network adapters can be connected to different, or the same, networks. As long as they are fully routable to each other, it’s supported, separate subnets or otherwise.

The Management VMkernel interface could be tagged to include vSAN Network traffic as well as Management traffic. In this case, vmk0 would require connectivity to both vCenter Server and the vSAN Network.

A Note About Promiscuous Mode

In many nested ESXi environments, there is a recommendation to enable promiscuous mode to allow all Ethernet frames to pass to all VMs that are attached to the port group, even if it is not intended for that particular VM. The reason promiscuous mode is enabled in these environments is to prevent a virtual switch from dropping packets for (nested) vmnics that it does not know about on nested ESXi hosts. Nested ESXi deployments are not supported by VMware other than the vSAN Witness Appliance.

The vSAN Witness Appliance is essentially a nested ESXi installation tailored for use with vSAN Stretched Clusters.

The MAC addresses of the VMkernel interfaces vmk0 & vmk1 are configured to match the MAC addresses of the vSAN Witness Appliance host's NICs, vmnic0 and vmnic1. Because of this, packets destined for either the Management VMkernel interface (vmk0) or the WitnessPG VMkernel interface, are not dropped.

Because of this, promiscuous mode is not required when using a vSAN Witness Appliance.

Configuring 2 Node vSAN

Pre-Requisites

To properly configure 2 Node vSAN, it is important to have a few pre-requisites in place.

- Determine whether Witness Traffic Separation will be used or not.

- Determine whether the vSAN Witness Host will be a vSAN Witness Appliance or a Physical vSphere Host

- Determine where the vSAN Witness Appliance will be hosted (if used)

- Ensure connectivity between the 2 Node cluster and the vSAN Witness Host

- If using Witness Traffic Separation - A VMkernel interface other than the vSAN Network will be required

- If not using Witness Traffic Separation - The vSAN Network will be required to have connectivity to the vSAN Witness Host

- Configure appropriate networking

- For vCenter

- vCenter must be able to communicate with the Management interface on each vSAN Host

- vCenter must be able to communicate with the Management interface for the vSAN Witness Host

- For vSAN Hosts

- The vSAN Host Management interface must be able to communicate with vCenter

- vSAN Hosts must be able to communicate with each other

- vSAN Hosts must be able to communicate with the vSAN Witness Host vSAN Tagged interface

- For vSAN Witness Host

- The vSAN Witness Host Management interface must be able to communicate with vCenter

- The vSAN Witness Host vSAN Tagged interface must be able to communicate with the vSAN Nodes

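The connectivity requirements above can be tracked as a simple checklist before the cluster is configured. The following is a minimal sketch that assumes Witness Traffic Separation is in use; the endpoint labels (such as `host1-witness-traffic`) and the function `missing_links` are hypothetical names chosen for illustration, not vSphere identifiers.

```python
# Required connectivity from the prerequisites, as (endpoint, endpoint) pairs.
# The labels are hypothetical and assume Witness Traffic Separation is used.
REQUIRED = [
    ("vcenter", "host1-mgmt"),
    ("vcenter", "host2-mgmt"),
    ("vcenter", "witness-mgmt"),
    ("host1-vsan", "host2-vsan"),
    ("host1-witness-traffic", "witness-vsan"),
    ("host2-witness-traffic", "witness-vsan"),
]


def missing_links(verified):
    """Return the required pairs that have not been verified yet.

    Direction is ignored: ("a", "b") also satisfies ("b", "a").
    """
    done = {frozenset(pair) for pair in verified}
    return [pair for pair in REQUIRED if frozenset(pair) not in done]


# Example: only management and back-end connectivity checked so far.
verified = [
    ("host1-mgmt", "vcenter"),
    ("host2-mgmt", "vcenter"),
    ("host1-vsan", "host2-vsan"),
]
for src, dst in missing_links(verified):
    print(f"not verified: {src} <-> {dst}")
```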

VMkernel Interfaces

VMkernel interfaces provide connectivity for different inter-node communication between vSphere hosts. IP-based storage protocols typically have dedicated VMkernel interfaces on isolated networks. It is considered a best practice and VMware recommendation to isolate storage traffic. vSAN may only use VMkernel interfaces that are appropriately tagged for vSAN traffic types.

In most vSAN configurations, each vSAN tagged VMkernel interface must be able to communicate with each and every other vSAN tagged VMkernel interface. This is true for normal vSAN clusters as well as 2 Node vSAN Clusters up to version 6.2 using vSphere 6.0 Update 2.

Witness Traffic Separation was publicly introduced with vSAN 6.5, but is also included in vSAN 6.2 as of vSphere 6.0 Update 3 (vCenter and vSphere versions). Witness Traffic Separation removes the requirement for traffic to and from the vSAN Witness Host to be available from the vSAN data network. A "front-end facing" VMkernel interface may be used, allowing the "back-end" of 2 Node vSAN to use VMkernel ports that are directly connected across hosts. The back-end vSAN network can still be connected to a switch if desired.

Using Witness Traffic Separation is the preferred method for configuring 2 Node vSAN as of vSphere 6.0 Update 3, whether using direct connect or using a switch for the back-end vSAN data network. Because of this, the previously required method will not be addressed.

Configuring the back-end VMkernel ports may be accomplished using Configuration Assist, but the Witness Traffic Separation ports must still be configured manually.

It is also recommended to configure 2 Node vSAN networking before configuring the 2 Node vSAN cluster.

VMkernel Interfaces - Witness Traffic

Using Witness Traffic Separation for vSAN Witness Traffic

Since the public introduction of Witness Traffic Separation in vSphere 6.5, this has become the preferred method of allowing communication with the vSAN Witness Host.

As previously discussed, Witness Traffic Separation separates the networking requirements for vSAN data and vSAN metadata communication to the vSAN Witness Host. Communication to the vSAN Witness Host is performed through a different VMkernel interface than the interface used to communicate between vSAN Data nodes.

Data nodes can use a direct connection between nodes or may be connected to a traditional switch, which could be part of an isolated data network.

Communication with the vSAN Witness Host is configured separately from the back-end vSAN Data network.

Creating the vSAN Witness Traffic VMkernel interfaces can be accomplished in the following fashion:

- Create a new VMkernel port for use as a Witness Traffic interface

- This will typically be on the same virtual switch as the Management interface (vSwitch0)

- Give the VMkernel interface a descriptive label and be certain to select the appropriate VLAN if necessary.

Do not enable any services on the VMkernel interface.

- Enter the appropriate network settings

*Note: the Default gateway may not be set. vSAN uses the same TCP/IP stack as the Management interface and may not use an alternate gateway. It is important to also ensure that this VMkernel interface is NOT on the same TCP/IP network as the Management interface.

- Select Finish to complete creating the VMkernel interface.

- Reviewing the VMkernel adapters, the newly created VMkernel interface does not have the proper tagging in place.

- In the HTML5 client (vSphere 6.7):

- From the ESXi console:

- To enable vSAN Witness Traffic on the VMkernel interface in the illustration (vmk1), use one of the following methods:

- Enable SSH on the vSAN Host and run the following command:

esxcli vsan network ip add -i <VMkernel for Witness Traffic> -T=witness

Example: esxcli vsan network ip add -i vmk1 -T=witness

- Use the vSphere CLI to connect to the vSAN Host and run the following command:

esxcli vsan network ip add -i <VMkernel for Witness Traffic> -T=witness

Example: esxcli vsan network ip add -i vmk1 -T=witness

- Run the following single line PowerCLI script against the vSAN Host:

Connect-VIServer <vSAN Host>; $EsxCli = Get-EsxCli -V2; $VMkArgs = $EsxCli.vsan.network.ip.add.CreateArgs(); $VMkArgs.interfacename = <VMkernel for Witness Traffic>; $VMkArgs.traffictype = "witness"; $EsxCli.vsan.network.ip.add.Invoke($VMkArgs); Disconnect-VIServer -Confirm:$false

Example: Connect-VIServer "host1.demo.local"; $EsxCli = Get-EsxCli -V2; $VMkArgs = $EsxCli.vsan.network.ip.add.CreateArgs(); $VMkArgs.interfacename = "vmk1"; $VMkArgs.traffictype = "witness"; $EsxCli.vsan.network.ip.add.Invoke($VMkArgs); Disconnect-VIServer -Confirm:$false

- Reviewing the VMkernel adapters again, the newly tagged VMkernel interface does have the proper tagging in place.

- In the HTML5 client (vSphere 6.7):

- From the ESXi console:

- The "Witness Tagged" VMkernel interface will need to have connectivity to the "vSAN Traffic" tagged interface on the vSAN Witness Host. In cases where this connectivity is over Layer 3, static routes must be added. This is because vSAN uses the default TCP/IP stack and will attempt to use the Management VMkernel's default gateway.

To add a static route on the vSAN data nodes to the vSAN Witness Host's "vSAN Tagged" VMkernel interface, use one of the following methods:

- Enable SSH on the vSAN Host, log in, and run the following command:

esxcfg-route -a <target address/prefixlength> <gateway to use>

Example: esxcfg-route -a 192.168.110.0/24 192.168.15.1

- Use the vSphere CLI to connect to the vSAN Host and run the following command:

esxcfg-route -a <target address/prefixlength> <gateway to use>

Example: esxcfg-route -a 192.168.110.0/24 192.168.15.1

- Run the following single line PowerCLI script against the vSAN Host:

Connect-VIServer <vSAN Host>; New-VMHostRoute -Destination <target address> -PrefixLength <prefixlength> -Gateway <gateway to use> -Confirm:$false; Disconnect-VIServer -Confirm:$false

Example: Connect-VIServer "host1.demo.local"; New-VMHostRoute -Destination "192.168.110.0" -PrefixLength "24" -Gateway "192.168.15.1" -Confirm:$false; Disconnect-VIServer -Confirm:$false

- Confirm the Witness Tagged VMkernel interface can ping the vSAN Witness Host vSAN Traffic tagged VMkernel interface:

- Enable SSH on the vSAN Host, log in, and run the following command:

vmkping -I <host Witness Tagged VMkernel interface name> <vSAN Witness Host vSAN Tagged interface IP address>

Example: vmkping -I vmk1 192.168.110.23

- Run the following single line PowerCLI script against the vSAN Host:

Connect-VIServer <vSAN Host> -user root; $EsxCli = Get-EsxCli -V2; $VMkArgs = $EsxCli.network.diag.ping.CreateArgs(); $VMkArgs.interface = <Witness Tagged interface name>; $VMkArgs.host = <vSAN Witness Host vSAN Tagged interface IP address>; $EsxCli.network.diag.ping.Invoke($VMkArgs).Summary; Disconnect-VIServer -Confirm:$false

Example: Connect-VIServer "host1.demo.local" -user root; $EsxCli = Get-EsxCli -V2; $VMkArgs = $EsxCli.network.diag.ping.CreateArgs(); $VMkArgs.interface = "vmk1"; $VMkArgs.host = "192.168.110.23"; $EsxCli.network.diag.ping.Invoke($VMkArgs).Summary; Disconnect-VIServer -Confirm:$false

Once Witness Traffic Separation networking has been configured on the first host, repeat the process for the second host.
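Because the same steps are repeated on each data node, it can help to generate the command sequence per host and review it before running anything. The sketch below only builds strings from the commands shown above and executes nothing. The function `witness_commands`, the host addresses, and the name `host2.demo.local` are hypothetical, and the sketch assumes the witness network uses the same prefix length as the local Witness Traffic interface.

```python
import ipaddress


def witness_commands(vmk, vmk_cidr, witness_ip, gateway=None):
    """Build the ESXi shell commands for one data node, in order.

    vmk:        Witness Traffic VMkernel interface, e.g. "vmk1"
    vmk_cidr:   its address with prefix length, e.g. "192.168.15.11/24"
    witness_ip: vSAN tagged address on the vSAN Witness Host
    gateway:    next hop toward the witness network (Layer 3 only)
    """
    local_net = ipaddress.ip_interface(vmk_cidr).network
    target = ipaddress.ip_address(witness_ip)

    # Step 1: tag the interface for witness traffic.
    cmds = [f"esxcli vsan network ip add -i {vmk} -T=witness"]

    # Step 2: a static route is only needed when the witness is on another network.
    if target not in local_net:
        if gateway is None:
            raise ValueError("witness is on another network; a gateway is required")
        # Assumption: the witness network has the same prefix length as vmk_cidr.
        witness_net = ipaddress.ip_network(
            f"{witness_ip}/{local_net.prefixlen}", strict=False
        )
        cmds.append(f"esxcfg-route -a {witness_net} {gateway}")

    # Step 3: confirm connectivity from the tagged interface.
    cmds.append(f"vmkping -I {vmk} {witness_ip}")
    return cmds


# Hypothetical addresses for the two data nodes.
hosts = {"host1.demo.local": "192.168.15.11/24", "host2.demo.local": "192.168.15.12/24"}
for name, cidr in hosts.items():
    print(name)
    for cmd in witness_commands("vmk1", cidr, "192.168.110.23", "192.168.15.1"):
        print("  " + cmd)
```

When the witness address falls inside the local network of the Witness Traffic interface, the route step is omitted and only the tagging and ping commands are produced.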

*Important things to consider/remember:

- The VMkernel interface for Witness Traffic must not be on the same network as the Management interface (vmk0). If Witness Traffic must be run on the same network as vmk0, simply skip steps 1-6 and tag vmk0 in step 7 instead.

- Witness Traffic from data nodes to the vSAN Witness Host contains no virtual machine data, but rather vSAN metadata, such as vSAN component placement, vSAN node ownership, etc. Tagging vmk0 for Witness Traffic is fully supported.
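The rule in the first point can be checked ahead of time by comparing the planned addresses. This is a minimal sketch using Python's standard `ipaddress` module; the function `witness_tag_target`, the default interface name `vmk1`, and the addresses are hypothetical and stand in for whatever the environment actually uses.

```python
import ipaddress


def witness_tag_target(mgmt_cidr, candidate_cidr, candidate="vmk1"):
    """Return the interface to tag for Witness Traffic.

    If the candidate interface would share a network with the Management
    interface, fall back to tagging vmk0 instead of creating it.
    """
    mgmt_net = ipaddress.ip_interface(mgmt_cidr).network
    candidate_net = ipaddress.ip_interface(candidate_cidr).network
    return "vmk0" if mgmt_net.overlaps(candidate_net) else candidate


# Different networks: a dedicated Witness Traffic interface is fine.
print(witness_tag_target("192.168.109.11/24", "192.168.15.11/24"))
# Same network as Management: tag vmk0 instead.
print(witness_tag_target("192.168.109.11/24", "192.168.109.50/24"))
```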

VMkernel Interfaces - vSAN Traffic - VSS

The back-end vSAN Data network is configured in the same fashion for 2 Node vSAN as any other vSAN network.

vSAN back-end networking may use either a vSphere Standard Switch or vSphere Distributed Switch. This document will only focus on the setup process and not the feature set differences between each of these.

Using a vSphere Standard Switch

Creating the vSAN Traffic VMkernel interfaces can be accomplished in the following fashion:

- Create a new VMkernel port for use as a vSAN Traffic interface on the first host.

- This will typically be on a different virtual switch from the Management interface (vSwitch0).

- When creating a new vSphere Standard Switch, adapters will have to be assigned. Click the plus to assign adapters to this vSphere Standard Switch.

- Select one or more adapters that will be used for vSAN Traffic and select OK.

- When all adapters have been added, select Next.

- Give the VMkernel interface a descriptive label and be certain to select the appropriate VLAN if necessary.

Enable vSAN services on the VMkernel interface.

- Enter the appropriate network settings

*Note: the Default gateway may not be set. vSAN uses the same TCP/IP stack as the Management interface and may not use an alternate gateway. It is important to also ensure that this VMkernel interface is NOT on the same TCP/IP network as the Management interface.

- Select Finish to complete creating the VMkernel interface.

- Reviewing the VMkernel adapters, the newly created VMkernel interface has the proper tagging in place, because vSAN services were enabled when it was created.

- In the HTML5 client (vSphere 6.7):

- From the ESXi console:

Notice vmk2 has vSAN Traffic Tagged and vmk1 has Witness Traffic Tagged.

- Repeat this process for the second host.
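The tagging check from the ESXi console can also be scripted against captured command output. The sketch below assumes the output was collected with `esxcli vsan network list` and that each interface block contains `VmkNic Name` and `Traffic Type` fields; the sample text is abbreviated and illustrative rather than a real capture, and the function name `traffic_types` is hypothetical.

```python
def traffic_types(esxcli_output):
    """Map each VMkernel interface name to its vSAN traffic type."""
    tags = {}
    current = None
    for line in esxcli_output.splitlines():
        key, _, value = line.strip().partition(":")
        if key == "VmkNic Name":
            current = value.strip()
        elif key == "Traffic Type" and current is not None:
            tags[current] = value.strip()
    return tags


# Abbreviated, illustrative output; real output lists more fields per interface.
sample = """\
Interface
   VmkNic Name: vmk2
   Traffic Type: vsan

Interface
   VmkNic Name: vmk1
   Traffic Type: witness
"""

tags = traffic_types(sample)
print(tags)
```

A result in which vmk2 maps to `vsan` and vmk1 maps to `witness` corresponds to the tagging described in the steps above.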

VMkernel Interfaces - vSAN Traffic - VDS

Using a vSphere Distributed Switch

Creating the vSAN Traffic VMkernel interfaces can be accomplished in the following fashion:

- Create a new VMkernel port for use as a vSAN Traffic interface on the first host.

- When using a vSphere Distributed Switch, select an existing network that has been previously created.

- Select browse and choose the vSAN Port Group on the VDS and select OK.

- Select Next.

- Select the appropriate VLAN if necessary and check vSAN in the Available services. This is "tagging" this VMkernel interface for vSAN Traffic.

- Enter the appropriate network settings

*Note: the Default gateway may not be set. vSAN uses the same TCP/IP stack as the Management interface and may not use an alternate gateway. It is important to also ensure that this VMkernel interface is NOT on the same TCP/IP network as the Management interface.

- Select Finish to complete creating the VMkernel interface.

- Reviewing the VMkernel adapters, the newly created VMkernel interface has the proper tagging in place, because vSAN was checked in the Available services when it was created.

- In the HTML5 client (vSphere 6.7):

- From the ESXi console:

Notice vmk2 has vSAN Traffic Tagged and vmk1 has Witness Traffic Tagged.

- Repeat this process for the vMotion Network.

- Repeat this process for the vSAN and vMotion Network for the second host.

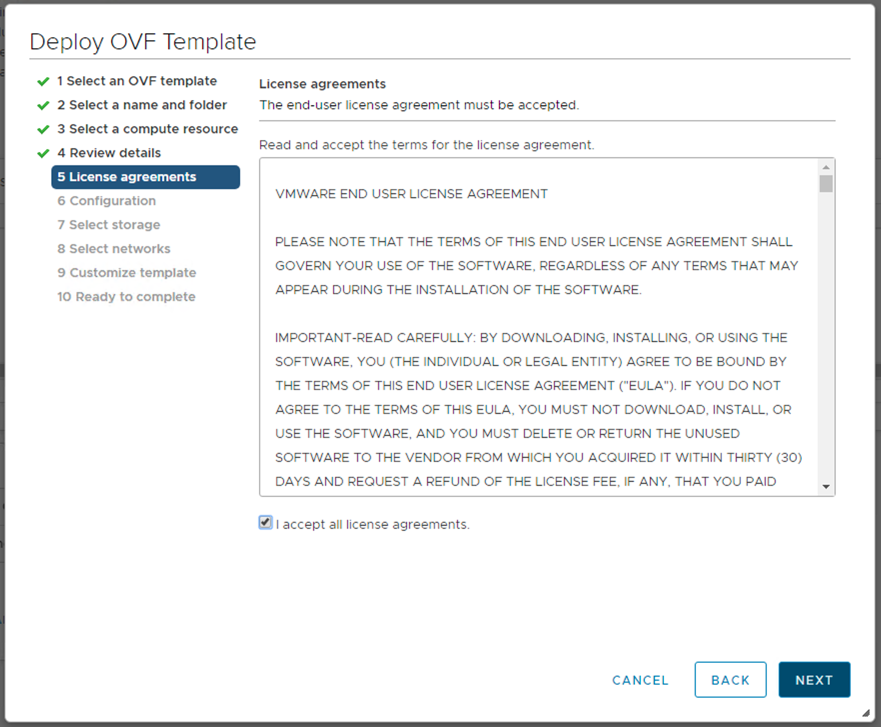

Creating a New 2 Node vSAN Cluster

Upgrading an older 2 Node vSAN Cluster

Upgrading a vSAN 2 Node Cluster is very easy. It is important though to follow a sequence of steps to ensure the upgrade goes smoothly.

Upgrading Step 1: Upgrade vCenter Server

As with any vSphere upgrade, it is typically recommended to upgrade vCenter Server first. While vCenter Server for Windows installations are supported, the steps below describe the process when using the vCenter Server Appliance (VCSA).

Log in to the VAMI and select Update from the Navigator pane to begin the upgrade process.

*Refer to the documentation for vCenter Server for Windows to properly upgrade to a newer release of vCenter Server.

After the upgrade has completed, the VCSA will have to be rebooted for the updates to be applied. It is important to remember that vSAN Health Check will not be available until after hosts have been upgraded.

Upgrading Step 2: Upgrade Each Host

Upgrading each host is the next task to be completed. There are a few considerations to remember when performing these steps.